Scientists are tackling the challenge of equipping robots with effective visuomotor skills despite limited data, a common hurdle in real-world robotic learning. Maanping Shao, Feihong Zhang, and Gu Zhang, all from Tsinghua University, alongside Baiye Cheng from Huazhong University of Science and Technology, Zhengrong Xue from Tsinghua University, and Huazhe Xu from the Institute for Interdisciplinary Information Sciences, Tsinghua University, and Shanghai Qi Zhi Institute/Shanghai Artificial Intelligence Laboratory, present X-Distill, an approach that bridges the gap between powerful but data-hungry Vision Transformers (ViTs) and compact, easily optimised Convolutional Neural Networks (CNNs). Their research introduces a cross-architecture knowledge distillation technique that transfers visual understanding from a large, pre-trained model to a smaller network, which is then jointly trained with a policy for robotic manipulation tasks. According to the reported results, the method outperforms approaches using from-scratch or fine-tuned encoders, even those leveraging 3D data or larger vision-language models, offering a significant step towards data-efficient and robust robotic control.

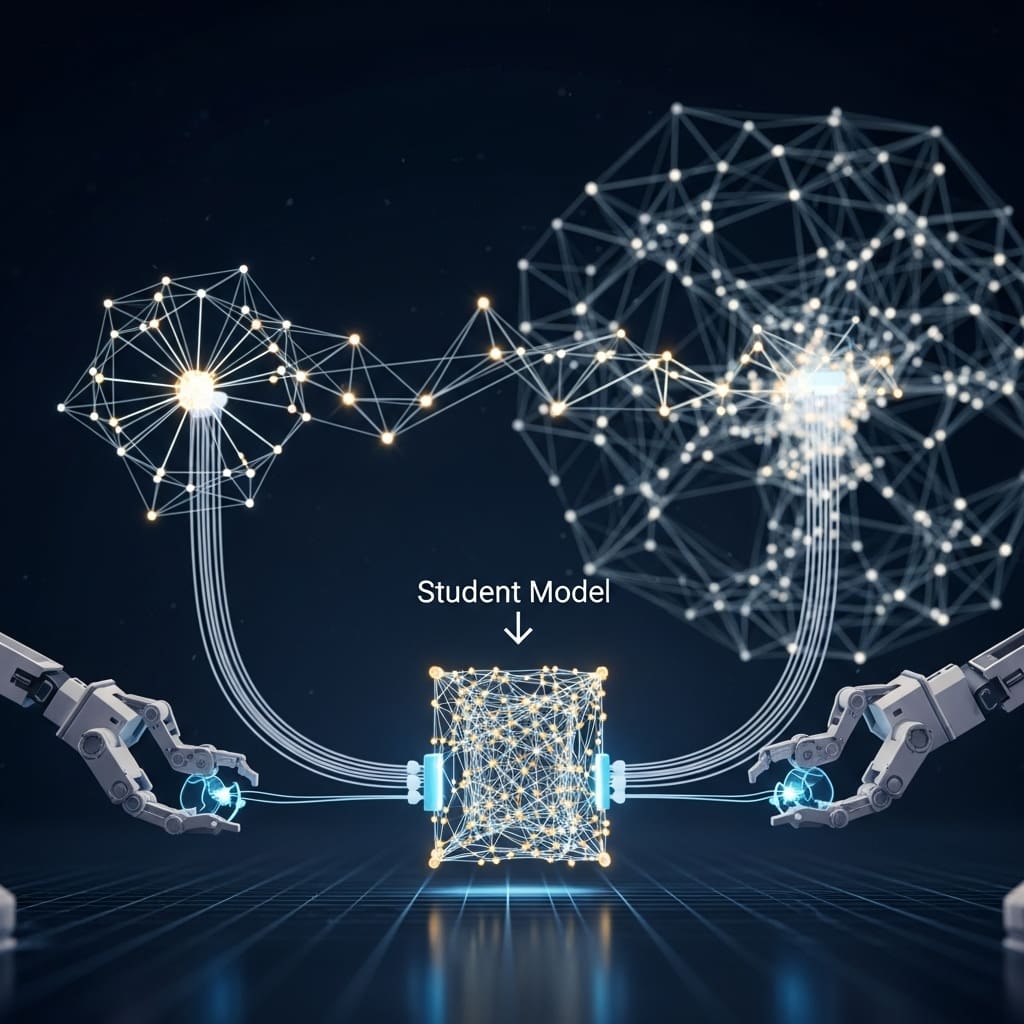

The research team transferred rich visual representations from a frozen DINOv2 teacher, a large ViT, to a compact ResNet-18 student on the ImageNet dataset, effectively imbuing the CNN with powerful visual priors. This distilled encoder is then jointly fine-tuned with a diffusion policy head on specific manipulation tasks, creating a robust and data-efficient visuomotor system.
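The distillation stage described above can be sketched in a few lines of PyTorch. This is a minimal illustration under stated assumptions, not the authors' implementation: `TinyTeacher` and `TinyStudent` are hypothetical stand-ins for DINOv2 and ResNet-18, random tensors stand in for ImageNet images, and the MSE loss (which the article reports is computed between feature maps) is applied here to pooled feature vectors for brevity.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

class TinyTeacher(nn.Module):
    """Hypothetical stand-in for the frozen ViT teacher (not DINOv2)."""
    def __init__(self, dim=64):
        super().__init__()
        self.net = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, dim))

    def forward(self, x):
        return self.net(x)

class TinyStudent(nn.Module):
    """Hypothetical stand-in for the compact CNN student (not ResNet-18)."""
    def __init__(self, dim=64):
        super().__init__()
        self.conv = nn.Sequential(
            nn.Conv2d(3, 16, 3, stride=2, padding=1), nn.ReLU(),
            nn.Conv2d(16, 32, 3, stride=2, padding=1), nn.ReLU(),
            nn.AdaptiveAvgPool2d(1), nn.Flatten(),
        )
        # Projection so student features match the teacher's feature width.
        self.proj = nn.Linear(32, dim)

    def forward(self, x):
        return self.proj(self.conv(x))

# The teacher is frozen: eval mode and no gradients for its parameters.
teacher = TinyTeacher().eval()
for p in teacher.parameters():
    p.requires_grad_(False)

student = TinyStudent()
optimizer = torch.optim.Adam(student.parameters(), lr=3e-3)

# Random tensors standing in for a batch of ImageNet images (assumption).
images = torch.randn(16, 3, 32, 32)

losses = []
for step in range(100):
    with torch.no_grad():
        target = teacher(images)                 # teacher features, no gradient
    loss = F.mse_loss(student(images), target)   # feature-matching MSE loss
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
```

Only the student is updated; the teacher serves purely as a source of regression targets, which is what makes the procedure "offline" with respect to the downstream robot tasks.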

Extensive experiments were conducted on 34 simulated tasks drawn from benchmarks including MetaWorld, Adroit, and DexArt, alongside 5 challenging real-world tasks. Across these, X-Distill consistently outperformed policies that use from-scratch ResNets or fine-tuned DINOv2 encoders. The study reports state-of-the-art performance with only 10 demonstrations per task in simulation and 20-25 demonstrations per task in the real world, highlighting the method's data efficiency. Notably, X-Distill also surpassed 3D encoders relying on privileged point cloud observations and even outperformed models employing much larger Vision-Language Models. The core innovation lies in the offline, cross-architecture knowledge distillation process, which allows the CNN to benefit from the generalization capabilities of the ViT without inheriting its data-intensive requirements.

By performing distillation on the general-purpose ImageNet dataset rather than on robot data, the researchers avoided overfitting to specific robotic scenarios, resulting in a more versatile and adaptable encoder. This distilled encoder, when integrated into a diffusion policy, enables significantly improved performance in data-efficient robotic manipulation, paving the way for more practical and accessible robotic systems. The work establishes a simple yet highly effective distillation strategy for visuomotor learning. Furthermore, a detailed qualitative analysis of the learned representations provides insight into how X-Distill achieves its performance gains over baseline methods. The team’s findings underscore the importance of combining the inductive biases of CNNs with the semantic understanding of pre-trained ViTs, offering a promising direction for future research in data-efficient robotic manipulation.

To bridge the gap between the two architectures, the team performed offline, cross-architecture knowledge distillation, transferring visual representations from a frozen DINOv2 teacher network to a ResNet-18 student network using the ImageNet dataset. Experiments employed a DINOv2 (ViT-L/14) model as the teacher, chosen for its robust visual priors, and a lightweight ResNet-18 as the student, which facilitates efficient policy optimization.

The distillation process used a mean squared error (MSE) loss calculated between the feature maps of the teacher and student networks, transferring learned knowledge across architectures. The distilled encoder was then jointly fine-tuned alongside a diffusion policy head on target manipulation tasks, enabling end-to-end learning. Using the general-purpose ImageNet dataset for distillation, rather than task-specific robot data, is intended to prevent overfitting to specific robotic scenarios and to promote broader applicability. Researchers conducted extensive evaluations on 34 simulated tasks and 5 challenging real-world manipulation tasks, comparing X-Distill against policies that use from-scratch ResNets and fine-tuned DINOv2 encoders.
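The joint fine-tuning step can be illustrated with a heavily simplified PyTorch sketch. Everything here is an assumption made for illustration: the small `encoder` stands in for the distilled ResNet-18, `NoiseHead` is a hypothetical MLP rather than the paper's diffusion policy head, single actions replace action sequences, and the noise schedule (`T`, `betas`) is a generic DDPM-style choice. The point it shows is that one optimizer updates both the encoder and the policy head from a single noise-prediction loss.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

T = 100                                   # number of diffusion steps (assumed)
betas = torch.linspace(1e-4, 0.02, T)     # generic linear noise schedule (assumed)
alphas_cumprod = torch.cumprod(1.0 - betas, dim=0)

# Stand-in for the distilled CNN encoder (hypothetical, not ResNet-18).
encoder = nn.Sequential(
    nn.Conv2d(3, 16, 3, stride=2, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(16, 32),
)

class NoiseHead(nn.Module):
    """Hypothetical noise-prediction head conditioned on image features."""
    def __init__(self, action_dim=4, feat_dim=32):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(action_dim + feat_dim + 1, 64), nn.ReLU(),
            nn.Linear(64, action_dim),
        )

    def forward(self, noisy_action, t, feat):
        t_emb = (t.float() / T).unsqueeze(-1)   # crude scalar timestep embedding
        return self.net(torch.cat([noisy_action, feat, t_emb], dim=-1))

head = NoiseHead()

# Joint fine-tuning: encoder and head parameters share one optimizer.
optimizer = torch.optim.Adam(
    list(encoder.parameters()) + list(head.parameters()), lr=1e-3
)

# Random tensors standing in for demonstration images and actions (assumption).
images = torch.randn(8, 3, 32, 32)
actions = torch.randn(8, 4)

# Forward diffusion: corrupt the actions with noise at a random timestep.
t = torch.randint(0, T, (8,))
noise = torch.randn_like(actions)
a_bar = alphas_cumprod[t].unsqueeze(-1)
noisy_actions = a_bar.sqrt() * actions + (1 - a_bar).sqrt() * noise

# The head predicts the injected noise, conditioned on encoder features.
pred = head(noisy_actions, t, encoder(images))
loss = F.mse_loss(pred, noise)

optimizer.zero_grad()
loss.backward()
# Gradients reach the encoder, so it is fine-tuned together with the head.
encoder_grad_norm = sum(p.grad.norm().item() for p in encoder.parameters())
optimizer.step()
```

Because the encoder is not frozen in this stage, the visual features can adapt to each manipulation task while starting from the priors acquired during distillation.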

The system consistently delivered superior performance, demonstrating the efficacy of the distillation strategy. Notably, X-Distill outperformed 3D encoders relying on privileged point cloud observations and even surpassed larger Vision-Language Models. Across all tasks, it consistently outperformed all 2D vision baselines by a significant margin. Specifically, X-Distill achieved 93.9% on MetaWorld (easy), 88.3% on MetaWorld (medium), 48.0% on MetaWorld (hard), and 88.0% on MetaWorld (very hard), supporting the effectiveness of the distillation strategy in data-scarce environments. Ablation studies further examined the impact of key components, reporting that a DINOv2-S teacher paired with a ResNet-18 student yielded an average success rate of roughly 90%.

The resulting distilled encoder is then fine-tuned alongside a diffusion policy head for manipulation tasks, consistently exceeding the performance of policies utilising from-scratch ResNets or fine-tuned DINOv2 encoders. Extensive testing across 34 simulated and 5 real-world tasks demonstrates X-Distill’s advantage, even when compared to 3D encoders leveraging point cloud data or larger Vision-Language Models.

Analysis reveals that the method learns a semantically meaningful and robust visual representation, differentiating critical states and focusing on relevant visual cues during complex manipulation tasks. The authors acknowledge that their direct feature distillation technique is a limitation, and suggest potential improvements through alignment of intermediate features and distillation from multimodal teachers that incorporate language priors. Future research could also investigate X-Distill’s scalability in data-rich environments and its application to dynamic tasks such as mobile manipulation. This work suggests that a simple, well-founded distillation strategy is a key enabler for data-efficient visuomotor learning, offering a practical pathway for building capable robotic policies with limited data. The findings highlight the value of combining the strengths of different neural network architectures to overcome data scarcity in robotics, and they invite further exploration of cross-architecture knowledge distillation techniques.

👉 More information

🗞 X-Distill: Cross-Architecture Vision Distillation for Visuomotor Learning

🧠 ArXiv: https://arxiv.org/abs/2601.11269