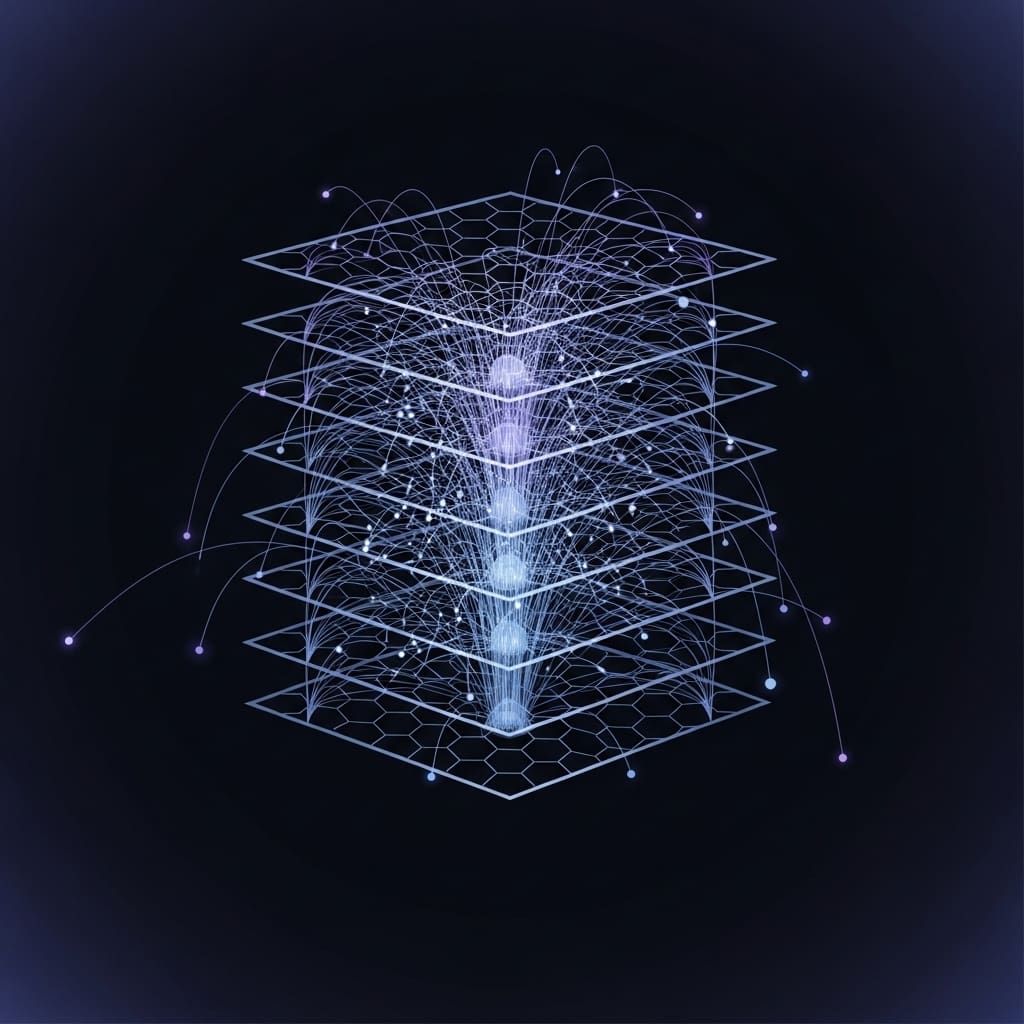

The challenge of training increasingly complex neural networks is driving researchers to explore alternatives to traditional backpropagation, which carries significant memory demands and has limited biological realism. Salar Beigzad from the Software and Engineering University of St. Thomas, and colleagues, address this problem with a new framework, Collaborative Forward-Forward (CFF) learning, which improves the efficiency of forward-only neural networks. Previous forward-forward algorithms optimise each layer in isolation, a key constraint that CFF addresses by letting layers cooperate and share information during training. The approach, which covers both fixed and adaptive collaboration paradigms, improves performance on image recognition tasks, and the authors present inter-layer collaboration as an important step toward more efficient and biologically plausible artificial intelligence systems.

Layer Collaboration Improves Forward-Forward Learning

Scientists developed a Collaborative Forward-Forward (CFF) learning framework, extending the original Forward-Forward algorithm with inter-layer cooperation mechanisms while preserving its memory efficiency and biological plausibility. This work introduces two collaborative paradigms: Fixed CFF (F-CFF) with constant inter-layer coupling and Adaptive CFF (A-CFF) utilizing learnable collaboration parameters that evolve during training. The collaborative goodness function incorporates weighted contributions from all layers, enabling coordinated feature learning and improving overall network performance. Extensive evaluation on the MNIST dataset demonstrated significant performance improvements with the CFF approach compared to baseline Forward-Forward implementations.
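
As a rough illustration of what a collaborative goodness function could look like, the sketch below computes each layer's goodness as the sum of its squared activations (the definition used in the original Forward-Forward algorithm) and then adds a weighted share of the goodness of every other layer. The additive form, the function names, and the single scalar coupling `gamma` are assumptions made for this example; the article does not give the exact formula used in the paper.

```python
def layer_goodness(activations):
    """Goodness of one layer for one sample: the sum of squared activations,
    as in the original Forward-Forward algorithm."""
    return sum(a * a for a in activations)


def collaborative_goodness(per_layer_activations, gamma):
    """Hypothetical collaborative goodness (assumed additive form).

    Each layer keeps its own goodness and adds a gamma-weighted share of the
    goodness of every other layer. gamma = 0 recovers isolated, per-layer
    Forward-Forward goodness.
    """
    own = [layer_goodness(a) for a in per_layer_activations]
    total = sum(own)
    return [g + gamma * (total - g) for g in own]


# Two layers, one sample: goodness 3.0 and 8.0 in isolation.
activations = [[1.0, 1.0, 1.0], [2.0, 2.0]]
print(collaborative_goodness(activations, gamma=0.0))  # [3.0, 8.0]
print(collaborative_goodness(activations, gamma=0.5))  # [7.0, 9.5]
```

With a constant `gamma`, this corresponds loosely to the fixed-coupling idea behind F-CFF. Each layer's score still depends only on forward activations, so nothing here requires a backward pass through the network.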

Tests on the Fashion-MNIST dataset confirmed that the collaborative learning framework is also effective on a second image classification task. The results show that the collaborative mechanism improves convergence, allowing the network to learn more efficiently and reach higher accuracy. Layer-wise training dynamics revealed that the collaborative approach produces more coordinated feature learning across all layers of the network. Experiments also confirmed that the CFF framework retains the core advantages of the original FF algorithm, including reduced memory requirements and biological plausibility. The team implemented both fixed and adaptive collaboration parameters, giving future research in collaborative neural learning two variants to build on. According to the authors, the CFF framework offers a pathway for biologically inspired learning in resource-limited environments, with potential applications in neuromorphic computing architectures and energy-constrained AI systems.

Collaborative Forward-Forward Learning Boosts Performance

Researchers developed a Collaborative Forward-Forward (CFF) learning framework that addresses limitations in the original Forward-Forward algorithm by enabling cooperation between layers during training. This approach incorporates weighted contributions from all layers to facilitate coordinated feature learning while preserving the benefits of memory efficiency and biological plausibility. Two collaborative paradigms were developed: Fixed CFF (F-CFF) with constant inter-layer coupling and Adaptive CFF (A-CFF) utilizing learnable collaboration parameters that evolve during training. Comprehensive evaluation on the MNIST and Fashion-MNIST datasets demonstrates that this inter-layer collaboration significantly improves performance.
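
The difference between a constant coupling and a learnable one can be sketched with a single scalar parameter. The example below is an illustration under stated assumptions, not the paper's method: it assumes a collaborative goodness of the form `own + gamma * others`, uses the logistic loss from the original Forward-Forward algorithm with a threshold `theta`, and updates `gamma` by plain gradient descent. All names and sample values are hypothetical.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    return 0.5 * (1.0 + math.tanh(0.5 * x))


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def loss_and_grad(samples, gamma, theta):
    """Forward-Forward style logistic loss on an assumed collaborative
    goodness (own + gamma * others), plus its derivative w.r.t. gamma.

    samples: list of (own_goodness, others_goodness, is_positive) tuples.
    The goodness values are treated as constants, so no gradient flows
    backwards through other layers.
    """
    loss, grad = 0.0, 0.0
    for own, others, is_positive in samples:
        goodness = own + gamma * others
        if is_positive:  # positive data: push goodness above theta
            loss += softplus(theta - goodness)
            grad -= sigmoid(theta - goodness) * others
        else:  # negative data: push goodness below theta
            loss += softplus(goodness - theta)
            grad += sigmoid(goodness - theta) * others
    return loss, grad


def train_gamma(samples, gamma, theta, lr, steps, adaptive):
    """Fixed variant: gamma never changes.
    Adaptive variant: gamma follows gradient descent on the loss."""
    for _ in range(steps):
        _, grad = loss_and_grad(samples, gamma, theta)
        if adaptive:
            gamma -= lr * grad
    return gamma


# Hypothetical goodness values for one positive and one negative sample.
samples = [(1.0, 2.0, True), (0.5, 0.2, False)]
fixed = train_gamma(samples, gamma=0.1, theta=2.0, lr=0.1, steps=50, adaptive=False)
learned = train_gamma(samples, gamma=0.1, theta=2.0, lr=0.1, steps=50, adaptive=True)
print(fixed, learned)
```

In this toy setting the fixed coupling stays at its initial value, while the adaptive coupling moves in whatever direction lowers the loss on the positive and negative samples.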

Results indicate consistent accuracy gains with both paradigms: Fixed CFF achieves stable improvements of 0.6 to 0.8 percent, while Adaptive CFF demonstrates more substantial gains of 1.8 to 2.0 percent over baseline Forward-Forward implementations. These findings establish the importance of inter-layer collaboration for enhancing Forward-Forward learning and suggest potential applications in neuromorphic computing and energy-constrained artificial intelligence systems.

Collaborative Forward-Forward Learning Improves Accuracy

Extensive evaluation on the MNIST and Fashion-MNIST datasets demonstrated significant performance improvements with the CFF approach compared to baseline Forward-Forward implementations. The authors acknowledge that further research is needed to explore the full potential of CFF and its adaptability to more complex datasets and network architectures.

👉 More information

🗞 NetworkFF: Unified Layer Optimization in Forward-Only Neural Networks

🧠 ArXiv: https://arxiv.org/abs/2512.17531