The increasing prevalence of online conflict presents a significant challenge to constructive digital communication, and researchers are now investigating whether artificial intelligence can offer solutions beyond simple content moderation. Dawei Li, alongside Abdullah Alnaibari and Arslan Bisharat from Arizona State University and Loyola University Chicago, respectively, with contributions from Manny Sandoval and Yasin Silva, explores the potential for large language models (LLMs) to act as mediators in online ‘flame wars’. The work shows that LLMs can go beyond detecting harmful content to understand the dynamics of a conflict: they judge its fairness and emotional tone, then generate empathetic responses that guide participants toward resolution. Using a dataset built from Reddit conversations and a rigorous evaluation pipeline, the team finds that commercially available, closed-source LLMs currently outperform open-source alternatives in both reasoning and effective intervention. The result is a promising, though limited, step toward AI-assisted online social mediation.

LLMs Facilitate Constructive Online Dialogue

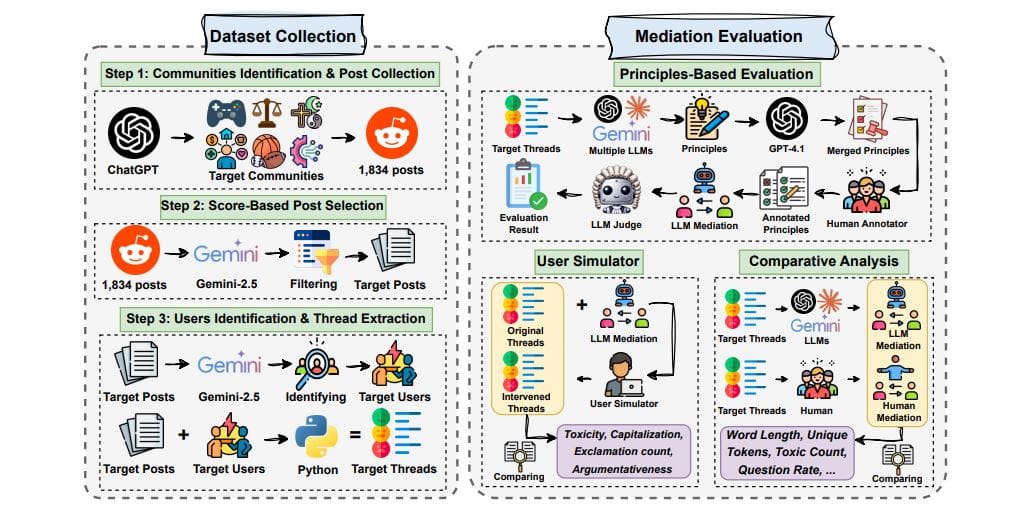

Fostering empathy and constructive dialogue represents an important frontier for responsible AI research. This work explores whether large language models (LLMs) can move beyond simply moderating online content to actively mediating online conflicts. The framework decomposes mediation into two key tasks: judgment, where an LLM evaluates the fairness and emotional dynamics of a conversation, and steering, where it generates empathetic responses to guide participants toward resolution. By assessing an LLM’s ability in both areas, scientists aim to create systems that not only detect harmful content but also promote constructive communication.
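The judgment/steering decomposition can be pictured as a two-call pipeline in which the steering prompt is conditioned on the judgment output. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: the `Judgment` fields, the prompt wording, the JSON reply format, the `llm` callable (any function mapping a prompt string to a completion string), and the `fake_llm` stand-in are all hypothetical.

```python
import json
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical interface: any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


@dataclass
class Judgment:
    """Hypothetical structured output of the judgment step."""
    fairer_side: str     # assumed label set: "A", "B", or "neither"
    emotional_tone: str  # short description of the emotional dynamics
    rationale: str       # the model's explanation for its assessment


def judge(conversation: List[str], llm: LLM) -> Judgment:
    """Judgment step: ask the model to assess fairness and emotional dynamics as JSON."""
    prompt = (
        "Read the following online argument and reply with JSON containing "
        '"fairer_side", "emotional_tone", and "rationale".\n\n'
        + "\n".join(conversation)
    )
    data = json.loads(llm(prompt))
    return Judgment(data["fairer_side"], data["emotional_tone"], data["rationale"])


def steer(conversation: List[str], judgment: Judgment, llm: LLM) -> str:
    """Steering step: generate one empathetic, de-escalating reply conditioned on the judgment."""
    prompt = (
        "You are a neutral mediator. Assessment so far: "
        f"fairer side = {judgment.fairer_side}; tone = {judgment.emotional_tone}.\n"
        "Write one empathetic message that helps both participants move toward resolution.\n\n"
        + "\n".join(conversation)
    )
    return llm(prompt).strip()


def mediate(conversation: List[str], llm: LLM) -> Tuple[Judgment, str]:
    """Run judgment first, then steering conditioned on that judgment."""
    judgment = judge(conversation, llm)
    return judgment, steer(conversation, judgment, llm)


def fake_llm(prompt: str) -> str:
    """Stand-in model for demonstration only; a real system would call an LLM here."""
    if prompt.startswith("Read"):
        return '{"fairer_side": "neither", "emotional_tone": "heated", "rationale": "both sides trade insults"}'
    return "  It sounds like you both care a lot about this topic.  "


if __name__ == "__main__":
    verdict, reply = mediate(["A: You clearly know nothing.", "B: Says the troll."], fake_llm)
    print(verdict)
    print(reply)
```

Keeping the two steps as separate calls makes it possible to score judgment and steering independently, which matches the way the framework assesses both abilities.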

LLMs De-escalate Online Conflicts Effectively

The research demonstrates significant advances in using large language models (LLMs) for online social mediation. The framework splits mediation into two tasks: judgment, in which the LLM assesses fairness and emotional dynamics, and steering, in which it generates empathetic responses that guide the conversation toward resolution. To evaluate performance rigorously, the team developed a novel evaluation pipeline that combines principle-based scoring, user simulation, and direct human comparison. Experiments reveal substantial differences in mediation quality across models, with closed-source systems consistently outperforming their open-source counterparts.
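Principle-based scoring can be read as rubric aggregation: a judge rates each mediation response against a set of principles, and the ratings are averaged per response and then per model so that models can be ranked. The following is a minimal sketch under assumptions; the principle names, the 1-5 scale, and the example ratings are hypothetical placeholders, since this summary does not list the paper's actual rubric.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical rubric: these principle names are placeholders, not the paper's list.
PRINCIPLES = ("neutrality", "empathy", "de_escalation")

Ratings = Dict[str, float]  # principle name -> judge rating on an assumed 1-5 scale


def response_score(ratings: Ratings) -> float:
    """Average one mediation response's ratings over every principle in the rubric."""
    missing = [p for p in PRINCIPLES if p not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    return mean(ratings[p] for p in PRINCIPLES)


def model_score(all_ratings: List[Ratings]) -> float:
    """Average the response-level scores over all conversations a model mediated."""
    return mean(response_score(r) for r in all_ratings)


def rank_models(results: Dict[str, List[Ratings]]) -> List[Tuple[str, float]]:
    """Return (model, score) pairs sorted from highest to lowest score."""
    scored = [(name, model_score(r)) for name, r in results.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Illustrative ratings only; these are not results from the paper.
example = {
    "model_a": [
        {"neutrality": 5, "empathy": 4, "de_escalation": 5},
        {"neutrality": 4, "empathy": 4, "de_escalation": 4},
    ],
    "model_b": [
        {"neutrality": 3, "empathy": 2, "de_escalation": 3},
    ],
}
print(rank_models(example))
```

Averaging per response before averaging per model keeps every conversation equally weighted; a real pipeline might instead weight principles differently or report them separately.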

Specifically, models such as Claude 3 and GPT-5 achieved high scores in the principle-based evaluations, which suggests that larger training datasets and intensive alignment training contribute to better performance. Performance is consistent across topical categories, including gaming, lifestyles, and religion, with slight variations by subject matter. User-simulation experiments further show how LLM mediation shifts the linguistic character of the conversation, lowering toxicity rates and encouraging more neutral responses. These findings highlight the potential of LLMs as emerging agents for online social mediation, while also revealing areas for continued improvement.
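A toxicity rate is commonly computed as the share of messages whose classifier score crosses a threshold, so a reduction can be expressed as the difference between two such rates. The sketch below compares simulated replies with and without a mediator turn. It assumes scores in [0, 1] from some toxicity classifier (this summary does not name one), a threshold of 0.5, and hypothetical example scores that are not data from the paper.

```python
from typing import Sequence


def toxicity_rate(scores: Sequence[float], threshold: float = 0.5) -> float:
    """Fraction of messages whose toxicity score is at or above `threshold`."""
    if not scores:
        raise ValueError("need at least one score")
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    flagged = sum(1 for s in scores if s >= threshold)
    return flagged / len(scores)


def toxicity_reduction(
    baseline: Sequence[float], mediated: Sequence[float], threshold: float = 0.5
) -> float:
    """Absolute drop in toxicity rate; a positive value means fewer toxic replies after mediation."""
    return toxicity_rate(baseline, threshold) - toxicity_rate(mediated, threshold)


# Hypothetical classifier scores for simulated replies (assumed values, not paper results).
baseline_scores = [0.82, 0.64, 0.31, 0.55, 0.12]  # replies with no mediator turn
mediated_scores = [0.41, 0.58, 0.22, 0.18, 0.09]  # replies after an LLM mediator turn

print(toxicity_rate(baseline_scores), toxicity_rate(mediated_scores))
print(toxicity_reduction(baseline_scores, mediated_scores))
```

A thresholded rate is easy to interpret but discards how far each score sits from the cutoff; comparing mean scores alongside it is a reasonable complement.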

LLMs as Emerging Social Mediators

This work demonstrates that large language models possess emerging capabilities as online social mediators, moving beyond simple moderation to actively understand and de-escalate conflict. The researchers assess mediation quality by evaluating an LLM’s ability to judge the fairness and emotional dynamics of a conversation and then to steer participants toward resolution. Through a multi-stage evaluation pipeline combining principle-based scoring, user simulation, and human comparison, the team showed that current LLMs can perform both judgment and steering tasks, although with varying degrees of success. Closed-source models consistently outperformed open-source counterparts in both areas, suggesting that extensive training and alignment optimization are key to effective mediation.

The strong correlation between judgment and steering performance suggests a shared underlying competence, so improvements in one area may carry over to the other. While acknowledging the limitations of current models, the findings highlight the potential for LLMs to become valuable tools for fostering constructive online dialogue. The researchers also note differences in pronoun usage between human mediators and LLMs: the models adopt a more neutral style, which contributes to the reduced toxicity of their responses. Further research could explore how to refine these models to more closely emulate the nuanced communication strategies of human mediators.
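The correlation claim can be made concrete with a Pearson coefficient computed over per-model judgment and steering scores. A high coefficient shows that the two abilities rise and fall together across models; on its own it does not show that improving one causes the other to improve. This is a minimal sketch: the model names and score values are hypothetical placeholders, and the paper may use a different correlation measure.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(vx * vy)


# Hypothetical per-model averages: (judgment accuracy, steering score). Placeholders only.
scores = {
    "model_a": (0.81, 4.3),
    "model_b": (0.74, 3.9),
    "model_c": (0.62, 3.1),
    "model_d": (0.55, 2.8),
}
judgment = [j for j, _ in scores.values()]
steering = [s for _, s in scores.values()]
r = pearson(judgment, steering)
print(f"Pearson r = {r:.3f}")
```

With only a handful of models, a single coefficient is fragile; reporting it together with the number of models, or a rank-based measure such as Spearman's, gives a fuller picture.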

👉 More information

🗞 From Moderation to Mediation: Can LLMs Serve as Mediators in Online Flame Wars?

🧠 ArXiv: https://arxiv.org/abs/2512.03005