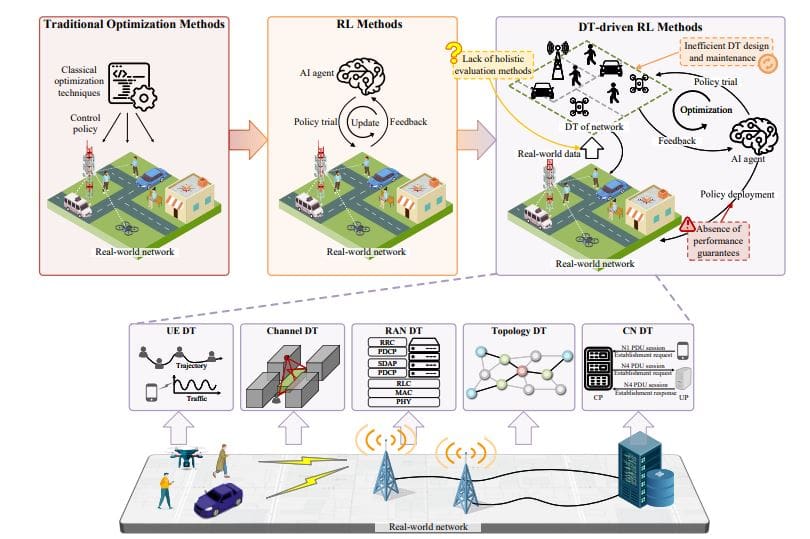

Optimising increasingly complex wireless networks is a significant challenge. Agentic artificial intelligence driven by reinforcement learning offers a potential solution, but one hampered by the costs and risks of real-world experimentation. Researchers led by Zhenyu Tao and Xiaohu You, both of Southeast University and the Pervasive Communication Research Center, together with Wei Xu, address this problem by focusing on the crucial role of digital twins: virtual replicas of the network in which AI agents can be trained safely. Their work introduces a new framework for evaluating digital twin trustworthiness that goes beyond simple accuracy measurements to assess how well a twin supports the specific task of network optimisation. This holistic approach allows high-performing digital twins to be pre-selected, substantially reducing training and testing expenses while maintaining, and even improving, real-world network performance. It represents a major step towards practical, reliable agentic AI in wireless communications.

Digital Twin Fidelity for Reinforcement Learning

Researchers have introduced a new framework for evaluating the reliability of digital twins (DTs) used in wireless networks, so that the virtual environments in which artificial intelligence (AI) agents are trained can be trusted. The core challenge is ensuring that DTs simulate real-world conditions accurately enough that agents trained within them perform well when deployed in actual networks. The work proposes a Digital Twin Bisimulation Metric (DTBM) that quantifies the discrepancy between the DT and the real world by assessing how well the DT simulates network behaviour under various conditions; a lower mismatch score indicates a more reliable DT. Through theoretical analysis and experiments, the team shows that the metric yields provable performance bounds for AI agents trained in the DT, and that using it to pre-filter less reliable DTs significantly reduces the cost of DT-driven AI workflows.
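To make the idea of a mismatch score concrete, the sketch below compares a DT and the real network modelled as small tabular Markov decision processes. It is a deliberately simplified stand-in, not the paper's DTBM: a true bisimulation metric solves a fixed-point equation over couplings of states, whereas this sketch only compares the two models at the same state-action pair, taking the worst-case weighted sum of the reward gap and the total variation distance between next-state distributions. The `Model` layout, the function names, the weights, and the toy models are all hypothetical.

```python
from typing import Dict, Tuple

# Hypothetical tabular model layout:
# (state, action) -> (expected reward, {next_state: probability})
Model = Dict[Tuple[str, str], Tuple[float, Dict[str, float]]]


def total_variation(p: Dict[str, float], q: Dict[str, float]) -> float:
    """Total variation distance between two finite next-state distributions."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(s, 0.0) - q.get(s, 0.0)) for s in support)


def mismatch(dt: Model, real: Model,
             reward_weight: float = 1.0, transition_weight: float = 1.0) -> float:
    """Worst-case weighted reward and transition gap over all state-action pairs.

    Simplified, identity-coupled stand-in for a DT-vs-real mismatch (not the DTBM).
    Assumes the DT defines every (state, action) pair that the real model does.
    """
    worst = 0.0
    for key, (r_real, p_real) in real.items():
        r_dt, p_dt = dt[key]
        gap = (reward_weight * abs(r_dt - r_real)
               + transition_weight * total_variation(p_dt, p_real))
        worst = max(worst, gap)
    return worst


# Toy example: one "real" model and two hypothetical candidate DTs.
real = {
    ("s0", "stay"): (1.0, {"s0": 0.9, "s1": 0.1}),
    ("s0", "move"): (0.0, {"s1": 1.0}),
}
dt_a = {  # exact rewards, slightly wrong "stay" transition
    ("s0", "stay"): (1.0, {"s0": 0.8, "s1": 0.2}),
    ("s0", "move"): (0.0, {"s1": 1.0}),
}
dt_b = {  # wrong "stay" reward and a noisy "move" transition
    ("s0", "stay"): (0.5, {"s0": 0.9, "s1": 0.1}),
    ("s0", "move"): (0.0, {"s0": 0.5, "s1": 0.5}),
}

print(round(mismatch(dt_a, real), 6))  # 0.1
print(round(mismatch(dt_b, real), 6))  # 0.5
```

Under this toy score `dt_a` would be preferred, in line with the rule that a lower mismatch indicates a more reliable DT.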

The central contribution of this work is the DTBM, a quantitative measure of the difference between the DT and the real-world network. It is inspired by bisimulation, a concept from computer science that captures when two state-transition systems behave equivalently. The DTBM yields guaranteed performance levels for AI agents trained within the DT, a significant advance over existing empirical validation methods. It also enables a pre-filtering step that discards less reliable DTs before AI training begins, dramatically reducing computational cost and testing effort. Planned future work includes using the mismatch value to dynamically synchronise the DT with the real world and to refine the AI training process.
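The paper's own bounds are stated in terms of the DTBM and are not reproduced in this summary. For intuition about why a small model mismatch can guarantee performance, the standard simulation lemma from reinforcement learning gives a bound of the same flavour. As a simplifying assumption, treat the DT and the real network as Markov decision processes over the same states and actions, with rewards in $[0, R_{\max}]$ and discount factor $\gamma \in [0, 1)$, and define the worst-case reward gap $\epsilon_R$ and transition gap $\epsilon_P$:

```latex
\begin{aligned}
\epsilon_R &= \max_{s,a} \bigl| r_{\mathrm{DT}}(s,a) - r(s,a) \bigr|, \qquad
\epsilon_P = \max_{s,a} \bigl\| P_{\mathrm{DT}}(\cdot \mid s,a) - P(\cdot \mid s,a) \bigr\|_1, \\
\bigl\| V^{\pi}_{\mathrm{DT}} - V^{\pi} \bigr\|_\infty
  &\le \delta := \frac{\epsilon_R}{1-\gamma} + \frac{\gamma\, \epsilon_P\, R_{\max}}{2(1-\gamma)^2}
  \quad \text{for every policy } \pi, \\
V^{\pi^*}(s) - V^{\pi_{\mathrm{DT}}}(s) &\le 2\delta \quad \text{for every state } s.
\end{aligned}
```

Here $V^{\pi}_{\mathrm{DT}}$ and $V^{\pi}$ are the values of policy $\pi$ in the DT and in the real network, $\pi_{\mathrm{DT}}$ is optimal in the DT, and $\pi^*$ is optimal in the real network. The factor of 2 follows from writing $V^{\pi^*} - V^{\pi_{\mathrm{DT}}} = (V^{\pi^*} - V^{\pi^*}_{\mathrm{DT}}) + (V^{\pi^*}_{\mathrm{DT}} - V^{\pi_{\mathrm{DT}}}_{\mathrm{DT}}) + (V^{\pi_{\mathrm{DT}}}_{\mathrm{DT}} - V^{\pi_{\mathrm{DT}}})$, where the middle term is non-positive and each outer term is at most $\delta$.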

The primary novelty lies in the formalisation of DT fidelity and the provision of provable guarantees for AI performance. Most existing DT validation methods are empirical or heuristic, whereas this work provides a mathematically grounded approach crucial for deploying AI-powered solutions in critical infrastructure where safety and reliability are paramount. Key takeaways include the critical importance of DT fidelity for successful AI agent training, the necessity of quantitative evaluation beyond empirical validation, the resource savings achieved through pre-filtering, and the increased confidence provided by formal performance guarantees.

Data-Driven Wireless Network Digital Twin Evaluation

Scientists have developed a comprehensive framework for evaluating digital twins (DTs) used in optimising wireless networks, shifting from assessing individual component accuracy to a holistic, task-centric approach. This work addresses the challenge of training artificial intelligence agents in virtual environments before deployment in real-world networks, where inaccurate DTs can lead to significant performance degradation. The evaluation framework provides a quantitative solution for determining the minimum amount of data required to build a reliable DT, transforming data collection from an open-ended process into a targeted endeavour. Experiments demonstrate that the framework effectively guides the design, selection, and lifecycle management of wireless network DTs.

For instance, the team derived an explicit sample complexity, which gives the minimum amount of data needed to reach a target accuracy and so ensures efficient use of resources. When applied to neural-network-based DTs, the holistic evaluation assesses long-term behavioural fidelity, guarding against both overfitting and underfitting and yielding a more reliable model. The researchers also applied the framework to complex, environment-level DTs composed of multiple components, such as channel, mobility, and traffic models. After constructing two candidate DT environments for a user association task, the team calculated each one's mismatch against the real network and identified the demonstrably better DT as the one with the lower mismatch.
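The summary does not give the paper's sample-complexity expression. As a generic illustration of how such a bound turns a target accuracy into a minimum sample count, the sketch below uses the two-sided Hoeffding inequality for estimating the mean of a bounded quantity, for example a per-sample reward or mismatch term in $[0, 1]$. The function name and the choice of Hoeffding's bound are assumptions for illustration, not the paper's method.

```python
import math


def hoeffding_min_samples(epsilon: float, delta: float, value_range: float = 1.0) -> int:
    """Smallest n such that the empirical mean of n i.i.d. samples lying in an
    interval of width `value_range` is within `epsilon` of the true mean with
    probability at least 1 - delta (two-sided Hoeffding bound).

    Solves 2 * exp(-2 * n * epsilon**2 / value_range**2) <= delta for n.
    """
    if epsilon <= 0 or value_range <= 0 or not 0 < delta < 1:
        raise ValueError("need epsilon > 0, value_range > 0 and 0 < delta < 1")
    return math.ceil(value_range ** 2 * math.log(2 / delta) / (2 * epsilon ** 2))


# Halving the tolerance roughly quadruples the data requirement.
print(hoeffding_min_samples(0.1, 0.05))   # 185
print(hoeffding_min_samples(0.05, 0.05))  # 738
```

The quadratic dependence on $1/\epsilon$ is what makes an explicit bound useful: it shows how quickly data-collection cost grows as the accuracy target tightens.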

This approach provides a systematic methodology for composing the most effective DT environment. The framework can also identify the optimal modelling granularity, balancing fidelity against cost by finding the level of detail that minimises expense without sacrificing training utility. In tests, coarsening the radio map resolution from 0.1 meters to 1 meter did not significantly increase the mismatch, indicating robustness to that reduction in detail.
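A granularity-selection rule of this kind can be sketched as follows, under the hypothetical assumptions that a coarser radio map (larger cell size) is always cheaper to build and maintain, and that a mismatch value has already been computed for each candidate resolution. The rule keeps the coarsest resolution whose mismatch stays within a chosen tolerance of the finest model's mismatch. The function name, the tolerance, and the mismatch values are illustrative placeholders, not measurements from the paper.

```python
from typing import Dict


def coarsest_acceptable_resolution(mismatch_by_cell_size: Dict[float, float],
                                   tolerance: float) -> float:
    """Return the largest cell size (assumed cheapest) whose mismatch exceeds the
    finest-resolution mismatch by at most `tolerance`."""
    finest = min(mismatch_by_cell_size)          # smallest cell = most detailed map
    baseline = mismatch_by_cell_size[finest]
    acceptable = [size for size, m in mismatch_by_cell_size.items()
                  if m - baseline <= tolerance]
    return max(acceptable)                       # never empty: `finest` always qualifies


# Placeholder mismatch values keyed by radio-map cell size in meters.
candidates = {0.1: 0.20, 0.5: 0.21, 1.0: 0.22, 5.0: 0.40}
print(coarsest_acceptable_resolution(candidates, tolerance=0.05))   # 1.0
print(coarsest_acceptable_resolution(candidates, tolerance=0.015))  # 0.5
```

A relative tolerance (a percentage of the baseline mismatch) would work the same way; the absolute form is used here only for simplicity.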

Trustworthy Digital Twins For Wireless Networks

This research presents a novel evaluation framework designed to ensure the trustworthiness of digital twins used to optimise modern wireless networks. Recognising the limitations of current methods that focus solely on physical accuracy, the team developed a holistic, task-centric approach that assesses how well a digital twin reflects real-world network performance during artificial intelligence training. Rather than simply measuring similarities in wireless channels or user movement, the framework quantifies the mismatch between the digital twin and the real world in terms of actual task performance. In a case study on a real-world wireless network testbed, the team reduced both training and testing costs by more than 97% without compromising the performance of the deployed policies.

Importantly, the framework identifies and excludes inferior digital twins without the risk, common with existing methods, of also filtering out potentially optimal ones. This work establishes a theoretically grounded tool for reliable artificial intelligence-based wireless network optimisation. The authors acknowledge that current digital twins often rely on fixed synchronisation schedules with the real world, which can be inefficient. Future research directions include using the mismatch value generated by the framework as a dynamic trigger for synchronisation, updating the digital twin only when its divergence from reality exceeds a predetermined threshold. Further work could also explore predicting how well digital twins transfer between different network environments, and incorporating the mismatch value as a regularisation term during artificial intelligence training to improve policy generalisation.
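Mismatch-triggered synchronisation is described as a future direction, so the sketch below is only a toy model under stated assumptions: the DT's mismatch grows additively by a known drift at each time step, and a synchronisation fully realigns the DT, resetting the mismatch to zero. The function name, the drift model, and the threshold are hypothetical.

```python
from typing import List


def triggered_sync_steps(drift_per_step: List[float], threshold: float) -> List[int]:
    """Return the time steps at which a threshold-triggered synchronisation fires.

    Toy assumptions: mismatch accumulates additively from per-step drift, and each
    synchronisation resets the mismatch to zero.
    """
    mismatch = 0.0
    sync_steps = []
    for t, drift in enumerate(drift_per_step):
        mismatch += drift
        if mismatch > threshold:      # divergence exceeds the tolerated level
            sync_steps.append(t)
            mismatch = 0.0            # assume a sync fully realigns the DT
    return sync_steps


# Slow drift, a burst of change at steps 10-11, then slow drift again.
drift = [0.01] * 10 + [0.2, 0.2] + [0.01] * 5
print(triggered_sync_steps(drift, threshold=0.15))  # [10, 11]
```

Unlike a fixed schedule, which would synchronise at regular intervals regardless of drift, this rule stays idle during the quiet periods and fires only during the burst.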

Digital Twin Evaluation for AI Agent Training

Researchers developed a unified digital twin (DT) evaluation framework to ensure trustworthy virtual environments for training artificial intelligence (AI) agents used in wireless network optimisation. The work addresses the limitations of current methods, which evaluate the physical accuracy of individual DT components, such as channel and user equipment models, rather than the holistic performance of the integrated system. The team recognised that a DT's effectiveness for agentic AI training arises from the interactions between all its constituent components, which calls for a new, task-centric assessment: the study pioneered a methodology that evaluates DTs by their ability to support effective AI agent training rather than by isolated physical accuracy metrics. The framework provides a pathway for principled refinement of DT design and maintenance, moving beyond empiricism and expert intuition. The team demonstrated significant reductions in both training and testing costs, because the framework allows high-fidelity DTs to be selected efficiently, avoiding extensive experimentation with inadequate virtual environments.
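The selection step can be pictured as ranking candidate DTs by their mismatch and discarding those above an acceptance threshold before any agent training starts. The sketch assumes the mismatch values have already been computed; the DT names, values, threshold, and the optional `keep_top` cap are hypothetical.

```python
from typing import Dict, List, Optional


def prefilter_digital_twins(mismatch_by_dt: Dict[str, float], threshold: float,
                            keep_top: Optional[int] = None) -> List[str]:
    """Return the names of candidate DTs with mismatch <= threshold, lowest first.

    Only the returned DTs would go on to (expensive) agent training and testing.
    """
    survivors = sorted((m, name) for name, m in mismatch_by_dt.items() if m <= threshold)
    ranked = [name for _, name in survivors]
    return ranked if keep_top is None else ranked[:keep_top]


candidates = {"dt_coarse": 0.42, "dt_fine": 0.12, "dt_hybrid": 0.18, "dt_legacy": 0.90}
print(prefilter_digital_twins(candidates, threshold=0.3))              # ['dt_fine', 'dt_hybrid']
print(prefilter_digital_twins(candidates, threshold=0.3, keep_top=1))  # ['dt_fine']
```

Training only the surviving candidates is where the savings would come from; how the threshold should be chosen is not specified in this summary.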

👉 More information

🗞 Toward Trustworthy Digital Twins in Agentic AI-based Wireless Network Optimization: Challenges, Solutions, and Opportunities

🧠 ArXiv: https://arxiv.org/abs/2511.19961