The increasing demands of large language models (LLMs) place significant strain on memory systems, particularly when storing the key-value cache (KVCache) of attention states, which grows with the length of the context being processed. Xinjun Yang, Qingda Hu, and Junru Li, alongside Feifei Li, Yuqi Zhou, and Yicong Zhu, address this challenge with Beluga, a novel memory architecture that leverages the Compute Express Link (CXL) standard. Beluga enables GPUs and CPUs to share a large, unified memory pool, overcoming the capacity limitations of traditional memory configurations and the performance bottlenecks associated with remote direct memory access (RDMA). By providing near-local memory access speeds over the CXL fabric, the design reduces latency and simplifies programming. Beluga-KVCache delivers an 89.6% reduction in time to first token and a 7.35x throughput improvement compared with RDMA-based solutions, a significant step towards efficient and scalable LLM inference.

The work sits within a broader body of research on disaggregated memory. CXL is a dominant theme, investigated for memory expansion, pooling, and acceleration, while RDMA offers an alternative approach to efficient data transfer. Such systems often combine traditional DRAM with newer technologies such as Non-Volatile Memory Express (NVMe) storage, and researchers are exploring mechanisms to optimize memory attributes and bypass the CPU for I/O operations. System and storage architectures under investigation include scalable storage engines such as ScaleStore and learned key-value stores such as ROLEX.

Research extends to scalable and transactional indexes designed for distributed storage systems. A significant focus lies on enabling CXL memory expansion for in-memory databases to increase their performance and capacity. LLMs and AI acceleration are major drivers of this research, with techniques developed to speed up LLM generation and reduce latency. Researchers are leveraging CXL for efficient training of large models and optimizing the key-value cache used in LLMs, including work on adaptive compression and on techniques for processing very long sequences. Model compression is also being explored to reduce computational costs.

Performance optimization techniques are central to these advancements. Extensive research focuses on caching strategies for both storage and LLM workloads, alongside data prefetching. Parallel processing speeds up computation, transaction processing ensures data consistency and reliability, and data locality techniques reduce latency by minimizing data movement. Notable systems include ScaleStore and CHIME, which reflect a broad effort to build more flexible, scalable, and performant systems for demanding workloads such as LLMs and data-intensive applications. The common focus is on disaggregating memory, optimizing data access, and accelerating computation.

CXL Interconnect Accelerates Large Language Model Inference

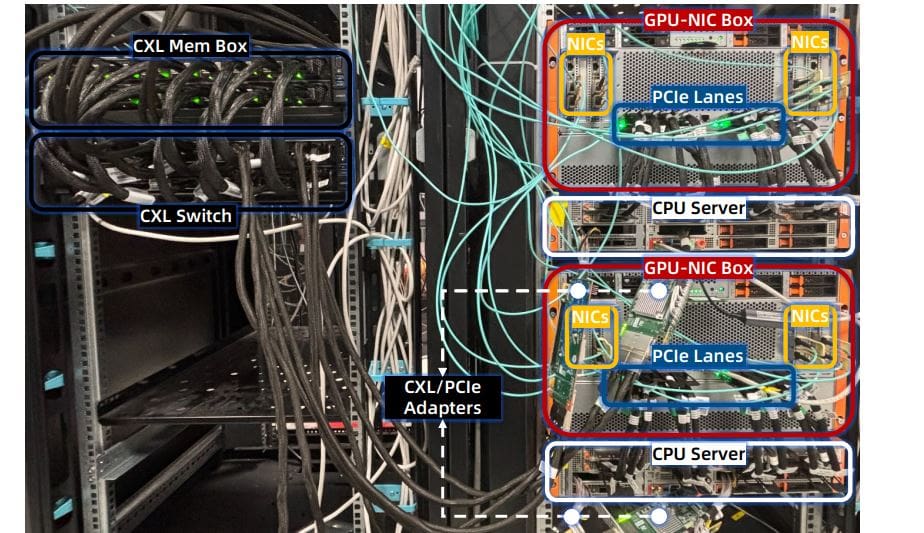

The research addresses a critical bottleneck in LLM inference, namely memory capacity, and introduces Beluga, a memory architecture designed to overcome the limitations of current systems. Recognizing that high-bandwidth memory (HBM) on GPUs is insufficient for the expanding demands of LLMs, the team engineered a system that uses CXL switches to connect GPUs and CPUs to a shared, large-scale memory pool. This approach delivers near-local memory latency by supporting native load/store access semantics over the CXL fabric, which significantly reduces programming complexity and overhead. To validate the design, the team constructed an experimental platform featuring dual Intel Xeon Platinum 8575C CPUs, 2TB of DDR5 DRAM, and eight NVIDIA H20 GPUs, each with 96GB of memory.
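To see why GPU memory alone runs out, a rough sizing sketch helps. The snippet below uses the standard per-token KVCache estimate (one key vector and one value vector per KV head per layer) with a hypothetical model shape. The layer count, head count, head dimension, and FP16 precision are illustrative assumptions rather than figures from the paper, and 96GB is treated as 96 GiB.

```python
def kv_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int,
                       bytes_per_value: int = 2) -> int:
    """Bytes of KVCache held per token: a key and a value per KV head per layer."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


# Hypothetical model shape, chosen for illustration only (not from the paper).
NUM_LAYERS = 80
NUM_KV_HEADS = 8
HEAD_DIM = 128

GIB = 1024 ** 3
hbm_bytes = 96 * GIB  # one H20 GPU, assuming 96GB means 96 GiB

per_token = kv_bytes_per_token(NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM)  # FP16 values

# Upper bound only: model weights and activations also occupy HBM.
max_tokens_in_hbm = hbm_bytes // per_token

print(f"KVCache per token: {per_token / 1024:.0f} KiB")
print(f"Tokens that fit in one GPU's memory (upper bound): {max_tokens_in_hbm}")
```

Under these assumed values each token occupies 320 KiB, so even an otherwise empty 96GB GPU could hold only about 314,000 tokens of KVCache, and far fewer once model weights are loaded.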

The team implemented an 8TB CXL memory pool using 32 DDR5 modules, connected via PCIe 5.0 x16 adapters and CXL switches. A key focus was a comprehensive performance analysis of the CXL 2.0 hardware, particularly for direct GPU-to-memory access. The researchers systematically investigated data consistency, recognizing that CXL 2.0 does not inherently support cache coherence across multiple CPUs. They proposed and tested methods for ensuring that data written by a host actually reaches CXL memory, and that readers observe fresh data. Through rigorous experimentation, they determined that configuring memory as uncacheable provides the most reliable solution for maintaining data integrity. Beluga-KVCache, a system tailored for managing the large-scale KVCache in LLM inference, achieved an 89.6% reduction in Time-To-First-Token and a 7.35x throughput improvement compared to RDMA-based solutions.
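The platform figures quoted above can be cross-checked with simple arithmetic. This sketch assumes binary units (1 TB = 1024 GB), derives the per-module capacity, and compares the CXL pool with the aggregate GPU memory and the host DRAM.

```python
GB_PER_TB = 1024  # assumption: binary units

POOL_TB = 8          # CXL memory pool
NUM_MODULES = 32     # DDR5 modules in the pool
HOST_DRAM_TB = 2     # host DDR5 DRAM
NUM_GPUS = 8         # NVIDIA H20 GPUs
GPU_MEM_GB = 96      # memory per GPU

pool_gb = POOL_TB * GB_PER_TB
per_module_gb = pool_gb / NUM_MODULES
total_gpu_gb = NUM_GPUS * GPU_MEM_GB

# How much larger the pool is than the other memory tiers.
pool_to_gpu_ratio = pool_gb / total_gpu_gb
pool_to_host_ratio = POOL_TB / HOST_DRAM_TB

print(f"Capacity per module: {per_module_gb:.0f} GB")
print(f"Aggregate GPU memory: {total_gpu_gb} GB")
print(f"Pool vs. GPU memory: {pool_to_gpu_ratio:.1f}x")
print(f"Pool vs. host DRAM: {pool_to_host_ratio:.0f}x")
```

With these assumptions each module works out to 256 GB, and the pool is roughly 10.7 times the combined GPU memory and 4 times the host DRAM.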

Beluga Accelerates LLM Inference with CXL Memory

Beluga addresses the growing memory demands of LLM inference by giving GPUs and CPUs efficient access to a large, shared memory pool through CXL switches, removing the capacity limits of traditional memory configurations. The approach delivers near-local memory latency while simplifying programming and minimizing synchronization overhead. A detailed characterization of a commercial CXL switch-based memory pool informed the design of Beluga-KVCache, a system tailored for managing the large-scale KVCache that LLM inference depends on.

Experiments demonstrate that Beluga-KVCache achieves an 89.6% reduction in Time-To-First-Token (TTFT) and a 7.35x improvement in throughput within the vLLM inference engine when compared to RDMA-based solutions. These results confirm Beluga’s ability to substantially accelerate LLM inference.
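A percentage reduction and a throughput multiplier are not directly comparable, so it can help to convert the TTFT figure into a speedup factor. The sketch below performs that conversion; the 1000 ms baseline is a made-up number used only to illustrate the arithmetic, not a measurement from the paper.

```python
def speedup_from_reduction(reduction: float) -> float:
    """Convert a fractional reduction in time (e.g. 0.896) into a speedup factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in the range [0, 1)")
    return 1.0 / (1.0 - reduction)


TTFT_REDUCTION = 0.896       # reported by the authors
BASELINE_TTFT_MS = 1000.0    # hypothetical baseline, for illustration only

ttft_speedup = speedup_from_reduction(TTFT_REDUCTION)
new_ttft_ms = BASELINE_TTFT_MS * (1.0 - TTFT_REDUCTION)

print(f"TTFT speedup factor: {ttft_speedup:.1f}x")
print(f"Hypothetical TTFT after reduction: {new_ttft_ms:.0f} ms")
```

An 89.6% reduction corresponds to a TTFT roughly 9.6 times lower, so a hypothetical 1000 ms baseline would drop to about 104 ms. The 7.35x throughput figure is already a ratio and needs no conversion.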

On the latency side, non-temporal stores gave CPU-to-CXL writes significantly lower latency than standard writes or accesses to uncacheable memory. Similarly, for GPU-to-host transfers, disabling the Data Direct I/O (DDIO) cache yielded lower latency than methods relying on CPU cache flushes. For 16KB transfers, the Intel Data Streaming Accelerator (DSA) engine delivered top-tier performance when using uncacheable memory.

👉 More information

🗞 Beluga: A CXL-Based Memory Architecture for Scalable and Efficient LLM KVCache Management

🧠 ArXiv: https://arxiv.org/abs/2511.20172