Adversarial robustness is a critical challenge for vision-language models, particularly as these systems find increasing application in sensitive areas. Yuqi Li, together with Junhao Dong and Chuanguang Yang from Nanyang Technological University, and colleagues address this issue by introducing a new framework, MMT-ARD, which improves a model’s ability to withstand deliberately misleading inputs. The team achieves this through a novel approach to knowledge distillation, using multiple ‘teacher’ models to give the ‘student’ model a more diverse set of learning signals. The method raises robust accuracy under adversarial attack by 4.32% and also accelerates training, achieving a 2.3-fold efficiency gain over conventional single-teacher techniques and pointing to a scalable way of building more reliable multimodal vision-language models.

Safety-critical applications demand robust performance, making adversarial robustness a crucial concern. While adversarial knowledge distillation shows promise in transferring robustness from teacher to student models, traditional single-teacher approaches suffer from limited knowledge diversity and slow convergence. To address these challenges, researchers propose MMT-ARD, a Multimodal Multi-Teacher Adversarial Robust Distillation framework. The key innovation lies in a dual-teacher knowledge fusion architecture that collaboratively optimises clean feature preservation and robust feature enhancement, improving both the reliability and performance of machine learning systems in critical applications.

Adversarial Robustness via Knowledge Fusion Distillation

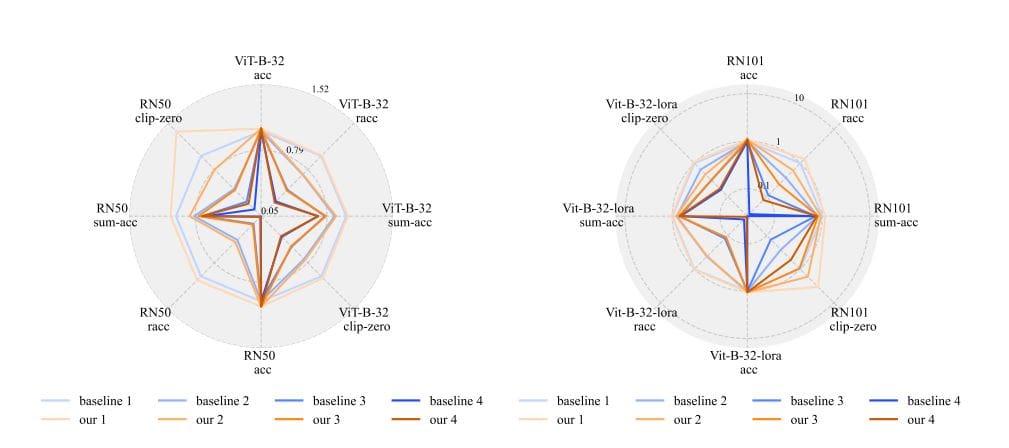

This research introduces MMT-ARD, designed to improve the robustness of vision-language models against adversarial attacks. The core idea revolves around a dual-teacher knowledge fusion architecture combined with a dynamic weight allocation strategy. The system utilises multiple teacher models to provide more robust and diverse knowledge for the student model, combining knowledge rather than simply averaging it. Dynamic weight allocation adjusts the importance of each teacher’s knowledge during training, prioritising the most reliable and informative teachers. This method improves robust accuracy by 4.32% on the ImageNet dataset and enhances zero-shot accuracy by 3.5%, while also delivering a 2.3-fold increase in training efficiency.
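The dual-teacher idea can be illustrated with a minimal sketch. It assumes, hypothetically, that one teacher supervises the student's features on clean inputs and a second, robust teacher supervises its features on adversarial inputs, with a mean-squared-error penalty for each. The function names, the choice of MSE, and the fixed weights `w_clean` and `w_robust` are illustrative assumptions and are not taken from the paper.

```python
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def dual_teacher_loss(
    student_clean: Sequence[float],
    student_adv: Sequence[float],
    clean_teacher_feat: Sequence[float],
    robust_teacher_feat: Sequence[float],
    w_clean: float = 0.5,   # assumed fixed weight, for illustration only
    w_robust: float = 0.5,  # assumed fixed weight, for illustration only
) -> float:
    """Hypothetical two-term distillation objective.

    preserve: keep the student's clean features close to the clean teacher.
    enhance:  pull the student's adversarial features toward the robust teacher.
    """
    preserve = mse(student_clean, clean_teacher_feat)
    enhance = mse(student_adv, robust_teacher_feat)
    return w_clean * preserve + w_robust * enhance


if __name__ == "__main__":
    loss = dual_teacher_loss(
        student_clean=[1.0, 0.0],
        student_adv=[0.0, 0.0],
        clean_teacher_feat=[1.0, 0.0],
        robust_teacher_feat=[0.0, 2.0],
    )
    print(loss)
```

In this toy call the student already matches the clean teacher, so the whole loss comes from the gap to the robust teacher on the adversarial input. In the framework described above, the two weights would be adjusted during training rather than fixed.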

Multimodal Distillation Boosts Robust Vision-Language Models

The research team developed a novel Multimodal Multi-Teacher Adversarial Robust Distillation (MMT-ARD) framework to enhance the adversarial robustness of vision-language models. Experiments demonstrate an improvement of +4.32% in robust accuracy and +3.5% in zero-shot accuracy when utilising the ViT-B-32 model. These gains were achieved through a multi-teacher knowledge fusion architecture designed to jointly optimise clean feature preservation and robust feature enhancement.

A key innovation within MMT-ARD is the Dynamic Importance Weighting (DIW) algorithm, which adaptively balances knowledge transfer from multiple teachers based on confidence and feature relevance, leading to more reliable and accurate results. The method achieves a 2.3-fold increase in training efficiency, demonstrating its practical viability for real-world applications.
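To make confidence-based weighting concrete, here is a minimal sketch and not the authors' DIW algorithm. It assumes each teacher outputs a probability distribution, uses the maximum probability as a stand-in for confidence, and turns those scores into per-teacher weights with a temperature softmax. The feature-relevance term mentioned above is omitted, and every function name and the `temperature` parameter are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def confidence(probs: Sequence[float]) -> float:
    """Assumed confidence proxy: the teacher's highest class probability."""
    return max(probs)


def dynamic_weights(
    teacher_probs: Sequence[Sequence[float]], temperature: float = 0.5
) -> List[float]:
    """One weight per teacher; more confident teachers receive more weight."""
    return softmax([confidence(p) for p in teacher_probs], temperature)


def fuse(
    teacher_probs: Sequence[Sequence[float]], weights: Sequence[float]
) -> List[float]:
    """Weighted mixture of the teachers' distributions."""
    n_classes = len(teacher_probs[0])
    return [
        sum(w * p[i] for w, p in zip(weights, teacher_probs))
        for i in range(n_classes)
    ]


def kl_divergence(
    target: Sequence[float], pred: Sequence[float], eps: float = 1e-12
) -> float:
    """KL(target || pred), usable as a distillation loss for the student."""
    return sum(
        t * math.log((t + eps) / (q + eps)) for t, q in zip(target, pred) if t > 0
    )


if __name__ == "__main__":
    teachers = [[0.9, 0.05, 0.05], [0.4, 0.3, 0.3]]  # toy teacher outputs
    student = [0.6, 0.2, 0.2]                        # toy student output
    weights = dynamic_weights(teachers)
    target = fuse(teachers, weights)
    print(weights, target, kl_divergence(target, student))
```

Because the weights sum to one, the fused target remains a valid probability distribution. Lowering `temperature` shifts more weight toward the most confident teacher, while a large value approaches plain averaging.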

Multi-Teacher Distillation Boosts Vision-Language Robustness

This research presents a new framework, MMT-ARD, designed to improve the robustness of vision-language models against adversarial attacks. The team addressed limitations in existing knowledge distillation techniques by developing a multi-teacher approach, fusing knowledge from multiple models to enhance both clean accuracy and resilience to adversarial examples. A key innovation lies in a dynamic weighting strategy that prioritises learning from teachers best equipped to handle challenging inputs, and an adaptive function that balances knowledge transfer across different data types. Experiments on the ImageNet dataset demonstrate that MMT-ARD improves robust accuracy by 4.32% and zero-shot accuracy by 3.5% when applied to a ViT-B-32 model, while also achieving a 2.3-fold increase in training efficiency. These results suggest that the framework effectively enhances the reliability of multimodal systems in potentially sensitive applications.

👉 More information

🗞 MMT-ARD: Multimodal Multi-Teacher Adversarial Distillation for Robust Vision-Language Models

🧠 ArXiv: https://arxiv.org/abs/2511.17448