The pursuit of artificial intelligence increasingly demands efficient systems, yet recent advances in multimodal learning, which combines vision and language, have often relied on ever-larger models. Mark Endo and Serena Yeung-Levy now demonstrate that shrinking these models does not reduce all capabilities proportionally, and instead exposes critical bottlenecks in visual understanding. Their work finds that reducing the capacity of the language component disproportionately harms the system’s ability to process visual information, rather than its inherent language skills. To overcome this limitation, the researchers developed an approach termed Extract+Think, which explicitly trains the system to extract relevant visual details before applying reasoning, and which they report sets a new standard for performance and efficiency among small multimodal models.

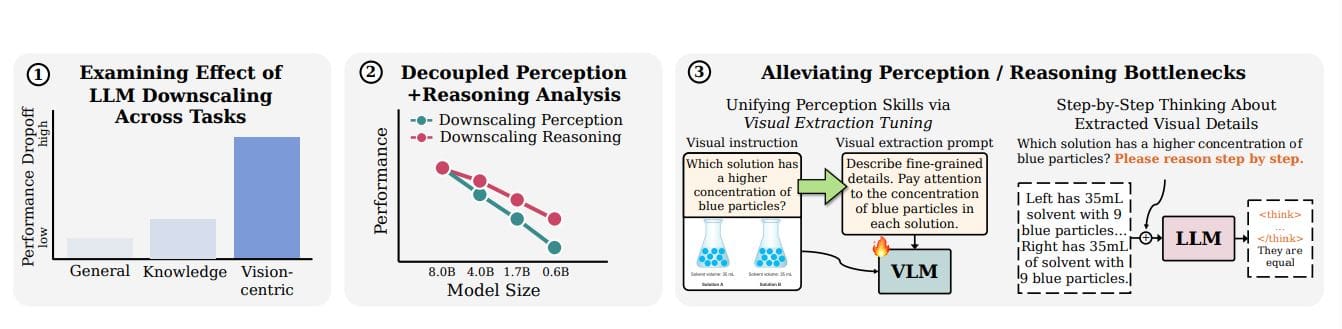

Reducing the capacity of the large language model (LLM) inside a multimodal system affects its capabilities unevenly, with visual processing proving particularly vulnerable. In the researchers’ experiments, tasks that rely on visual processing suffer a larger performance drop than tasks driven primarily by language understanding.

Visual Extraction Tuning and Reasoning Steps

To understand this decline, the researchers isolated the effects of language model downscaling on visual perception and on reasoning. They found that even when a task depends solely on visual perception, performance drops sharply, often matching or exceeding the drop on reasoning tasks. This suggests a fundamental loss of the ability to interpret visual information, not simply a decline in visual reasoning ability. To address this bottleneck, the researchers introduced visual extraction tuning, a training method that explicitly teaches the model to consistently extract instruction-relevant visual details. To build the training data, they converted question-answer pairs from existing visual instruction datasets into declarative statements, such as “The cheese is on the left side of the plate.” These statements were then used as prompts for generating detailed image descriptions.
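
The data construction step described above can be sketched roughly as follows. This is a minimal illustration, not the authors' pipeline: the `QAPair` class, the `naive_rewrite` function, and the prompt wording are all hypothetical, and the template-based rewrite is only a stand-in for whatever conversion method the paper actually uses to turn question-answer pairs into declarative statements.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class QAPair:
    """One question-answer pair from a visual instruction dataset (hypothetical type)."""
    question: str
    answer: str


def naive_rewrite(pair: QAPair) -> str:
    """Toy stand-in for QA-to-statement conversion.

    Assumption: a real pipeline would produce a fluent statement such as
    "The cheese is on the left side of the plate."; this template only
    keeps the same information in a single declarative sentence.
    """
    question = pair.question.strip().rstrip("?")
    answer = pair.answer.strip().rstrip(".")
    return f"For the question '{question}', the answer is: {answer}."


def build_description_prompt(
    pairs: List[QAPair],
    rewrite: Callable[[QAPair], str] = naive_rewrite,
) -> str:
    """Turn QA pairs into statements and wrap them in a description prompt."""
    statements = [rewrite(pair) for pair in pairs]
    bullets = "\n".join(f"- {statement}" for statement in statements)
    # The prompt wording below is an assumption made for illustration.
    return (
        "Describe the image in detail. "
        "Make sure the description covers these facts:\n" + bullets
    )


example_pairs = [QAPair("Where is the cheese?", "on the left side of the plate")]
example_prompt = build_description_prompt(example_pairs)
```

Passing the rewrite step in as a function keeps the sketch independent of any particular conversion method, so a stronger rewriter could be swapped in without changing the prompt assembly.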

Visual Capacity Limits Multimodal Model Scaling

The researchers analyzed in detail how reducing the size of the large language model affects a multimodal system’s ability to process visual information. Decreasing model capacity disproportionately affects visual capabilities, rather than the core language understanding inherited from the language model itself. To pinpoint the source of this decline, the team separated the effects of downscaling on perception from its effects on reasoning, and found that performance on perception-only tasks still drops sharply, often matching or exceeding the drop on reasoning tasks. This points to a fundamental loss of the ability to interpret and extract visual information, not simply a decline in visual reasoning.

To address this bottleneck, researchers introduced visual extraction tuning, a training method that explicitly teaches the model to consistently extract instruction-relevant visual details across different tasks. The team then combined this enhanced visual extraction with step-by-step reasoning applied to the extracted details, substantially improving performance without requiring additional visual training data. This two-stage approach, named Extract+Think, surpasses a baseline framework while using a significantly smaller perception module and a considerably smaller reasoning module. Even when trained from scratch, the new approach improves upon existing models while using far fewer visual training samples. According to the authors, these findings offer the first systematic characterization of downscaling effects in multimodal models and provide effective ways to overcome their limitations, paving the way for future advances in small-scale multimodal intelligence.
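
The two-stage flow described above can be sketched as a small function. This is only an outline under stated assumptions: `extract_then_think`, the `perceive` and `reason` callables, and both prompt templates are hypothetical placeholders for a visual-extraction-tuned perception model and a text-only reasoning model, not the paper's actual interfaces.

```python
from typing import Any, Callable


def extract_then_think(
    image: Any,
    question: str,
    perceive: Callable[[Any, str], str],
    reason: Callable[[str], str],
) -> str:
    """Run perception first, then step-by-step reasoning over the extracted text.

    perceive: hypothetical perception model; takes (image, prompt) and
              returns a textual description of instruction-relevant details.
    reason:   hypothetical reasoning model; takes a text prompt and returns
              an answer. It never sees the image, only the extracted details.
    """
    # Stage 1 (extract): ask for the visual details relevant to the question.
    extraction_prompt = f"Describe the visual details relevant to: {question}"
    details = perceive(image, extraction_prompt)

    # Stage 2 (think): reason step by step over the extracted details.
    reasoning_prompt = (
        f"Visual details:\n{details}\n\n"
        f"Question: {question}\n"
        "Think step by step, then give the final answer."
    )
    return reason(reasoning_prompt)


# Stub models so the sketch runs without any real model.
def stub_perceive(image: Any, prompt: str) -> str:
    return "The cheese is on the left side of the plate."


def stub_reason(prompt: str) -> str:
    # Echo the prompt so the caller can inspect what stage 2 received.
    return prompt


stage_two_input = extract_then_think(
    image=None,
    question="Where is the cheese?",
    perceive=stub_perceive,
    reason=stub_reason,
)
```

Keeping the two stages as separate callables mirrors the idea that the perception and reasoning modules can be sized and trained independently.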

Visual Reasoning Improves with Extract+Think Framework

This research presents a systematic investigation into the effects of reducing the size of large language models within multimodal systems, revealing that visual capabilities are disproportionately impacted by downscaling. The team identified that both the initial perception of visual information and subsequent reasoning abilities become significant bottlenecks as model size decreases. To address these limitations, they developed a novel two-stage framework, termed Extract+Think, which employs visual extraction tuning to improve the model’s ability to identify relevant visual details across different tasks. This extracted information is then used in a step-by-step reasoning process, achieving strong performance without requiring additional visual training data.

The results indicate that this approach offers a highly efficient paradigm for training small multimodal models, setting a new standard for both performance and data efficiency. Notably, the team’s model outperforms larger baseline models trained directly on in-domain data and exceeds the performance of comparable systems trained with extensive additional captioning examples, all while using significantly fewer visual samples. Future research directions include exploring downscaling across a wider range of model sizes, comparing the effects of downscaling the visual and language components, and investigating the impact of varying dataset sizes.

👉 More information

🗞 Downscaling Intelligence: Exploring Perception and Reasoning Bottlenecks in Small Multimodal Models

🧠 ArXiv: https://arxiv.org/abs/2511.17487