Federated learning offers a powerful way to train machine learning models on decentralised data while keeping that data private, but today's large language models pose significant challenges to the technique. Ziyue Xu, Zhihong Zhang, Holger R. Roth, and colleagues at Nvidia Corp. tackle the escalating communication and resource demands of federated learning with large language models by rethinking how data is transmitted. Their work introduces a system that combines message quantization, which shrinks the messages exchanged between clients and the server, with container and file streaming, which keep peak memory usage low. Together, these techniques improve the scalability and practicality of federated learning, allowing large models to be trained across distributed networks more robustly and efficiently in real-world applications.

Federated Learning for Large Language Models

This research addresses the challenges of scaling Federated Learning (FL) to very large language models (LLMs), focusing on communication costs and memory constraints. Because LLMs are so large, transmitting model updates between clients and the server is expensive, and limited client memory makes it hard to store and process these models. To overcome these hurdles, the team combined message quantization with streaming. Quantization lowers the numerical precision of model parameters, shrinking the transmitted updates, while streaming breaks updates into smaller chunks, reducing memory usage and potentially allowing updates to be processed as they arrive.

The researchers explored several precision levels (32-bit, 16-bit, 8-bit, and 4-bit), with the 4-bit level covering the NF4 and FP4 schemes. For streaming, model updates were broken into smaller chunks and transmitted sequentially, and the team compared regular transmission, container streaming, and file streaming. Experiments used the Llama-3.2-1B model and the databricks-dolly-15k dataset, applying supervised fine-tuning (SFT) to evaluate how quantization and streaming affect model convergence. The results show that quantization substantially reduces message size: 4-bit quantization shrinks messages to 14% of their original size without significantly degrading convergence, with training loss curves remaining similar to those of full-precision training.
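One plausible reason a 4-bit message lands near 14% of the 32-bit size, rather than exactly 12.5%, is per-block metadata. The sketch below counts transmitted bits per parameter under that hypothesis. It assumes, without confirmation from the paper, that the 8-bit and 4-bit schemes store one 32-bit scale per block of 64 values, as blockwise quantizers commonly do. The constants and function names are illustrative only.

```python
# Hypothetical accounting of bits transmitted per model parameter.
# ASSUMPTION (not confirmed by the paper): the 8-bit and 4-bit schemes are
# blockwise and store one float32 scale ("absmax") per block of 64 values.

FULL_PRECISION_BITS = 32
SCALE_BITS = 32   # one float32 scale per block (assumed)
BLOCK_SIZE = 64   # values per quantization block (assumed)


def bits_per_param(value_bits: int, blockwise: bool) -> float:
    """Payload bits per parameter, including any per-block scale overhead."""
    overhead = SCALE_BITS / BLOCK_SIZE if blockwise else 0.0
    return value_bits + overhead


def relative_size(value_bits: int, blockwise: bool) -> float:
    """Message size as a fraction of the 32-bit baseline."""
    return bits_per_param(value_bits, blockwise) / FULL_PRECISION_BITS


schemes = {
    "fp32": (32, False),
    "fp16": (16, False),
    "8-bit blockwise": (8, True),
    "4-bit blockwise (NF4/FP4-style)": (4, True),
}

for name, (bits, blockwise) in schemes.items():
    print(f"{name:32s} {relative_size(bits, blockwise):.4f}")
```

Under these assumptions the 4-bit line works out to 4.5 / 32 ≈ 0.1406, close to the reported 14%. A different block size or scale format would change the overhead term.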

Streaming substantially reduces peak memory usage, with file streaming achieving the lowest peak. Combining quantization and streaming yields the largest benefits for both communication and memory efficiency. Future work will include extensive multi-client FL evaluations with larger LLMs, studies of per-layer sensitivity to quantization, and adaptive quantization schemes. The team also plans adaptive streaming mechanisms that adjust dynamically to network conditions and hardware capabilities, a comprehensive systems characterization, and compatibility with other privacy-preserving mechanisms such as Secure Aggregation and Differential Privacy. These advances aim to make federated learning practical for increasingly complex language models.

Federated Learning with Filtered Task Data

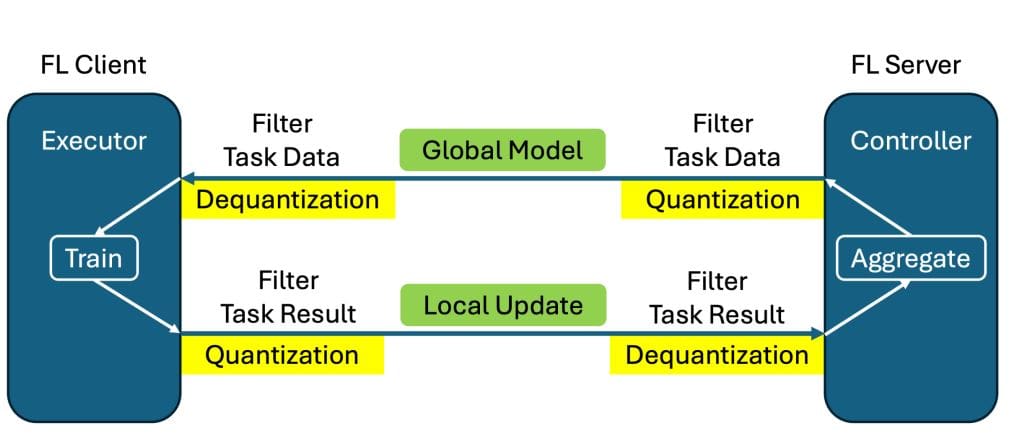

This study addresses communication and memory constraints in federated learning, particularly when training large language models. The researchers built their system on the open-source FLARE SDK to improve efficiency and scalability. The system uses a client-server architecture in which a Controller orchestrates task execution across remote Executors deployed on individual client nodes: the Controller distributes 'Task Data' and aggregates the 'Task Result' messages that come back. Filters can be inserted along this path, giving a modular workflow for data transformations and security enhancements such as homomorphic encryption and differential privacy.
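The Controller/Executor flow with filters on both sides can be sketched in a few lines. This is a simplified illustration, not the FLARE API. The classes `Controller` and `Executor` and the helpers `apply_filters` and `clip_filter` are hypothetical. "Training" is replaced by adding a fixed offset, and the clipping filter stands in for any data transformation; it is not differential privacy.

```python
from typing import Callable, Dict, List

# Hypothetical, simplified model of the Controller/Executor/filter pattern.
# None of these names are NVIDIA FLARE APIs; they only illustrate the data flow.

Weights = Dict[str, List[float]]
Filter = Callable[[Weights], Weights]


def apply_filters(data: Weights, filters: List[Filter]) -> Weights:
    """Pass a message through a chain of filters, in order."""
    for f in filters:
        data = f(data)
    return data


def clip_filter(limit: float) -> Filter:
    """Illustrative transformation: clip every value to [-limit, limit]."""
    def _clip(data: Weights) -> Weights:
        return {k: [max(-limit, min(limit, v)) for v in vals] for k, vals in data.items()}
    return _clip


class Executor:
    """Client side: filters incoming Task Data, 'trains', filters the outgoing Task Result."""

    def __init__(self, offset: float, in_filters: List[Filter], out_filters: List[Filter]):
        self.offset = offset            # stand-in for local training
        self.in_filters = in_filters
        self.out_filters = out_filters

    def execute(self, task_data: Weights) -> Weights:
        weights = apply_filters(task_data, self.in_filters)
        trained = {k: [v + self.offset for v in vals] for k, vals in weights.items()}
        return apply_filters(trained, self.out_filters)


class Controller:
    """Server side: sends filtered Task Data, filters each Task Result, then averages."""

    def __init__(self, executors: List[Executor], out_filters: List[Filter], in_filters: List[Filter]):
        self.executors = executors
        self.out_filters = out_filters
        self.in_filters = in_filters

    def run_round(self, global_weights: Weights) -> Weights:
        task_data = apply_filters(global_weights, self.out_filters)
        results = [apply_filters(ex.execute(task_data), self.in_filters) for ex in self.executors]
        # Element-wise average of the returned results (equal-weight averaging).
        return {
            k: [sum(r[k][i] for r in results) / len(results) for i in range(len(vals))]
            for k, vals in global_weights.items()
        }


clients = [Executor(0.5, [], [clip_filter(1.0)]), Executor(-0.5, [], [clip_filter(1.0)])]
server = Controller(clients, out_filters=[], in_filters=[])
print(server.run_round({"w": [0.2, 0.9]}))  # approximately {'w': [0.2, 0.7]}
```

Because filters are plain functions applied at fixed points, swapping in encryption, quantization, or privacy filters does not require changes to the Controller or Executor logic. That modularity is what the filter-based design is meant to provide.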

A core innovation is a two-way quantization workflow that reduces message size and bandwidth consumption. Quantization and dequantization filters are applied at every communication point. Outgoing data from both clients and the server is compressed before transmission and restored to its original precision on receipt, so both server-side aggregation and client-side training operate at full precision. To address high local memory usage, the researchers also implemented streaming. An entire large language model can be immense, but an individual layer typically requires far less memory, so the team designed the system to stream data layer by layer.
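The two-way workflow can be illustrated with a minimal quantize/dequantize filter pair. The sketch assumes symmetric blockwise absmax quantization to signed 8-bit integers with a block size of 64. That scheme is a stand-in chosen for simplicity; the paper's NF4/FP4 and 16-bit formats are not reproduced. Both directions pass through the pair, and the "training" step in between runs on dequantized, full-precision values.

```python
from typing import List, Tuple

# Minimal sketch of a quantize/dequantize filter pair, ASSUMING symmetric
# blockwise absmax quantization to signed 8-bit integers. Block size and
# scheme are assumptions; the paper's 16-bit and 4-bit (NF4/FP4) formats
# are not reproduced here.

BLOCK = 64   # values per block (assumed)
QMAX = 127   # largest magnitude used for signed 8-bit codes

Message = Tuple[List[int], List[float]]


def quantize(values: List[float]) -> Message:
    """Outgoing filter: int8 codes plus one float scale per block."""
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(values), BLOCK):
        block = values[start:start + BLOCK]
        absmax = max(abs(v) for v in block)
        scale = absmax / QMAX if absmax > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-QMAX, min(QMAX, round(v / scale))) for v in block)
    return codes, scales


def dequantize(message: Message) -> List[float]:
    """Incoming filter: restore approximate full-precision values."""
    codes, scales = message
    return [c * scales[i // BLOCK] for i, c in enumerate(codes)]


# Server -> client: global weights are quantized, then dequantized on receipt.
global_weights = [0.01 * i - 0.5 for i in range(200)]
client_weights = dequantize(quantize(global_weights))

# Client trains in full precision (a trivial stand-in update here).
local_result = [w + 0.001 for w in client_weights]

# Client -> server: the result is quantized, then dequantized for aggregation.
server_view = dequantize(quantize(local_result))

max_err = max(abs(a - b) for a, b in zip(local_result, server_view))
print(f"max round-trip error: {max_err:.5f}")
```

Rounding to the nearest code bounds each value's error by half its block's scale. Per-block scales keep that bound tight when magnitudes vary across a tensor.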

This avoids pre-allocating a large buffer to reassemble the entire message, significantly reducing memory overhead. For example, in the Llama-3.2-1B model the token embedding layer alone takes approximately 1002 MB, while the remaining layers stay within manageable limits, so layer-wise streaming effectively mitigates memory constraints.
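Layer-by-layer streaming can be sketched as a generator. The sender serializes and yields one layer at a time, and the receiver deserializes each chunk as it arrives, so the largest serialized buffer held at once is a single layer rather than the whole message. The function names, the float32 wire format, and the toy layer names are hypothetical. The receiver here still accumulates the rebuilt model; what streaming avoids is a single buffer sized to the entire serialized message.

```python
import struct
from typing import Dict, Iterator, List, Tuple

# Hypothetical sketch of layer-by-layer streaming. Names and the float32
# little-endian wire format are illustrative, not the FLARE implementation.

Model = Dict[str, List[float]]


def stream_layers(model: Model) -> Iterator[Tuple[str, bytes]]:
    """Serialize and yield each layer separately (float32, little-endian)."""
    for name, values in model.items():
        yield name, struct.pack(f"<{len(values)}f", *values)


def receive_stream(chunks: Iterator[Tuple[str, bytes]]) -> Tuple[Model, int]:
    """Rebuild the model chunk by chunk; also report the largest chunk seen."""
    model: Model = {}
    peak = 0
    for name, payload in chunks:
        peak = max(peak, len(payload))
        model[name] = list(struct.unpack(f"<{len(payload) // 4}f", payload))
    return model, peak


model = {
    "embed_tokens": [0.5] * 1000,   # the largest layer dominates the peak
    "layer0.weight": [0.25] * 10,
    "norm.weight": [1.0] * 10,
}
received, peak = receive_stream(stream_layers(model))
total = sum(4 * len(v) for v in model.values())
print(f"peak chunk: {peak} bytes, whole message: {total} bytes")
# peak chunk: 4000 bytes, whole message: 4080 bytes
```

As in the Llama-3.2-1B example, the peak buffer is set by the largest layer. With a very large embedding layer, that one layer determines the buffer size.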

FLARE Reduces Federated Learning Communication Overhead

The research team has made significant advances in federated learning, a technique for training machine learning models across distributed data sources while preserving data privacy. To address the communication overhead and local resource constraints that large language models impose, they built on FLARE, an open-source SDK. The core of the work lies in message quantization and efficient data streaming. Experiments with the Llama-3.2-1B model show that message quantization substantially reduces communication demands without degrading model convergence.

Specifically, 4-bit quantization reduced message size to just 14% of the original, while training loss curves closely matched those of centralized training, indicating that quantization preserves convergence quality. Detailed measurements showed that a candidate model occupied 714.53 MB under 4-bit quantization, compared with 5716.26 MB at 32-bit precision. To improve efficiency further, the researchers used data streaming to minimize peak memory usage.
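The two model sizes reported above can be checked with simple arithmetic. They differ by a factor of eight, which is exactly what replacing 32-bit values with 4-bit values gives for the weights alone. The slightly higher 14% message-size figure may include quantization metadata, but that is an assumption. The constants below are copied from the reported measurements.

```python
# Check of the reported sizes: the same model at 32-bit and 4-bit precision.
FP32_MB = 5716.26
Q4_MB = 714.53

ratio = Q4_MB / FP32_MB
saved = FP32_MB - Q4_MB

print(f"4-bit / 32-bit size ratio: {ratio:.4f}")   # 0.1250, i.e. 1/8
print(f"ratio implied by bit widths: {4 / 32:.4f}")
print(f"saved per model transfer: {saved:.2f} MB")
```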

Local simulations revealed substantial memory reductions: regular transmission peaked at 42,427 MB, container streaming reduced this to 23,265 MB, and file streaming lowered it further to 19,176 MB. File streaming saved the most memory but took 170 seconds to complete the job, compared with 47 seconds for regular transmission and 50 seconds for container streaming. These results show that streaming makes federated learning feasible even when client resources are imbalanced, and it may also enable real-time processing and reduce idle time. This work paves the way for more efficient and scalable federated learning.
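The memory/time trade-off is easier to compare as ratios against regular transmission. The snippet only recomputes the reported peak-memory and job-time figures. It shows container streaming cutting peak memory to about 55% of regular transmission for a small time cost, while file streaming reaches about 45% at roughly 3.6 times the job time.

```python
# Reported local-simulation results: (peak memory in MB, job time in seconds).
results = {
    "regular": (42_427, 47),
    "container streaming": (23_265, 50),
    "file streaming": (19_176, 170),
}

base_mem, base_time = results["regular"]
for mode, (mem, secs) in results.items():
    print(f"{mode:20s} memory {mem / base_mem:6.1%} of regular, "
          f"time {secs / base_time:4.2f}x")
```

Which mode to prefer depends on the binding constraint: container streaming suits clients that are somewhat memory-limited, and file streaming suits clients where memory, not wall-clock time, is the hard limit.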

Efficient Federated Learning for Large Models

This work demonstrates significant advancements in federated learning, specifically addressing the challenges posed by large language models and their substantial computational demands. Researchers developed techniques to alleviate communication bottlenecks and memory constraints by integrating message quantization and streaming.

👉 More information

🗞 Optimizing Federated Learning in the Era of LLMs: Message Quantization and Streaming

🧠 ArXiv: https://arxiv.org/abs/2511.16450