The challenge of building artificial intelligence that truly reasons, rather than simply mimicking intelligence, drives a new investigation into the cognitive foundations of reasoning, led by Priyanka Kargupta from the University of Illinois Urbana-Champaign, Shuyue Stella Li from the University of Washington, and Haocheng Wang from Princeton University, alongside several collaborators. The research systematically connects 28 key elements of human cognition to the behaviour of large language models, revealing fundamental differences in how humans and AI approach problem-solving. The team analysed over 170,000 model reasoning traces alongside human think-aloud traces and found that, while large language models often succeed at complex tasks, they do so using shallow strategies, unlike the hierarchical and self-monitoring approaches employed by humans. The work identifies a critical gap in current AI development, where easily measured behaviours overshadow crucial meta-cognitive controls, and demonstrates a way to improve performance by up to 60% on complex problems by guiding models to adopt more human-like reasoning structures.

Large language models solve complex problems yet fail on simpler variants, suggesting they achieve correct outputs through mechanisms fundamentally different from human reasoning. Researchers synthesised cognitive science research into a taxonomy of 28 cognitive elements, encompassing computational constraints, meta-cognitive controls, knowledge representations, and transformation operations. They then analysed the behavioural manifestations of these elements in reasoning traces, seeking to understand how models arrive at their conclusions. The team proposes a fine-grained cognitive evaluation framework, designed to assess not just whether a model produces the correct answer, but how it reaches that answer, mirroring human cognitive processes. This framework allows for detailed analysis of the reasoning steps undertaken by the model, identifying strengths and weaknesses in its cognitive abilities.
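To make the idea of analysing "behavioural manifestations" in reasoning traces more concrete, here is a minimal sketch of how per-element presence rates might be tallied once traces have been annotated. The element names are taken from this article; the annotated traces, the `annotated_traces` variable, and the `element_presence_rates` helper are hypothetical placeholders and do not reproduce the study's data or tooling.

```python
from collections import Counter

# Hypothetical annotations: each trace is the set of cognitive elements
# an annotator marked as present. Element names come from the article;
# the traces themselves are invented placeholders, not study data.
annotated_traces = [
    {"decomposition", "sequential organisation"},
    {"decomposition", "sequential organisation", "evaluation"},
    {"sequential organisation"},
    {"decomposition", "self-awareness", "evaluation"},
]


def element_presence_rates(traces):
    """Return the fraction of traces in which each element appears."""
    counts = Counter(element for trace in traces for element in trace)
    total = len(traces)
    return {element: count / total for element, count in counts.items()}


rates = element_presence_rates(annotated_traces)
for element, rate in sorted(rates.items(), key=lambda kv: (-kv[1], kv[0])):
    print(f"{element}: {rate:.0%}")
```

Comparing such rates between model traces and human traces is one simple way to surface which elements a model rarely exhibits; the actual framework may well use richer measures.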

Diverse Reasoning Tasks Across Language Models

This work presents a detailed analysis of the performance of various Large Language Models (LLMs) across a range of reasoning tasks. The team evaluated these models on diverse problem types, including algorithmic challenges, factual recall, dilemma reasoning, diagnosis and solution finding, case analysis, creative expression, and logical reasoning. Models tested ranged from large flagship systems, such as DeepSeek-R1-671B, to smaller, distilled versions like R1-Qwen-1.5B and DeepScaleR-1.5B, based on both Qwen and Llama architectures.

The analysis highlights the impact of different training methodologies. Models like DeepSeek-R1-671B and the Qwen series benefited from Reinforcement Learning (RL) training, particularly multi-stage RL. Knowledge distillation was used to create smaller, more efficient models from larger teacher models. Importantly, the research demonstrates that models trained on high-quality, verified data can outperform models trained on larger, less curated datasets, as seen with OpenThinker-32B. DeepSeek-R1-671B consistently achieved the highest overall accuracy, establishing a performance ceiling.

The Qwen series (32B, 14B, 8B) also performed well, demonstrating effective knowledge transfer through distillation. Larger models generally outperformed smaller models, particularly on more complex tasks, and data quality proved crucial. Qwen models appear to be more parameter-efficient than Llama-based models for reasoning tasks. Performance varied significantly depending on the problem type. Algorithmic problems and factual recall showed relatively consistent performance across models.

Dilemma reasoning exhibited the highest variance, with smaller models struggling significantly, indicating a need for substantial capacity to articulate and balance conflicting positions. Diagnosis and solution finding showed the largest absolute variance, suggesting a limitation in smaller models’ ability to maintain and process information in working memory. Larger models performed well on case analysis, demonstrating the ability to process complex information, while results for creative expression were unreliable due to the small sample size. Logical reasoning showed moderate performance, with larger models generally outperforming smaller ones.

Smaller models, such as R1-Qwen-1.5B and DeepScaleR-1.5B, experienced performance drops on complex tasks, consistent with a limited ability to maintain and process information in working memory. Taken together, the analysis highlights the importance of model scale, training methodology, and data quality, as well as the limits of smaller models on complex reasoning tasks.

Humans and AI Reasoning Differ Systematically

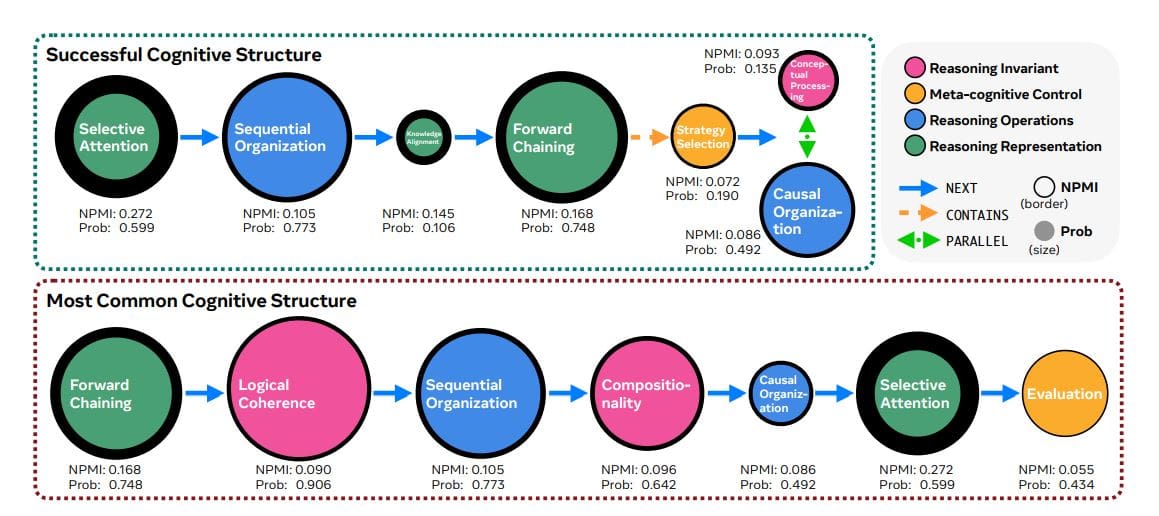

This research presents a comprehensive analysis of reasoning behaviours in large language models, revealing systematic differences between how these models and humans approach problem-solving. Using the taxonomy of 28 cognitive elements, which spans computational constraints, meta-cognitive controls, knowledge representations, and transformation operations, the team conducted a large-scale analysis of 170,000 reasoning traces from models across text, vision, and audio modalities, alongside 54 human think-aloud traces, creating a publicly available dataset for further research. Results demonstrate that humans consistently employ hierarchical nesting and meta-cognitive monitoring during reasoning, while language models primarily rely on shallow forward chaining.

This divergence is most pronounced when tackling ill-structured problems, suggesting fundamental differences in the underlying cognitive mechanisms. A meta-analysis of 1,598 research papers on language model reasoning revealed a concentration on easily quantifiable behaviours, with 55% examining sequential organisation and 60% focusing on decomposition. In contrast, research addressing meta-cognitive controls, such as self-awareness, accounted for only 16% of studies, and evaluation comprised just 8%. The team discovered that language models possess behavioural repertoires associated with successful reasoning, but often fail to deploy them spontaneously. Leveraging these patterns, researchers developed test-time reasoning guidance that automatically scaffolds successful structures, improving performance by up to 60% on complex problems. This work establishes a foundation for developing language models that reason through principled cognitive mechanisms, rather than relying on brittle shortcuts or memorisation, and opens new directions for testing theories of human cognition at scale.
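The article does not describe how the test-time reasoning guidance is implemented. As a rough illustration only, the sketch below shows one way a prompt could scaffold a fixed sequence of reasoning steps before a problem is posed to a model. The function name `build_guided_prompt`, the step wording, and the step ordering are all assumptions made for this example, not the authors' method.

```python
def build_guided_prompt(problem, structure):
    """Prepend a numbered list of reasoning steps to a problem statement.

    `structure` is an ordered list of step instructions. Both the wording
    and the ordering used below are hypothetical, chosen for illustration.
    """
    lines = ["Work through the problem using these reasoning steps in order:"]
    for index, step in enumerate(structure, start=1):
        lines.append(f"{index}. {step}")
    lines.append("")
    lines.append(f"Problem: {problem}")
    return "\n".join(lines)


# Hypothetical scaffold built from element names mentioned in the article.
scaffold = [
    "Self-awareness: state what you know and what you are unsure about.",
    "Decomposition: split the problem into smaller sub-problems.",
    "Sequential organisation: solve the sub-problems in a sensible order.",
    "Evaluation: check the result against the original question.",
]

prompt = build_guided_prompt("Plan a three-city trip on a fixed budget.", scaffold)
print(prompt)
```

In a real system the scaffold would presumably be selected automatically per problem type from structures observed in successful traces; here it is hard-coded to keep the sketch self-contained.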

Human and AI Reasoning Differences Revealed

This research establishes a detailed understanding of reasoning processes in large language models by drawing connections to cognitive science. The team conducted a large-scale analysis of reasoning traces from both language models and human subjects, identifying key structural differences in how each approaches problem-solving. Humans consistently employ hierarchical reasoning and self-monitoring, while language models tend to rely on more straightforward, sequential processing. Furthermore, the study demonstrates that data quality is paramount in training effective language models, surpassing the importance of sheer data quantity.
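The structural contrast described above, hierarchical versus sequential reasoning, can be illustrated with a toy representation. The sketch below models a reasoning trace as a tree of steps and measures its nesting depth: a purely sequential chain has depth 1, while a trace with nested sub-goals is deeper. The `Step` class, the `nesting_depth` function, and both example traces are hypothetical and are not drawn from the study's dataset or metrics.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    """One reasoning step; sub_steps holds any nested sub-goals."""
    text: str
    sub_steps: List["Step"] = field(default_factory=list)


def nesting_depth(steps):
    """Return the depth of the deepest nested step (0 for an empty trace)."""
    if not steps:
        return 0
    return 1 + max(nesting_depth(step.sub_steps) for step in steps)


# Invented example of a flat, forward-chaining trace.
forward_chain = [
    Step("Read the problem"),
    Step("Apply a formula"),
    Step("State the answer"),
]

# Invented example of a hierarchical trace with nested sub-goals.
hierarchical = [
    Step("Understand the goal", [
        Step("Identify constraints", [Step("Check for conflicting constraints")]),
    ]),
    Step("Solve", [Step("Sub-problem A"), Step("Sub-problem B")]),
    Step("Verify the answer"),
]

print(nesting_depth(forward_chain))  # 1
print(nesting_depth(hierarchical))   # 3
```

Nesting depth is only one crude proxy for hierarchical structure; it says nothing about self-monitoring, which would need separate annotation.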

Through the development of automated verification processes, the researchers created cleaner training signals, resulting in models that exhibit improved performance on tasks requiring objective correctness, such as story problems and case analysis. Analysis of model performance across different problem types reveals that certain reasoning skills, like balancing contradictory positions in dilemma problems or coordinating fault identification in diagnosis-solution tasks, are particularly sensitive to model capacity. The researchers acknowledge limitations in sample sizes for certain problem types, particularly creative and expressive tasks. Future work could focus on developing methods to enhance meta-cognitive controls in language models, bridging the gap between their reasoning capabilities and those of humans.

👉 More information

🗞 Cognitive Foundations for Reasoning and Their Manifestation in LLMs

🧠 ArXiv: https://arxiv.org/abs/2511.16660