Recognising engagement in video is a difficult problem for computer vision systems, largely because assessing human behaviour is subjective and the resulting training labels are noisy. Alexander Vedernikov, Puneet Kumar, Haoyu Chen, and colleagues at the University of Oulu address this problem by using Vision Large Language Models (VLMs) to improve the accuracy of engagement analysis. Their framework refines existing video annotations by identifying behavioural cues and using them to separate reliable data from ambiguous examples. The team shows that training computer vision systems on this refined data with a carefully designed curriculum learning strategy yields performance gains on established engagement benchmarks, surpassing previous state-of-the-art results on datasets such as EngageNet, DREAMS, and PAFE. The work highlights the potential of VLMs not just for understanding visual content, but also for improving the quality of the data used to train other artificial intelligence systems.

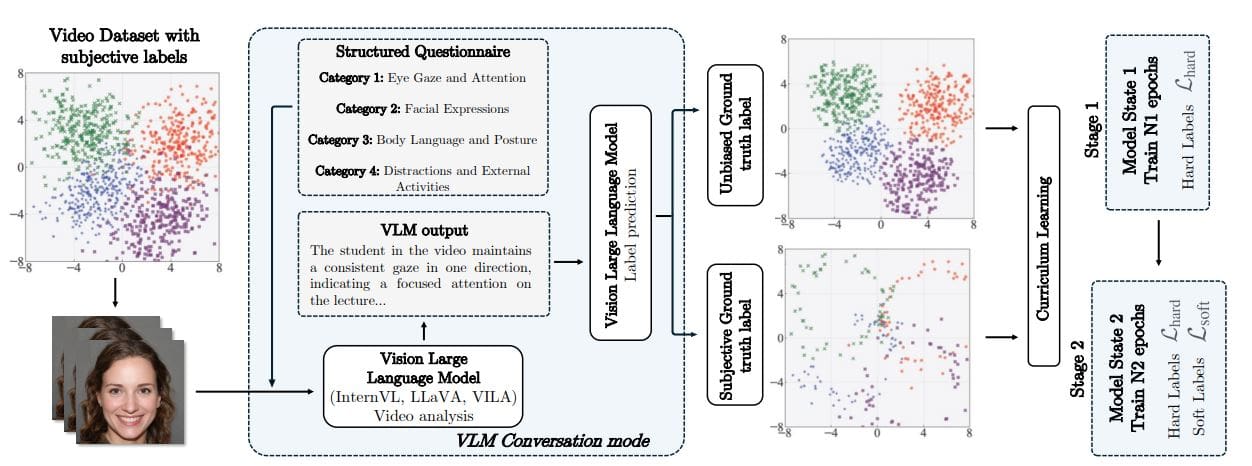

The approach involves using a questionnaire to extract behavioural cues and subsequently splitting the data into high- and low-reliability subsets. Furthermore, the team introduces a training strategy that combines curriculum learning with soft label refinement, gradually incorporating ambiguous samples while simultaneously adjusting supervision to reflect inherent uncertainty. The work demonstrates a clear trend towards utilizing VLMs for tasks traditionally tackled by specialized computer vision and machine learning models. VLMs offer the ability to process both visual and textual information, enabling a more nuanced understanding of human emotions and engagement. Instruction Tuning, a technique involving training models to follow instructions related to emotion recognition or engagement prediction, proves crucial for adapting VLMs to these tasks.
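The reliability split described above can be illustrated with a minimal sketch. The article does not state the exact rule the authors use, so the agreement test here is an assumption: a clip counts as high-reliability when a label derived from the VLM's questionnaire answers lies within a chosen tolerance of the human annotation. The names `Clip`, `vlm_label`, and `split_by_reliability` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Clip:
    clip_id: str
    human_label: int  # original annotation, e.g. an engagement level 0..3
    vlm_label: int    # label inferred from VLM questionnaire answers (hypothetical field)


def split_by_reliability(
    clips: List[Clip], tolerance: int = 0
) -> Tuple[List[Clip], List[Clip]]:
    """Assumed rule, not the paper's: a clip is high-reliability when the
    VLM-derived label is within `tolerance` levels of the human label."""
    high, low = [], []
    for clip in clips:
        if abs(clip.human_label - clip.vlm_label) <= tolerance:
            high.append(clip)
        else:
            low.append(clip)
    return high, low


clips = [Clip("a", 3, 3), Clip("b", 1, 3), Clip("c", 2, 1)]
high, low = split_by_reliability(clips)
print([c.clip_id for c in high], [c.clip_id for c in low])
```

Raising `tolerance` moves near-miss clips into the high-reliability subset, which is one simple way to trade subset size against label cleanliness.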

Researchers are actively working to close the performance gap between open-source VLMs and commercial models, focusing on scaling up models, improving reasoning abilities, and enhancing world knowledge. A significant portion of the research centers on detecting student engagement in online learning environments, a complex task because engagement manifests in subtle behavioral cues. Several datasets have been developed to address this challenge, including DREAMS (Diverse Reactions of Engagement and Attention Mind States) and datasets from Singh et al. and Lee et al., which focus on engagement prediction and mind-wandering detection in lectures, respectively.

Recognizing emotions and engagement requires capturing subtle cues from facial expressions, body language, and contextual information. Researchers are exploring techniques for label correction, partial label learning, and the use of soft-target labels to address noisy or inconsistent labels in affective computing datasets. Curriculum learning, which involves training models with progressively more difficult examples, and ensemble methods, which combine multiple models to enhance robustness and accuracy, are also proving effective. Convolutional Neural Networks (CNNs) and Temporal Convolutional Networks (TCNs) remain widely used for feature extraction from video data, but Transformer-based architectures are becoming increasingly popular due to their ability to model long-range dependencies in sequential data.
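To make the idea of soft-target labels concrete, here is a small sketch of one common formulation: the one-hot annotation is blended with the model's own predicted distribution, and the loss is a cross-entropy against that blended target. This is a generic illustration, not the formulation from the paper; the blending weight `alpha` and the function names are assumptions.

```python
import math
from typing import List


def soften(hard_label: int, model_probs: List[float], alpha: float) -> List[float]:
    """Blend a one-hot annotation with the model's predicted distribution.
    alpha = 0 keeps the hard label; alpha = 1 trusts the model entirely."""
    return [
        (1.0 - alpha) * (1.0 if k == hard_label else 0.0) + alpha * p
        for k, p in enumerate(model_probs)
    ]


def soft_cross_entropy(target: List[float], probs: List[float], eps: float = 1e-12) -> float:
    """Cross-entropy between a soft target distribution and predicted probabilities."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, probs))


probs = [0.1, 0.6, 0.3]                       # model prediction over 3 classes
target = soften(hard_label=2, model_probs=probs, alpha=0.5)
loss = soft_cross_entropy(target, probs)
print(target, loss)
```

With a soft target, a sample whose annotation disagrees with the model is penalised less harshly than under a hard label, which is the intuition behind using soft targets for noisy annotations.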

Attention mechanisms are used to focus on the most relevant features in the input data, and hybrid approaches combine different model architectures to exploit their respective strengths. Overall, the field is shifting towards large language models and vision-language models as a basis for more robust and accurate systems for understanding human emotions and engagement. Realizing that potential depends on addressing data quality issues and developing effective learning strategies.

Refined Labels Boost Engagement Recognition Performance

Scientists have developed a new framework to improve engagement recognition in video data, addressing the challenges posed by subjective and noisy labels. Researchers extracted behavioral cues using a questionnaire, enabling them to split data into high- and low-reliability subsets, a crucial step in mitigating the impact of label ambiguity. Experiments demonstrate that classical computer vision models, when trained on these refined, high-reliability subsets and enhanced with a curriculum learning strategy, show marked improvements.

This curriculum gradually incorporates ambiguous samples while adjusting supervision to reflect uncertainty in the data. Specifically, the team reports improvements of up to +1.21% in three of six feature settings on the EngageNet benchmark. The method also yielded F1 gains of +0.22 on the DREAMS dataset and +0.06 on the PAFE dataset, indicating that it carries over to other video datasets.

The training strategy combines curriculum learning with soft label refinement, allowing the model to learn from samples with varying levels of ambiguity. According to the summary, this approach closely matches human tolerance in engagement annotation, indicating alignment between the model's judgments and human perception. By decoupling label reliability from semantic content using a questionnaire and a VLM, the team organized learning by estimated reliability: models first learn from the cleaner, high-confidence samples, and ambiguous examples are introduced progressively with training signals adjusted to reflect their uncertainty.
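The curriculum described above can be sketched as a schedule that decides how many low-reliability samples enter each epoch's training pool. The article does not give the actual schedule, so the linear ramp, the `warmup` and `ramp` parameters, and the function names below are all assumptions made for illustration.

```python
import random
from typing import List, Sequence


def low_reliability_fraction(epoch: int, warmup: int, ramp: int) -> float:
    """Assumed linear schedule: 0 during warmup, then rising to 1 over `ramp` epochs."""
    if epoch < warmup:
        return 0.0
    return min(1.0, (epoch - warmup + 1) / ramp)


def epoch_pool(
    high: Sequence, low: Sequence, epoch: int,
    warmup: int = 2, ramp: int = 4, seed: int = 0,
) -> List:
    """All high-reliability samples plus a growing random share of ambiguous ones."""
    frac = low_reliability_fraction(epoch, warmup, ramp)
    k = int(round(frac * len(low)))
    rng = random.Random(seed + epoch)  # reproducible draw per epoch
    return list(high) + rng.sample(list(low), k)


high = ["h1", "h2", "h3"]
low = [f"l{i}" for i in range(8)]
for epoch in range(7):
    print(epoch, len(epoch_pool(high, low, epoch)))
```

In a full training loop, the ambiguous samples admitted at each epoch would be the ones that receive softened targets, while the high-reliability samples keep their original labels; that pairing is again an assumption about how the two components fit together.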

This approach not only improves overall classification accuracy on benchmark datasets such as EngageNet, DREAMS, and PAFE, but also enhances robustness against noisy labels. Ablation studies demonstrated the importance of carefully designed prompts and consideration of temporal context for maximizing VLM performance and downstream benefits. The authors acknowledge that the datasets used are not publicly available due to data sharing restrictions, which limits broader validation of the framework. Future work will focus on integrating interactive VLM-assisted annotation protocols and developing evaluation metrics that better capture the subjective nature of engagement. The team also plans to validate the framework on gaming benchmarks to assess its generalizability to dynamic, interactive environments, ultimately aiming to create more reliable affective computing systems.

👉 More information

🗞 Vision Large Language Models Are Good Noise Handlers in Engagement Analysis

🧠 ArXiv: https://arxiv.org/abs/2511.14749