Accurate depth perception is fundamental to the reliable operation of autonomous systems and intelligent robots, and researchers are increasingly turning to self-supervised methods to achieve it. Zeyu Cheng, Tongfei Liu, and Tao Lei of Shaanxi University of Science and Technology, together with Xiang Hua and Chengkai Tang of Northwestern Polytechnical University, have developed RTS-Mono, an approach that tackles a critical limitation of existing techniques: the substantial computational resources they demand. The work introduces a lightweight, efficient system for real-time monocular depth estimation that achieves state-of-the-art accuracy with a remarkably small number of parameters. Through a novel encoder-decoder architecture and a multi-scale sparse fusion framework, RTS-Mono reduces redundancy while improving both accuracy and inference speed: it runs at 49 frames per second on a Jetson Orin platform and outperforms existing lightweight methods on the KITTI dataset.

The team set out to build depth perception systems that run quickly and effectively on devices with limited computing power. They developed a network architecture based on MobileNetV3 to reduce the number of parameters and the computational cost. A key innovation is a module that combines global and local contextual information: a global attention mechanism captures long-range dependencies, while a deformable convolution module refines local features.
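
The summary does not say which attention formulation the global branch uses, so the sketch below assumes plain scaled dot-product self-attention over a flattened feature map. The projection matrices `wq`, `wk`, `wv` and the helper names are hypothetical; the point is only to show why attention "captures long-range dependencies": every output pixel mixes information from every input pixel.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product of an (n x k) matrix and a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def global_attention(tokens: Matrix, wq: Matrix, wk: Matrix, wv: Matrix) -> Matrix:
    """Scaled dot-product self-attention over N tokens (e.g. flattened H*W pixels).

    Each output token is a weighted average of the value vectors of *all* tokens,
    so information can flow between pixels that are far apart in the image.
    """
    q, k, v = matmul(tokens, wq), matmul(tokens, wk), matmul(tokens, wv)
    scale = math.sqrt(len(wq[0]))  # sqrt of the query/key dimension
    scores = matmul(q, transpose(k))  # N x N pairwise similarities
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v)


# A 2x2 feature map with 2 channels, flattened row-major into 4 tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
out = global_attention(tokens, identity, identity, identity)
```

The N x N score matrix grows quadratically with the number of pixels, which is one reason lightweight designs tend to apply global attention only on coarse, low-resolution features and leave fine local detail to convolutions.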

The model learns to predict depth from video sequences without requiring ground-truth depth data. It is trained with a combination of a photometric reconstruction loss, a smoothness loss, and a novel edge-aware smoothness loss intended to improve depth map quality. The results show that the method matches or outperforms other self-supervised monocular depth estimation methods on standard benchmarks, including KITTI and NYU Depth V2. Importantly, the model runs in real time on embedded systems such as a Raspberry Pi 4, at approximately 20 frames per second, and requires significantly fewer parameters and less computation than other state-of-the-art methods. Qualitative results show more accurate and detailed depth maps, particularly in challenging scenes with complex geometry and dynamic objects.
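
The article does not give the exact loss formulas, so the following is a minimal sketch of the widely used formulation: an L1 photometric error between the target frame and a frame reconstructed from a neighbouring video frame, plus an edge-aware smoothness term that penalises disparity gradients less where the image has strong edges. The function names, the single-channel image representation, and `smooth_weight = 1e-3` are assumptions for illustration, not values reported for RTS-Mono.

```python
import math
from typing import List

Image = List[List[float]]  # single-channel H x W grid (H, W >= 2)


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def l1_photometric(target: Image, reconstructed: Image) -> float:
    """Mean absolute intensity difference between the target frame and a frame
    reconstructed by warping a neighbouring video frame with predicted depth and pose."""
    return mean([abs(a - b) for ra, rb in zip(target, reconstructed) for a, b in zip(ra, rb)])


def edge_aware_smoothness(disp: Image, img: Image) -> float:
    """Penalise disparity gradients, down-weighted by exp(-|image gradient|) so
    that depth discontinuities are tolerated where the image itself has edges."""
    m = mean([v for row in disp for v in row])
    d = [[v / m for v in row] for row in disp]  # mean-normalised disparity
    h, w = len(d), len(d[0])
    gx = [abs(d[y][x + 1] - d[y][x]) * math.exp(-abs(img[y][x + 1] - img[y][x]))
          for y in range(h) for x in range(w - 1)]
    gy = [abs(d[y + 1][x] - d[y][x]) * math.exp(-abs(img[y + 1][x] - img[y][x]))
          for y in range(h - 1) for x in range(w)]
    return mean(gx) + mean(gy)


def total_loss(target: Image, reconstructed: Image, disp: Image,
               smooth_weight: float = 1e-3) -> float:
    # smooth_weight is an assumed value, not one reported for RTS-Mono.
    return l1_photometric(target, reconstructed) + smooth_weight * edge_aware_smoothness(disp, target)
```

A disparity step that coincides with an image edge costs far less than the same step on a flat, textureless patch, which is exactly the behaviour the edge-aware term is meant to encourage. Common self-supervised pipelines such as Monodepth2 also mix an SSIM term into the photometric error; it is left out here to keep the sketch short.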

Real-time Depth Estimation with Sparse Fusion

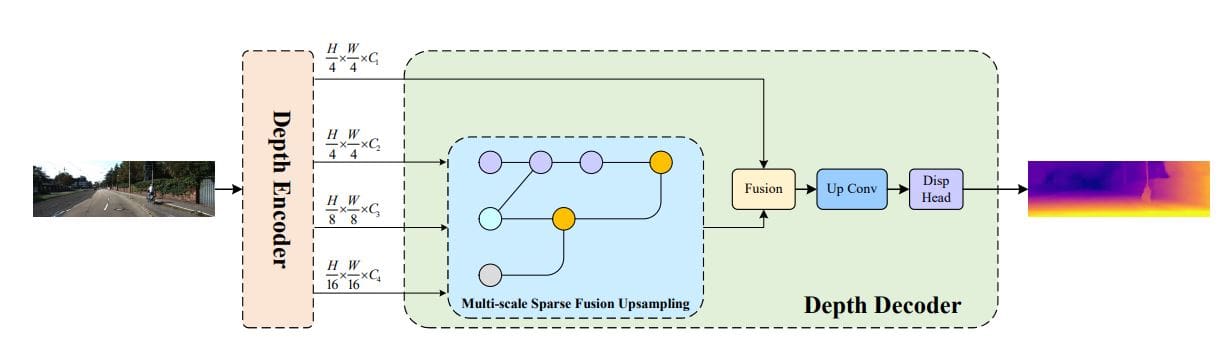

Scientists developed RTS-Mono, a real-time self-supervised monocular depth estimation method designed for practical deployment in fields such as autonomous driving and robotics. Recognizing that existing methods often demand substantial computing resources, which hinders real-world application, the team engineered a lightweight encoder-decoder architecture that balances accuracy and efficiency. The encoder builds on the established Lite-Encoder to keep the initial computational load low, while the decoder employs a novel multi-scale sparse fusion framework. This framework reduces redundancy within the network, preserving accuracy without sacrificing inference speed.
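
The article does not describe how the sparse fusion decoder chooses its connections, so the sketch below is one plausible, hypothetical reading rather than the authors' design. It contrasts dense fusion, where each decoder scale receives upsampled features from every coarser scale, with a sparse rule in which each scale fuses only its immediate coarser neighbour. `dense_links`, `sparse_links`, `fuse`, nearest-neighbour upsampling, and fusion by addition are all assumptions.

```python
from typing import Dict, List

Grid = List[List[float]]  # one feature channel at one scale


def upsample_nearest(grid: Grid, factor: int) -> Grid:
    """Nearest-neighbour upsampling by an integer factor."""
    return [[v for v in row for _ in range(factor)] for row in grid for _ in range(factor)]


def add(a: Grid, b: Grid) -> Grid:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def dense_links(num_scales: int) -> Dict[int, List[int]]:
    """Every decoder scale i receives every coarser scale j > i."""
    return {i: list(range(i + 1, num_scales)) for i in range(num_scales - 1)}


def sparse_links(num_scales: int) -> Dict[int, List[int]]:
    """Hypothetical sparse rule: each scale fuses only its immediate coarser neighbour."""
    return {i: [i + 1] for i in range(num_scales - 1)}


def fuse(features: List[Grid], links: Dict[int, List[int]]) -> List[Grid]:
    """Fuse raw multi-scale features; scale 0 is finest, each next scale is 2x coarser."""
    fused = list(features)
    for i, sources in links.items():
        out = features[i]
        for j in sources:
            out = add(out, upsample_nearest(features[j], 2 ** (j - i)))
        fused[i] = out
    return fused


# Three scales: 4x4, 2x2 and 1x1 feature maps.
feats = [[[0.0] * 4 for _ in range(4)], [[1.0] * 2 for _ in range(2)], [[2.0]]]
dense = fuse(feats, dense_links(3))
sparse = fuse(feats, sparse_links(3))
```

With four scales, the dense rule needs six cross-scale connections and the sparse rule only three; every dropped connection removes an upsampling and a fusion operation, which is the kind of redundancy a sparse decoder can trade away for speed.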

The researchers evaluated RTS-Mono on the KITTI dataset, where it achieved state-of-the-art performance with only 3 million parameters. Compared with other lightweight methods, RTS-Mono reduced Absolute Relative error and Squared Relative error at low resolution, and reduced Squared Relative error and Root Mean Squared Error at high resolution. To validate real-world applicability, the team deployed RTS-Mono on an NVIDIA Jetson Orin platform, where it ran inference in real time at 49 frames per second, fast enough for many dynamic applications.
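
The three errors named above have standard definitions in the monocular depth literature, and all three are "lower is better". The sketch below computes them for flat lists of ground-truth and predicted depths. The median-scaling step is a common evaluation convention for self-supervised monocular models, whose depth is only known up to scale; it is assumed here, since the summary does not describe RTS-Mono's exact evaluation protocol (for example, depth caps or image crops).

```python
import math
import statistics
from typing import Dict, Sequence


def depth_metrics(gt: Sequence[float], pred: Sequence[float],
                  median_scale: bool = True) -> Dict[str, float]:
    """AbsRel, SqRel and RMSE over pixels with valid ground truth (gt > 0)."""
    pairs = [(g, p) for g, p in zip(gt, pred) if g > 0]
    g_vals = [g for g, _ in pairs]
    p_vals = [p for _, p in pairs]
    if median_scale:
        # Align the scale-ambiguous prediction to the ground truth by the ratio of medians.
        s = statistics.median(g_vals) / statistics.median(p_vals)
        p_vals = [p * s for p in p_vals]
    n = len(g_vals)
    abs_rel = sum(abs(p - g) / g for g, p in zip(g_vals, p_vals)) / n
    sq_rel = sum((p - g) ** 2 / g for g, p in zip(g_vals, p_vals)) / n
    rmse = math.sqrt(sum((p - g) ** 2 for g, p in zip(g_vals, p_vals)) / n)
    return {"abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse}
```

Because AbsRel and SqRel divide by the true depth, they weight errors on nearby objects more heavily, whereas RMSE is dominated by large absolute errors on distant points; improving different metrics at different resolutions therefore says something about where in the scene the gains occur.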

Real-time Depth Estimation with Minimal Parameters

Scientists have developed RTS-Mono, a real-time self-supervised monocular depth estimation method designed for efficient performance in applications such as autonomous driving and robotics. The work addresses the challenge of balancing computational efficiency against depth estimation accuracy, a critical factor for deploying these systems in the real world. The team designed a lightweight encoder-decoder architecture, using Lite-Encoder as the encoder and a novel multi-scale sparse fusion framework as the decoder, to minimize redundancy and maximize performance. Experiments on the challenging KITTI dataset demonstrate state-of-the-art performance at both low and high resolutions with only 3 million parameters. Specifically, compared with current lightweight methods, RTS-Mono reduced Absolute Relative error and Squared Relative error at low resolution, and reduced Squared Relative error and Root Mean Squared Error at high resolution. The team further validated the method by deploying it on an intelligent UAV system in urban traffic environments, where it performed real-time depth estimation at 49 frames per second.
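
A throughput of 49 frames per second corresponds to a per-frame budget of roughly 20.4 ms. The minimal harness below, a generic sketch rather than the authors' benchmarking code, shows how such a figure is typically measured: a few warm-up calls, then the average rate over many timed calls. `measure_fps` and `frame_budget_ms` are hypothetical helpers, and `infer` stands for any zero-argument function that runs one forward pass.

```python
import time
from typing import Callable


def measure_fps(infer: Callable[[], object], warmup: int = 10, iters: int = 100) -> float:
    """Average throughput of a zero-argument inference callable, in frames per second."""
    for _ in range(warmup):  # let caches, clocks and lazy initialisation settle
        infer()
    start = time.perf_counter()
    for _ in range(iters):
        infer()
    elapsed = time.perf_counter() - start
    return iters / elapsed


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Stand-in "model" that takes about 5 ms per frame.
fps = measure_fps(lambda: time.sleep(0.005), warmup=1, iters=5)
```

If `infer` launches asynchronous GPU work, as on a Jetson board, it should block until the result is ready (in PyTorch, for example, by calling `torch.cuda.synchronize()`); otherwise the timer only measures kernel launches and the reported frame rate is inflated.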

This research presents RTS-Mono, a self-supervised monocular depth estimation method designed for real-time performance and efficient computation. The team developed a lightweight encoder-decoder architecture, combining a CNN-Transformer hybrid encoder with a multi-scale sparse fusion decoder, to minimise redundancy and maximise inference speed. Experiments on the KITTI dataset show that RTS-Mono achieves state-of-the-art performance among methods with similar parameter counts, reducing Absolute Relative and Squared Relative error at low resolution, and Squared Relative error and Root Mean Squared Error at high resolution. The researchers also deployed RTS-Mono on a Jetson Orin platform, where it ran at 49 frames per second within an intelligent UAV depth perception system. The team acknowledges that performance still depends on the quality of the input data and the complexity of the environment; future work may focus on improving robustness and further optimising the network architecture.

👉 More information

🗞 RTS-Mono: A Real-Time Self-Supervised Monocular Depth Estimation Method for Real-World Deployment

🧠 arXiv: https://arxiv.org/abs/2511.14107