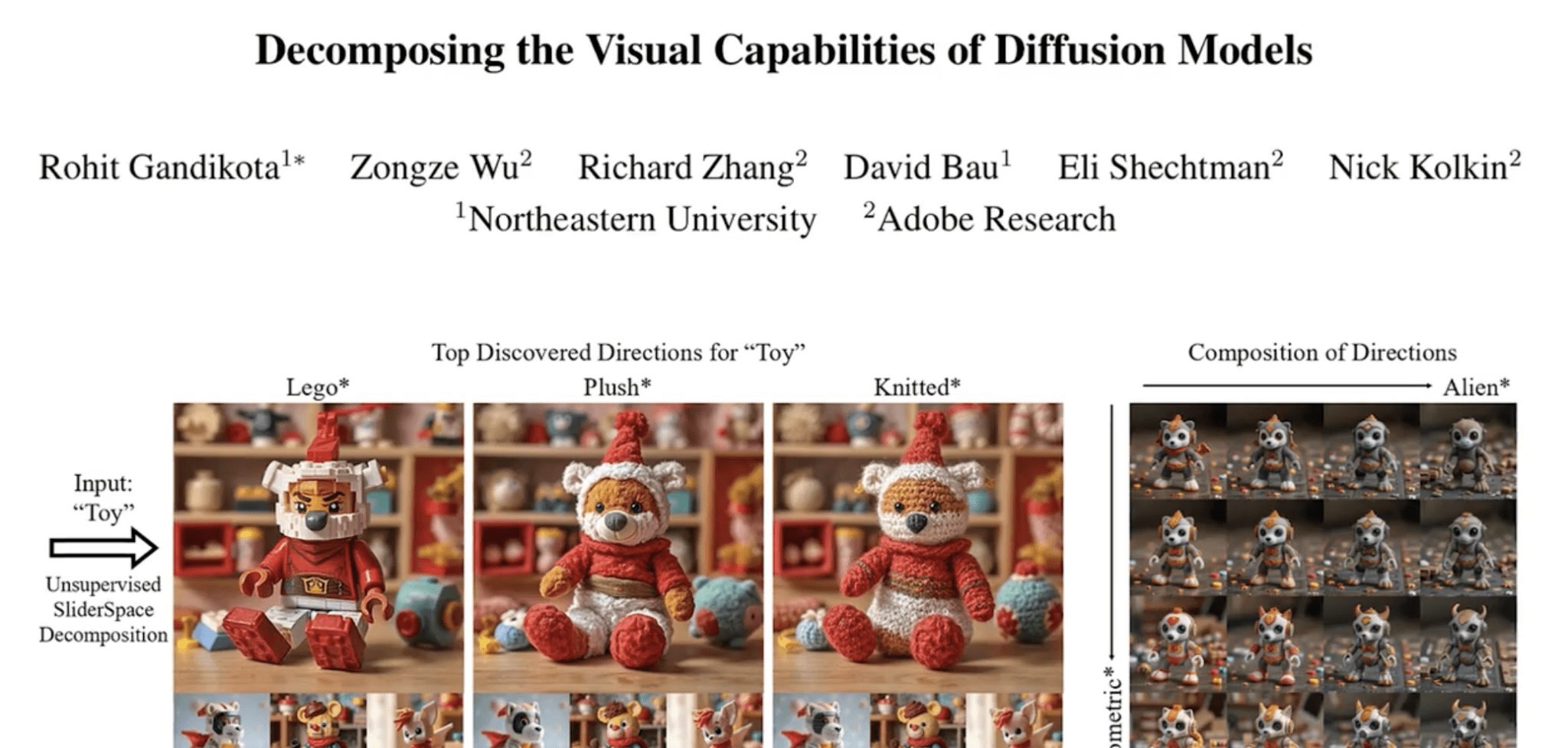

Adobe Research’s Rohit Gandikota, guided by Senior Research Engineer Nick Kolkin, has developed a new method for mapping the visual knowledge latent in diffusion models, a widely used class of generative AI models. The work, presented at the International Conference on Computer Vision (ICCV) 2025, describes a technique for identifying and quantifying the main axes of variation in what these models can generate. By analyzing the models’ internal representations, the team aims to move beyond “slot machine” trial and error, give users more precise and intuitive control over generated images, and build a deeper understanding of how these models “conceive” visual concepts.

Mapping Visual Knowledge in Generative AI

Researchers at Adobe are “mapping” the visual knowledge inside diffusion models, a core technology behind generative image creation. Their focus is less on which images a model creates than on how it organizes visual concepts. The team found that models don’t vary images by specific object parts (eyes, tails) but along broad spectra, such as how “golden retriever-ish” or “pit bull-y” a generated dog looks. This insight led to a framework that identifies these key variations and turns them into simple control sliders for users.
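As a rough illustration of what “analyzing key variations” can mean, one standard way to extract the main axes of variation from many samples is principal component analysis (PCA). The sketch below assumes each image generated from a single prompt has already been turned into a feature vector (the data here is synthetic) and finds the top axes with power iteration plus deflation. It is a generic stand-in, not the researchers’ actual method, and `top_axes` and the other helper names are hypothetical.

```python
import math
import random


def mean_center(rows):
    """Subtract the per-dimension mean from every row (one row per image)."""
    n, dim = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(dim)]
    return [[r[j] - means[j] for j in range(dim)] for r in rows]


def covariance(centered):
    """Sample covariance matrix of mean-centered rows."""
    n, dim = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(dim)]
            for i in range(dim)]


def mat_vec(m, v):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]


def top_axes(rows, k, iters=200):
    """Return k (variance, unit_direction) pairs, largest variance first,
    via power iteration with deflation on the covariance matrix (i.e. PCA)."""
    cov = covariance(mean_center(rows))
    dim = len(cov)
    axes = []
    for _ in range(k):
        v = [1.0 / math.sqrt(dim)] * dim  # arbitrary non-zero starting vector
        for _ in range(iters):
            w = mat_vec(cov, v)
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        # Rayleigh quotient: the variance of the data along direction v.
        variance = sum(a * b for a, b in zip(v, mat_vec(cov, v)))
        axes.append((variance, v))
        # Deflate: remove this axis so the next pass finds the next one.
        cov = [[cov[i][j] - variance * v[i] * v[j] for j in range(dim)]
               for i in range(dim)]
    return axes


# Synthetic stand-in for features of 300 images generated from one prompt:
# one dominant spectrum along dimension 0, small noise in the other 5 dims.
random.seed(0)
samples = []
for _ in range(300):
    row = [random.gauss(0.0, 0.5) for _ in range(6)]
    row[0] += random.gauss(0.0, 5.0)
    samples.append(row)

(var1, axis1), (var2, axis2) = top_axes(samples, k=2)
print(f"axis 1 variance {var1:.1f}:", [round(x, 2) for x in axis1])
print(f"axis 2 variance {var2:.1f}")
```

On this synthetic data the first axis points almost entirely along dimension 0 and carries far more variance than the second; in a real system each such axis would be a candidate slider.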

The framework is not only about artistic control. It also addresses a significant problem: generative models can exhibit biases, such as disproportionately generating male doctors. By analyzing visual variations and re-weighting them during model training, or by letting end users adjust sliders, researchers can increase the diversity of generated outputs. This is particularly valuable for “distilled” diffusion models, which prioritize speed but may amplify existing biases toward common visual preferences.
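A minimal sketch of the re-weighting idea, assuming inverse-frequency sample weighting (a common, generic rebalancing technique, not necessarily what the researchers use): samples are bucketed by their position along one discovered axis, and rarer buckets get proportionally larger weights, which could then scale a training loss or a sampling probability. The threshold, the data, and the function names are hypothetical.

```python
from collections import Counter


def bucket(position, thresholds):
    """Map a sample's scalar position along one axis of variation to a bucket
    index: 0 below the first threshold, 1 above it, and so on."""
    return sum(position > t for t in sorted(thresholds))


def balancing_weights(labels):
    """Inverse-frequency weights: every bucket ends up with the same total
    weight, and all weights together sum to len(labels)."""
    counts = Counter(labels)
    n, groups = len(labels), len(counts)
    return [n / (groups * counts[label]) for label in labels]


# Hypothetical positions of 100 generated samples along one discovered axis:
# 80 fall on one side of the spectrum and only 20 on the other.
positions = [1.0] * 80 + [-1.0] * 20
labels = [bucket(p, thresholds=[0.0]) for p in positions]
weights = balancing_weights(labels)

totals = Counter()
for label, w in zip(labels, weights):
    totals[label] += w
print(dict(totals))  # each bucket now carries a total weight of 50.0
```

Each of the 20 minority samples gets weight 2.5 and each of the 80 majority samples gets 0.625, so both sides of the spectrum contribute equally.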

The long-term goal goes beyond user-friendly sliders. The researchers envision being able to actively “interrogate” these models, in effect building an “atlas of visual knowledge.” That would let us not only control image generation but also understand how the models “see” the world, enhancing human capabilities by drawing on the vast data and computation embedded in these complex systems.

Understanding How Models “Think” About Images

Beyond what diffusion models create, the Adobe researchers want to understand how the models “think” about images. Their approach maps the variations a model naturally produces: for example, a model may distinguish dogs not by eye or tail type but by how “golden retriever-ish” or “pit bull-y” they look. The aim is not to mirror human artistic thinking but to discover the model’s own visual language, which in turn gives users and developers greater control.

This research makes generative AI more controllable. Instead of accepting random “slot machine” results, the team built a framework that analyzes the key visual variations a model can produce and creates sliders to represent them. Users can then adjust those characteristics precisely, and developers can quickly correct biases, such as a tendency to generate more male doctors, by re-weighting underrepresented visual attributes during model training.
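To make the slider idea concrete, here is a deliberately simplified sketch that treats a slider as a linear offset along one discovered direction in some representation space: setting the slider to `strength` moves the representation that far along the axis. This is an assumption for illustration only; the researchers’ framework may implement sliders quite differently, and all names and vectors below are hypothetical.

```python
import math


def unit(direction):
    """Normalize a direction vector to length 1."""
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    return [d / norm for d in direction]


def apply_slider(vector, direction, strength):
    """Move `vector` by `strength` units along `direction`; components
    orthogonal to the direction are left unchanged."""
    u = unit(direction)
    return [v + strength * d for v, d in zip(vector, u)]


def position_along(vector, direction):
    """Signed coordinate of `vector` along `direction` (a dot product)."""
    return sum(v * d for v, d in zip(vector, unit(direction)))


base = [0.2, -1.0, 0.5]   # hypothetical representation of one generated image
axis = [3.0, 0.0, 4.0]    # hypothetical discovered axis (length 5)
for strength in (-2.0, 0.0, 2.0):
    moved = apply_slider(base, axis, strength)
    print(strength, round(position_along(moved, axis), 3))
```

The coordinate along the axis shifts by the slider strength while the component orthogonal to it stays at -1.0. Because the offset is linear, sliders on mutually orthogonal axes would not interfere with one another.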

Ultimately, the work aims to go beyond simply using these powerful models to understanding the knowledge inside them. The researchers envision custom sliders that appear automatically alongside prompts, giving instant control and revealing the full range of a model’s capabilities. This could foster a more collaborative relationship between humans and AI, enhancing creativity and unlocking the full potential of image generation.

Increasing AI Controllability and Visual Diversity

Adobe researchers are developing methods to map the “visual knowledge” inside diffusion models, the AI behind many image generators, to improve both user control and output diversity. Controlling these models has traditionally felt like a game of chance, and the team found that the models don’t “think” the way humans do. Rather than varying discrete attributes like “eye type,” they vary along latent dimensions, for example leaning toward a more “golden retriever-ish” or a more “pit bull-y” dog. Understanding these inherent variations is key to building effective controls.

The approach automatically identifies key visual variations a model already produces, then creates simple sliders to control them. This sidesteps extensive prompt engineering, a skill that can take weeks to master, and shows users right away what the model can do. Beyond usability, the framework allows results to be “rebalanced”: for instance, increasing how often underrepresented concepts such as female doctors appear in generated images, counteracting biases embedded in the training data.

The work matters both to artists who want precise control and to developers who want to correct model biases. Future versions aim to be faster and more automated, with custom sliders appearing alongside each prompt. Ultimately, the research aims to go beyond simply using AI image generators to actively communicating with the knowledge they contain, potentially unlocking greater creative and analytical capabilities.

Future Applications of AI Image Sliders

Recent research from Adobe and Northeastern University is unlocking greater control over AI image generation through “concept sliders.” Instead of relying on trial-and-error prompting, the researchers mapped the internal “visual knowledge” of diffusion models, the algorithms powering many AI image generators. They found that these models don’t “think” like humans, who might categorize images by parts; instead, the models vary along broader spectra, such as “golden retriever-ish” versus “pit bull-y.” This makes it possible to build sliders that directly manipulate these inherent variations, giving users precise control over generated outputs.

The technology is not only about artistic finesse; it also addresses bias and diversity. AI models often reflect the skewed datasets they are trained on and may underrepresent certain demographics or styles. By analyzing visual variations, the framework can “up-weight” underrepresented features, for example depicting female doctors more often, which makes image generation more equitable and inclusive. This is especially useful for “distilled” diffusion models, which prioritize speed and often reinforce common preferences.

Future versions aim for seamless integration, with customized sliders generated automatically alongside every prompt. Beyond usability, the researchers see the framework as a tool for understanding how AI models “see” the world. By building an “atlas” of the knowledge inside these models, we could unlock greater creative potential and enhance human capabilities through collaboration with powerful AI image generators, moving beyond simple image creation toward genuine visual communication.