Developing high-performance artificial intelligence kernels typically requires writing complex code in low-level assembly language, a significant barrier to wider innovation. William Hu, Drew Wadsworth, Sean Siddens, Stanley Winata, Daniel Y. Fu, and Ryann Swann investigate how to simplify this process for AMD GPUs, building on recent advances in domain-specific languages. Their work introduces HipKittens, a new programming framework that encapsulates key insights into performant AMD AI kernel development and demonstrates that tile-based programming abstractions can generalize across different AMD architectures. The team validates HipKittens on both the CDNA3 and CDNA4 platforms, where its kernels for tasks such as matrix multiplication and attention achieve performance competitive with hand-optimized assembly kernels and consistently surpass standard compiler outputs. The result points toward a more portable and accessible software layer for high-performance AI.

FP6 GEMM Optimisation on CDNA4 Architecture

This study focuses on maximizing the performance of GEMM (General Matrix Multiplication) using the FP6 (6-bit floating point) data type on AMD’s MI350X and MI355X GPUs. On these GPUs, FP6 can potentially deliver twice the FLOPS available on other devices, but realizing this potential requires careful optimization. The researchers benchmarked their optimized FP6 kernel against AMD’s CK (Composable Kernel) library implementation and against an FP8 (8-bit floating point) implementation. The work covers instruction scheduling, memory access, and register usage, all aimed at reaching peak performance. A major challenge, with a significant impact on overall performance, is loading data efficiently from global memory into shared memory.
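The article does not say which FP6 encoding the kernels use. To make "6-bit floating point" concrete, the sketch below assumes the OCP Microscaling (MX) E2M3 layout: 1 sign bit, 2 exponent bits with bias 1, 3 mantissa bits, and no infinities or NaNs. The function name `decode_fp6_e2m3` is hypothetical, and the other common variant (E3M2) would decode differently.

```python
def decode_fp6_e2m3(bits: int) -> float:
    """Decode a 6-bit pattern, ASSUMING the OCP MX FP6 E2M3 layout: s|ee|mmm."""
    if not 0 <= bits < 64:
        raise ValueError("FP6 values occupy exactly 6 bits")
    sign = -1.0 if (bits >> 5) & 0x1 else 1.0
    exponent = (bits >> 3) & 0x3   # 2 exponent bits, bias = 1
    mantissa = bits & 0x7          # 3 mantissa bits
    if exponent == 0:
        # Subnormal: no implicit leading 1, effective exponent is 1 - bias = 0
        return sign * (mantissa / 8.0)
    # Normal: implicit leading 1
    return sign * (1.0 + mantissa / 8.0) * 2.0 ** (exponent - 1)


# Enumerate the 32 non-negative encodings to show the representable range.
positives = sorted({decode_fp6_e2m3(b) for b in range(32)})
print(positives[1], positives[-1])  # smallest subnormal, largest normal
```

With only 32 non-negative values between 0 and 7.5 under this assumed layout, FP6 relies on per-block scale factors in practice; the payoff is that each value needs 75% of the storage of an FP8 value.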

Several global-to-shared memory load instructions were evaluated, including buffer_load_dwordx4, buffer_load_dwordx3, and buffer_load_dwordx1. Although buffer_load_dwordx4 initially appeared promising, it caused shared-memory alignment issues. buffer_load_dwordx3 proved most effective: it avoided wasted memory and allowed the data to be rearranged. Proper alignment of data in shared memory is crucial to avoid bank conflicts, in which multiple threads access different addresses in the same shared-memory bank at the same time and the accesses are serialized. The team also selected a smaller MFMA (matrix fused multiply-add) instruction for FP6 to improve performance, and carefully managed registers so that data was available in the correct order.
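One plausible reason the three-dword load suits FP6 is simple arithmetic: a dword is 32 bits, so a 96-bit buffer_load_dwordx3 holds exactly 16 six-bit values, while the 128-bit dwordx4 load ends partway through a value. The sketch below checks this and adds a toy bank-conflict counter. The bank model (32 banks, each 4 bytes wide, with identical addresses broadcast) is a simplified assumption for illustration, not a description of the CDNA3 or CDNA4 LDS, and all function names are hypothetical.

```python
DWORD_BITS = 32
FP6_BITS = 6


def fp6_values_per_load(num_dwords):
    """Return (whole FP6 values, leftover bits) for a load of num_dwords dwords."""
    total_bits = num_dwords * DWORD_BITS
    return total_bits // FP6_BITS, total_bits % FP6_BITS


for name, n in [("buffer_load_dwordx1", 1), ("buffer_load_dwordx3", 3), ("buffer_load_dwordx4", 4)]:
    values, leftover = fp6_values_per_load(n)
    print(f"{name}: {n * 4} bytes -> {values} FP6 values, {leftover} bits left over")


def max_bank_conflict_ways(byte_addresses, num_banks=32, bank_width_bytes=4):
    """Worst-case number of distinct words hitting one bank (1 means conflict-free).

    Simplified model: threads reading the same word are broadcast and do not conflict.
    """
    words_per_bank = {}  # bank index -> set of distinct word addresses
    for addr in byte_addresses:
        word = addr // bank_width_bytes
        words_per_bank.setdefault(word % num_banks, set()).add(word)
    return max(len(words) for words in words_per_bank.values())


threads = range(32)
print(max_bank_conflict_ways([4 * t for t in threads]))    # consecutive words: 1
print(max_bank_conflict_ways([128 * t for t in threads]))  # 32-word stride: 32
```

In this toy model, a layout that makes every thread land in the same bank is serialized 32 ways, which is why how the rearranged FP6 data is placed in shared memory matters as much as the load width.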

Initially, the code spilled registers to slower scratch memory, which reduced performance. This was resolved by explicitly scheduling registers and optimizing data flow. The results show that FP6 is promising in terms of raw FLOPS, but careful optimization is essential to unlock its full potential.

More broadly, the team addressed the difficulty of mapping AI algorithms to AMD hardware, which has traditionally required hand-optimized assembly code. At the core of HipKittens are tile-based programming primitives embedded in C++, which let developers express AI operations in a more abstract and portable way. To maximize performance on AMD GPUs, the team focused on optimizing memory access patterns and maximizing occupancy, the ratio of active waves on a compute unit to the maximum number of waves it can host.
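Register pressure and occupancy pull against each other: the more vector registers (VGPRs) each wave needs, the fewer waves fit on a SIMD, and a wave that needs more than its budget must spill to scratch memory. The sketch below measures occupancy as resident waves per SIMD relative to the hardware maximum. The limits it uses (512 VGPRs per SIMD lane, at most 8 waves per SIMD, allocation in blocks of 8 registers) are assumptions chosen for illustration rather than figures from the article, and the function names are hypothetical.

```python
import math


def waves_per_simd(vgprs_per_wave, regfile_vgprs=512, max_waves=8, granularity=8):
    """Resident waves per SIMD when VGPRs are the limiting resource (assumed limits)."""
    if vgprs_per_wave > regfile_vgprs:
        # No single wave can hold this many registers: the compiler would spill to scratch.
        raise ValueError("register demand exceeds the per-wave budget; expect spilling")
    # Registers are allocated in fixed-size blocks, so round the request up.
    allocated = math.ceil(vgprs_per_wave / granularity) * granularity
    return min(max_waves, regfile_vgprs // allocated)


def occupancy(vgprs_per_wave, regfile_vgprs=512, max_waves=8, granularity=8):
    """Fraction of the maximum wave slots that are actually filled."""
    waves = waves_per_simd(vgprs_per_wave, regfile_vgprs, max_waves, granularity)
    return waves / max_waves


for regs in (64, 128, 256, 500):
    print(regs, waves_per_simd(regs), occupancy(regs))
```

Under these assumptions, a kernel that holds large tiles in registers trades occupancy for fewer memory round-trips, which is why explicit register scheduling rather than simply "more waves" can be the better lever.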

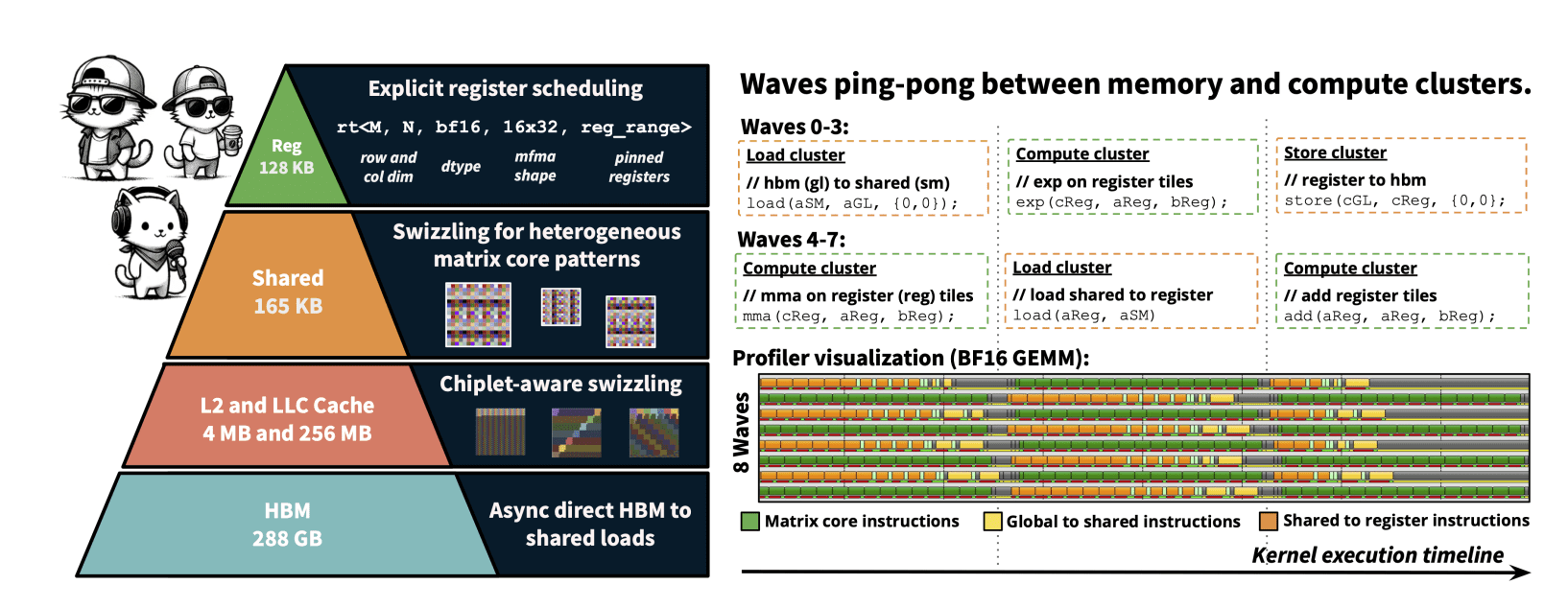

AMD GPUs organize memory hierarchically, including vector registers, the L1 cache, shared memory, the L2 cache, and high-bandwidth memory (HBM). HipKittens kernels are designed to exploit this hierarchy, carefully managing data movement between levels to minimize latency and maximize throughput. Each compute unit (CU) contains four single instruction, multiple data (SIMD) units, and the framework keeps these units busy by organizing threads into waves of 64 threads, which are grouped into thread blocks. The AMD MI325X (CDNA3) and MI355X (CDNA4) GPUs served as the primary testbeds for the research. The MI355X has 256 CUs organized into eight accelerator complex dies (XCDs) of 32 CUs each, while the MI325X has 304 CUs across its eight XCDs (38 per XCD).
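The MI355X numbers above can be tallied directly, and they also show why block placement matters on a multi-die GPU. The sketch assumes that thread blocks are dispatched round-robin across XCDs, so consecutive block IDs land on different dies, each of which has its own L2 cache. That dispatch policy is stated here as an assumption, and `xcd_of_block` and the four-wave block size are hypothetical.

```python
NUM_XCDS = 8          # MI355X: eight accelerator complex dies
CUS_PER_XCD = 32      # 8 x 32 = 256 compute units
SIMDS_PER_CU = 4      # four SIMD units per compute unit
WAVE_SIZE = 64        # threads per wave


def xcd_of_block(block_id: int, num_xcds: int = NUM_XCDS) -> int:
    """XCD a thread block lands on, ASSUMING simple round-robin dispatch across XCDs."""
    return block_id % num_xcds


total_cus = NUM_XCDS * CUS_PER_XCD
total_simds = total_cus * SIMDS_PER_CU
threads_per_block = 4 * WAVE_SIZE  # a hypothetical four-wave thread block

print(total_cus, total_simds, threads_per_block)
print([xcd_of_block(b) for b in range(10)])
```

Under this assumption, blocks 0 and 1 run on different XCDs, so two neighboring output tiles that read the same input data would not share an L2 cache unless the kernel chooses its block-to-tile mapping with the dies in mind.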

Researchers validated HipKittens across a range of AI workloads, including GEMM, GQA/MHA attention, RoPE, and LayerNorm, comparing its performance against both AMD’s hand-optimized assembly kernels and compiler-based approaches such as Triton and Mojo. HipKittens kernels compete with, and in some cases outperform, the highly optimized assembly kernels; they achieve speedups of up to ten times over baseline implementations on memory-bound kernels and outperform Mojo’s MHA kernel by a factor of two. HipKittens also improves performance by 19% over a naive row-major baseline. The work contributes a systematic set of AMD AI kernel primitives and a suite of performant kernels, paving the way for a unified programming model across AI accelerators.
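The article does not explain what the 19% comparison against a row-major baseline measures. One common reason block ordering matters for GEMM is cache reuse: when blocks running at the same time share input tiles, those tiles are more likely to hit in cache. The sketch below contrasts row-major ordering with the generic grouped ordering popularized by Triton's matrix-multiplication tutorial. It is not HipKittens' own scheduling, and the function names are hypothetical.

```python
def row_major(pid, grid_m, grid_n):
    """Map a linear block ID to (tile_m, tile_n) in plain row-major order."""
    return pid // grid_n, pid % grid_n


def grouped(pid, grid_m, grid_n, group_m=4):
    """Grouped ordering: sweep `group_m` tile-rows together before advancing along n."""
    blocks_per_group = group_m * grid_n
    group_id = pid // blocks_per_group
    first_m = group_id * group_m
    rows_in_group = min(grid_m - first_m, group_m)  # last group may be shorter
    local = pid % blocks_per_group
    return first_m + local % rows_in_group, local // rows_in_group


def distinct_input_tiles(order, num_blocks, grid_m, grid_n):
    """Count A row-tiles plus B column-tiles touched by the first `num_blocks` blocks."""
    coords = [order(pid, grid_m, grid_n) for pid in range(num_blocks)]
    return len({m for m, _ in coords}) + len({n for _, n in coords})


# An 8 x 8 grid of output tiles with 16 blocks resident at once.
print(distinct_input_tiles(row_major, 16, 8, 8))  # 2 A tiles + 8 B tiles
print(distinct_input_tiles(grouped, 16, 8, 8))    # 4 A tiles + 4 B tiles
```

Both orderings visit every output tile exactly once; the grouped one simply makes the blocks that run together share more of their inputs, which reduces the working set the cache must hold.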

HipKittens Deliver Near-Assembly Performance for AI Kernels

The researchers achieved significant performance gains for AI kernels on AMD GPUs by creating HipKittens (HK), a new programming framework. The work addresses the difficulty of mapping AI algorithms to hardware by providing a tile-based programming interface inspired by PyTorch and NumPy, which simplifies writing high-performance kernels. HK automatically optimizes memory access patterns for tiles, exposing familiar programming primitives while avoiding the limitations of compilers. Experiments show that HK kernels compete with hand-optimized assembly kernels for both GEMMs and attention, and consistently outperform compiler baselines.
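HK's real primitives are C++ and operate on register and shared-memory tiles; the pure-Python sketch below only illustrates the loop structure that tile-based GEMM relies on. Each output tile is owned by one unit of work and is accumulated over tiles of the reduction dimension. In an actual GPU kernel, the innermost tile product would typically map to MFMA instructions and the input tiles would be staged through shared memory. The function name and tile size are hypothetical.

```python
def matmul_tiled(a, b, tile=2):
    """C = A @ B computed tile by tile (pure Python, for illustration only)."""
    m, k, n = len(a), len(b), len(b[0])
    c = [[0.0] * n for _ in range(m)]
    for i0 in range(0, m, tile):              # output tile row
        for j0 in range(0, n, tile):          # output tile column
            for k0 in range(0, k, tile):      # walk the reduction dimension in tiles
                # Accumulate one small tile product into the output tile.
                # min(...) handles edge tiles when a dimension is not a multiple of `tile`.
                for i in range(i0, min(i0 + tile, m)):
                    for j in range(j0, min(j0 + tile, n)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(matmul_tiled(a, b))
```

The programmer reasons about whole tiles (which output tile, which slice of K), while the framework decides how each tile is laid out in registers and shared memory.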

Specifically, a four-wave, non-causal MHA backward kernel implemented in HK achieves 855 TFLOPS at a sequence length of 4096 and 909 TFLOPS at 8192. Explicit register scheduling and pinned register tiles raise this to 1024 TFLOPS at 4096 and 1091 TFLOPS at 8192. That is on par with AMD’s raw assembly kernel from AITER, which achieves 1018 and 1169 TFLOPS respectively: HK is slightly ahead at 4096 and within about 7% at 8192. The team found that HK outperforms all available kernel baselines in certain settings, including attention, GQA backward, and memory-bound kernels. Measurements confirm that HK’s tile-based approach manages memory efficiently across the whole GPU hierarchy, from registers and shared memory to the last-level cache and HBM. The framework provides a systematic set of AMD AI kernel primitives, paving the way for a single tile-based software layer that translates across GPU vendors and unlocks the compute capacity needed to achieve AI’s full potential.
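The reported TFLOPS figures are easier to compare as ratios. The snippet below uses only the numbers quoted in the paragraph above and computes the gain from explicit register scheduling and how close the scheduled kernel comes to AITER.

```python
# TFLOPS for the four-wave non-causal MHA backward kernel, as reported in the text.
baseline = {4096: 855, 8192: 909}     # HK before explicit register scheduling
scheduled = {4096: 1024, 8192: 1091}  # HK with explicit scheduling and pinned register tiles
aiter = {4096: 1018, 8192: 1169}      # AMD's assembly kernel from AITER

for seq_len in (4096, 8192):
    gain = scheduled[seq_len] / baseline[seq_len] - 1
    vs_aiter = scheduled[seq_len] / aiter[seq_len]
    print(f"seq {seq_len}: +{gain:.1%} from scheduling, {vs_aiter:.1%} of AITER")
```

Register scheduling is worth roughly 20% at both sequence lengths, taking HK from about 84% and 78% of AITER's throughput to about 101% and 93%.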

HipKittens Unlocks AMD GPU AI Performance

This research presents HipKittens, a new programming framework designed to unlock the full performance potential of AMD GPUs for artificial intelligence workloads. The researchers systematically analyzed the principles that enable high-performance AMD AI kernels and encapsulated these insights into a minimal set of C++ embedded programming primitives. The team demonstrates that tile-based abstractions, previously used in other systems, generalize effectively to AMD GPUs but require careful adaptation.

👉 More information

🗞 HipKittens: Fast and Furious AMD Kernels

🧠 ArXiv: https://arxiv.org/abs/2511.08083