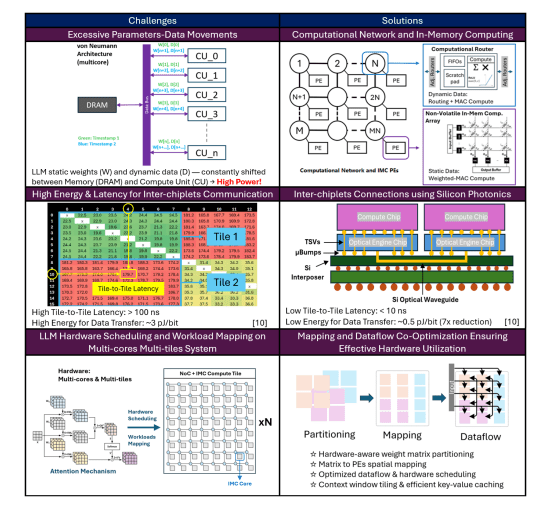

Large language models demand ever-increasing computational resources, and current systems struggle with the communication bottlenecks that limit performance. Yue Jiet Chong, Yimin Wang, and Zhen Wu, alongside Xuanyao Fong from the National University of Singapore, present a novel architecture that tackles this challenge with a 3D-stacked chiplet design. Their system integrates non-volatile in-memory computing with a silicon photonic network, dramatically accelerating the processing of large language models. The team demonstrates significant speed and efficiency gains over existing technologies like the A100 and H100, and importantly, their design allows for further scalability to accommodate even more complex models, representing a substantial step forward in artificial intelligence hardware.

The architecture combines in-memory-computing processing elements (PEs) with an Inter-PE Computational Network (IPCN) to address communication bottlenecks. A large language model (LLM) mapping scheme optimises hardware scheduling and workload mapping. Simulation results demonstrate a 3.95× speedup and a 30× efficiency improvement over the Nvidia A100 before the chiplet clustering and power gating scheme (CCPG) is applied.

3D Chiplets and In-Memory Computing for LLMs

This research presents PICNIC (Photonic Interconnect Compute-in-Memory Network), a novel architecture designed to accelerate Large Language Model (LLM) inference. It tackles the increasing energy demands and performance limitations of LLMs by integrating 3D-IC chiplet design, compute-in-memory (CIM) with RRAM, an Inter-PE Computational Network (IPCN), silicon photonics, and efficient hardware scheduling. The chiplet-based design and silicon photonics enable scalability, while CIM reduces data movement and energy consumption. PICNIC achieves substantial improvements in both performance and energy efficiency compared to GPUs, demonstrating a 3.95× speedup and a 30× efficiency improvement over the Nvidia A100 in Llama-8B inference, processing 309 tokens per second at 10.9 tokens per joule. Implementing the chiplet clustering and power gating scheme (CCPG) further reduces power consumption by 80%, resulting in a 57× efficiency improvement over the Nvidia H100 at similar throughput.

Overall, the research proposes a promising architecture for LLM inference that overcomes the limitations of traditional computer architectures and offers a path towards more sustainable and efficient AI computing. The key technologies are 3D-IC chiplets for scalability and resource utilisation, RRAM CIM for in-memory computing, the IPCN for data exchange and in-network computation, silicon photonics for high-bandwidth, low-latency communication, and CCPG for further power optimisation. Performance is measured by speedup, energy efficiency (tokens/J), and throughput (tokens/s), with comparisons against GPUs (Nvidia A100 and H100). The research also highlights the potential for further optimisation and scaling of the PICNIC architecture.
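The throughput and efficiency figures quoted above can be cross-checked with simple arithmetic. The sketch below derives the average power they imply, the A100 baseline implied by the quoted ratios, and the efficiency after an 80% power cut. It assumes that PICNIC's throughput stays at 309 tokens per second once CCPG is enabled, which the article does not state explicitly; the derived numbers are therefore illustrative, not results from the paper.

```python
# Figures quoted in the article.
THROUGHPUT_TOK_PER_S = 309.0      # tokens per second (Llama-8B)
EFFICIENCY_TOK_PER_J = 10.9       # tokens per joule
SPEEDUP_VS_A100 = 3.95
EFFICIENCY_GAIN_VS_A100 = 30.0
CCPG_POWER_REDUCTION = 0.80       # 80% lower power with CCPG


def implied_power_watts(tokens_per_s, tokens_per_j):
    """Power = (tokens/s) / (tokens/J) = J/s = W."""
    return tokens_per_s / tokens_per_j


# Average power implied by the quoted throughput and efficiency.
power_w = implied_power_watts(THROUGHPUT_TOK_PER_S, EFFICIENCY_TOK_PER_J)

# A100 baseline implied by the quoted ratios (not measured values).
a100_tok_per_s = THROUGHPUT_TOK_PER_S / SPEEDUP_VS_A100
a100_tok_per_j = EFFICIENCY_TOK_PER_J / EFFICIENCY_GAIN_VS_A100

# ASSUMPTION: throughput is unchanged when CCPG cuts power by 80%.
ccpg_power_w = power_w * (1.0 - CCPG_POWER_REDUCTION)
ccpg_tok_per_j = THROUGHPUT_TOK_PER_S / ccpg_power_w

print(f"Implied PICNIC power:      {power_w:.1f} W")
print(f"Implied A100 throughput:   {a100_tok_per_s:.1f} tokens/s")
print(f"Implied A100 efficiency:   {a100_tok_per_j:.3f} tokens/J")
print(f"Power with CCPG:           {ccpg_power_w:.1f} W")
print(f"Efficiency with CCPG:      {ccpg_tok_per_j:.1f} tokens/J")
```

Under that assumption, an 80% power reduction corresponds to a fivefold gain in tokens per joule, since efficiency scales inversely with power at fixed throughput.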

PICNIC Accelerates LLM Inference with Silicon Photonics

Scientists have developed a novel large language model (LLM) inference accelerator, named PICNIC, achieving significant performance improvements through a 3D-stacked chiplet design. The system utilises non-volatile in-memory computing processing elements (PEs) and an Inter-PE Computational Network (IPCN), interconnected via silicon photonics to overcome communication bottlenecks inherent in traditional architectures. This innovative approach focuses on minimising data movement, a key limitation in current LLM accelerators. The architecture incorporates a dedicated context window tiling and key-value caching mechanism, ensuring balanced network traffic and efficient utilisation of processing elements.
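The article does not describe how the context window is tiled, so the following is only one plausible reading: token positions are grouped into fixed-size tiles, and tiles are assigned to PEs round-robin so that each PE holds a similar share of the key-value cache. The class name `TiledKVCache`, the round-robin policy, and the parameters are all hypothetical.

```python
class TiledKVCache:
    """Toy key-value cache split across PEs by context-window tile.

    ASSUMPTION: round-robin tile placement; the paper's actual scheme
    is not described in the article.
    """

    def __init__(self, tile_size, num_pes):
        self.tile_size = tile_size
        self.num_pes = num_pes
        # One store per PE, mapping token position -> (key, value).
        self.stores = [dict() for _ in range(num_pes)]

    def owner(self, position):
        """PE index that holds the cache entry for a token position."""
        tile_index = position // self.tile_size
        return tile_index % self.num_pes

    def append(self, position, key, value):
        """Cache a token's key and value so they are never recomputed."""
        self.stores[self.owner(position)][position] = (key, value)

    def load(self):
        """Number of cached tokens held by each PE."""
        return [len(store) for store in self.stores]


cache = TiledKVCache(tile_size=2, num_pes=3)
for pos in range(10):
    # Placeholder key/value vectors; a real model would supply these.
    cache.append(pos, key=[float(pos)], value=[float(pos) * 2.0])

print(cache.load())
```

With this placement the per-PE load never differs by more than one tile, which is the kind of balance the article attributes to the tiling mechanism.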

Each compute tile consists of multiple PEs interconnected via a 2D-mesh IPCN, where PEs perform static weight multiply-accumulate operations and the IPCN manages dataflow and dynamic data multiply-accumulate operations. Further enhancements through a chiplet clustering scheme with power gating (CCPG) enable scalability for larger models, delivering a 57× improvement in efficiency over the Nvidia H100 at similar throughput levels. The PEs incorporate a non-volatile resistive random access memory array compute-in-memory macro, storing weights as resistance states, reducing reconfiguration overhead and enabling efficient parallel computation directly in the analog domain. A feedback-loop calibration mechanism mitigates hardware non-idealities, ensuring accurate and reliable performance during inference.
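Storing weights as resistance states lets a crossbar compute a multiply-accumulate through Ohm's law and Kirchhoff's current law: each column current is the sum of input voltages times cell conductances. The sketch below is a generic, idealised model of that principle, not the paper's macro. The conductance range, the differential pair used to encode signed weights, and all function names are assumptions, and device non-idealities and the calibration loop are omitted.

```python
# Hypothetical conductance range of one RRAM cell, in siemens.
G_MIN = 1e-6
G_MAX = 1e-4


def weights_to_conductances(weights):
    """Map signed weights to (G+, G-) conductance pairs.

    ASSUMPTION: a differential pair per weight, a common way to encode
    sign; the article does not say how PICNIC does it.
    """
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    scale = (G_MAX - G_MIN) / w_max
    g_plus = [[G_MIN + scale * max(w, 0.0) for w in row] for row in weights]
    g_minus = [[G_MIN + scale * max(-w, 0.0) for w in row] for row in weights]
    return g_plus, g_minus, scale


def crossbar_mac(voltages, g_plus, g_minus):
    """Column currents: I_j = sum_i V_i * (G+_ij - G-_ij)."""
    n_cols = len(g_plus[0])
    return [
        sum(v * (g_plus[i][j] - g_minus[i][j]) for i, v in enumerate(voltages))
        for j in range(n_cols)
    ]


def analog_matvec(weights, inputs):
    """Ideal crossbar result, rescaled from currents back to weight units."""
    g_plus, g_minus, scale = weights_to_conductances(weights)
    currents = crossbar_mac(inputs, g_plus, g_minus)
    return [current / scale for current in currents]


# Rows index inputs, columns index outputs.
weights = [[1.0, -2.0],
           [3.0, 4.0]]
inputs = [0.5, 1.0]
outputs = analog_matvec(weights, inputs)
print(outputs)
```

Because the weights stay programmed in the array, only the input voltages change from one token to the next, which is why this style of compute suits the static-weight operations the article assigns to the PEs.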

PICNIC Accelerates LLM Inference Dramatically

The research team has developed a novel accelerator, PICNIC, for large language model (LLM) inference, employing a 3D-stacked chiplet design with non-volatile in-memory computing elements and a silicon photonic interconnect. This architecture addresses communication bottlenecks that typically limit performance in LLM processing, integrating computation, memory, and network resources effectively. Results demonstrate that PICNIC achieves a 3.95-fold speedup and a 30-fold improvement in energy efficiency compared to the Nvidia A100 when processing the Llama-8B model. Furthermore, the implementation of a chiplet clustering and power gating scheme (CCPG) significantly reduces system power consumption by 80%, leading to a 57-fold improvement in energy efficiency over the Nvidia H100 at comparable throughput levels. The team acknowledges that while PICNIC demonstrates substantial gains, future work will focus on scaling the architecture to even larger models and further optimising power efficiency. The design prioritises sub-linear power scaling, ensuring that performance improvements continue as model sizes increase, and positions PICNIC as a highly scalable solution for resource-constrained environments.

👉 More information

🗞 PICNIC: Silicon Photonic Interconnected Chiplets with Computational Network and In-memory Computing for LLM Inference Acceleration

🧠 ArXiv: https://arxiv.org/abs/2511.04036