The challenge of equipping artificial intelligence with robust mathematical reasoning abilities takes a significant step forward with the introduction of AMO-Bench, a new benchmark designed to rigorously test the limits of large language models. Shengnan An, Xunliang Cai, and Xuezhi Cao, along with colleagues, developed this demanding set of 50 original problems, each validated by experts to meet the standards of international mathematical competitions. Existing benchmarks are proving insufficient for evaluating the most advanced models, and AMO-Bench addresses this by presenting genuinely challenging, novel problems that require only a final answer for efficient and reliable assessment. Results demonstrate that even the highest-performing language models achieve only around 52% accuracy, revealing substantial opportunities to improve mathematical reasoning and highlighting the need for continued research in this critical area.

AMO-Bench, A Rigorous Mathematical Reasoning Benchmark

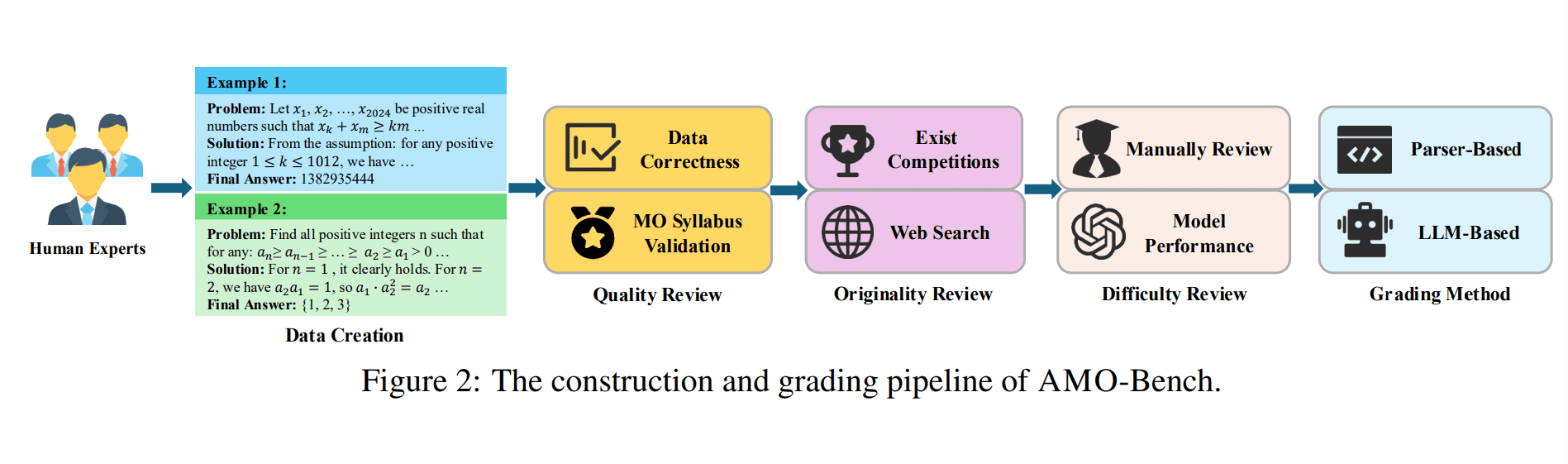

Researchers engineered AMO-Bench, a novel benchmark comprising 50 advanced mathematical reasoning problems designed to rigorously evaluate large language models. Recognizing performance saturation in existing benchmarks, the team focused on creating original problems that meet or exceed International Mathematical Olympiad (IMO) difficulty standards. All problems were independently designed by mathematics experts, who also provided detailed step-by-step solutions for review. To ensure high quality and originality, the study used a comprehensive, multi-stage construction pipeline. Candidate problems underwent blind review by at least three experts, who assessed clarity, logical correctness, and alignment with IMO-level competitions.

The team then conducted an originality review, comparing each problem against existing datasets and performing web searches to identify potential overlaps. This process was intended to ensure that AMO-Bench presents genuinely novel challenges. The study also developed a robust grading methodology enabling automatic and reliable evaluation, employing either parser-based or language model-based grading methods depending on the answer type. Beyond the final answer, each problem includes a detailed, human-annotated reasoning path, enhancing solution transparency and facilitating further research into prompt engineering and error analysis. Experiments across 26 language models demonstrate that even the best-performing model achieves only 52.4% accuracy on AMO-Bench, with most models scoring below 40%, highlighting the benchmark’s effectiveness in identifying limitations in current language model reasoning capabilities.
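To make the idea of parser-based, final-answer grading concrete, here is a minimal sketch in Python. It is not the AMO-Bench grader: the `\boxed{...}` answer convention, the function names, and the fallback to exact string matching are all assumptions made for illustration. It extracts the last boxed answer from a model response and compares it with the reference, treating numerically equal values such as `0.75` and `3/4` as the same answer.

```python
import re
from fractions import Fraction
from typing import Optional


def extract_final_answer(response: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a response, or None.

    Assumption: answers are wrapped in \\boxed{} with no nested braces.
    """
    matches = re.findall(r"\\boxed\{([^{}]*)\}", response)
    return matches[-1].strip() if matches else None


def to_number(text: str) -> Optional[Fraction]:
    """Parse an integer, decimal, or simple fraction exactly; None if not numeric."""
    try:
        return Fraction(text.replace(" ", ""))
    except (ValueError, ZeroDivisionError):
        return None


def grade(response: str, reference: str) -> bool:
    """Hypothetical parser-based grader: numeric equality, else exact match."""
    answer = extract_final_answer(response)
    if answer is None:
        return False  # no final answer found counts as incorrect
    got, want = to_number(answer), to_number(reference)
    if got is not None and want is not None:
        return got == want
    # Non-numeric answers fall back to a plain string comparison here.
    return answer == reference.strip()
```

A parser like this only suits answers with a canonical form, such as numbers or sets. For more descriptive answers, a string comparison is too brittle, which is presumably why the benchmark also uses language model-based grading for some answer types.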

AMO-Bench Assesses Advanced Mathematical Reasoning Skills

Scientists present AMO-Bench, a new benchmark designed to rigorously assess mathematical reasoning in large language models, consisting of 50 original problems crafted to meet or exceed the difficulty of the International Mathematical Olympiad. Recognizing that existing benchmarks are becoming saturated and susceptible to data memorization, the team focused on creating novel challenges with guaranteed difficulty and automated grading. Each problem underwent expert cross-validation to ensure it aligns with IMO standards, and the benchmark utilizes final-answer based grading to enable efficient, large-scale evaluation. Experiments across 26 language models reveal that current state-of-the-art models still struggle with the challenges presented by AMO-Bench.

The best-performing model, GPT-5-Thinking (High), achieved an accuracy of only 52.4% on the benchmark, while the majority of models scored below 40%. This demonstrates a significant gap in current reasoning capabilities, even as models excel on more established benchmarks. The team also observed that models consumed substantially more output tokens when solving problems on AMO-Bench, generating approximately 37,000 tokens compared to 7,000 and 6,000 tokens for other benchmarks, further highlighting the benchmark’s difficulty. Despite this limited performance, analysis reveals a promising scaling trend with increasing computational resources, suggesting that further improvements in mathematical reasoning are possible. The team also provided detailed, human-annotated reasoning paths for each problem, enhancing solution transparency and supporting future research into prompt engineering and error analysis. These results underscore the need for more challenging benchmarks to accurately assess and drive advancements in the reasoning abilities of language models.

AMO-Bench Reveals Limits of Model Reasoning

AMO-Bench represents a significant advancement in the evaluation of mathematical reasoning capabilities in large language models. Researchers have constructed a benchmark comprising 50 original, challenging problems designed to rigorously assess skills at the level of the International Mathematical Olympiad or beyond. This new benchmark addresses limitations in existing evaluations, which have shown signs of saturation with increasingly powerful models, by offering genuinely novel problems and focusing on advanced reasoning skills. The results of testing 26 large language models on AMO-Bench demonstrate that current models still struggle with complex mathematical reasoning: the best-performing model achieves an accuracy of only 52.4%, and most models score below 40%. However, analysis also reveals a promising trend, suggesting that increasing computational resources during problem-solving may lead to improved performance, indicating potential for future advancements. While acknowledging that current models have limitations, the research highlights substantial opportunities for improving their mathematical reasoning abilities. The authors note that the benchmark is publicly available to facilitate further research and development in this field.

👉 More information

🗞 AMO-Bench: Large Language Models Still Struggle in High School Math Competitions

🧠 ArXiv: https://arxiv.org/abs/2510.26768