Simulating the complex physics of rapidly changing, chemically active flows presents a major challenge for computational scientists, demanding enormous processing power and efficient algorithms. Anthony Carreon, Jagmohan Singh, Shivank Sharma, and colleagues at the University of Michigan have developed a new high-performance solver designed to overcome these limitations, specifically targeting applications where chemical reactions occur much faster than the overall flow changes. This research introduces a solver built on the AMReX framework and optimised for multiple graphics processing units, achieving significant improvements in both speed and efficiency. By addressing key bottlenecks in memory management, workload distribution, and the handling of localised chemical reactions, the team demonstrates near-ideal performance scaling across modern GPU architectures and unlocks the potential for more accurate and detailed simulations of phenomena like hydrogen-air detonations and jet flames.

AMReX Solver for Hypersonic Reacting Flows

This research details the development of a high-performance, scalable solver for simulating compressible reacting flows, crucial for understanding hypersonic propulsion systems. Scientists leveraged the AMReX framework and harnessed the power of Graphics Processing Units (GPUs) to achieve significant speedups in complex simulations. The work addresses the challenges of modeling flows with multiple interacting physical processes, including fluid dynamics, thermodynamics, and chemical kinetics. Simulating these flows is computationally demanding due to the wide range of length and time scales involved, the complexity of chemical reactions, and the need for highly accurate modeling.

The team employed adaptive mesh refinement (AMR), a technique that dynamically refines the computational mesh in regions of high gradients, such as shocks and flames, to efficiently resolve important features. The AMReX framework provides a robust infrastructure for parallelism, AMR, and modularity, simplifying the development and deployment of the solver. The solver uses a high-order accurate method for the compressible Navier-Stokes equations, which govern fluid motion, and incorporates detailed chemical kinetics models to represent the chemical reactions. To accelerate the evaluation of chemical source terms, scientists explored matrix-based approaches and combined explicit and implicit time integration schemes, balancing accuracy and efficiency.
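To make the refinement idea concrete, here is a minimal sketch of gradient-based cell tagging in one dimension. It is not the solver's actual criterion: the function name `tag_cells_for_refinement`, the use of an undivided difference as the indicator, and the threshold value are all assumptions made for illustration.

```python
def tag_cells_for_refinement(values, threshold):
    """Return indices of cells whose jump to a neighbour exceeds `threshold`.

    `values` holds one cell-averaged quantity (for example density) on a
    1D grid. The undivided difference to either neighbour is used as the
    gradient indicator; this criterion is an illustrative assumption.
    """
    tagged = []
    n = len(values)
    for i in range(n):
        # Boundary cells only have one neighbour to compare against.
        left = abs(values[i] - values[i - 1]) if i > 0 else 0.0
        right = abs(values[i + 1] - values[i]) if i < n - 1 else 0.0
        if max(left, right) > threshold:
            tagged.append(i)
    return tagged


# A step in density, loosely mimicking a shock between cells 2 and 3.
density = [1.0, 1.0, 1.0, 4.0, 4.0, 4.0]
print(tag_cells_for_refinement(density, threshold=0.5))
```

Only the two cells adjacent to the jump are tagged, so a finer mesh would be placed around the discontinuity while the smooth regions stay coarse.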

The numerical methods preserve key physical properties of the solution, such as conservation, which keeps the results reliable. Recognizing the limitations of traditional CPU-based computing, the team heavily optimized the solver for execution on GPUs. They focused on minimizing data transfers and improving memory access patterns, employing techniques like data layout optimization and coalesced memory access. Kernel optimization further reduced computational cost and improved parallelism, while the roofline model guided optimization efforts by identifying performance bottlenecks. The solver was successfully applied to simulate rotating detonation engines, a promising propulsion concept for hypersonic vehicles, and jet in supersonic crossflow configurations. Simulations were validated against experimental data, confirming the accuracy of the results. This research represents a significant advancement in computational fluid dynamics, enabling more accurate and efficient simulations of complex reacting flows relevant to hypersonic propulsion and other challenging applications.
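The roofline model mentioned above bounds a kernel's attainable throughput by the lower of two limits: the peak compute rate, and the arithmetic intensity multiplied by the peak memory bandwidth. The sketch below shows that bound in code. The function names and the hardware figures are hypothetical placeholders, not measurements from the paper.

```python
def roofline_bound(arithmetic_intensity, peak_flops, peak_bandwidth):
    """Attainable FLOP/s for a kernel under the roofline model.

    arithmetic_intensity: FLOPs performed per byte moved from memory.
    peak_flops:           peak compute rate of the device (FLOP/s).
    peak_bandwidth:       peak memory bandwidth of the device (bytes/s).
    """
    return min(peak_flops, arithmetic_intensity * peak_bandwidth)


def is_memory_bound(arithmetic_intensity, peak_flops, peak_bandwidth):
    """A kernel left of the ridge point is limited by memory bandwidth."""
    ridge_point = peak_flops / peak_bandwidth
    return arithmetic_intensity < ridge_point


# Hypothetical device: 10 TFLOP/s peak compute, 1 TB/s memory bandwidth.
PEAK_FLOPS = 10.0e12
PEAK_BANDWIDTH = 1.0e12

for ai in (2.0, 20.0):
    bound = roofline_bound(ai, PEAK_FLOPS, PEAK_BANDWIDTH)
    print(ai, bound, is_memory_bound(ai, PEAK_FLOPS, PEAK_BANDWIDTH))
```

Raising a kernel's arithmetic intensity moves it to the right on the roofline plot, which is why an increase in that quantity is read as better use of the hardware.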

High-Speed Simulations with Multi-GPU Optimisation

Scientists achieved substantial performance improvements in high-speed chemically active flow simulations by developing a new reacting flow solver built on the AMReX framework and optimized for multi-GPU settings. This work addresses critical bottlenecks in memory access, computational workload, and multi-GPU load distribution, delivering near-ideal scaling across multiple GPUs. Researchers tackled the challenge of disparate time and space scales inherent in these simulations, where chemical reactions often dominate computational time. The team optimized memory access patterns through column-major storage, significantly enhancing data handling efficiency.
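Why the storage order matters can be seen from the index arithmetic alone. The sketch below assumes a two-dimensional array indexed by (cell, component), with one GPU thread per cell; the paper's actual array shapes are not given in this summary, so the layout and all names here are illustrative assumptions.

```python
def row_major_index(cell, comp, n_cells, n_comps):
    """Flat index when all components of one cell are stored together."""
    return cell * n_comps + comp


def column_major_index(cell, comp, n_cells, n_comps):
    """Flat index when one component of all cells is stored together."""
    return comp * n_cells + cell


def stride_between_neighbour_cells(index_fn, n_cells, n_comps, comp=0):
    """Distance in memory between the same component of cells 0 and 1."""
    return index_fn(1, comp, n_cells, n_comps) - index_fn(0, comp, n_cells, n_comps)


N_CELLS, N_COMPS = 1024, 9  # hypothetical sizes
print(stride_between_neighbour_cells(row_major_index, N_CELLS, N_COMPS))
print(stride_between_neighbour_cells(column_major_index, N_CELLS, N_COMPS))
```

With the column-major layout, neighbouring threads reading the same component touch adjacent memory locations (stride 1), which is the access pattern a GPU can coalesce into fewer memory transactions. With the row-major layout the stride equals the number of components.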

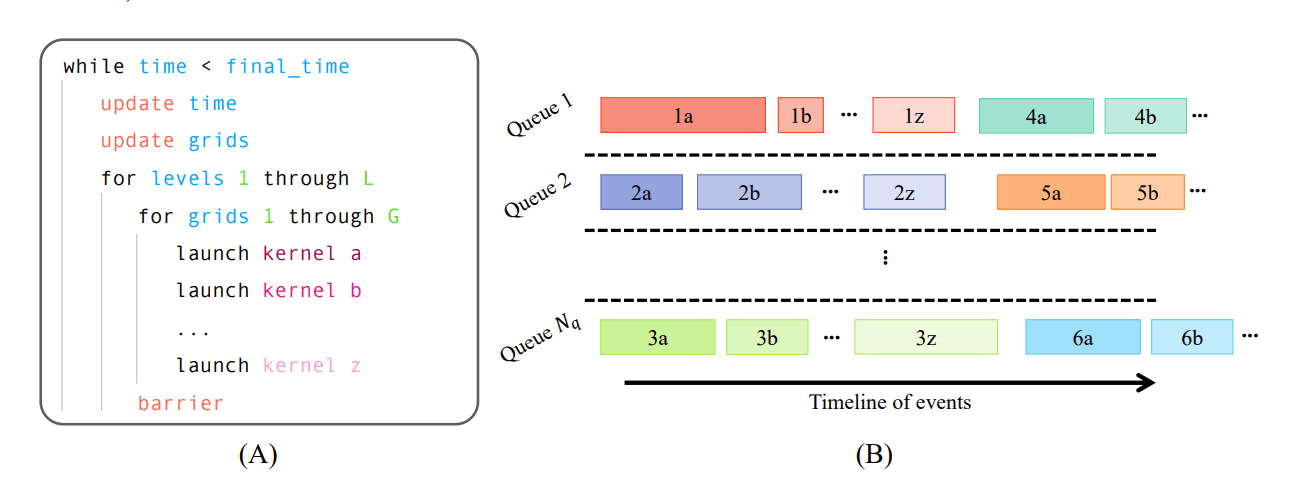

A bulk-sparse integration strategy was devised for chemical kinetics to address the variability in computational workload between cells. All active cells are first integrated in parallel for up to a chosen maximum number of integration steps; cells that have not finished by then are handed to a sparse integration phase, which continues until every cell has completed. This approach adapts to the changing number of active cells and keeps GPU utilization high. The maximum number of bulk steps is selected to balance kernel launch overhead against the inefficiency caused by warp divergence. To further enhance performance in adaptive mesh refinement applications, the team implemented a single-kernel approach that processes all grids simultaneously using a cell index map, avoiding the cost of a separate kernel launch for each grid. Roofline analysis revealed substantial improvements in arithmetic intensity for both convection and chemistry routines, indicating more efficient use of GPU memory bandwidth and computational resources. The results demonstrate a significant advance in the ability to simulate complex reacting flows efficiently and at scale.
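The bulk-sparse idea can be sketched on the CPU with a toy problem. The example below is an interpretation of the description above, not the authors' implementation: it uses a single decaying species per cell (dy/dt = -rate * y), explicit Euler substeps, and a hypothetical stability rule in `substeps_needed`. Every name and parameter is an assumption. Loops over cells stand in for what would be parallel GPU threads.

```python
import math


def substeps_needed(rate, dt, max_rate_dt=0.5):
    """Substeps so that rate * sub_dt <= max_rate_dt (hypothetical rule)."""
    return max(1, math.ceil(rate * dt / max_rate_dt))


def euler_substep(y, rate, sub_dt):
    """One explicit Euler step of the toy ODE dy/dt = -rate * y."""
    return y - rate * y * sub_dt


def bulk_sparse_integrate(y, rates, dt, max_bulk_steps):
    """Advance every cell by dt; return final values and substeps taken."""
    n = len(y)
    y = list(y)
    total = [substeps_needed(r, dt) for r in rates]
    done = [0] * n

    # Bulk phase: every pass visits all cells; finished cells sit idle.
    for _ in range(max_bulk_steps):
        for i in range(n):
            if done[i] < total[i]:
                y[i] = euler_substep(y[i], rates[i], dt / total[i])
                done[i] += 1

    # Compaction: gather the indices of cells that still have work left.
    active = [i for i in range(n) if done[i] < total[i]]

    # Sparse phase: each pass visits only the remaining active cells.
    while active:
        for i in active:
            y[i] = euler_substep(y[i], rates[i], dt / total[i])
            done[i] += 1
        active = [i for i in active if done[i] < total[i]]

    return y, done


# Most cells are slow; one stiff cell needs many more substeps.
rates = [1.0, 1.0, 100.0, 1.0]
values, steps = bulk_sparse_integrate([1.0] * 4, rates, dt=0.1, max_bulk_steps=4)
print(steps)
```

The trade-off named in the text shows up in `max_bulk_steps`. A small value hands cells to the sparse phase early, at the price of an extra compaction and launch. A large value keeps idle cells in the bulk passes for longer, which on a GPU corresponds to threads in a warp waiting on their slowest neighbour.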

GPU Solver Accelerates Combustion Simulations

Scientists presented a high-performance, GPU-accelerated solver for simulating compressible reacting flows, built upon the established AMReX framework. This work addresses key challenges in GPU computing for combustion simulations, specifically those related to memory access, load balancing across multiple GPUs, and efficiently handling the wide range of timescales inherent in chemical reactions. The solver achieves these improvements through optimized memory management, a bulk-sparse integration strategy for chemical kinetics, and refined load distribution techniques within the convection routine. Performance evaluations across hydrogen-air detonations and jet in supersonic crossflow configurations demonstrate substantial speedups compared to earlier GPU implementations, with the jet in supersonic crossflow case showing a five-fold improvement.
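One way to picture load distribution over many AMR grids in a single kernel is a flat cell index that each thread translates back into a grid and a local cell. The sketch below illustrates that mapping with a prefix sum and a binary search. It is an assumption about how such a cell index map could work, not the paper's data structure, and the function names are hypothetical.

```python
from bisect import bisect_right


def build_cell_offsets(grid_sizes):
    """Exclusive prefix sum: offsets[g] is the flat index of grid g's first cell."""
    offsets = [0]
    for size in grid_sizes:
        offsets.append(offsets[-1] + size)
    return offsets


def locate_cell(flat_index, offsets):
    """Map a flat (thread) index to (grid index, local cell index)."""
    grid = bisect_right(offsets, flat_index) - 1
    return grid, flat_index - offsets[grid]


# Three grids of unequal size, as AMR typically produces.
offsets = build_cell_offsets([4, 2, 6])
total_cells = offsets[-1]

# One "launch" covers every cell of every grid.
for flat_index in range(total_cells):
    grid, local = locate_cell(flat_index, offsets)
    # A real kernel would update cell `local` of grid `grid` here.

print(locate_cell(4, offsets))
```

Because the flat index range covers all grids at once, small grids no longer each pay for their own launch, and the work is spread over threads by cell count rather than by grid.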

Weak scaling analysis, conducted across up to 96 GPUs, reveals near-ideal performance as both the problem size and the number of GPUs increase proportionally. Roofline analysis confirms these gains, showing significant improvements in arithmetic intensity for both convection and chemistry calculations, indicating more effective use of GPU resources. The authors acknowledge limitations in achieving further performance gains, noting that some computational kernels remain memory-bound. Future work may focus on utilizing shared and constant memory, exploring alternative memory layouts, fusing multiple kernels, and redesigning algorithms to address these bottlenecks. This research provides a pathway for existing combustion codes to leverage modern exascale computing architectures while maintaining the adaptive capabilities necessary for resolving complex, multiscale reacting flows.
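For readers unfamiliar with the metric, weak scaling efficiency compares the runtime on one GPU with the runtime on N GPUs when the problem grows N times larger. A value of 1.0 is ideal. The short sketch below shows the calculation; the timings are made-up placeholders, not results from the paper.

```python
def weak_scaling_efficiency(base_time, scaled_time):
    """Ratio of the baseline runtime to the runtime at larger scale.

    In a weak scaling test the work per GPU is held constant, so an
    ideal code takes the same time regardless of GPU count (ratio 1.0).
    """
    return base_time / scaled_time


# Hypothetical wall-clock times in seconds, keyed by GPU count.
timings = {1: 10.0, 8: 10.4, 96: 12.5}
for gpus, seconds in timings.items():
    print(gpus, weak_scaling_efficiency(timings[1], seconds))
```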

👉 More information

🗞 A GPU-based Compressible Combustion Solver for Applications Exhibiting Disparate Space and Time Scales

🧠 ArXiv: https://arxiv.org/abs/2510.23993