Computational notebooks, which blend executable code with explanatory documentation, now dominate data science, and the rise of generative AI makes it important to understand how human-written and AI-generated notebooks differ. Tasha Settewong, Youmei Fan, and Raula Gaikovina Kula, from Nara Institute of Science and Technology, along with Kenichi Matsumoto, investigate these differences through a detailed analysis of notebooks from Kaggle competitions. Their work examines medal-winning Kaggle notebooks, revealing that top performers prioritize comprehensive documentation, and then contrasts human-authored notebooks with those generated by AI. The team shows that while AI excels at producing clean, efficient code, human notebooks exhibit greater structural variety, complexity, and originality, which points to collaborative human-AI workflows as a way to get more out of notebooks.

Human and AI Notebook Performance Compared

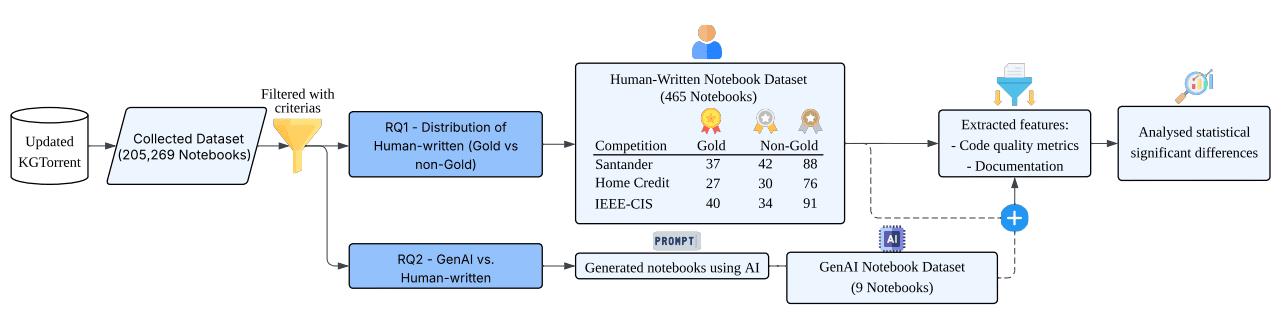

This research investigates the strengths of human-authored versus AI-generated Jupyter notebooks within competitive data science, specifically Kaggle competitions. The core finding is that AI excels at producing technically sound code, while humans excel at creating understandable and well-documented notebooks. While AI can generate clean code, top performance in these competitions still relies heavily on human skills like problem-solving, algorithmic innovation, and clear explanation. This study examines how these different approaches manifest in practice. Researchers analyzed 465 Jupyter notebooks from three Kaggle competitions, assessing both code quality and documentation.

Code quality was measured using a platform that identifies code smells and technical debt, while documentation quality was assessed through readability metrics and qualitative analysis. The team compared notebooks from medal-winning participants with those that did not win medals, using statistical tests to identify significant differences. The results demonstrate that AI-generated notebooks generally had fewer code smells and lower technical debt compared to human-authored notebooks. However, human-authored notebooks, particularly those from medal winners, were significantly more readable and well-documented.

Documentation features proved to be the strongest predictors of success, with high-performing notebooks prioritizing clear explanations and narrative. This highlights a complementary relationship, where AI can handle code generation while humans provide crucial context, explanation, and innovation. These findings have implications for the future of data science, suggesting a collaborative model where AI tools assist data scientists with code generation and optimization, but humans remain essential for problem-solving, innovation, and communication. Winning Kaggle competitions still requires strong documentation and a clear narrative, suggesting a need for tools that can help data scientists improve the documentation and readability of their notebooks.

Documentation Distinguishes Human and AI Notebooks

This study meticulously examined the characteristics of coding notebooks to distinguish between human-authored work and that generated by artificial intelligence. Researchers characterized 25 features within a dataset of Kaggle competition notebooks, focusing on both code quality and documentation attributes. This analysis revealed that gold medal-winning notebooks were primarily distinguished by significantly longer and more detailed documentation, suggesting a strong correlation between comprehensive documentation and competitive success. Statistical comparisons demonstrated that documentation features, including markdown characters, lines, and sentence counts, exhibited the most substantial differences between gold and non-gold medalists.
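To make the documentation features concrete, here is a minimal Python sketch of how markdown characters, lines, and sentences might be counted from a notebook file. It is not the authors' extraction pipeline: the function name `markdown_features`, the returned keys, and the naive sentence-splitting rule are all assumptions made for illustration. It relies only on the standard Jupyter notebook JSON layout, in which each cell has a `cell_type` and a `source`.

```python
import json
import re


def markdown_features(notebook_json: str) -> dict:
    """Count simple documentation features in a Jupyter notebook (JSON text).

    Hypothetical helper for illustration; the study's own feature
    definitions may differ.
    """
    nb = json.loads(notebook_json)
    texts = []
    for cell in nb.get("cells", []):
        if cell.get("cell_type") != "markdown":
            continue
        source = cell.get("source", "")
        # The notebook format stores source as one string or a list of lines.
        if isinstance(source, list):
            source = "".join(source)
        texts.append(source)

    # Naive sentence rule (an assumption): a run of text ending in ., ! or ?
    sentence_pattern = re.compile(r"[^.!?]+[.!?]")
    return {
        "markdown_cells": len(texts),
        "markdown_chars": sum(len(t) for t in texts),
        "markdown_lines": sum(len(t.splitlines()) for t in texts),
        "markdown_sentences": sum(len(sentence_pattern.findall(t)) for t in texts),
    }
```

In practice the JSON text would come from reading an `.ipynb` file, and the resulting counts could then be compared across groups of notebooks.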

To further investigate this distinction, the team analyzed the differences between human-written notebooks and those generated by AI. Notebooks were generated using a specific prompt and converted into a standard Jupyter Notebook format, with structural validity confirmed through manual checks. Features were extracted using a platform that assesses code quality metrics like cyclomatic complexity, technical debt, and maintainability rating. The study found that while AI-generated notebooks tended to achieve higher scores on code quality metrics, human-written notebooks displayed greater structural diversity, complexity, and innovative approaches to problem-solving.
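For readers unfamiliar with cyclomatic complexity, the rough idea is to count the independent paths through a piece of code: one for the code itself, plus one for each decision point. The sketch below approximates this for a single code cell using Python's standard `ast` module. It is an illustration only, not the platform used in the study, and the set of node types counted as decision points is an assumption of this sketch. Real notebook cells may also contain IPython magics such as `%matplotlib inline`, which would have to be stripped before parsing.

```python
import ast

# Node types treated as decision points (an assumption of this sketch).
_BRANCH_NODES = (
    ast.If,
    ast.For,
    ast.While,
    ast.ExceptHandler,
    ast.IfExp,
    ast.comprehension,
)


def approx_cyclomatic_complexity(code: str) -> int:
    """Approximate cyclomatic complexity of a snippet of Python code."""
    tree = ast.parse(code)
    complexity = 1  # a straight-line program has exactly one path
    for node in ast.walk(tree):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds one extra path per additional operand
            complexity += len(node.values) - 1
    return complexity
```

A cell with a loop containing an `if` with a compound condition would score higher than a cell of plain assignments, which is the kind of difference such a metric is meant to surface.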

To compare AI-generated notebooks with human-authored notebooks across medal levels, the team assessed the normality of each feature and then applied established statistical tests to identify significant differences. AI notebooks often contained a higher number of functions and statements. However, the most striking differences appeared in code smells and technical debt, where AI notebooks consistently outperformed human-authored notebooks, demonstrating a potential advantage in generating cleaner, more maintainable code. These findings suggest that AI and humans excel in different aspects of notebook creation, with humans prioritizing comprehensive documentation and AI focusing on code quality.
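The article does not name the specific tests used. As one illustration of how two groups of notebooks might be compared when a feature is not normally distributed, the sketch below computes the Mann-Whitney U statistic and the related Cliff's delta effect size in plain Python. The choice of this test is an assumption, as are the function names `mann_whitney_u` and `cliffs_delta`; no p-value is computed here.

```python
def mann_whitney_u(a, b):
    """U statistic for sample a: number of (a, b) pairs where the a value
    is larger, with ties counting one half. Uses average ranks for ties."""
    combined = sorted(
        [(v, 0) for v in a] + [(v, 1) for v in b], key=lambda p: p[0]
    )
    rank_sum_a = 0.0
    i = 0
    while i < len(combined):
        # Find the block of equal values starting at position i.
        j = i
        while j < len(combined) and combined[j][0] == combined[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of the 1-based ranks i+1 .. j
        count_a = sum(1 for k in range(i, j) if combined[k][1] == 0)
        rank_sum_a += avg_rank * count_a
        i = j
    n_a = len(a)
    return rank_sum_a - n_a * (n_a + 1) / 2


def cliffs_delta(a, b):
    """Effect size in [-1, 1]: 1 means every a value exceeds every b value."""
    return 2 * mann_whitney_u(a, b) / (len(a) * len(b)) - 1
```

With made-up toy values such as `cliffs_delta([10, 12, 14], [1, 2, 3])`, every value in the first group exceeds every value in the second, so the effect size is at its maximum. A rank-based statistic like this is a common choice when normality checks fail, because it depends only on the ordering of values and not on their distribution.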

Human and AI Notebook Feature Analysis

This research presents a detailed analysis of computational notebooks and investigates the distinctions between those created by humans and those generated by generative AI. The study focused on notebooks from three major Kaggle competitions to understand how humans and AI approach data science challenges. Researchers curated a dataset of 465 human-authored notebooks, focusing on gold medal-winning submissions, and compared them to 9 notebooks generated by leading large language models. The team extracted and analyzed 25 features related to both documentation and code quality to address two key research questions.

Initial findings reveal significant differences in how humans and AI prioritize aspects of notebook creation. Human-written notebooks, particularly those awarded gold medals, are distinguished by longer and more detailed documentation, suggesting a focus on clarity and accessibility. Conversely, AI notebooks demonstrate superior code quality, exhibiting fewer code smells and coding violations and less technical debt, indicating a strength in generating clean and efficient code. These results demonstrate a clear divergence in approach, with humans prioritizing comprehensive documentation and AI excelling in code optimization. This work establishes a baseline for understanding the strengths of both human and AI contributions to computational notebooks, paving the way for future research into maximizing collaboration between the two. It also highlights the potential for AI to assist in code generation while emphasizing the continued importance of human expertise in crafting clear, insightful documentation.

AI versus Human Notebook Strengths Revealed

This research presents a comparative analysis of data science notebooks created by humans and those generated by artificial intelligence, revealing distinct strengths in each approach. The findings demonstrate that while AI excels at producing technically sound code with minimal errors and technical debt, human-created notebooks are characterized by more comprehensive and accessible documentation, alongside greater structural diversity and innovative problem-solving techniques. Notably, the study indicates that success in competitive data science environments currently relies more heavily on these human-centric skills than on sheer code quality alone. These results have implications for several areas, potentially enabling competition hosts to identify the use of AI tools, guiding competitors on how to best integrate AI assistance, and informing researchers about skills that remain difficult for current AI to replicate. Ultimately, this work establishes a foundation for future exploration of hybrid approaches, where AI handles routine code generation and humans focus on creative problem-solving and clear communication of insights.

👉 More information

🗞 Human to Document, AI to Code: Three Case Studies of Comparing GenAI for Notebook Competitions

🧠 ArXiv: https://arxiv.org/abs/2510.18430