Understanding how artificial intelligence systems perceive and adapt to changing visual environments remains a significant challenge, particularly for objects whose appearance evolves over time. To address this, Frédéric Lin, Biruk Abere Ambaw, and Adrian Popescu, from Université Paris-Saclay and CEA List, alongside Hejer Ammar, Romaric Audigier, and Hervé Le Borgne, introduce CaMiT, a dataset capturing the temporal evolution of car models. The resource comprises nearly 800,000 labelled images spanning 190 car models from 2007 to 2023, plus a further 5.1 million unlabelled samples, and lets researchers develop and test AI systems that recognise and generate images of cars across different years. The team shows that training on this in-domain data achieves strong performance with improved resource efficiency. They also introduce a time-incremental learning approach that makes these systems more robust to evolving visual characteristics and disappearing models, offering a rich benchmark for future research in temporal adaptation and fine-grained visual recognition.

Key datasets include ImageNet, a foundational resource for large-scale image analysis, and YFCC100M, a vast collection of images from Yahoo! Flickr used for multimedia research. LabelMe provides a database and tools for image annotation, while the Google Landmarks Dataset v2 focuses on instance-level recognition of landmarks. Researchers also utilize the iNaturalist Species Classification and Detection Dataset for species identification and the expansive LAION-5B dataset for training image-text models.

AmsterTime presents a challenging visual place recognition benchmark with significant domain shift, and the Qwen2.5 dataset supports the Qwen2.5 model. Additionally, the Google Open Images Dataset and the Segment Anything Model (SAM 2) dataset are commonly used resources. Alongside these datasets, numerous research efforts explore continual learning, vision-language models, and fine-grained image analysis.

Theoretical frameworks for continual learning have been established, and comprehensive surveys provide broad overviews of the field. Researchers are investigating simpler continual learning approaches that use pretrained backbones, and exploring whether random representations can outperform online continual learning techniques. Selective knowledge transfer and exemplar-free learning are also being actively researched. Among large pretrained vision models, DALL-E and DINOv2 are cited as examples of learning transferable visual features and, in the case of DINOv2, robust representations without supervision. High-resolution image synthesis with latent diffusion models, such as Stable Diffusion, is also a key area of investigation. Researchers are developing taxonomy-aware evaluation methods for vision-language models and improving zero-shot classification by adapting these models. Fine-grained image analysis benefits from surveys of the field and from large-scale datasets focused on specific categories, such as cars.

Car Model Dataset and Efficient Fine-tuning

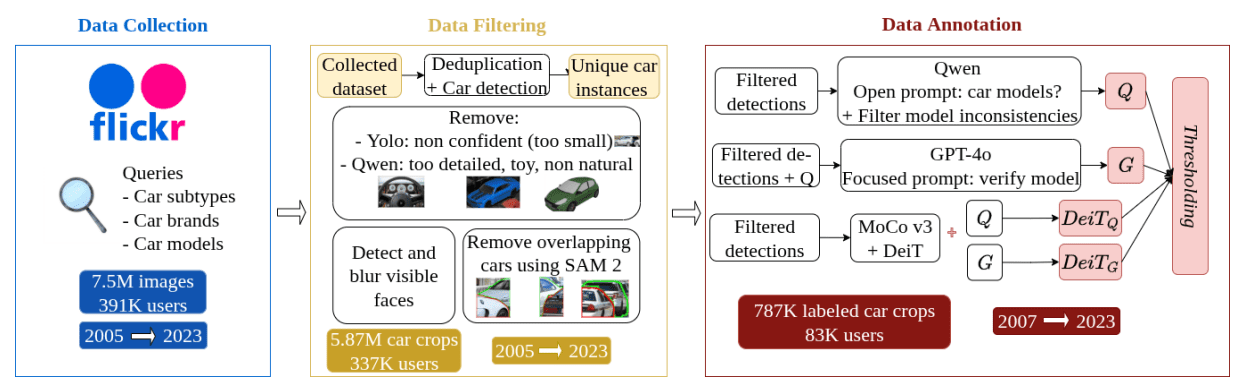

Scientists developed Car Models in Time, or CaMiT, a new dataset comprising 787,000 labelled and 5.1 million unlabelled images of 190 car models, with labelled images covering 2007 to 2023 and unlabelled images reaching back to 2005. This dataset addresses the challenge of adapting AI systems to evolving visual environments. To establish a strong baseline, researchers employed several pretraining strategies, including DINOv2, CLIP, and MoCo v3, utilizing datasets ranging from 1.9 million to 2 billion images.

They then adapted CLIP and MoCo v3 using LoRA, a parameter-efficient technique, fine-tuning the models with data from 2007 to assess performance gains. This involved freezing pretrained image processing networks and training a nearest-class mean classifier, a powerful baseline for image classification. To rigorously evaluate temporal adaptation, the team designed experiments testing models trained on data from one year against images from other years, revealing performance degradation when tested with past and future data. They implemented a time-incremental classification setting, simulating real-world conditions with evolving classes.
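The evaluation described above, a nearest-class mean classifier on top of a frozen encoder, trained on one year and tested on others, can be sketched as follows. This is a minimal illustration rather than the authors' code: it assumes features have already been extracted by a frozen backbone, and the function names, the L2 normalization, and the Euclidean distance are illustrative choices.

```python
import math
from collections import defaultdict


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fit_class_means(features, labels):
    """Average the normalized features of each class into one prototype."""
    sums, counts = {}, defaultdict(int)
    for vec, label in zip(features, labels):
        vec = l2_normalize(vec)
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        sums[label] = [s + x for s, x in zip(sums[label], vec)]
        counts[label] += 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}


def predict(class_means, vec):
    """Return the class whose prototype is closest in Euclidean distance."""
    vec = l2_normalize(vec)
    return min(class_means, key=lambda c: math.dist(class_means[c], vec))


def cross_year_accuracy(train_by_year, test_by_year):
    """Train on each year and test on every year.

    Both arguments map year -> (features, labels).
    Returns a dict mapping (train_year, test_year) -> accuracy.
    """
    results = {}
    for train_year, (train_x, train_y) in train_by_year.items():
        means = fit_class_means(train_x, train_y)
        for test_year, (test_x, test_y) in test_by_year.items():
            hits = sum(predict(means, x) == y for x, y in zip(test_x, test_y))
            results[(train_year, test_year)] = hits / len(test_y)
    return results
```

Reading the resulting table along a row shows how a classifier fitted on one year behaves on earlier and later years; the degradation reported in the article would appear as accuracy falling away from the diagonal.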

Two strategies were explored: updating the core image processing network and updating only the final classification layer. The team also investigated time-aware image generation, fine-tuning Stable Diffusion 1.5 on the CaMiT training subset using LoRA, which produced more realistic images. The reported results are accompanied by p-values to establish statistical significance, and performance was measured with several metrics to assess accuracy and robustness across temporal shifts. Specialized models initially perform well but degrade over time, while generic models benefit from domain adaptation with LoRA, achieving statistically significant improvements.
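For readers unfamiliar with LoRA, the idea is to keep a pretrained weight matrix W frozen and learn only a low-rank correction, so the effective weight becomes W + (alpha / r) * B A. The sketch below shows that arithmetic in plain Python. It is not the training setup used in the paper; the function names and the example dimensions are illustrative assumptions.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w_frozen, lora_a, lora_b, alpha):
    """Return W + (alpha / r) * B @ A, the weight used at inference time.

    w_frozen: d_out x d_in pretrained weight (kept fixed)
    lora_a:   r x d_in trainable matrix
    lora_b:   d_out x r trainable matrix (zero at initialization in LoRA)
    """
    rank = len(lora_a)
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(w_frozen, delta)]


def trainable_fraction(d_out, d_in, rank):
    """Share of parameters LoRA trains relative to the full weight matrix."""
    return rank * (d_in + d_out) / (d_out * d_in)
```

With a 768 x 768 layer and rank 8 (both numbers chosen only for illustration), `trainable_fraction(768, 768, 8)` is about 2%, which is why the technique is described as parameter-efficient.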

Car Model Evolution Dataset for Visual AI

Researchers introduced Car Models in Time (CaMiT), a new dataset designed to study how visual AI systems adapt to changing appearances of objects over time, focusing on the evolution of car models from 2005 to 2023. CaMiT comprises 787,000 labelled images representing 190 distinct car models, alongside a larger unlabelled set of 5.1 million images, enabling both supervised and self-supervised learning approaches. The work demonstrates a clear temporal shift in how car models are depicted, with newer variants emerging and older ones becoming less frequent over time. This shift is quantified by increasing distances between car model embeddings as the time gap between image uploads increases, highlighting the need for methods to mitigate these effects.
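One way to make the "distance grows with time gap" observation concrete is to compare per-year centroids of a single car model's embeddings. The sketch below is an assumption about how such a measurement could be set up, not the paper's exact procedure: the use of yearly centroids, cosine distance, and the function names are all illustrative, and embeddings are assumed to be non-zero vectors.

```python
import math
from collections import defaultdict


def mean_vector(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine_distance(a, b):
    """1 minus the cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def drift_by_time_gap(embeddings_by_year):
    """Average cosine distance between yearly centroids, grouped by year gap.

    embeddings_by_year maps year -> list of embeddings for ONE car model.
    Returns a dict mapping gap (in years) -> mean centroid distance.
    """
    centroids = {y: mean_vector(v) for y, v in embeddings_by_year.items()}
    years = sorted(centroids)
    by_gap = defaultdict(list)
    for i, first in enumerate(years):
        for second in years[i + 1:]:
            by_gap[second - first].append(
                cosine_distance(centroids[first], centroids[second])
            )
    return {gap: sum(d) / len(d) for gap, d in sorted(by_gap.items())}
```

If the returned values increase with the gap, the depiction of that model has drifted in embedding space, which is the pattern the article reports.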

Experiments using CaMiT in a time-incremental classification setting revealed that static pretraining, while achieving competitive performance with large-scale models, suffers accuracy declines when tested with images from different years. To address this, the team investigated two strategies: updating the core image processing network and updating only the final classification layer. Both strategies demonstrably improved temporal robustness, with updating the final layer achieving the best performance when combined with a specialized pretrained network. Furthermore, the researchers explored time-aware image generation, incorporating temporal metadata into the training process. This resulted in generated images that more accurately reflect the actual distribution of car model depictions over time, demonstrating improved realism compared to standard image generation techniques. The dataset and associated code have been released to encourage further research into fine-grained temporal shift modelling, offering a valuable resource for developing AI systems that can adapt to evolving visual environments.
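The "update only the final classification layer" strategy can be pictured as keeping the encoder frozen and refreshing per-class prototypes as each new year of data arrives. The following is a minimal sketch under that assumption, using a plain running mean; the class name and the equal weighting of all years are illustrative choices, not details taken from the paper.

```python
import math


class IncrementalClassMeans:
    """Running per-class feature means, updated one time step at a time."""

    def __init__(self):
        self.means = {}   # class label -> mean feature vector
        self.counts = {}  # class label -> number of samples seen so far

    def update(self, features, labels):
        """Fold a new batch (for example, one year of images) into the means."""
        for vec, label in zip(features, labels):
            if label not in self.means:
                # A class that first appears in a later year gets a new prototype.
                self.means[label] = [0.0] * len(vec)
                self.counts[label] = 0
            self.counts[label] += 1
            n = self.counts[label]
            self.means[label] = [
                m + (x - m) / n for m, x in zip(self.means[label], vec)
            ]

    def predict(self, vec):
        """Return the class whose running mean is nearest to the feature."""
        return min(self.means, key=lambda c: math.dist(self.means[c], vec))
```

Because only the prototypes change, no gradient step on the encoder is needed at each time step, and newly introduced car models can be added without retraining.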

Car Model Evolution and Temporal Adaptation

Researchers introduced Car Models in Time, or CaMiT, a new dataset designed to advance the field of fine-grained image classification and generation with a focus on temporal adaptation. This dataset comprises nearly 800,000 labelled images of 190 car models captured over a 17-year period, alongside a larger collection of unlabelled images spanning 19 years, offering a comprehensive resource for both supervised and self-supervised learning approaches. Analysis of the dataset revealed that car models evolve over time, creating a temporal shift that impacts the performance of standard image classification models. To address this challenge, the team investigated time-incremental learning strategies, including updating the core image processing network and refining only the final classification layer.

Results demonstrate that these techniques mitigate performance decline when models are tested on images from different years, with updating the final layer proving particularly effective and efficient. Furthermore, the researchers explored time-aware image generation, showing that incorporating temporal information during training yields more realistic outputs for evolving visual classes. The availability of CaMiT is expected to stimulate further investigation into integrating time into visual AI models, improving their robustness and preventing obsolescence as visual data changes over time.
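One simple way to expose temporal information to a text-conditioned generator is to put the year into the training caption. The sketch below illustrates that idea only; the caption template, the record keys (`path`, `model`, `year`), and the function names are hypothetical, and the paper's actual conditioning scheme may differ.

```python
def build_caption(car_model, year=None):
    """Compose a text prompt; the year is appended only when it is given."""
    caption = f"a photo of a {car_model} car"
    if year is not None:
        caption += f", {year}"
    return caption


def make_training_pairs(records, time_aware=True):
    """Turn metadata records into (image_path, caption) pairs.

    Each record is a dict with the hypothetical keys 'path', 'model', 'year'.
    With time_aware=False the year is dropped, giving a time-agnostic baseline.
    """
    return [
        (r["path"], build_caption(r["model"], r["year"] if time_aware else None))
        for r in records
    ]
```

Building both variants from the same records gives a matched pair of training sets, so any difference in generated images can be attributed to the temporal signal in the captions.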

👉 More information

🗞 CaMiT: A Time-Aware Car Model Dataset for Classification and Generation

🧠 ArXiv: https://arxiv.org/abs/2510.17626