Credit scoring is a critical process in financial services: it directly determines access to loans and therefore demands robust, transparent decision-making, yet current methods often lack clear explanations. Abdul Samad Khan from Lahore University of Management Sciences, Nouhaila Innan from New York University Abu Dhabi, and Aeysha Khalique, along with their colleagues, address this challenge with IQNN-CS, a novel framework of interpretable quantum neural networks designed specifically for multiclass credit risk classification. The architecture pairs quantum computation with techniques that reveal how the model arrives at its decisions, a crucial step toward building trust in financial applications. The team also introduces a new metric, Inter-Class Attribution Alignment, which measures how consistently the model uses different factors to distinguish between credit risk levels, and shows that IQNN-CS achieves competitive accuracy alongside significantly improved interpretability on real-world credit data, paving the way for transparent and accountable quantum machine learning in finance.

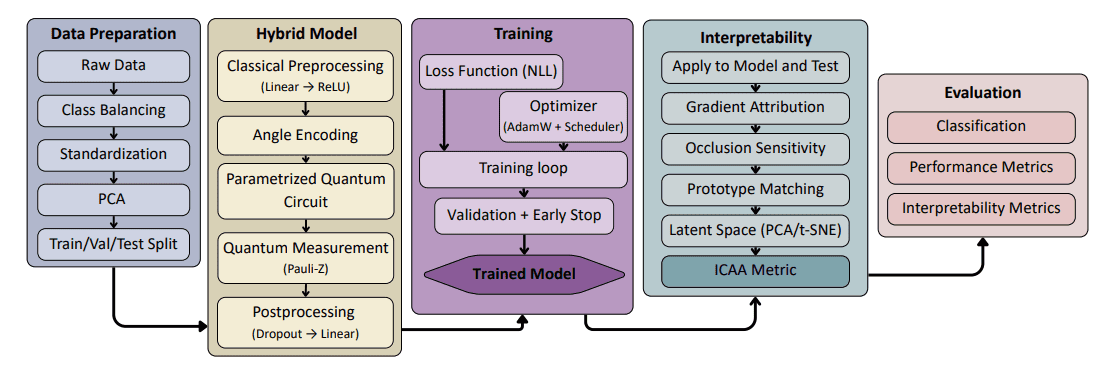

Credit scoring is a high-stakes task in financial services: model decisions directly affect individuals' access to credit and face strict regulatory scrutiny. Quantum Machine Learning (QML) offers new computational capabilities, but the complexity of its models hinders adoption in domains that demand transparency and trust. This work presents IQNN-CS, an interpretable quantum neural network framework for multiclass credit risk classification. The architecture combines a variational quantum neural network with post-hoc explanation techniques tailored for structured data. To understand how the model distinguishes between credit risk categories, the researchers introduced Inter-Class Attribution Alignment (ICAA), which compares feature importance across risk categories, enhancing model transparency and supporting regulatory compliance.
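
To make "variational quantum neural network" concrete, here is a minimal sketch of a forward pass, simulated as a two-qubit statevector in plain Python. Every circuit detail is an assumption for illustration, not the IQNN-CS architecture: features are angle-encoded with RY rotations, a CNOT entangles the qubits, a trainable RY layer follows, and the four basis-state probabilities are folded into three hypothetical credit-risk classes. The function names `ry`, `apply_single`, `cnot_01`, and `vqnn_probs` are hypothetical.

```python
import math

# Minimal 2-qubit statevector simulation of a variational quantum classifier.
# ASSUMPTIONS (illustrative, not the IQNN-CS circuit): RY angle encoding,
# one CNOT entangler, one trainable RY layer, and a hypothetical readout that
# maps the four basis-state probabilities onto three credit-risk classes.
# All amplitudes stay real because RY and CNOT have real matrices.

def ry(theta):
    """2x2 matrix of the RY(theta) rotation."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]

def apply_single(state, gate, qubit):
    """Apply a 1-qubit gate to `qubit` (0 = most significant bit) of a 2-qubit state."""
    shift = 1 - qubit
    new = [0.0] * 4
    for idx, amp in enumerate(state):
        in_bit = (idx >> shift) & 1
        for out_bit in range(2):
            out_idx = (idx & ~(1 << shift)) | (out_bit << shift)
            new[out_idx] += gate[out_bit][in_bit] * amp
    return new

def cnot_01(state):
    """CNOT with qubit 0 as control and qubit 1 as target: swaps |10> and |11>."""
    return [state[0], state[1], state[3], state[2]]

def vqnn_probs(features, weights):
    """Class probabilities for two input features and two trainable angles."""
    state = [1.0, 0.0, 0.0, 0.0]  # start in |00>
    for q in range(2):
        state = apply_single(state, ry(features[q]), q)  # data encoding
    state = cnot_01(state)  # entangle the qubits
    for q in range(2):
        state = apply_single(state, ry(weights[q]), q)  # trainable layer
    probs = [a * a for a in state]  # Born rule for real amplitudes
    # Hypothetical readout: |00> -> class 0, |01> -> class 1, |10>,|11> -> class 2
    return [probs[0], probs[1], probs[2] + probs[3]]

print(vqnn_probs([0.3, 1.2], [0.5, -0.4]))
```

The three returned probabilities always sum to one because the gates are unitary. In a full pipeline, a classical optimizer would tune `weights` against a loss on labelled credit records, which is what makes such a model a hybrid quantum-classical one.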

Dataset Impact on Model Interpretability

The study analyzes IQNN-CS models trained on two datasets and finds that model performance, and consequently the effectiveness of the interpretability methods, depends strongly on the dataset. Dataset 1 yields cleaner training dynamics, higher-confidence predictions, and more reliable explanations, while Dataset 2 suffers from noisy convergence, lower confidence, and less consistent explanations. Robust interpretability requires the model to learn a stable and semantically meaningful latent representation; a poorly trained model will yield unreliable explanations regardless of the interpretability method used.
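
A simple way to quantify prediction confidence, and to flag the low-confidence cases that Indecision Detection targets, is a margin check on each predicted probability vector. This sketch assumes confidence means the top-class probability and treats a prediction as indecisive when the gap between its two most likely classes falls below a threshold; the 0.1 threshold, the function names, and the example probabilities are hypothetical and are neither the paper's criterion nor its data.

```python
# Margin-based confidence check on per-class probability vectors.
# ASSUMPTIONS: "confidence" = top-class probability; a prediction is flagged
# as indecisive when the top-two gap is below a hypothetical threshold (0.1).

def confidence_and_margin(probs):
    """Return (top-class probability, gap between the two largest probabilities)."""
    ranked = sorted(probs, reverse=True)
    return ranked[0], ranked[0] - ranked[1]

def mean_confidence(batch):
    """Average top-class probability over a batch of predictions."""
    return sum(confidence_and_margin(p)[0] for p in batch) / len(batch)

def flag_indecision(batch, margin_threshold=0.1):
    """Indices of predictions whose top-two margin falls below the threshold."""
    return [i for i, p in enumerate(batch)
            if confidence_and_margin(p)[1] < margin_threshold]

# Illustrative probabilities only (not results from the paper).
confident = [[0.90, 0.05, 0.05], [0.80, 0.15, 0.05]]
uncertain = [[0.40, 0.35, 0.25], [0.50, 0.30, 0.20]]
print(mean_confidence(confident), mean_confidence(uncertain))
print(flag_indecision(uncertain))  # -> [0]
```

Comparing `mean_confidence` across two trained models mirrors the kind of dataset-level contrast described above, while the per-sample margin points to individual predictions whose explanations deserve extra scrutiny.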

Inter-Class Attribution Alignment (ICAA) and Occlusion Analysis consistently emerge as the most reliable interpretability techniques, particularly when the model is well trained; both provide localized, class-discriminative explanations. Gradient-based methods perform well on stable models, but their reliability degrades with noisy training. Example-Based Attribution reveals inconsistencies between the training and test data, pointing to potential generalization issues, and Indecision Detection identifies instances where the model's predictions are unreliable. The model's latent representation also shows potential clusters and patterns, and the cross-dataset comparison confirms that Dataset 1 produces more confident predictions and more structured attribution patterns.
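
Occlusion Analysis replaces one input feature at a time with a baseline value and records how much the predicted class's probability drops. The sketch below applies that idea to a hypothetical linear-softmax stand-in for the trained classifier; the weights, the all-zero baseline, and the helper names are illustrative assumptions, and a quantum model would simply be passed in as the `model` function instead of `toy_model`.

```python
import math

# Occlusion attribution: replace one feature at a time with a baseline value
# and measure the drop in the predicted class's probability.
# ASSUMPTION: `toy_model` is a hypothetical linear-softmax stand-in for a
# trained classifier; any function mapping features -> class probabilities works.

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_model(x, weights, bias):
    """Linear logits followed by softmax (illustrative only)."""
    return softmax([sum(w * xi for w, xi in zip(row, x)) + b
                    for row, b in zip(weights, bias)])

def occlusion_attribution(model, x, baseline):
    """Return (predicted class, per-feature probability drop under occlusion)."""
    probs = model(x)
    target = max(range(len(probs)), key=probs.__getitem__)
    scores = []
    for j in range(len(x)):
        occluded = list(x)
        occluded[j] = baseline[j]  # mask feature j with its baseline value
        scores.append(probs[target] - model(occluded)[target])
    return target, scores

W = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.5]]  # hypothetical weights
B = [0.0, 0.0, 0.0]

def model(v):
    return toy_model(v, W, B)

target, scores = occlusion_attribution(model, [1.0, 0.2, 0.1], [0.0, 0.0, 0.0])
print(target, scores)
```

Positive scores mark features whose removal hurts the prediction. In this toy case feature 0 dominates, while the small negative scores for features 1 and 2 mean that occluding them slightly increases confidence in the predicted class, because they were supporting competing classes.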

The key takeaway is that the effectiveness of interpretability methods depends strongly on the quality of the training data and the stability of the model. ICAA and Occlusion Analysis are robust techniques, but their reliability is contingent on a well-trained model. Choosing the right interpretability method therefore matters, but so does ensuring that the underlying model can learn meaningful, stable representations.

Interpretable Quantum Neural Networks for Credit Risk

Scientists developed IQNN-CS, an interpretable quantum neural network framework for multiclass credit risk classification, demonstrating a practical path toward transparent and accountable quantum machine learning models for financial decision-making. The architecture combines a variational quantum neural network with post-hoc explanation techniques tailored to structured data. Rather than pursuing performance gains alone, the work makes quantum neural networks suitable for high-stakes financial applications through interpretability-aware design. To quantify how the model distinguishes between credit risk categories, the researchers introduced Inter-Class Attribution Alignment (ICAA), a novel metric that measures attribution divergence across predicted classes and offers insight into the model's decision-making process.
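
The article does not give the ICAA formula, so the following is only one plausible reading of "attribution divergence across predicted classes": average the feature-attribution vectors of all samples predicted as each class, then take the mean pairwise cosine similarity between those class averages. A score near 1 means every class is explained by the same features, and lower values mean more class-discriminative reasoning. The cosine choice, the averaging step, and the name `icaa_sketch` are assumptions, not the authors' definition.

```python
import math
from itertools import combinations

# Hypothetical ICAA-style alignment score (NOT the paper's exact definition).
# ASSUMPTIONS: attributions are per-sample feature vectors from any method
# (e.g. occlusion); classes are compared via cosine similarity of their means.

def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def icaa_sketch(attributions, predicted):
    """Mean pairwise cosine similarity between class-averaged attributions.

    1.0 = all classes rely on the same feature pattern;
    values near 0 = classes are explained by different features.
    """
    by_class = {}
    for attr, cls in zip(attributions, predicted):
        by_class.setdefault(cls, []).append(attr)
    # Average attribution vector for each predicted class.
    means = {cls: [sum(col) / len(rows) for col in zip(*rows)]
             for cls, rows in by_class.items()}
    pairs = list(combinations(sorted(means), 2))
    if not pairs:
        raise ValueError("need samples from at least two predicted classes")
    return sum(cosine(means[a], means[b]) for a, b in pairs) / len(pairs)

# Two classes explained by the same feature pattern -> alignment near 1.
print(icaa_sketch([[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]], [0, 1]))
# Three classes each driven by a different feature -> alignment 0.
print(icaa_sketch([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]], [0, 1, 2]))  # -> 0.0
```

Under this reading, a well-trained model separating credit risk levels would show lower alignment, and a sudden rise toward 1 could signal that the model has stopped reasoning differently about each class even when accuracy still looks acceptable.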

The team evaluated IQNN-CS on two real-world credit datasets, examining training dynamics, attribution behavior, and interpretability, with an emphasis on deployment feasibility in regulated financial settings. Results show stable training and competitive predictive performance, supporting the model's ability to assess credit risk accurately. The framework handles multiclass credit scoring, a significant advance over previous quantum approaches, which focused primarily on binary classification. The research also highlights interpretability, an aspect often overlooked in prior quantum machine learning work, and lays a foundation for deploying transparent, accountable models in regulated financial environments.

Interpretable Credit Scoring with Quantum-Classical Networks

The team developed IQNN-CS, a novel hybrid quantum-classical model for credit scoring that achieves both strong predictive performance and interpretable outputs. Evaluations on two real-world credit datasets demonstrate the model’s high accuracy and reveal clear decision-making patterns through interpretability analysis. A key achievement of this work is the introduction of Inter-Class Attribution Alignment (ICAA), a metric that quantifies how distinctly the model reasons about different classes, providing valuable insight into its internal logic. The researchers found that ICAA effectively identified instances where the model’s reasoning broke down, even when standard performance metrics indicated good results, highlighting the importance of interpretability as a diagnostic tool.

Together with attribution sensitivity and example-based influence tracing, ICAA strengthens the toolkit available for understanding quantum models and builds confidence in their reliability. This work demonstrates that accurate quantum models can also be transparent and trustworthy, paving the way for their deployment in sensitive fields like finance and healthcare as quantum computing technology advances. The authors acknowledge that the model’s performance is dependent on the alignment between data structure and quantum encoding, an area for continued investigation.
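
Example-based influence tracing can be approximated by retrieving the training points closest to a test point in the model's latent space and checking whether their labels agree with the prediction; low agreement is one symptom of the train/test inconsistency that Example-Based Attribution surfaces. This is a nearest-neighbour sketch that assumes Euclidean distance in latent space, with hypothetical function names and toy data, not the paper's procedure.

```python
import math

# Nearest-neighbour influence tracing in a latent space.
# ASSUMPTIONS: latent vectors are available for training and test points,
# Euclidean distance is meaningful there, and k is chosen by hand.

def nearest_training_examples(test_latent, train_latents, k=3):
    """Indices of the k training points closest to the test point."""
    dists = [(math.dist(test_latent, z), i) for i, z in enumerate(train_latents)]
    return [i for _, i in sorted(dists)[:k]]

def label_agreement(test_pred, neighbour_ids, train_labels):
    """Fraction of retrieved neighbours whose label matches the test prediction."""
    matches = sum(train_labels[i] == test_pred for i in neighbour_ids)
    return matches / len(neighbour_ids)

# Toy latent vectors and labels (illustrative only).
train_latents = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
train_labels = [0, 0, 2, 2]
ids = nearest_training_examples([0.02, 0.0], train_latents, k=2)
print(ids, label_agreement(0, ids, train_labels), label_agreement(2, ids, train_labels))
```

If a test sample is predicted as class 2 but its nearest training neighbours are all class 0, the agreement score drops to 0, flagging a prediction that the training data does not support.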

👉 More information

🗞 IQNN-CS: Interpretable Quantum Neural Network for Credit Scoring

🧠 ArXiv: https://arxiv.org/abs/2510.15044