Clinical adoption of advanced vision systems for medical diagnosis is currently limited by a lack of trust: existing methods often produce explanations that are difficult to understand or that fail to capture the complexity of real-world clinical reasoning. Kaushitha Silva, Mansitha Eashwara, and Sanduni Ubayasiri, from the University of Peradeniya, along with Ruwan Tennakoon from RMIT University and Damayanthi Herath, also from the University of Peradeniya, now present BiomedXPro, a framework that automatically generates a diverse set of human-readable prompts for disease diagnosis. The system uses a large language model within an evolutionary search to extract biomedical knowledge and adaptively optimise prompts, and it consistently outperforms current prompt-tuning methods, especially when limited training data is available. Importantly, the research demonstrates a clear connection between the generated prompts and established clinical features, providing a verifiable foundation for predictions and a step towards more reliable and clinically relevant AI systems.

Evolving Interpretable Prompts for Biomedical Imaging

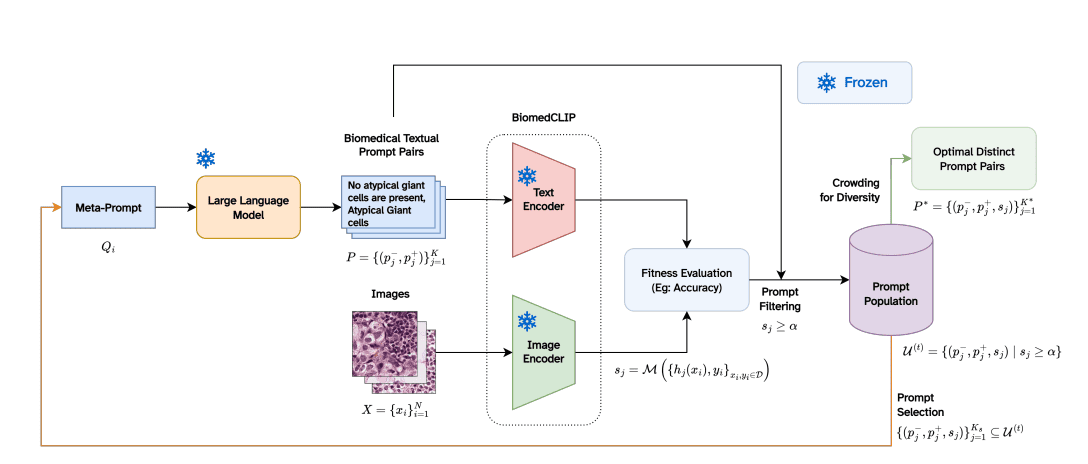

BiomedXPro, a novel evolutionary framework, automatically generates diverse and interpretable prompts for biomedical image classification, addressing limitations found in current prompt-tuning methods. The system leverages a large language model both to extract biomedical knowledge and to adaptively optimise prompts within an evolutionary search process. Experiments across multiple biomedical datasets demonstrate that BiomedXPro consistently outperforms existing state-of-the-art methods, particularly when limited training data is available. Importantly, analysis reveals a strong semantic alignment between the prompts discovered by BiomedXPro and statistically significant clinical features, grounding the model’s predictions in verifiable concepts. This alignment enhances the trustworthiness and interpretability of the system, representing a significant advance towards the safe and reliable deployment of vision-language models in clinical settings. Further validation is still needed on two points: whether the model is sensitive to nuanced details in the prompts, and whether its spatial attention aligns with the features those prompts describe. Rigorous evaluation by clinical experts is also required, and future work will focus on refining clinical alignment and robustness.

BiomedXPro Evolves Interpretable Diagnostic Prompts Automatically

The work presents BiomedXPro, a novel evolutionary framework designed to automatically generate diverse and interpretable prompt pairs for disease diagnosis using biomedical vision-language models. Researchers addressed limitations of existing prompt-tuning methods, which often produce uninterpretable results or fail to capture the multifaceted nature of clinical diagnosis. The core of BiomedXPro lies in its ability to leverage a large language model both as a biomedical knowledge extractor and an adaptive optimiser, enabling the discovery of prompts grounded in verifiable clinical concepts. Experiments conducted on multiple biomedical benchmarks demonstrate that BiomedXPro consistently outperforms state-of-the-art prompt-tuning methods, particularly in data-scarce, few-shot settings. The team formulated prompt discovery as a multi-objective optimisation problem, balancing classification accuracy with prompt diversity to mitigate semantic redundancy. This approach delivers interpretability through clear, medically grounded observations, diversity by maintaining multiple complementary prompts, and clinical trustworthiness by anchoring probabilistic predictions in meaningful medical concepts.
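
The trade-off between accuracy and diversity described above can be illustrated with a small sketch. This is not the authors' objective: the token-overlap similarity, the greedy selection rule, and the `penalty` weight are all assumptions chosen to keep the example self-contained, and the accuracy values are made-up toy numbers.

```python
def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity in [0, 1]; a crude stand-in for semantic similarity."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def select_diverse(scored, k, penalty=0.5):
    """Greedily pick k prompts, trading accuracy against redundancy.

    scored:  list of (prompt_text, accuracy) tuples.
    penalty: hypothetical weight on similarity to prompts already chosen.
    """
    remaining = list(scored)
    chosen = []
    while remaining and len(chosen) < k:
        def utility(item):
            text, acc = item
            # Redundancy is the highest similarity to any prompt already kept.
            redundancy = max((jaccard(text, c[0]) for c in chosen), default=0.0)
            return acc - penalty * redundancy

        best = max(remaining, key=utility)
        chosen.append(best)
        remaining.remove(best)
    return chosen


# Toy candidates: two near-duplicates and one distinct observation.
candidates = [
    ("enlarged nuclei with irregular contours", 0.90),
    ("enlarged nuclei with irregular borders", 0.89),
    ("dense clusters of dark-staining cells", 0.84),
]
picked = select_diverse(candidates, k=2)
```

In this toy run the second candidate is slightly more accurate than the third, but it is skipped because it largely repeats the first, which is the kind of semantic redundancy a diversity term is meant to suppress.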

Evolving Interpretable Prompts for Disease Diagnosis

The study pioneers BiomedXPro, an evolutionary framework designed to automatically generate diverse and interpretable prompts for biomedical disease diagnosis. Unlike methods that optimise single prompts, the team engineered a system that evolves an ensemble of human-readable prompts, each capturing distinct diagnostic observations. This process leverages a large language model, functioning both as a biomedical knowledge extractor and an adaptive optimiser, to iteratively refine prompts through structured feedback. The framework begins by generating an initial population of prompts, then evaluates their performance on a designated biomedical benchmark dataset, assessing how well each prompt guides a vision-language model in identifying disease indicators.
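
To make the idea of an ensemble of prompt pairs concrete, the following sketch scores one image against several (negative, positive) description pairs and averages the results. It is a minimal illustration under stated assumptions: the 3-dimensional vectors stand in for the outputs of a vision-language model's image and text encoders, and the softmax temperature and the weighted averaging are hypothetical choices, not details confirmed by the article.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def pair_probability(image_vec, neg_vec, pos_vec, temperature=0.1):
    """Softmax over the two similarities; returns P(positive description)."""
    s_neg = cosine(image_vec, neg_vec) / temperature
    s_pos = cosine(image_vec, pos_vec) / temperature
    m = max(s_neg, s_pos)  # subtract the max for numerical stability
    e_neg, e_pos = math.exp(s_neg - m), math.exp(s_pos - m)
    return e_pos / (e_neg + e_pos)


def ensemble_probability(image_vec, prompt_pairs, weights=None):
    """Weighted average of per-pair probabilities (assumed aggregation rule)."""
    if weights is None:
        weights = [1.0] * len(prompt_pairs)
    total = sum(weights)
    return sum(
        w * pair_probability(image_vec, neg, pos)
        for w, (neg, pos) in zip(weights, prompt_pairs)
    ) / total


# Toy embeddings standing in for encoder outputs.
image = [0.9, 0.1, 0.2]
pairs = [
    ([0.1, 0.9, 0.0], [1.0, 0.0, 0.1]),  # (negative, positive) description 1
    ([0.0, 0.2, 0.9], [0.8, 0.2, 0.3]),  # (negative, positive) description 2
]
p_disease = ensemble_probability(image, pairs)
```

Because each pair contributes its own probability, a reader can inspect which observation pushed the prediction up or down, which is the interpretability benefit the ensemble design aims for.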

Following evaluation, the team implemented a selection process, prioritising prompts that demonstrate strong diagnostic performance and semantic coherence with established clinical features. These selected prompts then undergo a mutation and crossover phase, inspired by evolutionary algorithms, where elements are combined and altered to create new prompts with potentially improved characteristics. To ensure clinical relevance, the study incorporated a mechanism for grounding prompts in statistically significant clinical features, verifying that the generated prompts align with established medical knowledge.
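
The generate, evaluate, select, and recombine cycle described above can be sketched as a small loop. This is an illustrative skeleton only: in BiomedXPro a large language model proposes new prompts, whereas here `toy_propose` is a hypothetical stand-in that splices and perturbs words, and `toy_fitness` is a made-up score rather than diagnostic accuracy.

```python
import random


def evolve(population, fitness, propose, generations=5, keep=2, seed=0):
    """Elitist evolutionary loop: keep the best prompts, refill with offspring.

    propose(a, b, rng) stands in for the LLM step that combines and alters
    two parent prompts; keep must be at least 2 so that pairs can be sampled.
    """
    rng = random.Random(seed)
    for _ in range(generations):
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[:keep]          # selection
        children = []
        while len(parents) + len(children) < len(population):
            a, b = rng.sample(parents, 2)
            children.append(propose(a, b, rng))  # crossover + mutation
        population = parents + children
    return sorted(population, key=fitness, reverse=True)


# Hypothetical toy setup: fitness counts how many target terms a prompt contains.
VOCAB = ["irregular", "nuclei", "enlarged", "uniform", "clusters", "dense"]
TARGET = {"irregular", "nuclei", "enlarged"}


def toy_fitness(prompt):
    return len(TARGET & set(prompt.split()))


def toy_propose(a, b, rng):
    wa, wb = a.split(), b.split()
    cut = rng.randint(0, min(len(wa), len(wb)))
    child = wa[:cut] + wb[cut:]                  # one-point crossover
    if child:
        child[rng.randrange(len(child))] = rng.choice(VOCAB)  # mutation
    return " ".join(child)


initial = [
    "uniform dense clusters",
    "dense uniform nuclei",
    "clusters dense uniform",
    "uniform clusters dense",
]
final = evolve(initial, toy_fitness, toy_propose)
```

Since the top-ranked prompts are carried over unchanged each generation, the best fitness in the population can never decrease; replacing `toy_propose` with a call to a language model would be the step that brings biomedical knowledge into the search.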

The approach prioritises prompts that are diverse as well as accurate, which mitigates semantic redundancy and makes the diagnostic system more robust. Candidate prompt pairs are assessed by their collective performance on the training data, and the resulting ensembles improve on existing prompt-tuning methods. By offering a verifiable basis for predictions, the work advances interpretable AI for biomedical imaging and points the way towards more trustworthy and clinically aligned diagnostic tools. Because the prompts are human-readable, clinicians can follow the reasoning behind an AI-driven diagnosis, which should foster greater confidence in these technologies.

👉 More information

🗞 BiomedXPro: Prompt Optimization for Explainable Diagnosis with Biomedical Vision Language Models

🧠 ArXiv: https://arxiv.org/abs/2510.15866