Hedging financial risk with options is a cornerstone of modern finance, yet traditional methods often struggle to adapt to rapidly changing market conditions. Zofia Bracha, Paweł Sakowski, and Jakub Michańków, from the University of Warsaw and TripleSun, now demonstrate a new approach using deep reinforcement learning. The team developed an agent that dynamically hedges at-the-money S&P 500 options without relying on a pre-defined model of price behaviour. Trained on nearly two decades of historical market data, the agent consistently outperforms conventional delta hedging, particularly when transaction costs are high or market volatility rises, making it a promising step forward for practical options hedging.

Deep Reinforcement Learning Beats Black-Scholes Hedging

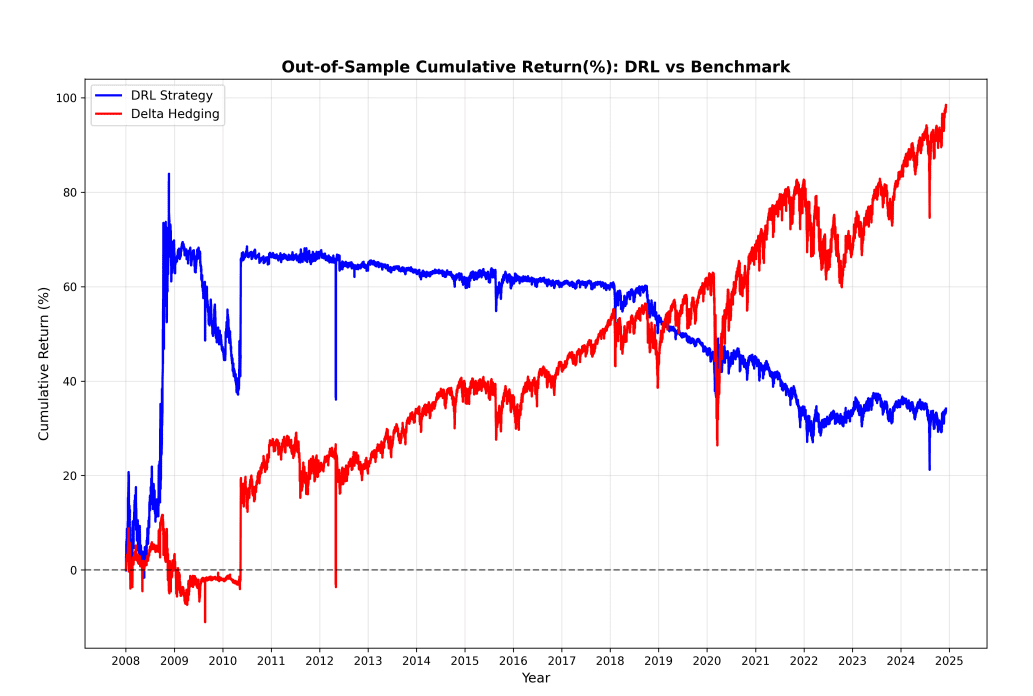

This research presents a deep reinforcement learning (DRL) agent designed to improve on traditional hedging strategies for at-the-money S&P 500 index options. The agent is based on the Twin Delayed Deep Deterministic Policy Gradient (TD3) algorithm and learns to make hedging decisions without an explicit model of price dynamics. It was trained on historical intraday data spanning 2004 to 2024, using six predictor variables: option price, underlying asset price, moneyness, time to maturity, realized volatility, and current hedge position. A walk-forward procedure evaluated the agent out of sample and showed that it consistently outperforms the benchmark Black-Scholes delta-hedging strategy.
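To make the benchmark concrete, here is a minimal sketch of Black-Scholes delta hedging driven by a realized-volatility estimate, two of the inputs named above. It assumes the hedged instrument is a European call, a zero dividend yield, daily prices, and 252 trading days per year; the function names are hypothetical and this is not the authors' code.

```python
import math


def realized_volatility(prices, window, periods_per_year=252):
    """Annualized realized volatility from the last `window` log returns.

    Assumes `prices` are equally spaced (e.g. daily closes).
    """
    if window < 2 or len(prices) < window + 1:
        raise ValueError("need window >= 2 and at least window + 1 prices")
    recent = prices[-(window + 1):]
    log_returns = [math.log(b / a) for a, b in zip(recent[:-1], recent[1:])]
    avg = sum(log_returns) / window
    # Sample variance of log returns, scaled to an annual horizon.
    var = sum((r - avg) ** 2 for r in log_returns) / (window - 1)
    return math.sqrt(var * periods_per_year)


def norm_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def bs_call_delta(spot, strike, tau, rate, sigma):
    """Black-Scholes delta of a European call with no dividends.

    tau is time to maturity in years; sigma is annualized volatility.
    """
    if tau <= 0:
        # At expiry the delta collapses to 1 (in the money) or 0.
        return 1.0 if spot > strike else 0.0
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    d1 = (math.log(spot / strike) + (rate + 0.5 * sigma ** 2) * tau) / (
        sigma * math.sqrt(tau)
    )
    return norm_cdf(d1)


# A delta hedger who is short one call holds bs_call_delta(...) units of the
# underlying and rebalances whenever its inputs change.
prices = [100.0, 101.0, 100.5, 102.0, 101.5, 103.0]
sigma = realized_volatility(prices, window=5)
hedge = bs_call_delta(spot=prices[-1], strike=103.0, tau=30 / 365, rate=0.0, sigma=sigma)
```

For an at-the-money call with a short maturity the delta sits near 0.5, which is why the at-the-money region is where rebalancing (and therefore transaction costs) matters most.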

Performance was assessed with annualized return, annualized volatility, information ratio, and Sharpe ratio. The results point to the agent's robustness and flexibility, particularly in volatile or high-cost trading environments. Experiments incorporating transaction costs and risk-aversion penalties further highlight the agent's adaptability: performance declines as risk aversion increases, yet the agent still outperforms delta hedging. An analysis of volatility estimation windows shows that longer windows produce more stable results, suggesting that a broader historical perspective helps the agent navigate market fluctuations. The work offers a promising alternative to traditional hedging methods and a basis for more sophisticated risk management strategies. Because it learns directly from market data, the agent avoids the limitations of pre-defined models and can adapt to changing market conditions, which could potentially improve market efficiency, reduce trading costs, and enhance risk management for investors.
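The four metrics named above can be computed from a series of periodic strategy returns. The sketch below is one common set of definitions, assuming daily returns, 252 periods per year, a compounded annualized return, and an information ratio measured against a benchmark (for example the delta-hedging strategy). Definitions vary between studies; some define the information ratio simply as annualized return divided by annualized volatility, and the summary does not say which convention the paper uses.

```python
import math
from statistics import mean, stdev


def performance_metrics(strategy_returns, benchmark_returns,
                        periods_per_year=252, risk_free_rate=0.0):
    """Annualized return, volatility, Sharpe ratio and information ratio.

    Returns are simple per-period returns (e.g. 0.01 for +1%).
    """
    n = len(strategy_returns)
    if n < 2 or len(benchmark_returns) != n:
        raise ValueError("need two aligned series with at least 2 returns")

    # Compounded growth over the sample, converted to an annual rate.
    growth = math.prod(1.0 + r for r in strategy_returns)
    ann_return = growth ** (periods_per_year / n) - 1.0

    # Annualized standard deviation of per-period returns.
    ann_vol = stdev(strategy_returns) * math.sqrt(periods_per_year)
    sharpe = (ann_return - risk_free_rate) / ann_vol if ann_vol > 0 else float("nan")

    # Information ratio: annualized mean active return over tracking error.
    active = [s - b for s, b in zip(strategy_returns, benchmark_returns)]
    tracking_error = stdev(active) * math.sqrt(periods_per_year)
    info_ratio = (mean(active) * periods_per_year / tracking_error
                  if tracking_error > 0 else float("nan"))

    return {
        "annualized_return": ann_return,
        "annualized_volatility": ann_vol,
        "sharpe_ratio": sharpe,
        "information_ratio": info_ratio,
    }
```

Passing the agent's returns as `strategy_returns` and the delta hedger's returns as `benchmark_returns` gives a direct, like-for-like comparison of the two strategies.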

Deep Reinforcement Learning Improves Option Hedging

This research demonstrates a successful application of deep reinforcement learning to hedging at-the-money S&P 500 index options, with performance gains over traditional delta hedging. The agent, based on the TD3 algorithm, was trained on nearly two decades of historical market data without relying on a pre-defined model of price behaviour. In backtests, it consistently outperformed delta hedging across market conditions, particularly when transaction costs were high or volatility increased. The study also examined the agent's sensitivity to key parameters and market factors: performance remained robust across different volatility estimation windows but declined as greater weight was placed on risk aversion. These findings highlight the importance of carefully calibrating risk preferences when applying reinforcement learning in financial markets, and further research is needed to tune the risk-aversion parameter for different investor profiles and market scenarios.
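TD3, the algorithm behind the agent, stabilizes actor-critic learning with three devices: twin critics whose minimum forms the learning target, clipped noise added to the target action, and delayed actor and target-network updates. The sketch below shows those pieces for a single scalar action (such as a hedge position). Plain Python callables stand in for the neural networks, and the action bounds and hyperparameter defaults are illustrative assumptions, not the paper's settings.

```python
import random


def td3_target(reward, next_state, done, actor_target, critic1_target,
               critic2_target, gamma=0.99, noise_std=0.2, noise_clip=0.5,
               action_low=-1.0, action_high=1.0, rng=None):
    """Critic learning target y for one transition under TD3."""
    if rng is None:
        rng = random.Random()
    # Target policy smoothing: clipped Gaussian noise on the target action.
    noise = max(-noise_clip, min(noise_clip, rng.gauss(0.0, noise_std)))
    next_action = actor_target(next_state) + noise
    next_action = max(action_low, min(action_high, next_action))
    # Clipped double-Q: take the smaller of the two target critics.
    q_min = min(critic1_target(next_state, next_action),
                critic2_target(next_state, next_action))
    return reward + gamma * (1.0 - float(done)) * q_min


def should_update_actor(step, policy_delay=2):
    """Delayed policy updates: the actor trains every `policy_delay` steps."""
    return step % policy_delay == 0


def soft_update(target_params, online_params, tau=0.005):
    """Polyak averaging of target-network parameters toward the online ones."""
    return [tau * p + (1.0 - tau) * t for t, p in zip(target_params, online_params)]
```

Taking the minimum of two critics counters the overestimation bias of single-critic methods such as DDPG, which is a main reason TD3 tends to be more stable on noisy reward signals like hedging P&L.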

Deep Reinforcement Learning for Dynamic Option Hedging

This research applies deep reinforcement learning (DRL) to dynamically hedging options, a crucial task in financial markets. The team developed a DRL agent to manage risk and maximize profit, moving beyond traditional methods that rely on assumptions about how prices change. The agent learned its hedging decisions from historical intraday data from 2004 to 2024, using inputs such as option price, underlying asset price, time to maturity, and market volatility. A walk-forward analysis then tested the agent on market data it had not seen during training.
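Walk-forward analysis trains on one window of history, tests on the period immediately after it, then rolls both windows forward. A minimal sketch with a fixed-length rolling training window follows; the window sizes are placeholders, and the paper's actual training, validation, and test lengths are not given in this summary.

```python
def walk_forward_splits(n_obs, train_size, test_size, step=None):
    """Yield (train, test) index ranges for a rolling walk-forward scheme.

    Each test window starts right after its training window, so the model
    is always evaluated on data later than anything it was trained on.
    `step` defaults to `test_size`, giving back-to-back test windows.
    """
    step = test_size if step is None else step
    start = 0
    while start + train_size + test_size <= n_obs:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += step


# Example: 10 observations, 4 for training and 2 for testing per fold.
for train, test in walk_forward_splits(10, train_size=4, test_size=2):
    print(list(train), list(test))
```

Because consecutive test windows do not overlap when `step == test_size`, their out-of-sample results can be stitched into one continuous equity curve for comparison with the benchmark.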

Results show that the DRL agent consistently outperforms the widely used Black-Scholes delta-hedging strategy, with its adaptability and robustness most evident in volatile markets or when transaction costs were high. Experiments that penalized risk-taking showed that the agent can adjust to different investor preferences: increasing risk aversion slightly reduced returns, but the agent still delivered better results than traditional methods. Comparing volatility estimation windows showed that longer windows led to more stable and reliable performance.
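One way transaction costs and a risk penalty can enter an agent's objective is through its per-step reward. The sketch below is a hypothetical formulation, not the one used in the paper: the hedger is short one option and holds `new_hedge` units of the underlying, pays a proportional cost on each rebalance, and subtracts a quadratic (mean-variance style) penalty scaled by `risk_aversion`.

```python
def hedging_reward(option_now, option_next, spot_now, spot_next,
                   new_hedge, old_hedge, cost_rate=0.001, risk_aversion=0.0):
    """Per-step reward for a short-option hedger (hypothetical formulation).

    The position is rebalanced from old_hedge to new_hedge at spot_now,
    then held until the next observation.
    """
    # Proportional transaction cost on the traded notional.
    trading_cost = cost_rate * abs(new_hedge - old_hedge) * spot_now
    # P&L of short one option plus new_hedge units of the underlying.
    pnl = -(option_next - option_now) + new_hedge * (spot_next - spot_now) - trading_cost
    # Quadratic penalty discourages large P&L swings in either direction.
    return pnl - 0.5 * risk_aversion * pnl ** 2


# A perfectly offsetting hedge with no rebalancing earns zero reward.
r = hedging_reward(5.0, 5.5, 100.0, 101.0, new_hedge=0.5, old_hedge=0.5)
```

Raising `risk_aversion` only ever lowers the reward for a given P&L, which is consistent with the reported pattern of slightly lower returns under stronger risk penalties.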

👉 More information

🗞 Application of Deep Reinforcement Learning to At-the-Money S&P 500 Options Hedging

🧠 arXiv: https://arxiv.org/abs/2510.09247