Creating accurate depth maps is crucial for applications ranging from robotics to augmented reality, yet achieving this in real-time with multiple camera inputs remains a significant challenge. Hangtian Zhao from the University of Science and Technology of China, Xiang Chen from East China Normal University, and Yizhe Li, along with colleagues, now present FastViDAR, a new framework that efficiently estimates depth from four fisheye cameras. The team achieves this breakthrough by introducing an Alternative Hierarchical Attention mechanism, which intelligently combines information from different viewpoints with minimal computational cost, and a novel depth fusion approach. This innovation enables FastViDAR to generate full depth maps at up to 20 frames per second on standard embedded hardware, demonstrating competitive performance on real-world datasets and paving the way for responsive, 360-degree vision systems.

Researchers address the need for fast and reliable omnidirectional depth perception, which is critical for applications in robotics and autonomous driving. Their approach focuses on efficiently fusing multi-view depth estimates into a single omnidirectional depth map. Cross-view feature mixing is handled by separate intra-frame and inter-frame windowed self-attention, which is cheaper than attending jointly over every token from every view. A novel ERP fusion approach then projects the multi-view depth estimates into a shared equirectangular projection (ERP) coordinate system to produce the final fused depth map.
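The split between intra-frame and inter-frame attention can be illustrated with a minimal NumPy sketch. It is not FastViDAR's implementation and makes several simplifying assumptions: features are laid out as (views, tokens, channels), attention is single-head with no learned query/key/value projections, windows are non-overlapping 1D runs of tokens rather than 2D image windows, and inter-frame attention only mixes tokens at the same position across views. All function names are hypothetical.

```python
import numpy as np

def attention(x: np.ndarray) -> np.ndarray:
    """Single-head scaled dot-product self-attention over axis -2 of x with shape (..., L, C).
    Queries, keys and values are x itself (no learned projections in this sketch)."""
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(x.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)      # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)    # softmax over keys
    return weights @ x

def intra_frame_windowed(feats: np.ndarray, window: int) -> np.ndarray:
    """Tokens attend only to tokens of the same view inside non-overlapping windows."""
    v, n, c = feats.shape
    assert n % window == 0, "token count must be a multiple of the window size"
    return attention(feats.reshape(v, n // window, window, c)).reshape(v, n, c)

def inter_frame(feats: np.ndarray) -> np.ndarray:
    """Each token attends to the tokens at the same position in the other views."""
    per_position = np.swapaxes(feats, 0, 1)             # (n, v, c)
    return np.swapaxes(attention(per_position), 0, 1)   # back to (v, n, c)

def alternating_blocks(feats: np.ndarray, window: int, num_blocks: int = 2) -> np.ndarray:
    """Alternate the two cheap passes, with residual connections (hypothetical layout)."""
    for _ in range(num_blocks):
        feats = feats + intra_frame_windowed(feats, window)
        feats = feats + inter_frame(feats)
    return feats

def scored_pairs(v: int, n: int, window: int) -> tuple[int, int]:
    """Query-key pairs scored by joint attention vs. the two separate passes."""
    joint = (v * n) ** 2
    separate = v * n * window + n * v * v
    return joint, separate

rng = np.random.default_rng(0)
feats = rng.standard_normal((4, 1024, 32))   # 4 fisheye views, 1024 tokens each, 32 channels
out = alternating_blocks(feats, window=64)
print(out.shape, scored_pairs(4, 1024, 64))  # (4, 1024, 32) (16777216, 278528)
```

With four views of 1,024 tokens and 64-token windows, joint attention over all 4,096 tokens scores about 16.8 million query-key pairs, whereas the two separate passes score 278,528, which is the kind of saving this separation is meant to provide.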

Transformer Networks Estimate Omnidirectional Depth Maps

This research introduces OmniVidar, a method for estimating depth from multiple fisheye images, aiming for accurate and efficient depth maps of omnidirectional scenes. The method uses a transformer-based architecture with hierarchical attention to process images from multiple viewpoints. Experiments show that OmniVidar achieves state-of-the-art performance on benchmark datasets covering both synthetic and real-world scenes, and the method is designed for real-time operation, making it suitable for applications such as robotics and augmented reality. The research begins by outlining the importance of three-dimensional scene understanding for robotics, virtual and augmented reality, and self-driving vehicles.

It highlights the difficulty of capturing three-dimensional information with conventional cameras when a wide field of view is required, and proposes using multiple fisheye cameras to capture a complete 360-degree view of the environment. The core problem is therefore accurate and efficient depth estimation from multi-fisheye images, which OmniVidar tackles with its transformer-based architecture and hierarchical attention mechanisms.

The authors review existing methods for three-dimensional reconstruction and depth estimation, grouping them into multi-view stereo, learning-based multi-view stereo, stereo matching, and omnidirectional stereo, and they also consider recent advances in applying transformers to computer vision. This review identifies gaps that OmniVidar aims to fill. The method relies on multi-view geometry to establish correspondences between the fisheye images and uses a cost volume to represent disparity between views, from which depth is estimated.
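For readers unfamiliar with cost volumes, the sketch below shows the idea in its simplest textbook form. It assumes a rectified pinhole stereo pair rather than multi-fisheye images. For every candidate disparity it stores a per-pixel matching cost, picks the lowest-cost disparity (winner-takes-all), and converts disparity to depth with depth = focal × baseline / disparity. Omnidirectional methods often replace the disparity axis with spherical depth hypotheses. Nothing here is taken from the paper, and the function names are hypothetical.

```python
import numpy as np

def build_cost_volume(left: np.ndarray, right: np.ndarray, max_disp: int) -> np.ndarray:
    """Absolute-difference cost volume for a rectified stereo pair.
    cost[d, y, x] compares left[y, x] with right[y, x - d]; out-of-image matches cost inf."""
    h, w = left.shape
    cost = np.full((max_disp, h, w), np.inf)
    for d in range(max_disp):
        cost[d, :, d:] = np.abs(left[:, d:] - right[:, : w - d])
    return cost

def winner_takes_all(cost: np.ndarray) -> np.ndarray:
    """Pick the disparity with the lowest matching cost at every pixel."""
    return np.argmin(cost, axis=0)

def disparity_to_depth(disp: np.ndarray, focal_px: float, baseline_m: float) -> np.ndarray:
    """Pinhole stereo relation depth = focal * baseline / disparity (inf where disparity is 0)."""
    disp = disp.astype(float)
    with np.errstate(divide="ignore"):
        return np.where(disp > 0, focal_px * baseline_m / disp, np.inf)

rng = np.random.default_rng(0)
right = rng.random((16, 40))
left = np.roll(right, 3, axis=1)        # left[y, x] == right[y, x - 3]: true disparity 3 px
disp = winner_takes_all(build_cost_volume(left, right, max_disp=8))
depth = disparity_to_depth(disp, focal_px=300.0, baseline_m=0.1)
print(disp[:, 8:].min(), disp[:, 8:].max(), depth[0, 20])
```

Real learned methods build the volume from deep features and regularize it with a network instead of taking a raw argmin, but the indexing pattern is the same.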

FastViDAR Achieves High-Fidelity Depth Map Fusion

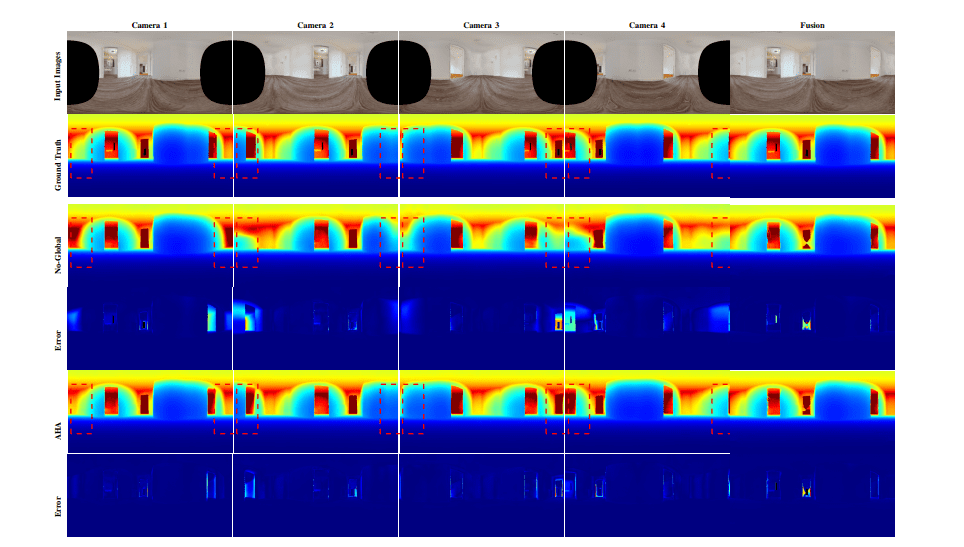

The research team presents FastViDAR, a framework that generates full depth maps from four fisheye cameras and outputs per-camera depth, fused depth, and confidence estimates. Experiments show that the Alternative Hierarchical Attention (AHA) mechanism significantly improves performance, reducing the Absolute Relative error to 0.111 and the RMSE to 0.384 while keeping 0.904 of pixels within the 1.25 accuracy threshold.

The team also introduces a novel ERP fusion approach, which projects multi-view depth estimates onto a shared equirectangular coordinate system to create the final fused depth map. Comparative tests on the 2D-3D-S dataset show that FastViDAR achieves an Absolute Relative error of 0.119, an RMSE of 0.433, and a Log10 error of 0.046, with 92.9% of pixels falling within the 1.25 accuracy threshold.
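The error figures above are standard depth-estimation metrics. The sketch below uses their common definitions: mean absolute relative error, root-mean-square error, mean absolute log10 error, and the fraction of pixels whose ratio max(pred/gt, gt/pred) is below 1.25. The paper's exact evaluation protocol, such as depth caps, validity masks, or any ERP weighting, is not stated in this article and is not reproduced. The function name is hypothetical.

```python
import numpy as np

def depth_metrics(pred: np.ndarray, gt: np.ndarray, min_depth: float = 1e-3) -> dict:
    """Common depth-estimation metrics over pixels with valid ground truth."""
    valid = gt > min_depth                       # skip pixels with missing ground truth
    p = np.clip(pred[valid], min_depth, None)   # avoid log/divide problems in predictions
    g = gt[valid]
    ratio = np.maximum(p / g, g / p)
    return {
        "abs_rel": float(np.mean(np.abs(p - g) / g)),
        "rmse": float(np.sqrt(np.mean((p - g) ** 2))),
        "log10": float(np.mean(np.abs(np.log10(p) - np.log10(g)))),
        "delta1": float(np.mean(ratio < 1.25)),   # fraction within the 1.25 threshold
    }

gt = np.array([[1.0, 2.0], [4.0, 0.0]])     # 0.0 marks a pixel without ground truth
pred = np.array([[1.1, 2.0], [2.0, 5.0]])
print(depth_metrics(pred, gt))
```

AbsRel and the 1.25 threshold are scale-relative, while RMSE is in the depth units of the data, which is why both kinds of numbers are usually reported together.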

The 2D-3D-S results demonstrate that the ERP fusion strategy produces accurate and consistent depth maps.

To ensure robust depth estimation, the researchers developed an ERP-weighted loss function that combines a data term and a multi-scale gradient term and weights them by the cosine of the latitude. In an equirectangular image every row has the same number of pixels, but rows near the poles cover a much smaller area of the sphere than rows near the equator, so the cos(latitude) weight prevents the densely sampled poles from dominating the loss. This weighting consistently improves stability.

The team validated the framework on the HM3D and 2D-3D-S datasets, achieving competitive zero-shot performance on real datasets and processing times as low as 36 milliseconds on NVIDIA Jetson Orin NX embedded hardware. Together, this efficiency and accuracy represent a significant advance in multi-view depth estimation.
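A minimal sketch of a latitude-weighted loss of this kind follows, assuming NumPy depth arrays in equirectangular layout with row 0 at the north pole. The article only specifies a data term, a multi-scale gradient term, and cos(latitude) weighting. The L1 data term, the gradient-of-residual form, the power-of-two subsampling, and `grad_weight` are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np

def latitude_weights(height: int, width: int) -> np.ndarray:
    """cos(latitude) per ERP pixel; rows run from the north pole (top) to the south pole."""
    lat = np.pi / 2 - (np.arange(height) + 0.5) * np.pi / height  # row-centre latitudes
    return np.repeat(np.cos(lat)[:, None], width, axis=1)

def erp_weighted_loss(pred: np.ndarray, gt: np.ndarray,
                      num_scales: int = 4, grad_weight: float = 0.5) -> float:
    """Hypothetical ERP-weighted loss: weighted L1 data term + multi-scale gradient term."""
    weight = latitude_weights(*gt.shape)
    valid = (gt > 0).astype(float)  # ignore pixels without ground truth

    # Data term: cos(latitude)-weighted mean absolute error over valid pixels.
    wv = weight * valid
    data = np.sum(wv * np.abs(pred - gt)) / max(wv.sum(), 1e-8)

    # Gradient term: penalise differences of the residual between neighbours,
    # on progressively subsampled grids (steps 1, 2, 4, ...).
    residual = (pred - gt) * valid
    grad = 0.0
    for s in range(num_scales):
        step = 2 ** s
        r, m, w = residual[::step, ::step], valid[::step, ::step], weight[::step, ::step]
        # Horizontal neighbours wrap around, since longitude is periodic in ERP.
        gx = np.abs(np.roll(r, -1, axis=1) - r) * m * np.roll(m, -1, axis=1) * w
        # Vertical neighbours do not wrap; use the mean weight of the two rows.
        gy = np.abs(r[1:] - r[:-1]) * m[1:] * m[:-1] * 0.5 * (w[1:] + w[:-1])
        grad += (gx.sum() + gy.sum()) / max((m * w).sum(), 1e-8)
    return float(data + grad_weight * grad / num_scales)

gt = np.ones((8, 16))
pole_err, equator_err = gt.copy(), gt.copy()
pole_err[0, 5] += 1.0      # unit error in the top (near-pole) row
equator_err[4, 5] += 1.0   # the same error just below the equator
print(erp_weighted_loss(pole_err, gt) < erp_weighted_loss(equator_err, gt))  # True
```

In this 8-row grid the top row sits at latitude 78.75° and carries a weight of about 0.2, against about 0.98 just below the equator, so the identical near-pole error contributes far less to the loss. Wrapping the horizontal differences avoids an artificial seam at longitude ±180°.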

Realtime Omnidirectional Depth Estimation with FastViDAR

FastViDAR represents a significant advancement in omnidirectional depth estimation, delivering real-time performance from multi-fisheye camera setups. Its attention design fuses information across camera views efficiently, reducing computational demands while maintaining accuracy. The team also proposed a new ERP fusion technique that projects depth estimates onto a shared coordinate system, improving the overall quality of the final depth map.
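The projection step can be illustrated with a NumPy sketch built on assumptions the article does not state: an equidistant fisheye model (image radius = focal × angle from the optical axis) with the principal point at the image centre, depth measured as distance along each ray, nearest-pixel splatting into the ERP grid, and a confidence-weighted average wherever several cameras land on the same ERP pixel. The toy camera layout (four cameras yawed 90° apart) and all function names are hypothetical.

```python
import numpy as np

def equidistant_rays(h: int, w: int, focal_px: float) -> np.ndarray:
    """Unit ray per pixel for an equidistant fisheye (radius = focal_px * theta).
    Camera frame: x right, y down, z forward; principal point at the image centre."""
    v, u = np.mgrid[0:h, 0:w].astype(float)
    x, y = u - (w - 1) / 2, v - (h - 1) / 2
    theta = np.hypot(x, y) / focal_px          # angle from the optical axis
    phi = np.arctan2(y, x)                     # azimuth around the optical axis
    return np.stack([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)], axis=-1)  # (h, w, 3)

def yaw_rotation(angle: float) -> np.ndarray:
    """Rotation about the vertical (y) axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def fuse_to_erp(cams, erp_h: int, erp_w: int) -> np.ndarray:
    """cams: iterable of (depth, conf, rays, R, t); depth is distance along each ray.
    Returns the confidence-weighted mean range per ERP pixel (0 where nothing lands)."""
    num = np.zeros((erp_h, erp_w))
    den = np.zeros((erp_h, erp_w))
    for depth, conf, rays, R, t in cams:
        pts = (rays * depth[..., None]).reshape(-1, 3) @ R.T + t   # camera -> rig frame
        dist = np.linalg.norm(pts, axis=1)                         # range from rig centre
        lon = np.arctan2(pts[:, 0], pts[:, 2])                     # 0 = rig forward
        lat = np.arcsin(np.clip(-pts[:, 1] / np.maximum(dist, 1e-9), -1.0, 1.0))  # up is +
        col = ((lon + np.pi) / (2 * np.pi) * erp_w).astype(int) % erp_w
        row = np.clip(((np.pi / 2 - lat) / np.pi * erp_h).astype(int), 0, erp_h - 1)
        c = conf.reshape(-1)
        np.add.at(num, (row, col), c * dist)   # unbuffered accumulation handles collisions
        np.add.at(den, (row, col), c)
    return np.where(den > 0, num / np.maximum(den, 1e-12), 0.0)

# Toy rig: four cameras yawed 90 degrees apart at the rig centre, scene = sphere of radius 5 m.
rays = equidistant_rays(64, 64, focal_px=19.0)   # about +/-95 degrees across the image width
cams = [(np.full((64, 64), 5.0), np.ones((64, 64)), rays, yaw_rotation(a), np.zeros(3))
        for a in np.deg2rad([0, 90, 180, 270])]
fused = fuse_to_erp(cams, erp_h=32, erp_w=64)
```

Because every camera's points are moved into the rig frame before binning, the same code handles cameras offset from the rig centre; the toy example uses `t = 0` only so that the expected fused range (5 m everywhere) is known exactly.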

Evaluations on the HM3D and 2D-3D-S datasets show that FastViDAR achieves competitive performance without requiring extensive fine-tuning, and it runs at up to 20 frames per second on standard embedded hardware. The method improves depth consistency and accuracy across all spatial directions and supports flexible camera configurations, making it well suited to applications that require full 360-degree depth perception. Although FastViDAR is trained on a relatively limited dataset, its performance rivals that of methods trained on much larger datasets, highlighting the effectiveness of the proposed attention and fusion techniques. The authors note that further research could explore optimization for other hardware platforms and the incorporation of semantic information to enhance depth estimation.

👉 More information

🗞 FastViDAR: Real-Time Omnidirectional Depth Estimation via Alternative Hierarchical Attention

🧠 ArXiv: https://arxiv.org/abs/2509.23733