Real-time object detection continues to advance rapidly, and a team led by Ranjan Sapkota of Cornell University, together with Rahul Harsha Cheppally and Ajay Sharda of Kansas State University, now presents YOLO26, a significant step forward. Their study details the key architectural enhancements in this newest member of the You Only Look Once (YOLO) family, which is engineered to perform well on edge and low-power devices. The innovations include end-to-end inference without non-maximum suppression (NMS), a new label assignment strategy, and an optimisation algorithm inspired by large language model training. Through benchmarking on NVIDIA Jetson Orin platforms and comparisons against previous YOLO versions, the researchers show that YOLO26 improves efficiency, accuracy, and deployment versatility, marking a notable advance in real-time object detection.

The research traces steady gains in speed, accuracy, and efficiency across YOLO versions and identifies several directions for future work. Multimodal learning integrates data from sources such as vision and language, for example through Large Vision-Language Models, to improve detection performance. Semi-supervised and self-supervised learning exploit unlabeled data to build more robust models and reduce the need for large labeled datasets. Attention mechanisms, such as those used in recent YOLO versions, help the network focus on the most relevant features in an image, improving both accuracy and efficiency.

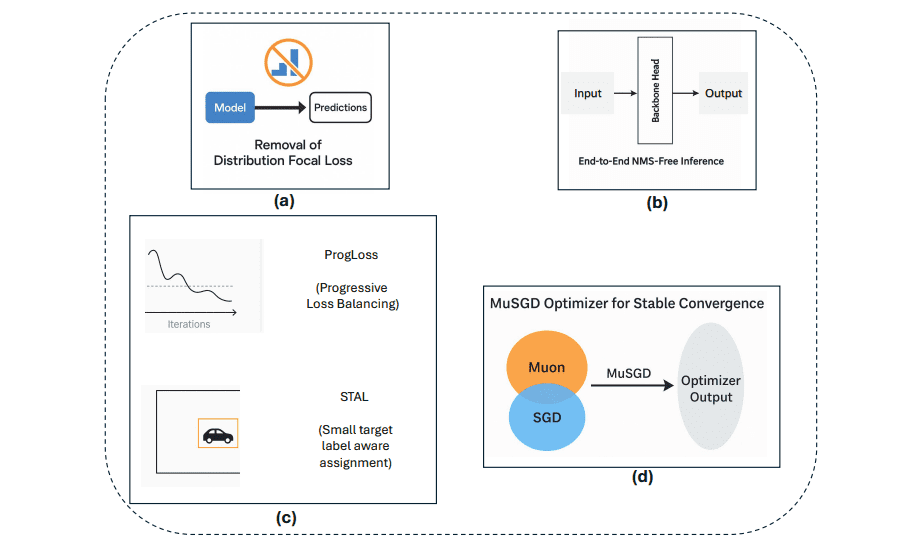

Researchers are also exploring hypergraph-enhanced visual perception, which uses hypergraphs to model complex relationships between objects and so improve visual understanding. Resource-aware optimization uses quantization and efficient architectures to run YOLO models on constrained hardware such as FPGAs and other edge devices, which is crucial for applications like autonomous driving and robotics. More broadly, the field emphasizes deploying YOLO models across diverse hardware platforms, including GPUs, FPGAs, and edge devices, and the study discusses techniques for optimizing them. YOLO26's key innovations include end-to-end NMS-free inference, which streamlines processing, and the removal of Distribution Focal Loss, which simplifies model export. To improve training stability and detection accuracy, particularly for small objects, the team introduced ProgLoss and Small-Target-Aware Label Assignment. Inspired by advances in large language model training, the study also adopted the MuSGD optimizer to accelerate learning and improve model performance.
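
Quantization, mentioned above as a route onto FPGAs and edge devices, maps floating-point weights to low-precision integers. The sketch below shows generic symmetric per-tensor INT8 quantization in plain Python. It is a textbook scheme assumed for illustration, not the quantization pipeline used with YOLO26, and the function names are hypothetical.

```python
# Generic symmetric per-tensor INT8 quantization (illustrative assumption,
# not YOLO26's actual export or quantization pipeline).
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to int8 codes in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, which would otherwise give scale 0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Recover approximate floats; rounding error is about scale / 2 at most."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.52, -1.27, 0.003, 0.9, -0.41]
    q, s = quantize_int8(w)
    w_hat = dequantize_int8(q, s)
    print("codes:", q, "scale:", s)
    print("max abs error:", max(abs(a - b) for a, b in zip(w, w_hat)))
```

Storing one byte per weight instead of four cuts weight storage roughly fourfold relative to FP32. Production toolchains typically add per-channel scales and calibration data, which this sketch omits.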

To benchmark YOLO26 comprehensively, the researchers collected a custom dataset in agricultural environments using a machine vision camera and labeled the objects manually to provide ground truth for evaluation. All models were trained under identical conditions, and results are reported as precision, recall, accuracy, F1 score, and mean Average Precision (mAP). The evaluation also measured inference speed and pre- and post-processing times, giving a holistic assessment of YOLO26's efficiency.

The architectural enhancements target efficiency and accuracy, particularly on edge and low-power devices. End-to-end NMS-free inference eliminates a common source of latency and simplifies the detection pipeline, while removing Distribution Focal Loss (DFL) streamlines model export and reduces computational demands. Incorporating ProgLoss and Small-Target-Aware Label Assignment (STAL) improved both training stability and the detection of small objects.
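
The precision, recall, F1, and mAP figures reported above follow standard definitions. The minimal sketch below assumes each detection has already been matched to ground truth at some IoU threshold and marked as a true or false positive. It computes all-point interpolated AP, one common convention; the benchmark's exact evaluation code may differ, and the function names are illustrative.

```python
# Standard detection metrics from already-matched detections (sketch).
# Assumption: matching to ground truth (e.g. IoU >= 0.5) happened upstream.
from typing import List, Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and their harmonic mean (F1) from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(scores: List[float], is_tp: List[bool], num_gt: int) -> float:
    """All-point interpolated AP for one class: area under the P-R curve."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp_cum = fp_cum = 0
    recalls, precisions = [], []
    for i in order:  # walk detections from most to least confident
        if is_tp[i]:
            tp_cum += 1
        else:
            fp_cum += 1
        recalls.append(tp_cum / num_gt)
        precisions.append(tp_cum / (tp_cum + fp_cum))
    # Pad the curve, then make precision non-increasing from right to left.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for k in range(len(mpre) - 2, -1, -1):
        mpre[k] = max(mpre[k], mpre[k + 1])
    # Sum rectangle areas; steps where recall does not change add zero.
    return sum((mrec[k + 1] - mrec[k]) * mpre[k + 1] for k in range(len(mrec) - 1))


def mean_average_precision(per_class_ap: List[float]) -> float:
    """mAP is the mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap) if per_class_ap else 0.0


if __name__ == "__main__":
    print(precision_recall_f1(tp=3, fp=1, fn=1))  # (0.75, 0.75, 0.75)
    print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4))  # 0.625
```

The COCO-style mAP@0.5:0.95 variant additionally averages AP over IoU thresholds from 0.5 to 0.95 in steps of 0.05.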

Furthermore, the MuSGD optimizer, inspired by techniques used in large language model training, speeds convergence and improves performance. Together, these changes address limitations of previous iterations and establish YOLO26 as a pivotal milestone in the evolution of YOLO. By removing the dependence on NMS and DFL, the team produced a streamlined architecture optimized for resource-constrained environments.
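
To see what dropping DFL removes, compare two ways a box head can output the four distances from an anchor point to the box edges. With DFL (as in YOLOv8), each side is predicted as a discrete distribution over bins and decoded as its expected value, which requires a softmax per side in the exported graph; without it, the head regresses the four distances directly. The sketch assumes 16 bins per side (`REG_MAX`, the YOLOv8 default) and anchor points in grid-cell units. All names are hypothetical and this is not YOLO26's code.

```python
# Contrast DFL-style decoding with direct regression of box distances (sketch).
# Assumptions: REG_MAX = 16 bins per side and anchors in grid-cell units.
import math
from typing import List, Sequence, Tuple

REG_MAX = 16  # hypothetical here: number of discrete bins per box side


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dfl_side_distance(logits: Sequence[float]) -> float:
    """DFL-style: distance = expected bin index under the predicted distribution."""
    return sum(i * p for i, p in enumerate(softmax(logits)))


def decode_box(anchor: Tuple[float, float], ltrb: Sequence[float],
               stride: float) -> Tuple[float, float, float, float]:
    """Turn (left, top, right, bottom) distances into pixel (x1, y1, x2, y2)."""
    ax, ay = anchor
    l, t, r, b = ltrb
    return ((ax - l) * stride, (ay - t) * stride, (ax + r) * stride, (ay + b) * stride)


def decode_with_dfl(anchor: Tuple[float, float], side_logits: List[Sequence[float]],
                    stride: float) -> Tuple[float, float, float, float]:
    # 4 * REG_MAX raw outputs per anchor, plus one softmax per side.
    assert len(side_logits) == 4 and all(len(s) == REG_MAX for s in side_logits)
    return decode_box(anchor, [dfl_side_distance(s) for s in side_logits], stride)


def decode_direct(anchor: Tuple[float, float], ltrb: Sequence[float],
                  stride: float) -> Tuple[float, float, float, float]:
    # DFL-free: only 4 raw outputs per anchor, used as distances directly
    # (a real head may apply an activation to keep them non-negative).
    return decode_box(anchor, ltrb, stride)
```

The direct path needs a quarter of the regression outputs and no per-side softmax, which is one reason a DFL-free head is simpler to export to runtimes with limited operator support.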

Simplified Training and Faster Object Detection

YOLO26 represents a substantial advancement in real-time object detection, building upon the strengths of the YOLO series while introducing key architectural innovations and a strong focus on practical deployment. Researchers achieved a streamlined design by removing the Distribution Focal Loss module and eliminating the need for non-maximum suppression, simplifying bounding box regression and broadening hardware compatibility. These changes enable end-to-end inference, reducing latency and simplifying the deployment pipeline. Further improvements stem from the introduction of Progressive Loss Balancing and Small-Target-Aware Label Assignment during training, which stabilize learning and enhance accuracy, particularly for small objects.
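
The difference between a conventional pipeline and NMS-free inference shows up in post-processing. The sketch contrasts greedy NMS, which repeatedly keeps the highest-scoring box and discards boxes that overlap it, with an NMS-free decode that only thresholds scores and keeps the top-k. It assumes, as in other NMS-free detectors such as YOLOv10, that training with one-to-one label assignment teaches the head to emit a single box per object. The thresholds and function names are illustrative assumptions.

```python
# Greedy NMS versus NMS-free top-k decoding (illustrative sketch).
from typing import List, Sequence

Box = Sequence[float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], scores: List[float], iou_thr: float = 0.5) -> List[int]:
    """Classic post-processing: a data-dependent loop over all candidates."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_thr]
    return keep


def nms_free_decode(scores: List[float], conf_thr: float = 0.25, max_det: int = 300) -> List[int]:
    """End-to-end style: no pairwise IoU checks, just a threshold and top-k."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [i for i in order if scores[i] >= conf_thr][:max_det]


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
    print(greedy_nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]: box 1 overlaps box 0
    # A one-to-one head would ideally score the duplicate low in the first place.
    print(nms_free_decode([0.9, 0.1, 0.7]))    # [0, 2]
```

Because the NMS-free path avoids the data-dependent suppression loop, its post-processing cost is simpler and more predictable, which helps on accelerators and export formats that handle dynamic control flow poorly.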

A novel MuSGD optimizer accelerates convergence and improves training stability, yielding a detector that is both more accurate and more efficient. In benchmark comparisons, YOLO26 outperforms previous YOLO versions and narrows the performance gap with transformer-based detectors, delivering competitive accuracy at significantly higher throughput on standard hardware. The team stresses that a key contribution of the work is its focus on deployment: the architecture is deliberately optimized for real-world use and is compatible with a wide range of export formats, easing integration into diverse applications. The researchers also highlight the model's robustness to quantization, which enables deployment even in resource-constrained environments.
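
The article says only that MuSGD is inspired by large language model training. Assuming it blends a Muon-style update (momentum whose matrix is approximately orthogonalized by a Newton-Schulz iteration, a technique used in some recent LLM training) with an ordinary SGD-momentum update, one step could look like the sketch below. The mixing weight `mix`, the learning rate, and the cubic Newton-Schulz variant (Muon itself uses tuned quintic coefficients) are illustrative assumptions, not the published MuSGD definition, and `musgd_like_step` is a hypothetical name.

```python
# Hypothetical MuSGD-like step on one weight matrix (pure Python sketch).
# Assumption: update = mix * orthogonalized momentum + (1 - mix) * plain momentum.
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def newton_schulz_orthogonalize(g: Matrix, steps: int = 30) -> Matrix:
    """Approximate the orthogonal polar factor of g with the cubic iteration
    X <- 1.5 X - 0.5 X X^T X (converges when singular values lie in (0, sqrt 3))."""
    norm = math.sqrt(sum(v * v for row in g for v in row)) or 1.0
    x = [[v / norm for v in row] for row in g]  # now every singular value is <= 1
    for _ in range(steps):
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * x[i][j] - 0.5 * xxtx[i][j] for j in range(len(x[0]))]
             for i in range(len(x))]
    return x


def musgd_like_step(w: Matrix, grad: Matrix, buf: Matrix, lr: float = 0.02,
                    momentum: float = 0.9, mix: float = 0.5) -> Tuple[Matrix, Matrix]:
    """One hypothetical hybrid step; returns (new weights, new momentum buffer)."""
    # Standard heavy-ball momentum accumulation.
    buf = [[momentum * b + g for b, g in zip(brow, grow)] for brow, grow in zip(buf, grad)]
    ortho = newton_schulz_orthogonalize(buf)  # Muon-style direction
    new_w = [[wv - lr * (mix * o + (1 - mix) * b) for wv, o, b in zip(wrow, orow, brow)]
             for wrow, orow, brow in zip(w, ortho, buf)]
    return new_w, buf
```

Orthogonalizing the momentum equalizes the update's magnitude across directions, which is the property Muon-style optimizers rely on; the plain SGD term keeps part of the raw gradient scale in the step.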

👉 More information

🗞 YOLO26: Key Architectural Enhancements and Performance Benchmarking for Real-Time Object Detection

🧠 ArXiv: https://arxiv.org/abs/2509.25164