Collecting large video datasets for a specific research purpose is slow and labor-intensive. Yidan Zhang, Mutian Xu, and Yiming Hao, along with colleagues, address this problem by introducing VC-Agent, an interactive agent designed to streamline customized video collection. The system interprets user requests and incorporates feedback to retrieve and scale up relevant video clips with minimal input. By combining existing multi-modal technologies with newly developed filtering policies that adapt to ongoing user interaction, VC-Agent accelerates dataset creation, and the authors also establish a new benchmark for personalized video collection. User studies and experiments support its effectiveness and efficiency.

The work introduces a new Personalized Video Collection Benchmark (PVB) containing three distinct domains, each with five specific requirements meticulously outlined for video content, encompassing categories, actions, shot types, component specifications, and content restrictions. Researchers rigorously annotated 10,000 videos across these domains, precisely labeling whether each video met each of the five requirements, ensuring a high-quality benchmark for evaluation. Experiments demonstrate that VC-Agent significantly outperforms existing video retrieval methods and multi-modal large language models (MLLMs) on the PVB.

The team measured performance using Intersection-over-Union (IoU), achieving results of 64.82, 60.58, 56.23, 52.95, and 49.17 for progressively more complex requirement sets. In comparison, leading MLLMs achieved substantially lower IoU scores, demonstrating VC-Agent’s superior ability to accommodate complex and detailed demands. Further analysis reveals that traditional video retrieval methods struggle with the multitude of specific demands inherent in customized dataset collection, while VC-Agent excels at handling finer details. Ablation studies confirm the effectiveness of key modules within VC-Agent, with performance gains becoming more significant as the complexity of requirements increases. While leveraging MLLMs introduces a computational cost, the team demonstrates that this trade-off is justified by the significantly improved performance and generalization capability of the new agent.
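As a rough illustration of the IoU metric mentioned above, the sketch below computes a set-based IoU between the clips a method collects and the clips annotated as meeting the requirements. The article does not spell out the exact PVB evaluation protocol, so the function names (`relevant_ids`, `set_iou`), the annotation layout, and the rule that a video counts as relevant only when it meets all of the first k requirements are assumptions made for this example.

```python
def relevant_ids(annotations, num_requirements):
    """Return the IDs of videos that satisfy the first `num_requirements` requirements.

    `annotations` maps a video ID to a list of booleans, one per requirement
    (a hypothetical layout, chosen for this sketch).
    """
    return {
        video_id
        for video_id, flags in annotations.items()
        if all(flags[:num_requirements])
    }


def set_iou(selected, relevant):
    """Intersection-over-Union between two collections of video IDs."""
    selected, relevant = set(selected), set(relevant)
    union = selected | relevant
    if not union:
        # Convention for this sketch: two empty sets score 0.0.
        return 0.0
    return len(selected & relevant) / len(union)


# Toy annotations: three videos, two requirements each.
annotations = {
    "a": [True, True],
    "b": [True, False],
    "c": [False, True],
}

# With only the first requirement active, videos "a" and "b" are relevant.
relevant = relevant_ids(annotations, num_requirements=1)
score = set_iou({"a", "c"}, relevant)
print(f"IoU = {score:.4f}")
```

Raising `num_requirements` shrinks the relevant set, which is one plausible reading of why scores fall as the requirement sets grow more complex.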

Interactive Video Dataset Construction with VC-Agent

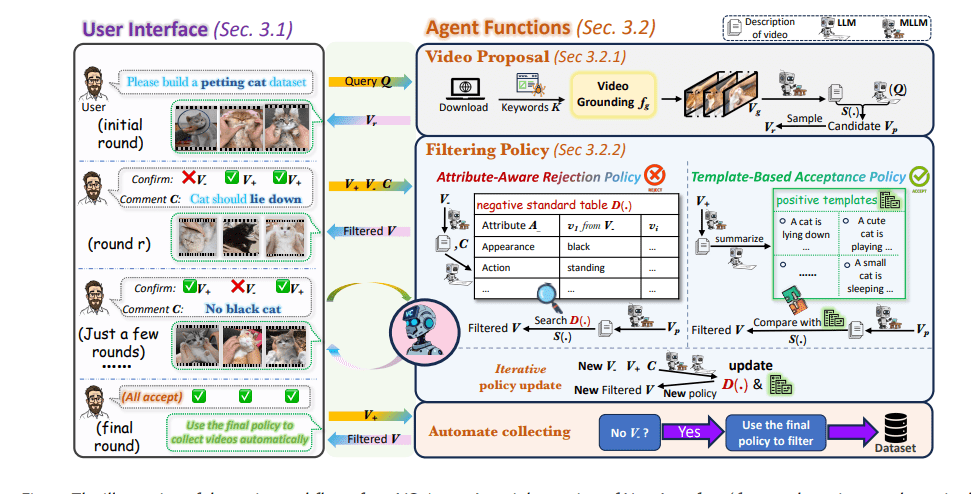

Scientists developed VC-Agent, an interactive system designed to efficiently construct customized video datasets, addressing the challenges of time-consuming and labor-intensive data collection. The system combines a user-friendly interface with an intelligent agent capable of understanding and responding to user feedback, ultimately scaling up relevant video clips with minimal input. The core of the system lies in its ability to translate user requirements into actionable video retrieval and refinement processes, significantly reducing the effort required to build specialized datasets. The research team engineered a system with three primary modes of user interaction: initial query, confirmation, and comment.

Users begin by providing a broad query, which triggers an initial search and filtering of videos from public platforms. Subsequently, the system presents multiple video samples to the user, who confirms acceptable and unacceptable clips, forming acceptance and rejection sets. This confirmation process directly informs the agent’s learning and refinement of its search criteria. Furthermore, users can provide specific comments on rejected videos, detailing preferences like “No black cat” or “Cat should lie down”, enriching the agent’s understanding of nuanced requirements. The back-end of VC-Agent leverages multimodal large language models (MLLMs) integrated with a self-updatable filtering policy for decision-making.
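The three interaction modes described above (initial query, confirmation, comment) can be pictured as a small record that accumulates user feedback. This is a minimal sketch, not the authors' implementation: the class name `FeedbackSession`, its fields, and its methods are hypothetical and exist only to show how acceptance sets, rejection sets, and comments relate to each other.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackSession:
    """Hypothetical container for one user's feedback on a query."""

    query: str
    accepted: set = field(default_factory=set)
    rejected: set = field(default_factory=set)
    comments: dict = field(default_factory=dict)  # clip ID -> list of comments

    def confirm(self, clip_id, ok):
        """Confirmation mode: place a clip in the acceptance or rejection set."""
        if ok:
            self.accepted.add(clip_id)
            self.rejected.discard(clip_id)
            # Comments only make sense for rejected clips in this sketch.
            self.comments.pop(clip_id, None)
        else:
            self.rejected.add(clip_id)
            self.accepted.discard(clip_id)

    def comment(self, clip_id, text):
        """Comment mode: attach a free-text reason to a rejected clip."""
        if clip_id not in self.rejected:
            raise ValueError("comments are attached to rejected clips only")
        self.comments.setdefault(clip_id, []).append(text)


session = FeedbackSession(query="cat videos")
session.confirm("clip-1", ok=True)
session.confirm("clip-2", ok=False)
session.comment("clip-2", "No black cat")
print(session.accepted, session.rejected, session.comments)
```

Keeping comments keyed by rejected clip mirrors the article's description, where comments elaborate on why a clip was rejected.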

Initially, the system retrieves raw video clips based on the user’s query and employs a video grounding model to select the most relevant segments. Crucially, the research team introduced an innovative filtering policy that iteratively refines the selection process based on user feedback. Accepted videos serve as positive templates for updating the acceptance policy, while rejected videos and associated comments inform the refinement of rejection criteria. This continuous learning loop ensures the agent progressively aligns with the user’s specific needs, delivering an optimal match between requested content and retrieved videos.
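The feedback loop described above can be sketched as a policy with two rule lists that grow as the user responds. This is a toy illustration under stated assumptions: in VC-Agent the judgments are made by MLLMs, whereas here a simple substring match (`keyword_judge`) stands in for the model, and the names `FilterPolicy`, `update`, and `apply_policy` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FilterPolicy:
    """Hypothetical dual policy: acceptance rules and rejection rules as text."""

    accept_rules: list = field(default_factory=list)
    reject_rules: list = field(default_factory=list)

    def update(self, accept=(), reject=()):
        """Add new rules derived from accepted clips and rejection comments."""
        for rule in accept:
            if rule not in self.accept_rules:
                self.accept_rules.append(rule)
        for rule in reject:
            if rule not in self.reject_rules:
                self.reject_rules.append(rule)


def keyword_judge(caption, rule):
    """Stand-in for a model judgment: does the caption mention the rule text?"""
    return rule.lower() in caption.lower()


def apply_policy(policy, captions, judge=keyword_judge):
    """Keep clips that match no rejection rule and at least one acceptance rule."""
    kept = []
    for clip_id, caption in captions.items():
        if any(judge(caption, rule) for rule in policy.reject_rules):
            continue
        if policy.accept_rules and not any(
            judge(caption, rule) for rule in policy.accept_rules
        ):
            continue
        kept.append(clip_id)
    return kept


captions = {
    "v1": "a white cat lying on a sofa",
    "v2": "a black cat jumping",
    "v3": "a dog running",
}

policy = FilterPolicy()
policy.update(accept=["cat"])        # learned from accepted clips
first_round = apply_policy(policy, captions)

policy.update(reject=["black cat"])  # learned from a rejection comment
second_round = apply_policy(policy, captions)
print(first_round, second_round)
```

Checking rejection rules before acceptance rules is a design choice of this sketch: an explicit user objection overrides a clip's similarity to previously accepted examples.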

Rapid Video Dataset Construction with Agents

VC-Agent targets the efficient construction of customized video datasets, responding to the data demands implied by scaling laws and the labor-intensive nature of traditional collection methods. Researchers have developed an interactive agent capable of understanding user queries and feedback, then retrieving and scaling up relevant video clips with minimal user input. The system combines existing multi-modal large language models and large language models within a novel pipeline that uses textual descriptions for video proposals and a dual-policy mechanism (acceptance and rejection) for filtering. Extensive experiments demonstrate that VC-Agent substantially reduces the time required to build high-quality video datasets, typically requiring just a few minutes of user interaction.

The team successfully used the agent to create ten large-scale video datasets, pushing the boundaries of customized dataset construction and offering a versatile tool for diverse research areas. Researchers acknowledge that increased user effort can correlate with more complex requirements, potentially increasing the difficulty for the system to accurately interpret and fulfill requests. Future work may focus on refining the agent’s ability to handle increasingly nuanced and detailed user specifications, further streamlining the dataset creation process.

👉 More information

🗞 VC-Agent: An Interactive Agent for Customized Video Dataset Collection

🧠 ArXiv: https://arxiv.org/abs/2509.21291