Reinforcement learning increasingly powers the capabilities of large language models, driving the advances in reasoning and coding seen in models such as GPT-o, DeepSeek-R1, and Kimi-K1.5. Yuzhen Zhou from Carnegie Mellon University, Jiajun Li, and Yusheng Su, together with colleagues including Gowtham Ramesh from Advanced Micro Devices, Inc., and Zilin Zhu and Xiang Long from LMSYS Org, address a critical bottleneck hindering progress: the computational expense of generating rollouts during training. Their research introduces Active Partial Rollouts in Reinforcement Learning, or APRIL, a method that improves efficiency by over-provisioning rollout requests and recycling incomplete responses. This approach keeps a few lengthy responses from stalling the whole batch, so GPUs stay consistently engaged. The authors report up to 44% higher rollout throughput, faster convergence, and up to 8% higher final accuracy across various tasks. APRIL is independent of framework and hardware, as demonstrated by its integration with the slime RL framework and its compatibility with AMD GPUs, which makes it a substantial step towards scalable and efficient reinforcement learning.

Large Language Model Reinforcement Learning Frameworks

A range of projects and frameworks are advancing reinforcement learning for large language models. DAPO provides an open-source system for scaling reinforcement learning, while slime offers a high-performance post-training framework designed for efficient scaling. LlamaRL presents a distributed, asynchronous framework for large-scale language model training, and another system focuses on reinforcement learning optimization with a user-friendly scaling library. Further research explores Group Sequence Policy Optimization (GSPO) and systems like StreamRL, which aims to make reinforcement learning for language models scalable and elastic, alongside methods for optimizing training with reinforcement learning from human feedback (RLHF).

Specific optimization techniques are also being developed. SortedRL accelerates training through online length-aware scheduling, and researchers are investigating how scaling test-time compute can be more effective than increasing model parameters. SpecExec offers massively parallel speculative decoding for interactive language model inference on consumer devices, while other work focuses on learning to reason under off-policy guidance and on leveraging off-policy reinforcement learning. Infrastructure and system-level optimizations are also crucial, with projects like Orca, a distributed serving system for transformer-based models, and advancements in fully sharded data parallel (FSDP) training.

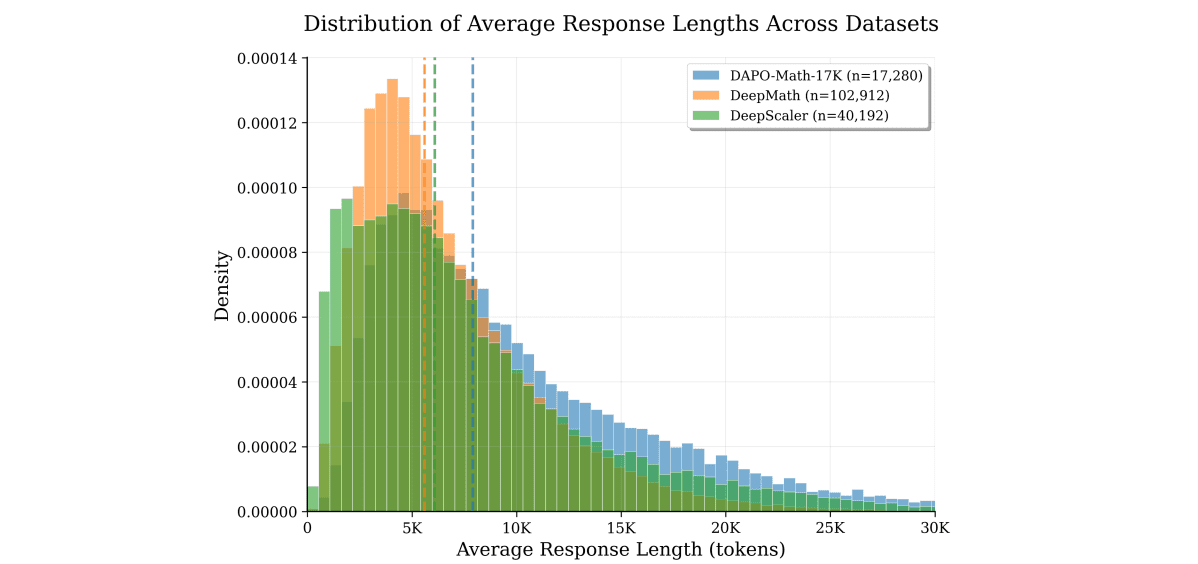

SGLang provides efficient execution of structured language model programs, contributing to overall system performance. Researchers are also publishing technical reports detailing model architectures, such as the Qwen3 model. Foundational work continues on key algorithms, including statistical gradient-following algorithms for connectionist reinforcement learning and investigations into the secrets of reinforcement learning from human feedback in large language models. Other relevant projects include Transformer Reinforcement Learning (TRL).

Traditional methods often suffer from low GPU utilization because the time it takes to generate rollouts varies significantly, forcing faster sequences to wait for the longest one to complete. To overcome this, the team engineered a system that deliberately over-provisions rollout requests to inference engines, sending more requests than the standard batch size requires. Once the target number of completed rollouts is reached, the system actively terminates any remaining unfinished sequences, minimizing idle GPU time. The unfinished sequences are not thrown away: their partial outputs are kept so that generation can continue in a later step.
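To make the long-tail stall concrete, here is a minimal sketch under a toy assumption: each request occupies its own slot and decodes one token per tick. The function names and the response lengths are hypothetical and are not taken from the paper or from slime.

```python
def sync_idle_fraction(lengths):
    """Fraction of slot-time spent idle when a batch waits for its longest sequence.

    Toy assumption: one slot per sequence, one token decoded per tick.
    """
    if not lengths:
        raise ValueError("lengths must be non-empty")
    longest = max(lengths)
    busy = sum(lengths)               # ticks in which a slot is actually decoding
    total = longest * len(lengths)    # ticks reserved until the whole batch finishes
    return 1.0 - busy / total


def overprovisioned_step_time(lengths, target):
    """Ticks until `target` requests have finished when all requests run concurrently.

    With over-provisioning, the step ends at the target-th shortest completion
    instead of at the longest one.
    """
    if not 0 < target <= len(lengths):
        raise ValueError("target must be between 1 and len(lengths)")
    return sorted(lengths)[target - 1]


# Hypothetical response lengths in tokens, with one long-tail outlier.
batch = [100, 120, 150, 2000]
print(sync_idle_fraction(batch))

# Launch 6 requests but stop as soon as 4 of them are complete.
oversampled = [100, 120, 150, 2000, 130, 1800]
print(overprovisioned_step_time(oversampled, target=4))
```

In this toy batch the single 2000-token response leaves the other three slots waiting for most of the step, while the over-provisioned variant ends as soon as the fourth-shortest response finishes. Real inference engines batch and schedule requests differently, so the numbers only illustrate the shape of the effect.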

By recycling these partial results rather than discarding them, APRIL systematically alleviates the long-tail effect of varying rollout lengths, which matters because rollout generation currently dominates reinforcement learning training time. Experiments show that APRIL improves rollout throughput by at least 20%, and by up to 44%, across commonly used reinforcement learning algorithms, including GRPO, DAPO, and GSPO, and across various large language models. It also converges faster and increases final accuracy by approximately 2% to 5% across tasks, with gains of up to 8%.

The system is designed for broad compatibility: it has been integrated into the slime reinforcement learning framework and deployed on both NVIDIA and AMD GPUs. The authors present the method as a step towards more efficient training pipelines and anticipate that the principles behind APRIL will inspire further advances in adaptive rollout strategies and in reinforcement learning frameworks that fully utilize modern hardware capabilities.

👉 More information

🗞 APRIL: Active Partial Rollouts in Reinforcement Learning to tame long-tail generation

🧠 ArXiv: https://arxiv.org/abs/2509.18521