Replicating the success of large language models (LLMs) in industrial search and recommendation systems remains difficult, and many current approaches offer only modest improvements over existing deep learning methods. Sunhao Dai, Jiakai Tang, Jiahua Wu, and colleagues address this limitation with OnePiece, a framework that brings two of the mechanisms behind LLM performance, context engineering and multi-step reasoning, into industrial ranking pipelines. Rather than simply adopting transformer architectures, the work enriches input data with contextual cues and enables iterative refinement of results, an approach previously underexplored in commercial applications. Deployed in Shopee’s personalized search system, OnePiece delivers gains in key business metrics, including higher gross merchandise value per user and increased advertising revenue, a notable step forward for industrial recommendation systems.

The breakthroughs of LLMs stem not only from their architecture, but also from two complementary mechanisms: context engineering, which enriches input queries with contextual cues, and multi-step reasoning, which iteratively refines outputs through intermediate reasoning steps.

Contextual Recommendation with Diverse Information Sources

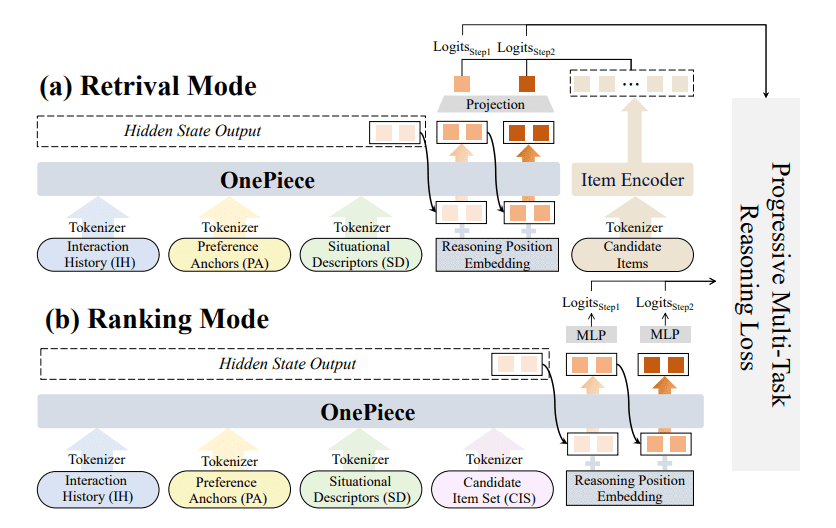

OnePiece is a recommendation and search ranking framework designed for industrial applications. It improves accuracy by integrating diverse contextual information, moving beyond simple user-item interactions to consider past user actions, long-term user preferences, current situational details, and the candidate items under consideration. The system represents all of these components as a single sequence, which allows the model to learn relationships between them. OnePiece then applies a multi-step reasoning process in which each step refines the user representation by querying different information sources, compressing the context while progressively enriching the representation. The framework operates in two stages: a retrieval stage filters a large pool of items down to potential candidates, and a ranking stage refines that candidate list and predicts the relevance of each item. Attention visualizations indicate that the model learns hierarchical and diversified reasoning strategies: different parts of the model focus on different aspects of the input, with strong cross-component interactions.
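To make the "single sequence" idea concrete, here is a minimal sketch of how the four contextual components described above might be flattened into one tagged sequence. This is an illustration only, not the authors' implementation: the `Token` type, the `build_input_sequence` function, the segment names, the component ordering, and the example feature strings are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Token:
    segment: str  # which contextual component the token came from
    value: str    # hypothetical feature payload, e.g. an item ID


def build_input_sequence(
    history: List[str],
    preferences: List[str],
    situation: List[str],
    candidates: List[str],
) -> List[Token]:
    """Concatenate the contextual components into one segment-tagged sequence.

    The ordering used here is an assumption made for illustration.
    """
    segments = [
        ("history", history),          # past user actions
        ("preference", preferences),   # long-term user preferences
        ("situation", situation),      # current situational details
        ("candidate", candidates),     # items being considered
    ]
    return [Token(name, value) for name, values in segments for value in values]


seq = build_input_sequence(
    history=["item_12", "item_7"],
    preferences=["brand_a"],
    situation=["query:running shoes"],
    candidates=["item_31", "item_44"],
)
```

Because every token carries its segment tag, a downstream model could in principle attend across components, for example relating a candidate item to both a past interaction and the current query, which is the kind of cross-component interaction the attention visualizations are reported to show.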


LLM Ranking via Context and Reasoning

This work presents OnePiece, a framework that integrates principles from large language models into industrial ranking systems. The researchers start from the observation that simply transplanting Transformer architectures yields only incremental improvements over existing Deep Learning Recommendation Models. They instead focus on two mechanisms behind LLM success, context engineering and multi-step reasoning, and adapt both for ranking tasks. OnePiece does this through structured context engineering, which augments user interaction history with preference and scenario signals, and block-wise latent reasoning, which refines representations through iterative processing. The framework was validated through deployment in Shopee’s personalized search, where it consistently improved key business metrics, including GMV/UU (gross merchandise value per user) and advertising revenue. Future research directions include more sophisticated methods for context construction and further refinement of the multi-step reasoning process. Overall, the work marks a significant step towards unlocking the potential of LLM-inspired techniques in industrial-scale recommendation and search systems.
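The iterative refinement behind multi-step latent reasoning can be sketched in a few lines. The toy below is a loose illustration under strong assumptions, not the OnePiece architecture: it has no learned parameters, uses a "block" of a single vector per step, starts from a mean-pooled state, and mixes each update with a fixed 0.5 weight. The names `attend` and `multi_step_reasoning` are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def attend(query: Vector, keys: List[Vector]) -> Vector:
    """Softmax dot-product attention of one query over keys (keys double as values)."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [
        sum(w * key[d] for w, key in zip(weights, keys)) / total
        for d in range(len(query))
    ]


def multi_step_reasoning(context: List[Vector], num_steps: int = 3) -> List[Vector]:
    """Return one refined state per step.

    Each step attends over the context plus all earlier step states,
    then blends the result into the running state.
    """
    state = [sum(col) / len(context) for col in zip(*context)]  # mean-pooled start
    states: List[Vector] = []
    for _ in range(num_steps):
        visible = context + states  # earlier reasoning states stay visible
        update = attend(state, visible)
        state = [0.5 * s + 0.5 * u for s, u in zip(state, update)]
        states.append(state)
    return states


states = multi_step_reasoning([[1.0, 0.0], [0.0, 1.0]], num_steps=3)
```

The point of the sketch is the control flow: each step re-queries the context with the current state, and later steps can also see what earlier steps produced, so the representation is refined progressively rather than computed in one pass.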

👉 More information

🗞 OnePiece: Bringing Context Engineering and Reasoning to Industrial Cascade Ranking System

🧠 ArXiv: https://arxiv.org/abs/2509.18091