Vision-Language-Action (VLA) models are increasingly important for complex robotic tasks, but their computational demands limit practical deployment, prompting researchers to look for ways to accelerate them. Hanzhen Wang, Jiaming Xu, and Jiayi Pan of Shanghai Jiao Tong University, together with Yongkang Zhou and Guohao Dai of Shanghai Jiao Tong University and Infinigence-AI, address this challenge with a new pruning technique. Their work shows that existing pruning methods, which consider only the current action, overlook useful information from previous actions and can suffer significant drops in success rate. The team introduces SpecPrune-VLA, which prunes visual tokens using both the current context and the history of previous actions. It achieves average speedups of 1.46× on an NVIDIA A800 and 1.57× on a GeForce RTX 3090 with minimal loss of success rate, a significant step towards more efficient and deployable VLA models.

Token pruning, a technique for accelerating Vision-Language-Action (VLA) models, selectively removes less important input tokens (here, visual tokens) to reduce computation and improve processing speed. Current pruning methods often ignore the context provided by previous actions, which limits their effectiveness and can reduce success rates. This research identifies a strong correlation between consecutive actions and introduces an approach that combines local and global information for more informed token selection: SpecPrune-VLA.

Two-Stage Token Pruning for VLA Models

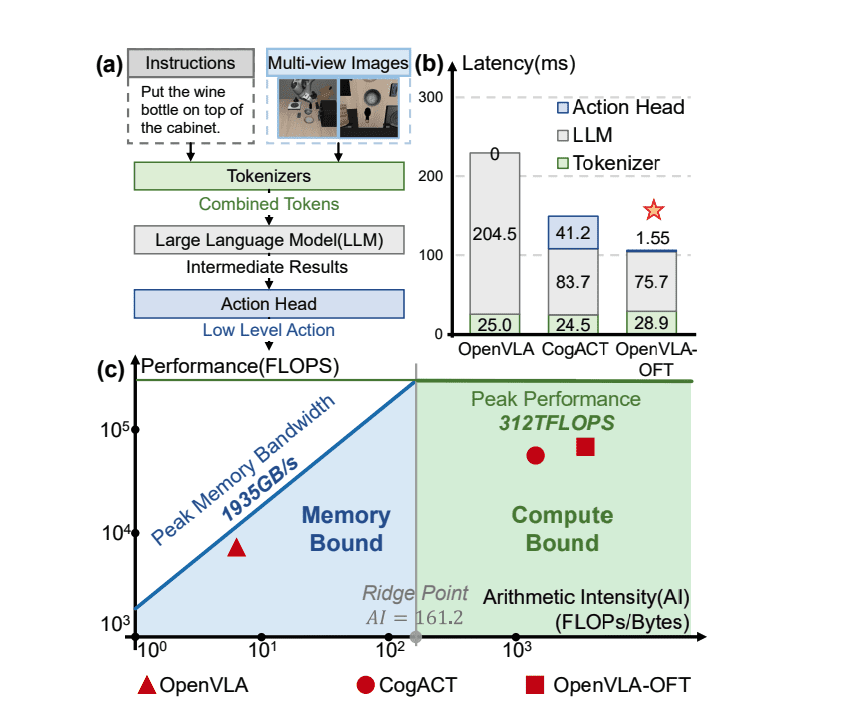

This research details a method for accelerating and compressing Vision-Language-Action (VLA) models, enabling more efficient robotic manipulation. The core idea is to prune tokens (image patches) during processing, reducing computational cost without significantly affecting performance. The team introduces a two-stage approach: Static Token Pruning and Dynamic Token Pruning. Static Token Pruning identifies and retains only the most relevant image patches, removing less important tokens according to a predefined threshold and pruning rate. Dynamic Token Pruning then refines the selection at specific layers, using temporal dynamics to identify and remove redundant information.
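The two stages can be pictured with a minimal plain-Python sketch. The function names `static_prune` and `layer_prune`, the assumption that each visual token already carries a single importance score, and the specific way the threshold and pruning rate are combined are all illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List, Sequence


def static_prune(scores: Sequence[float], prune_rate: float, threshold: float) -> List[int]:
    """Hypothetical static stage: return indices of visual tokens to keep.

    A token is a candidate only if its score reaches `threshold`; at most
    ceil(n * (1 - prune_rate)) candidates are kept, highest scores first.
    """
    n = len(scores)
    budget = math.ceil(n * (1.0 - prune_rate))
    candidates = [i for i, s in enumerate(scores) if s >= threshold]
    # Rank candidates by importance and cut to the token budget.
    candidates.sort(key=lambda i: scores[i], reverse=True)
    # Return in original order so token positions stay monotonic.
    return sorted(candidates[:budget])


def layer_prune(kept: Sequence[int], layer_scores: Sequence[float], keep_frac: float) -> List[int]:
    """Hypothetical dynamic stage: drop more tokens at one layer.

    `layer_scores[j]` is this layer's importance for the j-th surviving token.
    """
    k = max(1, math.ceil(len(kept) * keep_frac))
    order = sorted(range(len(kept)), key=lambda j: layer_scores[j], reverse=True)
    return sorted(kept[j] for j in order[:k])
```

For example, `static_prune([0.9, 0.1, 0.5, 0.05, 0.7, 0.3], 0.5, 0.2)` keeps tokens `[0, 2, 4]`, and a later `layer_prune([0, 2, 4], [0.2, 0.8, 0.6], 0.5)` narrows them to `[2, 4]`. Splitting the work this way means the cheap, coarse cut happens once per action, while the layer-level cut can react to what each layer finds important.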

A key insight is that task-relevant image patches and dynamic (changing) image patches provide complementary information, and focusing on their intersection helps pinpoint the most critical regions. Object boundaries change markedly over time, making them reliable indicators of important areas. Experiments show that the method achieves significant speedups and compression rates on several datasets without substantial performance degradation, outperforming the unpruned OpenVLA-OFT baseline and prior acceleration methods such as VLA-Cache and EfficientVLA.
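The intersection idea can be illustrated with a small sketch that flags "dynamic" patches by frame differencing and then intersects them with a set of task-relevant patches. Measuring change as the mean absolute pixel difference per patch, and the names `dynamic_patches` and `critical_patches`, are assumptions made for illustration; the paper's actual criterion may differ.

```python
from typing import List, Set

Frame = List[List[float]]  # grayscale image: rows of pixel intensities


def dynamic_patches(prev: Frame, curr: Frame, patch: int, thresh: float) -> Set[int]:
    """Indices of patches whose mean absolute pixel change exceeds `thresh`.

    Patches are numbered row-major over a (H // patch) x (W // patch) grid.
    """
    rows, cols = len(curr) // patch, len(curr[0]) // patch
    moving = set()
    for pr in range(rows):
        for pc in range(cols):
            total = 0.0
            for y in range(pr * patch, (pr + 1) * patch):
                for x in range(pc * patch, (pc + 1) * patch):
                    total += abs(curr[y][x] - prev[y][x])
            if total / (patch * patch) > thresh:
                moving.add(pr * cols + pc)
    return moving


def critical_patches(task_relevant: Set[int], moving: Set[int]) -> Set[int]:
    """Patches that are both task-relevant and changing over time."""
    return task_relevant & moving
```

With a 4×4 frame split into 2×2 patches, a bright change confined to the top-right patch marks only patch `1` as dynamic; if the task-relevant set is `{0, 1}`, the intersection is `{1}`. Using the intersection rather than the union is what keeps the retained set small.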

Global and Local Context Improves VLA Speed

Scientists have developed SpecPrune-VLA, a method for accelerating Vision-Language-Action (VLA) models that achieves significant speedups without compromising performance. Existing token-pruning techniques rely mainly on local information from the current step, which leads either to substantial drops in success rate or to limited acceleration. SpecPrune-VLA instead combines local and global context for token selection. The researchers observed a strong correlation between consecutive action generations, which allows information from previous steps to be reused to prune redundant tokens more effectively.
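One simple way to picture "local plus global" selection is to keep the union of the tokens that mattered most in the previous action step and those that matter most in the current step's early layers. The sketch below assumes both signals are available as per-token attention scores; the function names and the top-k union rule are hypothetical, not taken from the paper.

```python
from typing import List, Sequence, Set


def top_k(scores: Sequence[float], k: int) -> Set[int]:
    """Indices of the k largest scores (ties broken by lower index)."""
    order = sorted(range(len(scores)), key=lambda i: (-scores[i], i))
    return set(order[:k])


def select_tokens(prev_step_attn: Sequence[float],
                  curr_shallow_attn: Sequence[float],
                  k_global: int, k_local: int) -> List[int]:
    """Hypothetical selector: union of globally and locally important tokens.

    prev_step_attn: per-token scores reused from the previous action (global history).
    curr_shallow_attn: per-token scores from the current step's early layers (local context).
    """
    keep = top_k(prev_step_attn, k_global) | top_k(curr_shallow_attn, k_local)
    return sorted(keep)
```

For instance, `select_tokens([0.5, 0.1, 0.3, 0.1], [0.1, 0.6, 0.2, 0.1], 2, 1)` returns `[0, 1, 2]`: tokens 0 and 2 come from history, and token 1 is rescued by the current step. Because consecutive actions are strongly correlated, the reused history is cheap and usually accurate, while the local term catches what has newly become relevant.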

The team implemented a two-level pruning scheme. Static pruning at the action level reduces the number of visual tokens using global history and local context. Dynamic pruning at the layer level then prunes tokens according to layer-specific importance, letting the model adaptively refine its computational focus. In addition, a lightweight action-aware controller classifies each action as coarse-grained or fine-grained and adjusts pruning aggressiveness accordingly, since fine-grained actions are more sensitive to pruning. Experiments on NVIDIA A800 and GeForce RTX 3090 GPUs show an average speedup of 1.46× on the A800 and 1.57× on the RTX 3090, with negligible impact on task success rates. Profiling indicates that VLA inference is primarily compute-bound: computation, rather than memory access, is the main bottleneck, so reducing the number of tokens directly reduces the dominant cost.
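The action-aware controller can be sketched as a rule that prunes less when the robot is making small, precise movements. Here an action counts as fine-grained when the magnitude of its end-effector translation falls below a threshold; that criterion, the constants, and the function names are hypothetical placeholders rather than values reported by the authors.

```python
import math
from typing import Sequence

# Hypothetical settings; the real controller's criteria and ratios may differ.
SPEED_THRESHOLD = 0.01   # translation magnitude per step below which motion is fine-grained
COARSE_KEEP_RATIO = 0.4  # aggressive pruning during large, coarse movements
FINE_KEEP_RATIO = 0.8    # conservative pruning during small, precise movements


def is_fine_grained(translation: Sequence[float], threshold: float = SPEED_THRESHOLD) -> bool:
    """Treat an action as fine-grained when its translation magnitude is small."""
    return math.sqrt(sum(d * d for d in translation)) < threshold


def keep_ratio(translation: Sequence[float]) -> float:
    """Fraction of visual tokens to retain for the next action."""
    return FINE_KEEP_RATIO if is_fine_grained(translation) else COARSE_KEEP_RATIO
```

A large move such as `[0.05, 0.0, 0.0]` yields a keep ratio of 0.4, while a small adjustment such as `[0.001, 0.002, 0.0]` yields 0.8. Keeping the controller to a few arithmetic operations matters because any overhead it adds eats directly into the speedup that pruning buys.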

Selective Pruning Accelerates Robotic Vision-Language Models

This research introduces SpecPrune-VLA, a new method for accelerating Vision-Language-Action models used in robotics. Existing pruning techniques often fail to maintain performance because they rely solely on information from the current action, ignoring valuable context from previous steps. SpecPrune-VLA addresses this by selecting tokens using both local and global information, making pruning more efficient and effective. Experiments on a simulation benchmark show that SpecPrune-VLA runs on average 1.46× faster on NVIDIA A800 GPUs and 1.57× faster on GeForce RTX 3090 GPUs without compromising the success rate of robotic tasks. The method prunes tokens at two levels, adjusts pruning aggressiveness to the type of action being performed, and retains the data most important for accurate task completion. The authors acknowledge that all experiments were conducted in simulation and that real-world deployment may present additional challenges. Future work will focus on validating the method on physical robotic platforms to confirm its performance in more complex scenarios.

👉 More information

🗞 SpecPrune-VLA: Accelerating Vision-Language-Action Models via Action-Aware Self-Speculative Pruning

🧠 ArXiv: https://arxiv.org/abs/2509.05614