Understanding how open quantum systems evolve in space and time presents a significant challenge in modern information science, and accurately modelling these dynamics is computationally expensive. Dounan Du and Eden Figueroa, from Stony Brook University and Brookhaven National Laboratory, address this problem by introducing a novel neural network architecture that learns the spatiotemporal behaviour of these complex systems. Their method incorporates fundamental principles of physics to achieve efficient scaling, and importantly, allows for control over the system’s evolution through external parameters. The team demonstrates that this approach not only predicts system behaviour with high accuracy, even under unforeseen conditions, but also accelerates simulations by up to three orders of magnitude compared to traditional numerical methods, paving the way for real-time optimisation of quantum technologies and scalable modelling of complex light-matter interactions.

Neural Networks Simulate Quantum Memory Processes

The paper's bibliography reflects a growing trend in quantum information science: the application of neural networks, particularly Transformer architectures, to simulating and modelling quantum systems. Much of the cited research uses machine learning to understand and predict the behaviour of quantum memories and networks, areas crucial for advancing quantum technologies. The foundational works among them provide the context for the later integration of machine learning techniques, and the references show a clear progression towards using neural networks for complex simulations. They detail the development of physics-informed neural networks and neural operators, which can learn mappings between function spaces and solve the partial differential equations that describe physical systems.

This builds towards the core focus: Transformers, originally developed for natural language processing, are now being adapted to model physical systems, including quantum-mechanical ones, with architectures such as axial attention and Swin Transformers being explored. Recent references focus specifically on Transformers for quantum simulation, using these networks to approximate the ground states of quantum systems, simulate quantum dynamics, and build neural quantum propagators. Together, this work explores how machine learning can accelerate simulations, improve accuracy, and ultimately help control quantum systems, with the aim of overcoming the computational limitations that currently constrain quantum technology.

Physics-Informed Neural Networks for Quantum Dynamics

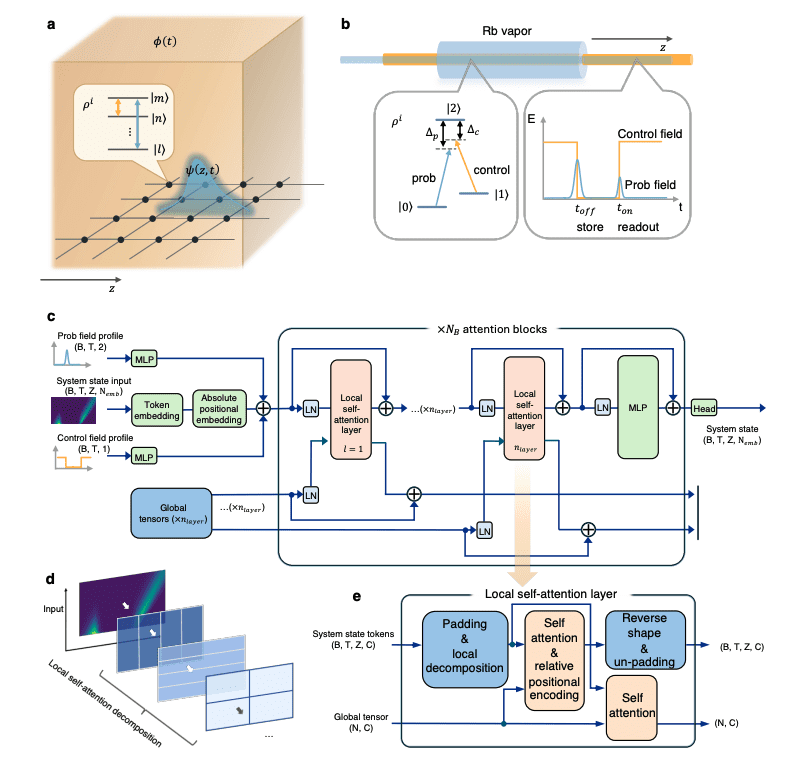

Scientists have developed a novel neural network architecture to model the complex dynamics of open quantum systems, overcoming computational limitations of traditional methods. The approach learns the spatiotemporal relationships within these systems, enabling significantly faster predictions without sacrificing accuracy. This innovative method bypasses the need to directly solve intricate equations governing system evolution, instead learning the dynamics from data and achieving speed-ups of up to three orders of magnitude, while incorporating translational invariance to improve scalability. To validate the approach, researchers generated synthetic data representing a driven, dissipative single qubit and a quantum memory based on electromagnetically induced transparency in rubidium-87 atoms.
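As an illustration of how synthetic data for the driven, dissipative single qubit could be produced, the sketch below integrates a standard Lindblad master equation with a fixed-step Runge-Kutta scheme. The article does not state the equations, solver, or parameter values the researchers used, so the Hamiltonian convention, the names `omega`, `delta`, and `gamma`, and the function names are all assumptions made for this example.

```python
import numpy as np

# Basis ordering (an assumption of this sketch): index 0 = ground, 1 = excited.
SX = np.array([[0, 1], [1, 0]], dtype=complex)   # Pauli X, couples the two levels
SM = np.array([[0, 1], [0, 0]], dtype=complex)   # lowering operator |g><e|
PE = np.array([[0, 0], [0, 1]], dtype=complex)   # excited-state projector |e><e|


def lindblad_rhs(rho, omega, delta, gamma):
    """Time derivative of rho for a driven qubit with spontaneous decay.

    omega: Rabi frequency of the drive, delta: detuning, gamma: decay rate
    (hypothetical parameter names, all in the same angular-frequency units).
    """
    h = 0.5 * omega * SX - delta * PE
    unitary = -1j * (h @ rho - rho @ h)
    jump = SM @ rho @ SM.conj().T
    anti = SM.conj().T @ SM
    dissipator = gamma * (jump - 0.5 * (anti @ rho + rho @ anti))
    return unitary + dissipator


def simulate_qubit(omega, delta, gamma, t_final, n_steps):
    """Integrate from the ground state with classical RK4.

    Returns the time grid and an array of density matrices with shape
    (n_steps + 1, 2, 2).
    """
    rho = np.array([[1, 0], [0, 0]], dtype=complex)
    dt = t_final / n_steps
    trajectory = [rho]
    for _ in range(n_steps):
        k1 = lindblad_rhs(rho, omega, delta, gamma)
        k2 = lindblad_rhs(rho + 0.5 * dt * k1, omega, delta, gamma)
        k3 = lindblad_rhs(rho + 0.5 * dt * k2, omega, delta, gamma)
        k4 = lindblad_rhs(rho + dt * k3, omega, delta, gamma)
        rho = rho + (dt / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        trajectory.append(rho)
    times = np.linspace(0.0, t_final, n_steps + 1)
    return times, np.stack(trajectory)
```

Calling `simulate_qubit` over a grid of `omega` and `delta` values would give a small family of trajectories indexed by control parameters, which is the general shape of dataset a surrogate model of this kind is trained on.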

These simulations produced datasets covering a range of control parameters, so the model could be trained on some regimes and then rigorously tested on its ability to generalise beyond its training data. The high-fidelity simulations captured the interaction of laser fields with the atomic ensemble, using realistic experimental parameters and including decoherence effects. The researchers evaluated several model configurations that differed in the number of trainable parameters and attention heads per layer. All of them used regional self-attention layers with axial decomposition to process the spatiotemporal data efficiently.
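One plausible reading of "regional self-attention with axial decomposition" is that attention is applied along one axis at a time: over space, but only within a local window, and over time, but only towards the past. The sketch below follows that reading. It is a simplification rather than the authors' architecture: it omits learned query, key, and value projections and multiple heads, and the names `radius` and `axial_regional_attention` are invented for this example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax; masked entries (-inf) get weight zero."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def masked_self_attention(x, mask):
    """Dot-product self-attention with identity projections.

    x: (batch, n, channels); mask: (n, n) boolean, True where attending is allowed.
    """
    scores = x @ x.transpose(0, 2, 1) / np.sqrt(x.shape[-1])
    scores = np.where(mask, scores, -np.inf)
    return softmax(scores, axis=-1) @ x


def regional_mask(n, radius):
    """Allow each site to attend only to sites at most `radius` away."""
    idx = np.arange(n)
    return np.abs(idx[:, None] - idx[None, :]) <= radius


def causal_mask(n):
    """Allow each time step to attend only to itself and earlier steps."""
    return np.tril(np.ones((n, n), dtype=bool))


def axial_regional_attention(field, radius):
    """Apply windowed spatial attention, then causal temporal attention.

    field: array of shape (T, Z, C) holding C features per space-time point.
    """
    n_t, n_z, _ = field.shape
    # Spatial pass: every time slice is one batch element.
    out = masked_self_attention(field, regional_mask(n_z, radius))
    # Temporal pass: every spatial site is one batch element.
    out = masked_self_attention(out.transpose(1, 0, 2), causal_mask(n_t))
    return out.transpose(1, 0, 2)
```

Because the same window mask is used at every site, the spatial pass treats all positions identically, and attending along one axis at a time costs far less than full attention over all T × Z space-time points.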

Neural Network Simulates Open Quantum Systems

Scientists have developed a novel neural network architecture capable of accurately simulating the complex dynamics of open quantum systems, achieving substantial speedups over traditional numerical methods. This breakthrough addresses a critical challenge in quantum information science, where simulating spatially structured systems with external control is computationally demanding. The team’s model learns the spatiotemporal evolution of these systems, incorporating the fundamental principle of translational invariance to achieve scalable complexity and efficient computation. Experiments demonstrate the model’s ability to predict the behaviour of both a driven dissipative single qubit and a more complex electromagnetically induced transparency quantum memory with high fidelity.

The architecture accurately captures the interplay between coherent quantum evolution, dissipative processes, and spatial propagation, even when given control parameters outside its training data. It also runs up to 1485x faster than conventional numerical solvers. The core of this advance is a regional attention mechanism, which processes spatial information efficiently and allows the model to scale to larger, more complex systems. By encoding the local density matrix with built-in Hermiticity and employing a causal decoder structure, the model ensures physically consistent predictions. These results establish a general surrogate modeling framework with immediate relevance to large-scale quantum network simulation, real-time experimental feedback, and scalable quantum device optimization.
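The article does not say how Hermiticity is built into the encoding. One common way to guarantee it, sketched below as an assumption rather than as the authors' scheme, is to have the network work with a real vector holding only the diagonal and the upper triangle of the density matrix, and to rebuild the lower triangle by conjugation. The function names `encode_hermitian` and `decode_hermitian` are invented for this example.

```python
import numpy as np


def encode_hermitian(rho):
    """Pack a d x d Hermitian matrix into a real vector of length d * d.

    Layout (a choice made for this sketch): diagonal entries, then real
    parts of the strict upper triangle, then its imaginary parts.
    """
    d = rho.shape[0]
    upper = np.triu_indices(d, k=1)
    return np.concatenate([np.diag(rho).real, rho[upper].real, rho[upper].imag])


def decode_hermitian(vec, d):
    """Rebuild a d x d matrix that is Hermitian for any real input vector."""
    upper = np.triu_indices(d, k=1)
    n_off = len(upper[0])
    diag = vec[:d]
    real_part = vec[d:d + n_off]
    imag_part = vec[d + n_off:]
    rho = np.zeros((d, d), dtype=complex)
    rho[upper] = real_part + 1j * imag_part
    rho = rho + rho.conj().T          # fill the lower triangle by conjugation
    rho[np.diag_indices(d)] = diag    # diagonal is real by construction
    return rho
```

With this layout, whatever real numbers a decoder emits map back to a Hermitian matrix. Unit trace and positivity are separate constraints that this packing alone does not enforce.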

Physics-Informed Neural Networks Model Quantum Dynamics

This work introduces a new neural network architecture for modeling the dynamics of complex, open quantum systems, offering a computationally efficient alternative to traditional numerical methods. The model learns the behaviour of these systems by incorporating fundamental principles of physics, specifically translational invariance, and can accurately predict their evolution even under varying control parameters, achieving substantial speed-ups while maintaining high predictive fidelity. The research establishes a framework for surrogate modeling, with potential applications in the design of large-scale quantum networks, repeaters, and the optimisation of quantum devices. The authors acknowledge that the model’s performance is currently limited by the extent of its training data, and extrapolation to entirely unseen regimes remains a challenge, suggesting future work could incorporate broader datasets derived from real-world experiments to enhance generalisation capabilities.

👉 More information

🗞 Learning spatially structured open quantum dynamics with regional-attention transformers

🧠 ArXiv: https://arxiv.org/abs/2509.06871