Equilibrium Propagation (EP) offers a promising alternative to traditional backpropagation for training neural networks, potentially enabling on-chip learning with reduced computational demands, but its application has been limited by difficulties in training deep networks. Jiaqi Lin, Malyaban Bal, and Abhronil Sengupta from The Pennsylvania State University now present a new EP framework that overcomes these limitations by incorporating intermediate error signals, improving information flow and allowing for the successful training of significantly deeper architectures. This research represents a crucial step forward in scaling EP, as the team demonstrates state-of-the-art performance on challenging image recognition tasks using deep VGG networks and benchmark datasets like CIFAR-10 and CIFAR-100. By integrating knowledge distillation with local error signals, this work unlocks the potential for EP to become a viable solution for resource-constrained machine learning applications and paves the way for more biologically plausible learning systems.

Augmented Equilibrium Propagation for Stable Learning

Researchers introduced an augmented Equilibrium Propagation (EP) framework to address the instability often encountered when training deep neural networks with EP. Rather than computing gradients with an explicit backward pass, as backpropagation does, EP lets the network’s dynamics settle into a stable state and derives its weight updates from how that state shifts when the output is nudged toward the target. The team enhanced the standard EP framework by adding intermediate learning signals, which guide the learning process and improve stability, allowing for the training of deeper architectures. The method is formulated in terms of a scalar function of the network’s state that represents its overall objective, and the dynamics drive the network toward a stationary point of that function.
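The two-phase procedure described above can be sketched on a toy network. This is a minimal illustration of generic EP, not the authors' implementation: the two-layer fully connected layout, the clipped activation, the squared-error loss, and the names `relax`, `ep_step`, `beta`, and `lr` are all assumptions made for the example.

```python
import numpy as np

def relax(x, h, y, W1, W2, target=None, beta=0.0, steps=50):
    """Iterate hidden state h and output state y toward a fixed point.

    With beta > 0 the output is nudged toward `target`, i.e. pushed along
    -beta * d/dy of the assumed loss 0.5 * ||y - target||^2.
    """
    for _ in range(steps):
        # Hidden layer receives bottom-up input from x and top-down input from y.
        h_next = np.clip(W1 @ x + W2.T @ y, 0.0, 1.0)
        y_drive = W2 @ h
        if target is not None:
            y_drive = y_drive + beta * (target - y)
        y_next = np.clip(y_drive, 0.0, 1.0)
        h, y = h_next, y_next  # synchronous update of both layers
    return h, y

def ep_step(x, target, W1, W2, beta=0.1, lr=0.05):
    """One EP update: free phase, nudged phase, then a contrastive local update."""
    h0 = np.zeros(W1.shape[0])
    y0 = np.zeros(W2.shape[0])
    h_free, y_free = relax(x, h0, y0, W1, W2)
    # The nudged phase starts from the free equilibrium.
    h_nudge, y_nudge = relax(x, h_free, y_free, W1, W2, target=target, beta=beta)
    # Each weight change uses only the activity of the two units it connects.
    dW1 = (np.outer(h_nudge, x) - np.outer(h_free, x)) / beta
    dW2 = (np.outer(y_nudge, h_nudge) - np.outer(y_free, h_free)) / beta
    return W1 + lr * dW1, W2 + lr * dW2, (h_free, y_free)

# Hypothetical toy data and small random weights (small enough to converge).
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=4)
target = np.array([1.0, 0.0, 0.0])
W1 = rng.uniform(-0.1, 0.1, size=(5, 4))
W2 = rng.uniform(-0.1, 0.1, size=(3, 5))
W1_new, W2_new, (h_free, y_free) = ep_step(x, target, W1, W2)
```

The weights are kept small here so that the relaxation is a contraction and reliably reaches a fixed point; a deep convolutional network would need the stabilizing measures the article describes.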

Loss functions measure the difference between the network’s output and the desired result, both at the output layer and at these intermediate layers, providing a refined learning signal. During training, the network’s weights are adjusted based on these signals, focusing on local connections and interactions to improve learning. A technique called linear mapping aligns the activations of a guiding “teacher” network with those of a learning “student” network, further stabilizing the process. This research contributes a novel training method, a theoretical understanding of its convergence, and the potential for more stable and efficient training of deep neural networks, paving the way for application in more complex systems.
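As a rough sketch of how an output loss and intermediate losses might be combined, the example below maps teacher activations through a matrix to the width of a student layer and penalizes the squared difference. The direction of the mapping, the squared-error form, the weighting factor `lam`, and the function names are assumptions for illustration; the article does not specify them.

```python
import numpy as np

def intermediate_loss(student_act, teacher_act, M):
    """Squared error between student activations and linearly mapped teacher activations.

    Returns the loss value and its derivative with respect to student_act,
    which can serve as a local error signal for that layer.
    """
    mapped = M @ teacher_act          # linear mapping to the student layer width
    diff = student_act - mapped
    return 0.5 * float(diff @ diff), diff

def total_loss(y, target, student_acts, teacher_acts, maps, lam=0.5):
    """Output loss plus a weighted sum of intermediate losses."""
    out = 0.5 * float((y - target) @ (y - target))
    inter = sum(
        intermediate_loss(s, t, M)[0]
        for s, t, M in zip(student_acts, teacher_acts, maps)
    )
    return out + lam * inter

# Hypothetical activations: a 2-unit student layer and a 3-unit teacher layer.
student_act = np.array([1.0, 0.0])
teacher_act = np.array([1.0, 1.0, 1.0])
M_zero = np.zeros((2, 3))             # placeholder mapping; learned in practice
loss_val, signal = intermediate_loss(student_act, teacher_act, M_zero)
total = total_loss(
    np.array([1.0, 0.0]), np.array([0.0, 0.0]),
    [student_act], [teacher_act], [M_zero],
)
```

Because the second return value depends only on one layer's own activations, it is the kind of signal that can be injected at that layer without passing through the layers above it.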

Training Deep Networks with Equilibrium Propagation

Researchers engineered a novel approach to training deep neural networks using Equilibrium Propagation (EP), a biologically inspired learning rule. This method overcomes limitations encountered in deeper architectures by enhancing information flow and neuron dynamics, addressing the vanishing gradient problem. The system mimics biological processes, utilizing local error signals and knowledge distillation to improve gradient estimation and network stability. The core of the technique involves training convergent recurrent neural networks (CRNNs) where error signals propagate backward through connections, influencing preceding layers and adjusting synaptic weights.

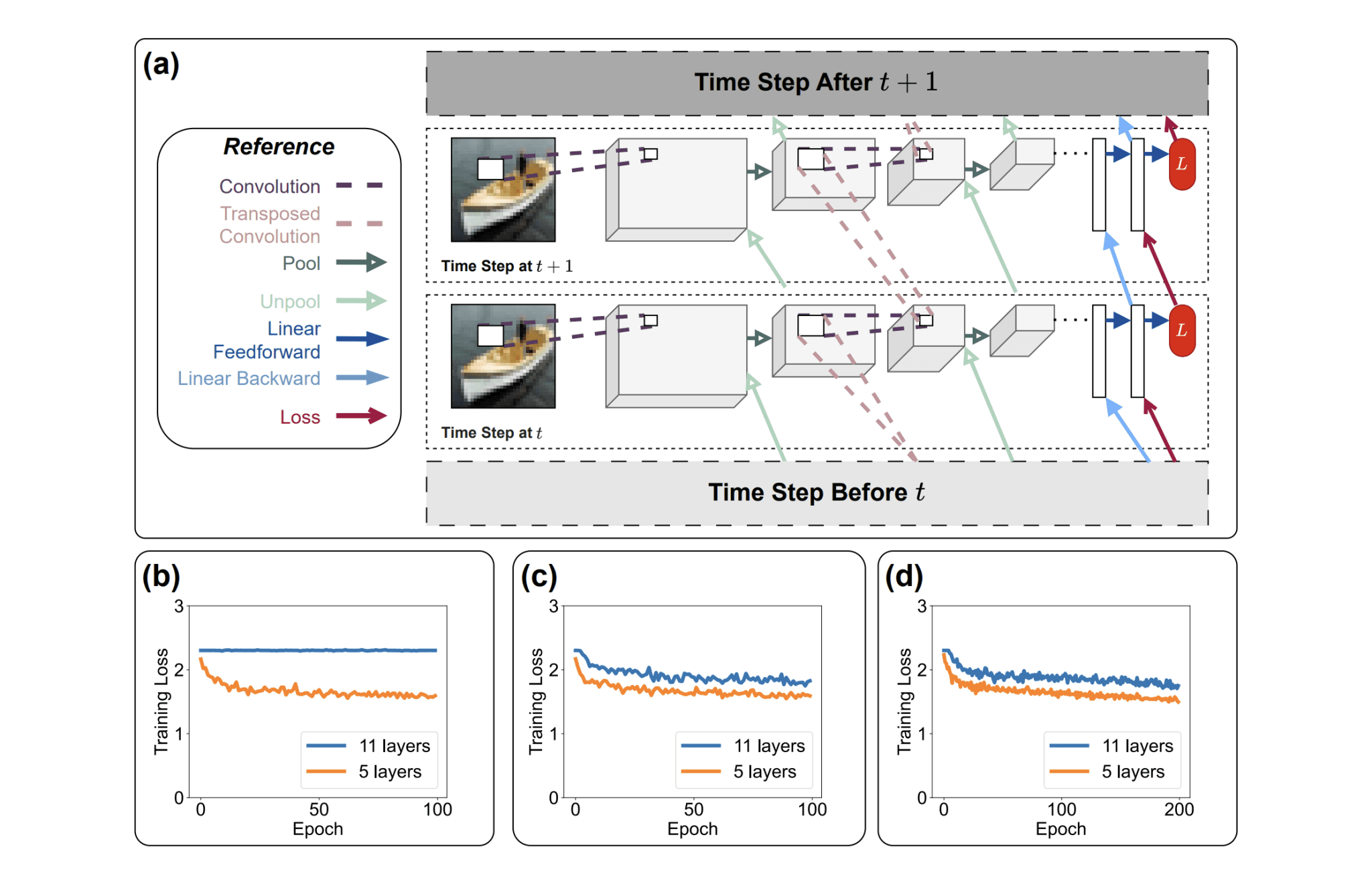

The networks apply convolutional and pooling operations during forward passes, and transposed convolutions and unpooling during backward passes, so that feedback signals retrace the forward path in reverse. To tackle the vanishing gradient issue, the team incorporated intermediate error signals, allowing for more robust gradient computations and enabling the training of significantly deeper CRNNs. The team also used knowledge distillation, in which a smaller “student” network learns from a larger, pre-trained “teacher” network, to further stabilize training and improve performance. The approach delivers substantial improvements in training performance on the CIFAR-10 and CIFAR-100 datasets, showcasing the scalability of EP and paving the way for its application in real-world systems.
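The pairing of convolution with transposed convolution can be illustrated in one dimension. The sketch below checks numerically that the transposed operation is the adjoint of the forward one, which is what lets feedback travel back through the same weights. The 1D setting, the signal sizes, and the names `conv_forward` and `conv_backward` are assumptions chosen for brevity, not details from the paper.

```python
import numpy as np

def conv_forward(x, w):
    """Forward pass: slide kernel w over x without padding."""
    return np.correlate(x, w, mode="valid")

def conv_backward(y, w):
    """Backward pass: transposed convolution, mapping output space to input space."""
    return np.convolve(y, w, mode="full")

rng = np.random.default_rng(0)
x = rng.normal(size=8)   # signal at the layer input
w = rng.normal(size=3)   # shared kernel
y = rng.normal(size=6)   # signal at the layer output (8 - 3 + 1 entries)

# Adjoint identity: <conv_forward(x), y> equals <x, conv_backward(y)>.
lhs = float(conv_forward(x, w) @ y)
rhs = float(x @ conv_backward(y, w))
```

The two inner products agree up to floating-point error, and `conv_backward` returns a signal with the same length as the original input.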

Deeper Recurrent Networks Trained with Equilibrium Propagation

Researchers have developed a novel framework for Equilibrium Propagation (EP), a biologically inspired learning rule, that significantly enhances its scalability to deep neural networks. Prior work with EP suffered from vanishing gradients in deeper architectures, hindering convergence and performance. This new approach directly addresses this limitation, enabling the training of substantially deeper convergent recurrent neural networks (CRNNs) than previously possible. The team’s breakthrough integrates both knowledge distillation and local error signals into the EP process, effectively boosting information flow and improving the convergence of neuron dynamics.

Experiments demonstrate that this augmented EP framework achieves state-of-the-art performance on the challenging CIFAR-10 and CIFAR-100 datasets, showcasing its ability to scale effectively with deep VGG architectures. Specifically, the researchers observed a marked improvement in training loss for VGG-11 CRNNs, a network previously susceptible to vanishing gradients, when trained with their enhanced EP method. The results clearly demonstrate that the integration of local error signals and knowledge distillation overcomes the limitations of earlier EP implementations, offering a biologically plausible alternative to traditional backpropagation algorithms and potentially unlocking new avenues in neuromorphic computing and artificial intelligence.

Deeper Networks and Reduced Computational Cost

Researchers presented a new framework for Equilibrium Propagation (EP), a biologically inspired learning rule for neural networks. They addressed the challenge of vanishing gradients in deep EP networks by incorporating intermediate error signals, which improve information flow and allow EP to train deeper architectures effectively. Experiments on the CIFAR-10 and CIFAR-100 datasets, using VGG architectures, demonstrate that this augmented EP framework achieves state-of-the-art performance and does not degrade as network depth increases, showcasing its scalability. Compared to the more conventional Backpropagation Through Time (BPTT), this EP framework significantly reduces demands on both GPU memory and computational resources.
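One way to see where a memory saving over BPTT can come from is a back-of-envelope count of stored neuron states. The sketch assumes BPTT keeps the state at every time step for its backward pass, while an EP update needs only the free and nudged equilibrium states. The sizes below are hypothetical, and the count ignores weights, optimizer state, and the extra distillation matrices, so it does not reproduce any figure from the paper.

```python
def bptt_state_floats(state_size, num_steps):
    """BPTT keeps the network state at every time step for the backward pass."""
    return state_size * num_steps

def ep_state_floats(state_size):
    """An EP update uses only the free and the nudged equilibrium states."""
    return 2 * state_size

state_size = 100_000   # hypothetical number of neurons in the network
num_steps = 50         # hypothetical number of relaxation steps
ratio = bptt_state_floats(state_size, num_steps) / ep_state_floats(state_size)
```

Under these assumptions the stored-state ratio is simply `num_steps / 2`, so the gap widens as the network needs more steps to settle.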

The method employs local updates, making it particularly suitable for on-chip learning due to its single-circuit architecture and weight update mechanisms inspired by synaptic plasticity. While the current study focuses on convolutional networks, the authors acknowledge that future research could explore the generalizability of this framework to more complex architectures, such as large-scale vision models and transformer-based language models. The authors note a moderate increase in memory consumption due to the inclusion of additional weight matrices used in knowledge distillation, a trade-off considered acceptable given the performance gains.

👉 More information

🗞 Scalable Equilibrium Propagation via Intermediate Error Signals for Deep Convolutional CRNNs

🧠 ArXiv: https://arxiv.org/abs/2508.15989