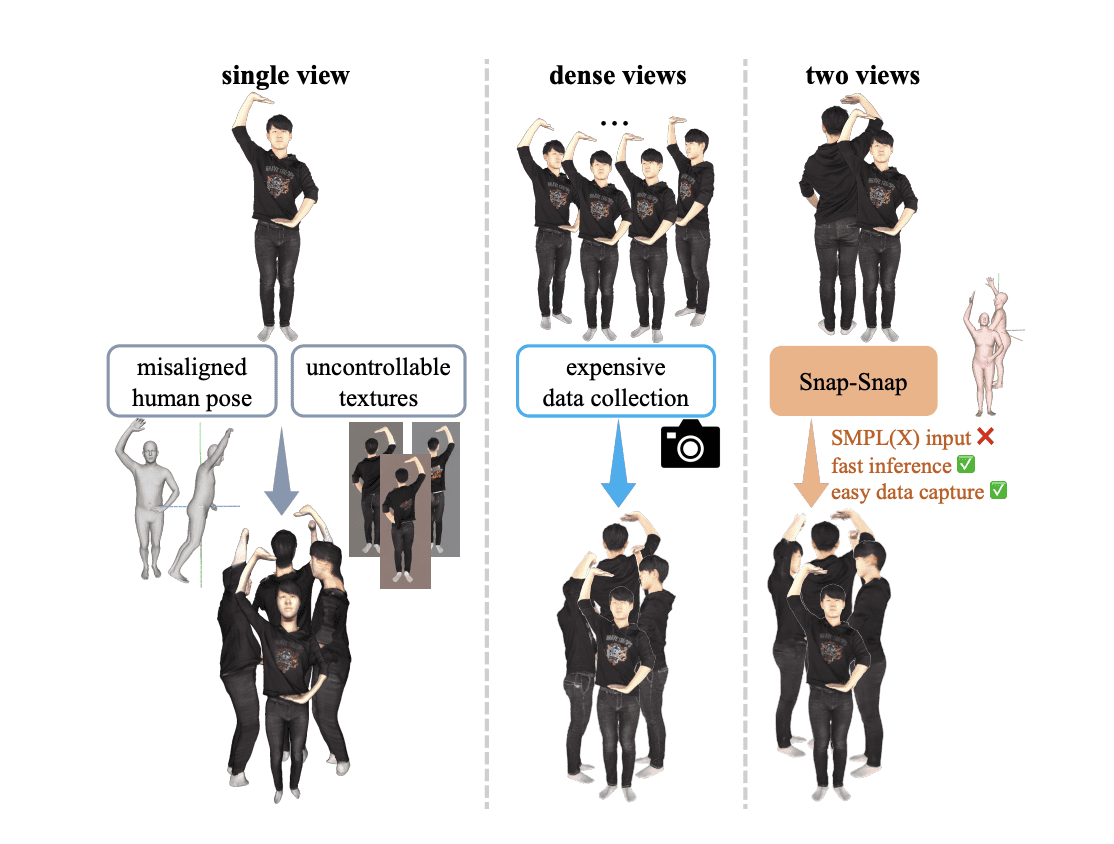

Reconstructing accurate 3D models of humans remains a key challenge in computer vision, with broad implications for virtual reality, animation, and personalised digital avatars. Jia Lu, Taoran Yi, and colleagues from Huazhong University of Science and Technology, alongside researchers from Huawei and Shanghai Jiao Tong University, now present a remarkably efficient method that needs only two images: a front view and a back view. The approach tackles the difficulty of building a consistent 3D representation from such minimal input by redesigning a geometry reconstruction model and training it on extensive human data. The team's system rapidly generates complete, coloured 3D point clouds, converts them into 3D Gaussians for high-quality rendering, and completes a full human reconstruction in about 190 milliseconds on a single GPU. It outperforms existing techniques on established benchmarks and opens the way to accessible 3D capture with ordinary mobile devices.

Snap-Snap achieves high-fidelity 3D human reconstruction from minimal input, a significant challenge compared with methods that require many views or depth sensors. The system predicts 3D point clouds directly rather than relying on error-prone intermediate representations, and it builds on a pre-trained foundation reconstruction model for its base geometry, which improves reconstruction quality. Additional prediction heads refine the geometry of the side views, which neither input image covers well, improving completeness. In the reported experiments, Snap-Snap consistently outperforms competing methods in image quality while keeping inference fast enough for real-time applications. It also remains robust to arbitrary input images and poses, producing more complete, higher-quality reconstructions than competing methods.

Fast 3D Human Reconstruction From Two Views

Researchers have developed Snap-Snap, a new approach that creates a 3D human model from just two images, a front view and a back view, in milliseconds. The method prioritizes accessibility and speed without sacrificing quality, moving away from complex multi-view capture setups. Its core innovation is a redesigned geometry reconstruction model that predicts consistent point clouds even when the two input images share very little overlapping content. Trained on extensive human datasets, the model learns the typical geometry of the human body and can infer details that neither image shows directly. Because some regions, notably the sides of the body, are not visible in either image and therefore have no observed color, the researchers added an enhancement algorithm that fills in the missing color from the available views, yielding a complete, colored point cloud.

These point clouds are then converted into 3D Gaussians, an explicit scene representation rendered by Gaussian splatting that yields more realistic and detailed images than raw points. The whole pipeline is efficient: a full human reconstruction takes approximately 190 milliseconds on a single GPU, and the method achieves state-of-the-art performance on established datasets. By prioritizing speed and ease of use, Snap-Snap lowers the barrier to creating 3D human models, makes the technology accessible to a wider range of users and applications, and performs well even with images captured on ordinary mobile devices.
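To make the conversion step concrete, here is a minimal Python sketch of how a colored point cloud might be turned into 3D Gaussian primitives. It is not Snap-Snap's implementation: the `Gaussian3D` record, the `points_to_gaussians` helper, and the fixed `radius` and `opacity` defaults are hypothetical choices for illustration, and the actual model may assign or learn per-point attributes differently.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Gaussian3D:
    mean: Vec3                                   # center, taken from the source point
    scale: Vec3                                  # per-axis standard deviation
    rotation: Tuple[float, float, float, float]  # unit quaternion (w, x, y, z)
    opacity: float                               # in [0, 1]
    rgb: Vec3                                    # plain color, no spherical harmonics


def points_to_gaussians(
    points: Sequence[Vec3],
    colors: Sequence[Vec3],
    radius: float = 0.005,   # hypothetical default size of each blob
    opacity: float = 0.9,    # hypothetical default opacity
) -> List[Gaussian3D]:
    """Wrap every colored point in a small isotropic Gaussian (illustrative only)."""
    if len(points) != len(colors):
        raise ValueError("points and colors must have the same length")
    identity = (1.0, 0.0, 0.0, 0.0)  # no rotation: the blob is a sphere anyway
    return [
        Gaussian3D(mean=p, scale=(radius, radius, radius),
                   rotation=identity, opacity=opacity, rgb=c)
        for p, c in zip(points, colors)
    ]


gaussians = points_to_gaussians([(0.0, 1.0, 0.0)], [(0.8, 0.6, 0.5)])
```

Starting every Gaussian as a small isotropic blob centered on its point is one simple initialization; a learned or optimized variant would refine scale, rotation, and opacity per point.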

Rapid 3D Human Reconstruction From Two Views

Researchers have developed a new method for reconstructing detailed 3D models of the human body from just two images, a front and back view, achieving results in a fraction of a second. This represents a significant advancement, as previous techniques often required multiple images or lengthy processing times. The new approach dramatically lowers the barrier to creating digital human models, enabling rapid 3D reconstruction with minimal effort. The core of the innovation lies in a redesigned reconstruction model that predicts complete point cloud representations of the human body, even with limited input data.
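As an illustration of how a front view and a back view can combine into one point cloud, the sketch below assumes the reconstruction model outputs a per-pixel 3D point map for each image, already expressed in a shared coordinate frame, together with a foreground mask. Under that assumption, fusion reduces to keeping foreground pixels and attaching each pixel's image color. The `merge_views` function and the nested-list data layout are hypothetical, not Snap-Snap's code.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Color = Tuple[float, float, float]


def merge_views(
    point_maps: Sequence[Sequence[Sequence[Point]]],
    images: Sequence[Sequence[Sequence[Color]]],
    masks: Sequence[Sequence[Sequence[bool]]],
) -> List[Tuple[Point, Color]]:
    """Fuse per-pixel 3D points from several views into one colored cloud.

    Assumes every point map is already in one shared world frame, so fusion
    is a concatenation of the foreground pixels of each view.
    """
    cloud: List[Tuple[Point, Color]] = []
    for pts, img, mask in zip(point_maps, images, masks):          # one view at a time
        for row_pts, row_img, row_mask in zip(pts, img, mask):     # one image row
            for p, c, keep in zip(row_pts, row_img, row_mask):     # one pixel
                if keep:  # skip background pixels
                    cloud.append((p, c))
    return cloud


# Tiny 1x2 "images": front view (camera at +z) and back view (camera at -z).
front = [[(0.0, 0.0, 1.0), (0.1, 0.0, 1.0)]]
back = [[(0.0, 0.0, -1.0), (0.1, 0.0, -1.0)]]
cloud = merge_views(
    [front, back],
    [[[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]], [[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0)]]],
    [[[True, False]], [[True, True]]],
)
```

The simplicity here depends entirely on the shared-frame assumption; producing point maps that already agree across two barely overlapping views is the hard part the redesigned model is trained to handle.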

Unlike methods that rely on extensive overlap between images, this system builds a consistent 3D shape from sparse viewpoints by adapting a general-purpose foundation reconstruction model to the specific characteristics of the human body. To address missing information, an enhancement algorithm supplements the color of regions that the input images do not cover, producing a complete and visually accurate model. The resulting point clouds are then transformed into 3D Gaussians, which improves rendering quality and realism. The method is both fast and accurate: it reconstructs a complete human in just 190 milliseconds on a single GPU, surpasses existing state-of-the-art techniques on benchmark datasets, and works well with images captured on readily available mobile devices.
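The rendering gain from 3D Gaussians comes from how they are drawn. In standard 3D Gaussian splatting, the Gaussians covering a pixel are sorted by depth and alpha-blended front to back. The Python sketch below shows only that blending step for a single pixel, assuming the per-Gaussian colors and effective opacities have already been computed; `composite_front_to_back` and its `stop_threshold` parameter are illustrative names, not part of Snap-Snap or any specific library.

```python
from typing import Sequence, Tuple

Color = Tuple[float, float, float]


def composite_front_to_back(
    colors: Sequence[Color],
    alphas: Sequence[float],
    stop_threshold: float = 1e-4,
) -> Color:
    """Blend depth-sorted splat contributions for one pixel.

    colors and alphas must be ordered nearest-first; alphas[i] is the opacity
    of Gaussian i after evaluating its 2D footprint at this pixel.
    """
    r = g = b = 0.0
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for (cr, cg, cb), a in zip(colors, alphas):
        weight = a * transmittance  # this splat's share of the final color
        r += weight * cr
        g += weight * cg
        b += weight * cb
        transmittance *= 1.0 - a
        if transmittance < stop_threshold:  # pixel is effectively opaque: stop early
            break
    return (r, g, b)


# A half-transparent red splat in front of an opaque blue one.
pixel = composite_front_to_back([(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)], [0.5, 1.0])
```

Each contribution is weighted by the light that remains after the splats in front of it, so a nearly opaque Gaussian close to the camera hides those behind it.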

This research presents a new method for reconstructing three-dimensional human models from just two images, a front and back view. The team developed a system capable of predicting complete 3D human models, represented as 3D Gaussians, in just 190 milliseconds using a single GPU. This is achieved through a redesigned geometry reconstruction model, adapted from recent advances in foundational reconstruction models and specifically trained on extensive human data. Because the two input images leave some regions unobserved, the researchers implemented a nearest neighbour search algorithm to fill in the missing colour information.
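The nearest neighbour idea can be sketched in a few lines of Python. This is a minimal illustration, assuming each point either already carries a color sampled from the front or back image or carries `None` when no input view observes it; `fill_missing_colors` is a hypothetical helper, and the brute-force search stands in for whatever spatial index the authors actually use.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
Color = Tuple[float, float, float]


def fill_missing_colors(
    points: Sequence[Point],
    colors: Sequence[Optional[Color]],
) -> List[Color]:
    """Give each uncolored point the color of its nearest colored point.

    Brute-force O(N*M) search over the colored points.
    """
    known = [(p, c) for p, c in zip(points, colors) if c is not None]
    if not known:
        raise ValueError("at least one point must already have a color")
    filled: List[Color] = []
    for p, c in zip(points, colors):
        if c is not None:
            filled.append(c)  # observed in an input view: keep its color
            continue
        # math.dist returns the Euclidean distance between the two 3D points
        _, nearest_color = min(known, key=lambda kc: math.dist(p, kc[0]))
        filled.append(nearest_color)
    return filled


pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.9, 0.0, 0.0), (0.1, 0.0, 0.0)]
cols = [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0), None, None]
completed = fill_missing_colors(pts, cols)
```

For clouds with many thousands of points, a k-d tree (for example SciPy's `cKDTree`) would replace the linear scan.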

The resulting completed point clouds are then transformed into 3D Gaussians, enhancing rendering quality and enabling wider applications of human body reconstruction. Experiments demonstrate the method’s state-of-the-art performance on established datasets, and its ability to function effectively with images captured from readily available mobile devices. The team has made their code and demonstrations publicly available, facilitating further research and development in this area.

👉 More information

🗞 Snap-Snap: Taking Two Images to Reconstruct 3D Human Gaussians in Milliseconds

🧠 ArXiv: https://arxiv.org/abs/2508.14892