Researchers at Lawrence Livermore National Laboratory (LLNL), in collaboration with the Oden Institute at the University of Texas at Austin and the Scripps Institution of Oceanography at the University of California, San Diego, have developed a real-time tsunami forecasting system that draws on the exascale computing power of El Capitan. The supercomputer, which has a theoretical peak performance of 2.79 quintillion calculations per second, is funded by the Advanced Simulation and Computing (ASC) program at the National Nuclear Security Administration (NNSA).

Using 43,520 AMD Instinct MI300A Accelerated Processing Units (APUs), the team performed offline precomputations that solved extreme-scale acoustic-gravity wave propagation problems with 55.5 trillion degrees of freedom, breaking the record for the largest unstructured mesh finite element simulation. These runs used LLNL’s open-source finite element library, MFEM, to map ocean-floor motion to sensor data. The resulting approach solves a billion-parameter Bayesian inverse problem in under 0.2 seconds, a 10-billion-fold speedup over existing methods. By inferring seafloor earthquake motion from real-time pressure sensor data and advanced physics-based simulations, it delivers rapid predictions, complete with uncertainty quantification, on modest GPU clusters. This represents a paradigm shift toward predictive, physics-informed emergency response systems applicable to a range of natural hazards and beyond.

Tsunami Forecasting Advance

Scientists at Lawrence Livermore National Laboratory (LLNL), in collaboration with the Oden Institute at the University of Texas at Austin (UT Austin) and the Scripps Institution of Oceanography at the University of California, San Diego (UC San Diego), have made a significant advance in tsunami forecasting by developing a real-time system powered by the exascale supercomputer El Capitan. The system harnesses El Capitan, funded by the Advanced Simulation and Computing (ASC) program at the National Nuclear Security Administration (NNSA) and capable of a theoretical peak of 2.79 quintillion calculations per second, to dramatically improve the speed and accuracy of tsunami predictions.

The core innovation is an offline precomputation step that generates an extensive library of physics-based simulations linking earthquake-induced seafloor deformation to the resulting propagation of tsunami waves. This precomputation involved solving extreme-scale acoustic-gravity wave propagation problems on more than 43,500 AMD Instinct MI300A Accelerated Processing Units (APUs). The resulting dataset enables real-time tsunami forecasting on comparatively small systems, a crucial feature for practical use in operational warning centres. With this approach, the researchers solved a billion-parameter Bayesian inverse problem in under 0.2 seconds, a 10-billion-fold speedup over existing methodologies.
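For a rough sense of scale, taking the article's two figures at face value (a 10-billion-fold speedup and a solve time of about 0.2 seconds), the implied time for the baseline methods works out to decades. This is back-of-the-envelope arithmetic on the stated numbers, not a figure reported by the researchers:

$$
t_{\text{baseline}} \approx 10^{10} \times 0.2\ \text{s} = 2 \times 10^{9}\ \text{s} \approx \frac{2 \times 10^{9}\ \text{s}}{3.16 \times 10^{7}\ \text{s/year}} \approx 63\ \text{years}
$$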

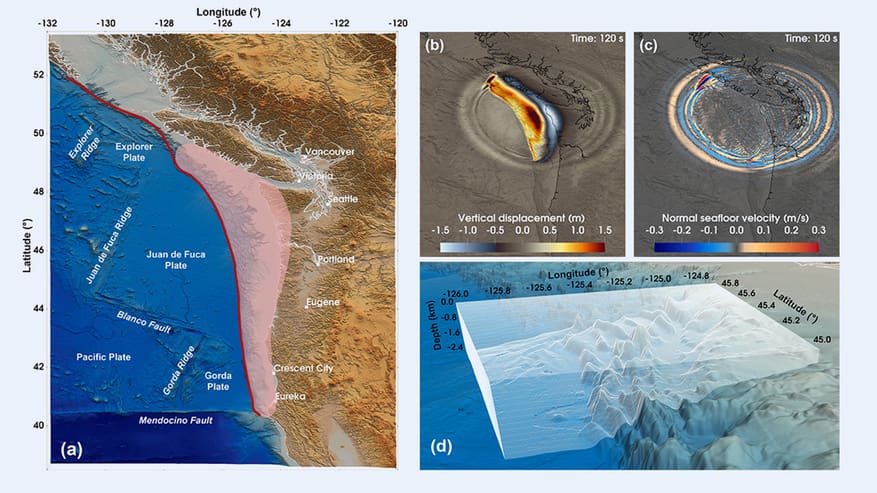

This advance addresses the limitations of conventional tsunami warning systems, which often rely on simplified models that cannot fully capture the complexity of fault rupture dynamics and are therefore prone to false alarms or delayed warnings. The new system instead takes a dynamic, data-driven approach: it infers the effects of seafloor earthquake motion from real-time pressure sensor data and advanced physics-based simulations, coupled with uncertainty quantification.

At the heart of this capability is MFEM, LLNL’s open-source finite element library, which enabled scalable, GPU-accelerated simulations of acoustic-gravity wave propagation. Running on 43,520 APUs of El Capitan, MFEM performed the most compute-intensive phase: solving the acoustic-gravity wave equations with 55.5 trillion degrees of freedom to precompute mappings between ocean-floor motion and sensor data, a new record for the largest unstructured mesh finite element simulation. MFEM’s high-order methods and GPU readiness, developed under the ASC program and the Department of Energy’s (DOE) Exascale Computing Project, were instrumental in achieving full-machine scalability.
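The offline/online split behind this speed can be illustrated on a deliberately tiny problem. The sketch below assumes a linear map `F` from seafloor-motion parameters to sensor data, a Gaussian prior and Gaussian noise, and made-up sizes; `offline_phase` and `online_phase` are hypothetical names, and this is not the team's MFEM-based method, whose internals the article does not describe. For a linear-Gaussian model the posterior mean is $m_0 + \Gamma_{\text{pr}} F^\top (F \Gamma_{\text{pr}} F^\top + \Gamma_{\text{noise}})^{-1}(d - F m_0)$, so everything except the final product with the data can be prepared in advance.

```python
import numpy as np


def offline_phase(F, prior_cov, noise_cov, prior_mean):
    """Expensive step, run once before any earthquake (hypothetical toy).

    F maps seafloor-motion parameters m to sensor data: d = F @ m + noise.
    Returns the gain K = Gamma_pr F^T S^-1, with S = F Gamma_pr F^T + Gamma_noise,
    and the data predicted by the prior mean.
    """
    # Data-space matrix S (n_obs x n_obs, symmetric positive definite).
    S = F @ prior_cov @ F.T + noise_cov
    # Solve S X = F Gamma_pr instead of forming S^-1; because S and Gamma_pr
    # are symmetric, X^T equals Gamma_pr F^T S^-1.
    K = np.linalg.solve(S, F @ prior_cov).T
    return K, F @ prior_mean


def online_phase(K, d_prior, prior_mean, d):
    """Cheap step, run in real time: posterior mean of the seafloor motion."""
    return prior_mean + K @ (d - d_prior)


# Toy problem with invented sizes (the article's problem has ~1e9 parameters).
rng = np.random.default_rng(0)
n_params, n_obs = 50, 20
F = rng.standard_normal((n_obs, n_params))
prior_cov = np.eye(n_params)
noise_cov = 0.01 * np.eye(n_obs)
prior_mean = np.zeros(n_params)

K, d_prior = offline_phase(F, prior_cov, noise_cov, prior_mean)  # before the event
m_true = rng.standard_normal(n_params)
d = F @ m_true + 0.1 * rng.standard_normal(n_obs)                # synthetic sensor data
m_post = online_phase(K, d_prior, prior_mean, d)                 # during the event
```

Everything that touches the forward model is confined to `offline_phase`; once data arrive, only a subtraction and one matrix-vector product remain. A billion-parameter problem would not be handled with dense matrices like these, so the sketch shows only the shape of the idea.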

This precomputed library allows predictions to be made within seconds during an actual tsunami event using modest GPU clusters, which could transform early warning. For near-shore events, such as a magnitude 8.0 or larger earthquake along the Cascadia Subduction Zone, where the first destructive waves could reach the coast within 10 minutes, this speed is critical. Researchers anticipate deploying this approach in future tsunami warning systems as seafloor sensor networks, including distributed acoustic sensing, become more widespread and computational infrastructure continues to improve, resulting in faster, smarter and more reliable emergency alerts. The Bayesian inversion framework is not limited to tsunamis and could be applied to a wide range of complex systems, from real-time wildfire and subsurface contaminant tracking to space weather forecasting and intelligence applications.
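To show how a precomputed library can turn incoming sensor data into a forecast with error bars in one cheap step, here is a toy sketch. It assumes a linear model, a zero-mean Gaussian prior, Gaussian noise, dense matrices and invented sizes; the prediction operator `P` (mapping seafloor motion to, say, wave heights at coastal gauges) and the function names are hypothetical, and the article does not say how the team's forecasts are actually computed.

```python
import numpy as np


def precompute_forecast(F, P, prior_cov, noise_cov):
    """Offline: build a data-to-forecast operator and the forecast uncertainty.

    F maps seafloor-motion parameters to sensor data; P maps the same
    parameters to quantities of interest (hypothetical). Assumes a zero prior mean.
    """
    S = F @ prior_cov @ F.T + noise_cov
    K = np.linalg.solve(S, F @ prior_cov).T          # Gamma_pr F^T S^-1
    post_cov = prior_cov - K @ F @ prior_cov         # posterior covariance
    Q = P @ K                                        # sensor data -> forecast mean
    # In the linear-Gaussian case post_cov does not depend on the observed data,
    # so the forecast standard deviation is known before the event.
    forecast_std = np.sqrt(np.diag(P @ post_cov @ P.T))
    return Q, forecast_std


def forecast(Q, forecast_std, d):
    """Online: a single small matrix-vector product per data vector."""
    return Q @ d, forecast_std


rng = np.random.default_rng(1)
n_params, n_obs, n_qoi = 50, 20, 3
F = rng.standard_normal((n_obs, n_params))
P = rng.standard_normal((n_qoi, n_params))
prior_cov = np.eye(n_params)
noise_cov = 0.01 * np.eye(n_obs)

Q, forecast_std = precompute_forecast(F, P, prior_cov, noise_cov)
d = F @ rng.standard_normal(n_params) + 0.1 * rng.standard_normal(n_obs)
mean, sd = forecast(Q, forecast_std, d)
```

Because both `Q` and the standard deviations are fixed before any earthquake, the real-time cost in this sketch scales with the number of sensors and forecast locations rather than with the number of seafloor parameters.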

Computational Infrastructure

The real-time tsunami forecasting system developed at Lawrence Livermore National Laboratory (LLNL) is a significant advance in hazard mitigation, made possible by the exascale computing capabilities of the El Capitan supercomputer. El Capitan, which has a theoretical peak performance of 2.79 quintillion calculations per second and is funded through the Advanced Simulation and Computing (ASC) program at the National Nuclear Security Administration (NNSA), was used to precompute an extensive library of physics-based simulations. This library links earthquake-induced seafloor deformation to the resulting tsunami wave propagation, a process that demands immense computational resources. At the core of this computational infrastructure is MFEM, LLNL’s open-source finite element library, which is designed for scalable, GPU-accelerated simulations. Using 43,520 AMD Instinct MI300A Accelerated Processing Units (APUs), researchers solved the acoustic-gravity wave equations in a record-breaking simulation with 55.5 trillion degrees of freedom.
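For a sense of the per-device load, dividing the degrees of freedom evenly across the APUs gives the figure below. The even split is an assumption for illustration only; the article does not describe how the mesh was partitioned.

$$
\frac{55.5 \times 10^{12}\ \text{degrees of freedom}}{43{,}520\ \text{APUs}} \approx 1.28 \times 10^{9}\ \text{degrees of freedom per APU}
$$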

This precomputation phase maps ocean-floor motion to sensor data, enabling the system to infer the impact of an earthquake on the ocean floor with unprecedented speed and accuracy. The success of this approach is directly attributable to MFEM’s high-order methods and GPU readiness, developed under both the ASC program and the Department of Energy’s (DOE) Exascale Computing Project. The precomputed library allows real-time tsunami forecasting on far more modest GPU clusters, a crucial factor for practical deployment. By contrast, conventional tsunami warning systems often rely either on simplified models or on computationally intensive full-physics simulations that require hours or days to produce results. Speed is particularly vital for near-shore events, such as those anticipated along the Cascadia Subduction Zone, where destructive waves can reach coastal areas within minutes and immediate, accurate predictions are essential. The framework also extends beyond tsunami forecasting, with potential applications in real-time wildfire tracking, subsurface contaminant tracking, space weather forecasting and intelligence applications.
