Efficient video processing remains a significant challenge for computer vision systems, mainly because video footage contains so much redundant information. Haichao Wang, Xinyue Xi, and Jiangtao Wen, along with colleagues from Shenzhen International Graduate School, Tsinghua University, and New York University, present an approach that streamlines video analysis by processing raw image data directly, bypassing traditional image processing steps. Their system estimates motion quickly with a block matching algorithm that identifies changes between frames, then refines those estimates with a context-aware network that focuses on the areas needing correction. The method achieves substantial speed improvements in video vision tasks while maintaining a high level of accuracy, a step towards more efficient and responsive video applications.

Semantic Segmentation and Optical Flow Estimation

This body of research explores core computer vision tasks, with a strong emphasis on semantic segmentation, the process of classifying each pixel in an image, and optical flow estimation, which determines motion between frames in a video. Several approaches to semantic segmentation are investigated, including SegFormer and BiSeNet, known for their efficiency and real-time performance, respectively. Other techniques, such as DFANet and SegBlocks, focus on deep feature aggregation and dynamic resolution to achieve fast and accurate segmentation. Optical flow estimation, crucial for understanding video content, is addressed by methods like FlowNet and its successor, FlowNet 2.0, while PWC-Net takes a different approach, combining CNNs with pyramid, warping, and cost volume techniques.

Depth estimation, which aims to understand the 3D structure of a scene, is also explored. A key trend throughout these investigations is efficient video processing, driven by the demands of applications like autonomous driving and mobile devices. Researchers are developing techniques like TapLab and MoViNets, alongside efficient network designs, to achieve real-time or low-latency performance.

Motion estimation and analysis, heavily reliant on optical flow, contribute to a comprehensive understanding of dynamic scenes. Fundamental image processing techniques are also under scrutiny, including image signal processing (ISP) and methods for image restoration and enhancement. Researchers are exploring ways to improve automatic image quality tuning, demosaicing, denoising, and super-resolution. Several datasets, such as Cityscapes and KITTI, provide valuable resources for training and evaluating these algorithms. Convolutional neural networks (CNNs) remain a workhorse for many of these approaches, but transformers are gaining popularity, especially for tasks like segmentation and depth estimation.

Efficient network design, incorporating techniques like bilateral networks and mobile-friendly architectures, is a recurring theme. The research highlights the importance of real-time performance, deep learning, and leveraging compressed video data for faster processing. This collection of work demonstrates a vibrant and rapidly evolving field focused on building intelligent systems that can understand and interpret visual information.

Raw Bayer Data Speeds Vision Processing

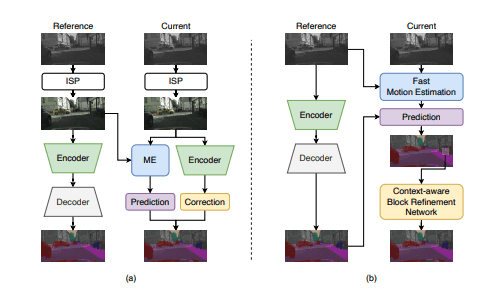

Researchers have developed a novel video vision system designed to overcome limitations in processing speed and efficiency, particularly when dealing with high frame rate footage. Recognizing that traditional systems struggle with computational demands, the team streamlined the entire process from data input to analysis. A key innovation lies in bypassing the standard image signal processor and directly feeding raw Bayer-format data into the vision models. This eliminates a significant bottleneck, reducing initial processing time and computational load. To address inherent redundancy in video, the team moved away from established methods like video codecs and optical flow estimation, which introduce computational overhead.
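To make the idea of feeding Bayer data to a model more concrete, here is a minimal sketch of one common way to prepare a raw mosaic for a neural network: splitting it into four half-resolution colour planes. The article does not say how the authors format their input, so the RGGB layout, the packing step, and the function name `pack_bayer_rggb` are all assumptions made for illustration.

```python
def pack_bayer_rggb(raw):
    """Split an H x W Bayer mosaic into four H/2 x W/2 planes.

    Assumes an RGGB layout (an assumption, not stated in the article):
        R  G1
        G2 B
    """
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("mosaic dimensions must be even")
    r = [row[0::2] for row in raw[0::2]]   # even rows, even columns
    g1 = [row[1::2] for row in raw[0::2]]  # even rows, odd columns
    g2 = [row[0::2] for row in raw[1::2]]  # odd rows, even columns
    b = [row[1::2] for row in raw[1::2]]   # odd rows, odd columns
    return [r, g1, g2, b]


# A toy 4 x 4 mosaic: no demosaicing, white balance, or tone mapping is applied.
mosaic = [
    [10, 20, 11, 21],
    [30, 40, 31, 41],
    [12, 22, 13, 23],
    [32, 42, 33, 43],
]
planes = pack_bayer_rggb(mosaic)
```

The point of the sketch is only that the sensor values reach the model without passing through an image signal processor; any real pipeline would still need to handle black level, bit depth, and the sensor's actual colour filter layout.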

Instead, they developed a fast block matching-based motion estimation algorithm specifically tailored for computer vision applications. This algorithm efficiently identifies movement between frames, allowing the system to focus processing on areas that have changed. Further refining the motion estimation, the researchers incorporated a context-aware block refinement network, which leverages information from surrounding image areas to improve the accuracy of motion vectors. To balance computational efficiency with overall accuracy, a dynamic frame selection strategy was implemented, intelligently designating certain frames as “key frames” for full analysis. The combined effect of these innovations is a system that significantly accelerates video vision tasks with minimal performance loss.
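For readers unfamiliar with block matching, the sketch below shows the textbook exhaustive version: each block of the current frame is compared against nearby blocks of a reference frame, and the offset with the lowest sum of absolute differences (SAD) wins. This is not the authors' fast algorithm, whose details the article does not give; the block size, search radius, SAD cost, and function names are illustrative assumptions.

```python
def sad(ref, cur, ry, rx, cy, cx, block):
    """Sum of absolute differences between two block x block patches."""
    return sum(
        abs(ref[ry + i][rx + j] - cur[cy + i][cx + j])
        for i in range(block)
        for j in range(block)
    )


def block_match(ref, cur, block=2, radius=1):
    """Return {(block_row, block_col): (dy, dx)}.

    (dy, dx) points from a block in `cur` to its best match in `ref`.
    """
    height, width = len(cur), len(cur[0])
    offsets = range(-radius, radius + 1)
    # Try small displacements first so that ties favour little motion.
    candidates = sorted(
        ((dy, dx) for dy in offsets for dx in offsets),
        key=lambda mv: abs(mv[0]) + abs(mv[1]),
    )
    vectors = {}
    for cy in range(0, height - block + 1, block):
        for cx in range(0, width - block + 1, block):
            best_cost, best_mv = None, (0, 0)
            for dy, dx in candidates:
                ry, rx = cy + dy, cx + dx
                # Skip candidates that fall outside the reference frame.
                if ry < 0 or rx < 0 or ry + block > height or rx + block > width:
                    continue
                cost = sad(ref, cur, ry, rx, cy, cx, block)
                if best_cost is None or cost < best_cost:
                    best_cost, best_mv = cost, (dy, dx)
            vectors[(cy // block, cx // block)] = best_mv
    return vectors


# Toy frames: the content of `ref` moves one pixel to the right in `cur`.
ref = [[10 * y + x for x in range(6)] for y in range(4)]
cur = [[row[0]] + row[:-1] for row in ref]
motion = block_match(ref, cur)
```

In this toy case the interior blocks report `(0, -1)`, meaning their content came from one pixel to the left in the reference frame. The exhaustive search costs grow with the square of the search radius, which is the kind of overhead a faster, vision-oriented variant would aim to cut.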

Raw Data Processing Accelerates Video Vision

Researchers have developed a new video vision system designed to significantly accelerate processing while maintaining accuracy, addressing a key challenge in computer vision applications. The system achieves this by changing how video data is handled, bypassing traditional methods that rely on extensive pre-processing and complex motion estimation techniques. Instead of first converting sensor output into standard images with an image signal processor, the system directly processes the raw data captured by the camera's sensor, eliminating a major computational bottleneck. A core innovation lies in a fast, block-matching motion estimation algorithm, specifically tailored for video vision tasks.

This algorithm efficiently identifies movement between frames, creating a map of motion vectors that guides subsequent processing. Unlike existing methods that extract motion vectors from compressed video or rely on computationally intensive optical flow models, this approach prioritizes speed at little cost in precision. The system further refines these motion vectors with a dedicated module to keep tracking accurate even in complex scenes. Performance analysis reveals that conventional motion estimation techniques can consume substantial processing time, making them a significant obstacle to real-time video analysis.

To further optimize efficiency, the system intelligently selects which frames require full processing by the main vision model. Frames with minimal changes are predicted from key frames, reducing redundant calculations. A lightweight correction network then focuses on refining only the areas of non-key frames that exhibit significant motion, ensuring high accuracy without processing the entire frame. This selective approach allows the system to achieve substantial acceleration, while maintaining performance comparable to existing methods.
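As a rough illustration of how a non-key frame can be predicted from a key frame, the sketch below copies blocks of a key-frame result (here, a segmentation label map) according to per-block motion vectors. The vector format, the border clamping, and the name `propagate_labels` are assumptions for this example; the article does not describe the authors' actual propagation step, and the learned correction network is not modelled here.

```python
def propagate_labels(key_labels, vectors, block=2):
    """Predict labels for a non-key frame from a key-frame label map.

    `vectors` maps (block_row, block_col) to (dy, dx), where (dy, dx)
    points from the current block to its source in the key frame.
    """
    height, width = len(key_labels), len(key_labels[0])
    out = [row[:] for row in key_labels]  # default: reuse co-located label
    for (by, bx), (dy, dx) in vectors.items():
        for i in range(block):
            for j in range(block):
                y, x = by * block + i, bx * block + j
                if y >= height or x >= width:
                    continue
                # Clamp the source position so it stays inside the frame.
                sy = min(max(y + dy, 0), height - 1)
                sx = min(max(x + dx, 0), width - 1)
                out[y][x] = key_labels[sy][sx]
    return out


key_labels = [
    [1, 1, 2, 2],
    [1, 1, 2, 2],
]
# The right-hand block moved one pixel to the right since the key frame.
vectors = {(0, 0): (0, 0), (0, 1): (0, -1)}
predicted = propagate_labels(key_labels, vectors)
```

Block-wise copying like this leaves errors at object boundaries and in newly revealed regions, which is where a correction step restricted to high-motion areas would be expected to help.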

Efficient Video Vision with Temporal Refinement

This research presents a new video vision system designed to improve efficiency by addressing temporal redundancy and front-end processing overhead. The team achieves acceleration by directly feeding raw video data into neural networks, bypassing traditional image signal processing, and employing a fast block matching algorithm for motion estimation. A context-aware block refinement network then corrects errors, and a frame selection strategy dynamically balances accuracy and efficiency based on the degree of shift between frames. Experiments demonstrate that this method significantly speeds up video vision tasks with only a slight reduction in performance. The authors acknowledge that existing video vision acceleration techniques still contain computational redundancies, particularly in motion estimation, and their approach aims to mitigate these issues. Future work could refine the motion estimation algorithm and the frame selection strategy to improve the balance between speed and accuracy, potentially extending the system to a wider range of video analysis scenarios.
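One simple way to picture a shift-based frame selection strategy is to accumulate the estimated motion since the last key frame and trigger a new key frame once it exceeds a budget. The sketch below does exactly that; the accumulation rule, the `budget` parameter, and the name `select_key_frames` are hypothetical and are not taken from the paper.

```python
def select_key_frames(shifts, budget=2.0):
    """Flag which frames should be fully processed as key frames.

    `shifts[i]` is the mean block displacement (in pixels) between
    frame i - 1 and frame i; `shifts[0]` is ignored because the first
    frame is always a key frame. `budget` is a hypothetical threshold.
    """
    key_flags = []
    drift = 0.0  # motion accumulated since the last key frame
    for index, shift in enumerate(shifts):
        drift += shift
        if index == 0 or drift > budget:
            key_flags.append(True)
            drift = 0.0
        else:
            key_flags.append(False)
    return key_flags


flags = select_key_frames([0.0, 0.5, 0.8, 1.0, 0.2, 3.0])
```

A lower budget produces more key frames and therefore higher accuracy at higher cost, while a higher budget does the opposite, which is the trade-off the frame selection strategy is described as balancing.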

👉 More information

🗞 Fast Motion Estimation and Context-Aware Refinement for Efficient Bayer-Domain Video Vision

🧠 ArXiv: https://arxiv.org/abs/2508.05990