Large language models frequently struggle when applied to specialist fields, often failing to match their performance on general-knowledge tasks. Researchers led by Xiaopeng propose a new framework, AQuilt, designed to overcome this limitation by automatically generating high-quality training data for these niche applications. The team’s approach weaves logical reasoning and self-inspection into data creation, encouraging the language model not simply to recall information but to actively process and verify its responses. The resulting data significantly improves performance on specialist tasks while sharply reducing cost: AQuilt achieves results comparable to a leading model, DeepSeek-V3, at just 17% of the production cost, and the data it generates is markedly more relevant to downstream tasks.

Logic and Inspection Enhance Synthetic Data Generation

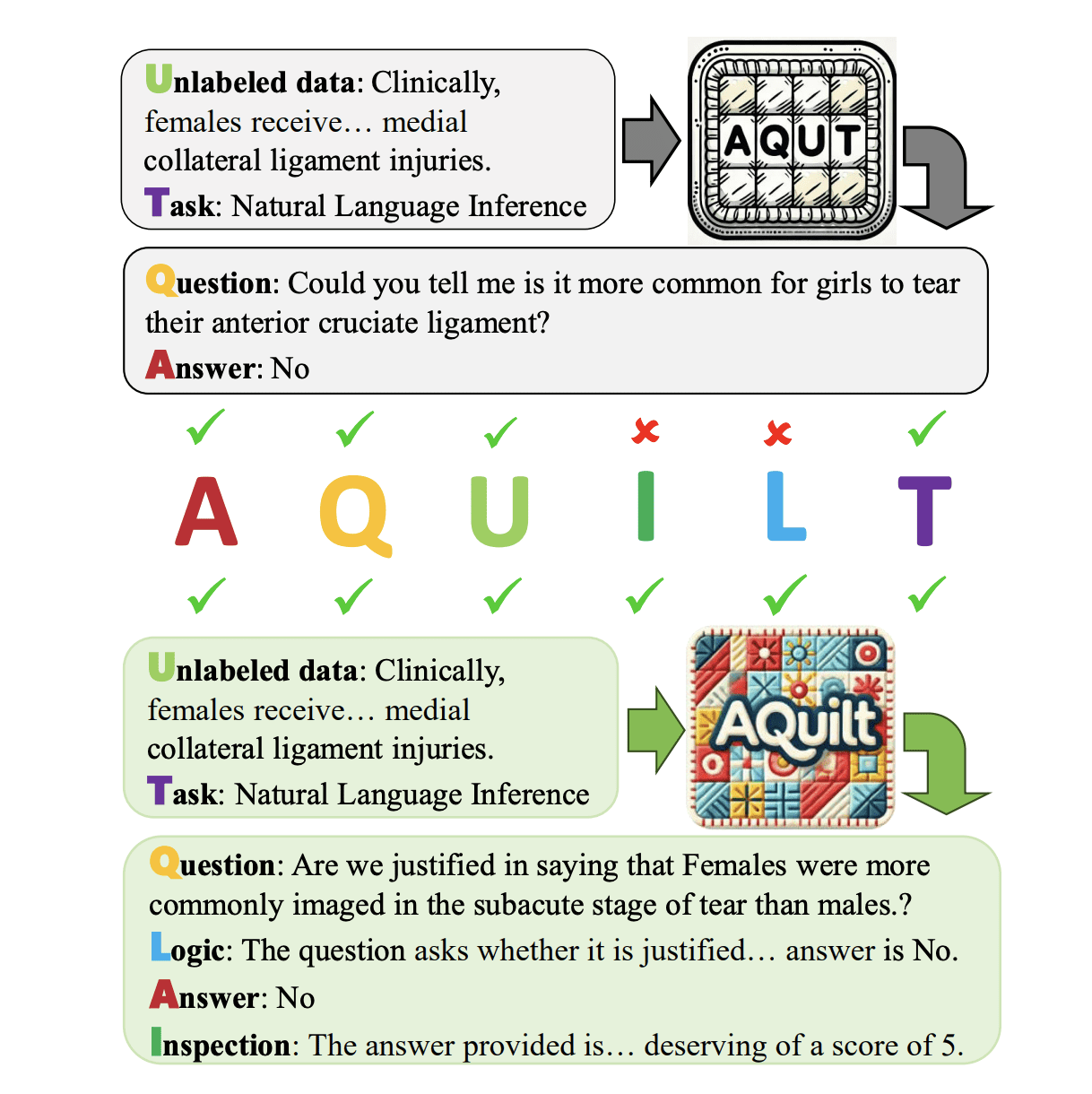

To address the limitations of large language models in specialized fields, researchers developed AQuilt, a framework for generating high-quality training data from unlabeled sources. Existing methods for creating synthetic data often depend on expensive, powerful models or fail to capture the nuances of specific domains, which limits both their effectiveness and their accessibility. AQuilt instead takes a cost-effective approach that exploits the domain-specific knowledge already contained in unlabeled data. Its core innovation is to extend the traditional question-answer format of synthetic data with two additional layers of information: Logic and Inspection.
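The article does not specify how AQuilt stores its examples, so the following is only a minimal Python sketch of the idea, assuming each record simply carries the extra layers as text fields. The class names `QAPair` and `ExpandedExample`, the function `to_target_text`, and the choice to place Logic before Answer are all hypothetical, chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class QAPair:
    """Conventional synthetic example: a question and its answer only."""
    question: str
    answer: str


@dataclass
class ExpandedExample(QAPair):
    """Hypothetical AQuilt-style record: the QA pair plus two extra layers."""
    logic: str       # reasoning that justifies the answer
    inspection: str  # the model's own assessment of the answer's quality


def to_target_text(ex: ExpandedExample) -> str:
    """Serialize a record into the text a synthesis model would learn to emit.

    Placing Logic before Answer is an assumption made for this sketch.
    """
    return (
        f"Question: {ex.question}\n"
        f"Logic: {ex.logic}\n"
        f"Answer: {ex.answer}\n"
        f"Inspection: {ex.inspection}"
    )


if __name__ == "__main__":
    example = ExpandedExample(
        question="Which clause caps the landlord's liability?",
        answer="Clause 7",
        logic="The passage states that clause 7 limits damages owed by the landlord.",
        inspection="The answer is directly supported by the passage.",
    )
    print(to_target_text(example))
```

Making the expanded record a subclass of the plain pair keeps the design point visible in this sketch: every expanded example is still a usable question-answer pair, with reasoning and self-assessment layered on top.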

Rather than producing bare question-answer pairs, AQuilt generates, for each answer, the logical reasoning behind it and a self-assessment of its quality. This encourages the language model not only to give correct responses but also to justify its reasoning and evaluate its own work, with the aim of improving the model’s reasoning abilities and the reliability of the generated data. The framework also incorporates a ‘Task type’ component, which broadens the model’s adaptability and improves its performance on unseen tasks. A smaller, specialized data synthesis model is then trained on this expanded data, substantially reducing the computational cost of data generation. To train it, the researchers constructed a dataset of more than 700,000 examples, and their experiments show that AQuilt performs comparably to much larger and more expensive models while using only a fraction of the resources. This makes high-quality, domain-specific data generation more accessible and practical for a wider range of applications.
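To illustrate how a Task type might steer a smaller synthesis model, here is a hedged Python sketch that pairs an unlabeled passage with a requested task type and then splits the model’s raw output into its Question, Logic, Answer, and Inspection sections. The task-type labels, the prompt template, and the helpers `build_synthesis_input` and `parse_synthesis_output` are hypothetical; the actual model call is omitted, and AQuilt’s real input and output formats may differ.

```python
import re

# Hypothetical task-type labels; the set AQuilt actually uses may differ.
TASK_TYPES = {"extractive_qa", "multiple_choice", "summarization", "text_classification"}
REQUIRED = {"question", "logic", "answer", "inspection"}
_HEADER = re.compile(r"^(Question|Logic|Answer|Inspection):\s*(.*)$")


def build_synthesis_input(unlabeled_text: str, task_type: str) -> str:
    """Pair a raw, unlabeled passage with the requested task type (hypothetical template)."""
    if task_type not in TASK_TYPES:
        raise ValueError(f"unknown task type: {task_type!r}")
    return f"Task type: {task_type}\nUnlabeled data:\n{unlabeled_text.strip()}\n"


def parse_synthesis_output(raw: str) -> dict:
    """Split raw model output into labeled sections.

    Lines without a recognized header are treated as continuations of the
    previous section, so multi-line reasoning is preserved.
    """
    sections: dict = {}
    current = None
    for line in raw.splitlines():
        match = _HEADER.match(line)
        if match:
            current = match.group(1).lower()
            sections[current] = [match.group(2)]
        elif current is not None:
            sections[current].append(line)
    parsed = {key: "\n".join(lines).strip() for key, lines in sections.items()}
    # Reject incomplete generations instead of keeping a partial sample.
    missing = REQUIRED - set(parsed)
    if missing:
        raise ValueError(f"incomplete sample, missing: {sorted(missing)}")
    return parsed
```

Rejecting outputs that lack a section is one simple way to keep malformed generations out of a synthetic dataset.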

Synthetic Data Boosts Specialized Language Model Performance

Researchers have developed a new framework, AQuilt, designed to improve the performance of large language models (LLMs) in specialized fields such as law and medicine, where general-purpose models often struggle. The core innovation is a method for creating high-quality training data from unlabeled sources, teaching the LLM the nuances of a specific domain without expensive, manually labeled datasets. Unlike existing approaches, which rely either on powerful, costly models or on limited, simplistic data generation, AQuilt focuses on extracting maximum value from readily available unlabeled data. It also goes beyond plain question-answer pairs by adding “logic” and “inspection” components to the synthetic data.

These components encourage the LLM to reason through problems and evaluate its own responses, leading to more accurate and reliable results. By expanding the range of task types it generates data for, the framework also improves the model’s ability to generalize to new, unseen tasks. The team used this method to build a dataset of 703,000 examples, demonstrating its scalability and efficiency. Experiments show that AQuilt performs comparably to DeepSeek-V3, a leading LLM, at only 17% of the production cost. The saving comes from training a smaller, dedicated data synthesis model, which makes advanced LLM technology more accessible.
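One plausible way to put self-inspection to work is as a quality filter over generated samples. The Python sketch below assumes, purely for illustration, that each inspection text ends with a line such as `Score: 8` on a 1 to 10 scale and that 7 is a sensible cut-off; neither the score format nor the threshold comes from the article, and `inspection_score` and `filter_by_inspection` are hypothetical helpers.

```python
import re
from typing import Iterable, List, Optional

# Hypothetical convention: the inspection text ends with "Score: N" (1-10).
_SCORE = re.compile(r"Score:\s*(\d+)\s*$")


def inspection_score(inspection: str) -> Optional[int]:
    """Extract the trailing self-assessment score, or None if absent."""
    match = _SCORE.search(inspection.strip())
    return int(match.group(1)) if match else None


def filter_by_inspection(samples: Iterable[dict], threshold: int = 7) -> List[dict]:
    """Keep samples whose self-inspection score meets the (assumed) threshold.

    Samples without a parseable score are dropped rather than trusted.
    """
    kept = []
    for sample in samples:
        score = inspection_score(sample.get("inspection", ""))
        if score is not None and score >= threshold:
            kept.append(sample)
    return kept
```

Dropping unscored samples errs on the side of precision: in this sketch, a generation whose self-assessment cannot be read is treated as unverified.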

Further analysis confirms that the logic and inspection components, together with the higher relevance of the generated data, contribute directly to the framework’s strong performance. The researchers have made their data synthesis model, training data, and code publicly available, supporting further work on specialized LLMs and data synthesis techniques. This open release could accelerate progress toward more capable and cost-effective AI systems for the many specialized domains where general-purpose models fall short.

Logic and Inspection Improve Data Synthesis

This research presents AQuilt, a framework that generates high-quality training data for specialized domains from unlabeled data. The method incorporates logic and self-inspection to strengthen the reasoning in the generated data, and it can be customized for different tasks. Experiments show that AQuilt performs comparably to DeepSeek-V3 at less than 17% of the production cost, while generating data that is more relevant to downstream tasks. AQuilt also outperforms the Bonito data synthesis model in both task generalization and overall performance, highlighting the effectiveness of the logic and inspection components. The authors acknowledge limitations, including reliance on a single source for distilled data and a focus on high-resource languages, and suggest that future work could draw on more data sources and evaluate performance on mid- and low-resource languages. They also plan to investigate integrating their self-inspection framework with more advanced data synthesis models, such as DeepSeek-R1 and Kimi-K1.

👉 More information

🗞 AQuilt: Weaving Logic and Self-Inspection into Low-Cost, High-Relevance Data Synthesis for Specialist LLMs

🧠 DOI: https://doi.org/10.48550/arXiv.2507.18584