Detecting moving objects quickly and accurately is crucial for applications like self-driving cars and robotics. Motion segmentation offers a powerful way to achieve this by identifying dynamic elements in a scene without needing to classify them. Riku Inoue, Masamitsu Tsuchiya, and Yuji Yasui at Honda R&D present a new approach to motion segmentation that significantly boosts efficiency. Their research introduces Channel-wise Motion Features, a cost-volume-based method that captures 3D motion information using only a pose network, whereas existing models rely on multiple complex subnetworks. The results show roughly a four-fold increase in processing speed on standard datasets, with comparable accuracy and about 75 percent fewer model parameters, a substantial advance for real-time applications.

Streamlined Motion Segmentation with Feature Channels

Accurately detecting moving objects in real-time is essential for applications like self-driving cars and robotics, allowing these systems to navigate complex environments safely. Motion segmentation, a technique for identifying these dynamic objects, offers a promising solution by classifying pixels as either moving or stationary. However, current motion segmentation models often rely on multiple complex subnetworks to estimate various scene properties, significantly increasing computational demands and hindering real-time performance. Researchers Riku Inoue, Masamitsu Tsuchiya, and Yuji Yasui at Honda R&D have developed a novel approach called Channel-wise Motion Features to address this challenge.

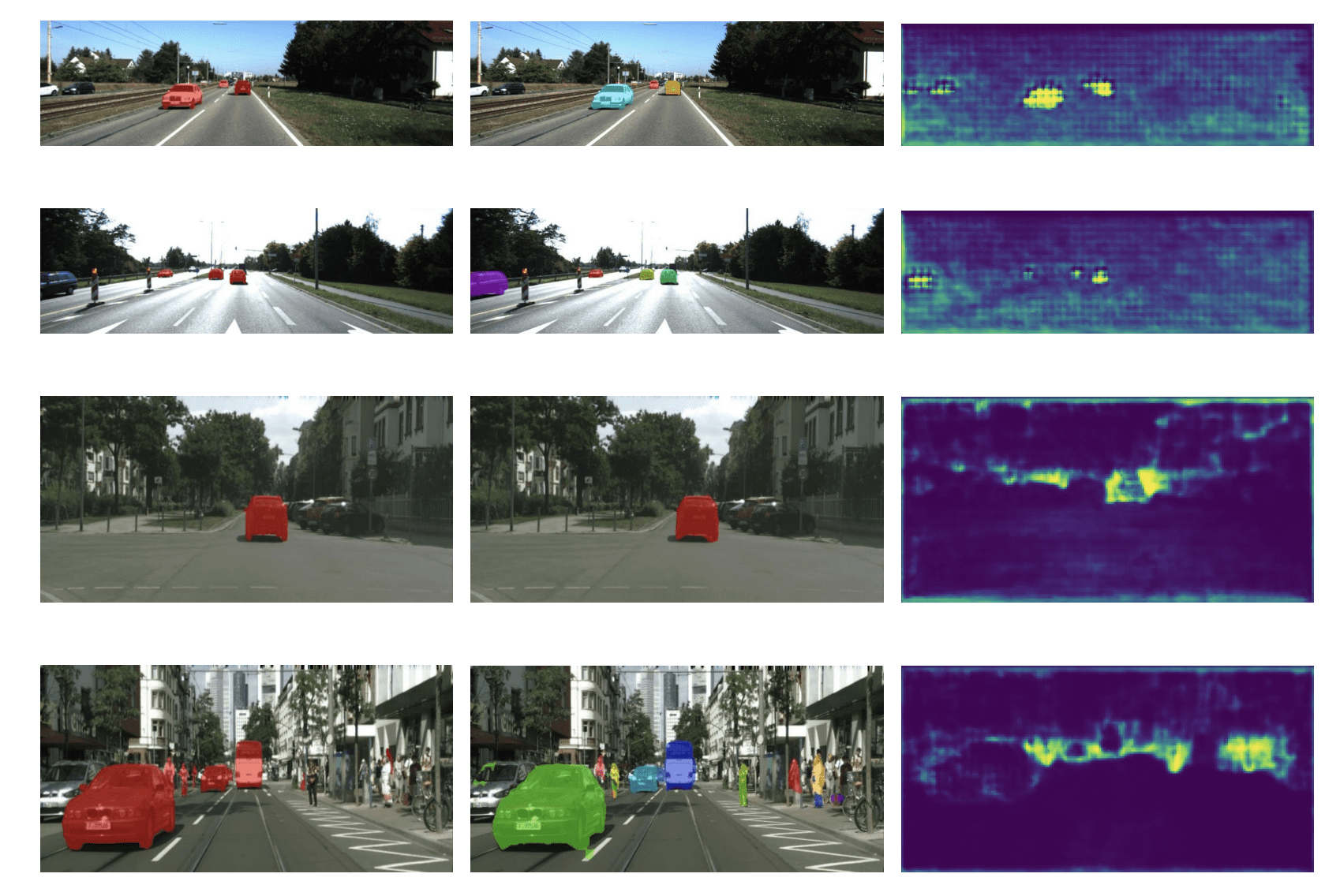

Their method streamlines the process by efficiently representing motion information within a 3D framework, reducing the need for multiple subnetworks. The team observed inconsistencies in existing cost volume techniques when applied to regions containing moving objects, and leveraged this characteristic to enhance motion detection. The core of their innovation lies in a new representation that extracts depth features and captures 3D motion information using only the pose network, a significant reduction in computational load. By aggregating information from feature maps, the model constructs a 4D cost volume that highlights the unique characteristics of moving objects, making them easier to identify.
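
To make the cost-volume idea concrete, here is a minimal sketch, not the authors' implementation. It assumes a camera that translates purely sideways between two frames, a single scalar feature per pixel, and nearest-pixel sampling. The names `build_cost_volume`, `best_depth`, `focal`, and `baseline` are hypothetical and chosen only for illustration. For each depth hypothesis, the source frame is shifted by the amount a static point at that depth would move, and the matching cost against the target frame is stored.

```python
def build_cost_volume(target, source, depths, focal, baseline):
    """Return cost[d][y][x] = |target[y][x] - source[y][x - shift_d]|.

    Assumption: the camera moved sideways by `baseline`, so a static point
    at depth z shifts by focal * baseline / z pixels between the frames.
    """
    height, width = len(target), len(target[0])
    volume = []
    for z in depths:
        shift = round(focal * baseline / z)
        plane = []
        for y in range(height):
            row = []
            for x in range(width):
                xs = x - shift
                if 0 <= xs < width:
                    row.append(abs(target[y][x] - source[y][xs]))
                else:
                    row.append(float("inf"))  # hypothesis falls outside the image
            plane.append(row)
        volume.append(plane)
    return volume


def best_depth(volume, depths, y, x):
    """Depth hypothesis with the lowest matching cost at pixel (y, x)."""
    costs = [plane[y][x] for plane in volume]
    return depths[costs.index(min(costs))]


# Toy one-row scene: everything lies at depth 4 (a 2-pixel shift).
width = 16
source = [[float(10 * x) for x in range(width)]]
target = [[float(10 * (x - 2)) for x in range(width)]]

# One object (feature value 500) also moves 2 extra pixels on its own.
source[0][5] = 500.0
target[0][9] = 500.0

depths = [2.0, 4.0, 8.0]
volume = build_cost_volume(target, source, depths, focal=8.0, baseline=1.0)

static_depth = best_depth(volume, depths, 0, 12)
moving_depth = best_depth(volume, depths, 0, 9)
print("static pixel:", static_depth, "moving pixel:", moving_depth)
```

In this toy scene the static pixel's cost is lowest at its true depth of 4, while the independently moving pixel's minimum lands on depth 2. That mismatch is the kind of inconsistency in moving regions that the article describes the team exploiting. The paper's cost volume is 4D, so it carries one more dimension than this three-dimensional sketch.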

This approach achieves approximately four times the processing speed of state-of-the-art models on standard datasets, while maintaining comparable accuracy and reducing the number of parameters required. Through extensive experimentation, the researchers demonstrate the effectiveness of Channel-wise Motion Features in accurately and efficiently segmenting motion in complex scenes. They also introduce a novel depth range setting specifically tailored for detecting moving objects, further enhancing the model’s performance. This advancement represents a significant step towards enabling real-time, robust motion segmentation for safety-critical applications, paving the way for more reliable and responsive autonomous systems.

Faster, Accurate Motion Segmentation with Channel Features

The model achieves approximately four times the frames per second (FPS) of state-of-the-art models on both the KITTI Dataset and Cityscapes from the VCAS-Motion Dataset. This gain comes with accuracy comparable to existing approaches. The method also cuts the parameter count to approximately 25% of that used by comparable models. This combination of higher speed and lower computational cost represents a substantial advancement in the field.

Efficient Motion Features From Single Pose Network

Because existing models typically rely on multiple subnetworks, their overall computational cost increases, hindering real-time performance. This research proposes a novel cost-volume-based motion feature representation, termed Channel-wise Motion Features, which offers enhanced efficiency. The method extracts depth features from each instance in the feature map and captures the scene's 3D motion information. Notably, the Pose Network is the only subnetwork used to build Channel-wise Motion Features, eliminating the need for others.

The authors propose Channel-wise Motion Features, a novel motion feature representation for motion segmentation. The work targets a trade-off between inference speed and accuracy that earlier models had not achieved, and demonstrates the efficiency and effectiveness of the proposed model. Experiments show that the model achieves approximately four times the FPS and reduces parameters to about 25%, with only a 6.09% F-measure drop on the KITTI Dataset and a 0.48% CAQ decrease on the VCAS-Motion Cityscapes Dataset compared to state-of-the-art models. Furthermore, ablation analysis demonstrates that Channel-wise Motion Features effectively captures features of moving objects.
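
For readers unfamiliar with the F-measure quoted above, the sketch below computes the standard pixel-level definition, the harmonic mean of precision and recall, on flat binary masks. It is an illustration under assumptions: the exact evaluation protocol used in the paper (for example per-object versus per-pixel scoring, or how scores are averaged) is not described here, and the function name `f_measure` and the toy masks are hypothetical.

```python
def f_measure(pred, truth):
    """Standard F-measure (F1) for two equal-length binary masks.

    pred, truth: sequences of 0/1 where 1 marks a pixel labeled as moving.
    """
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy masks (made-up data): 2 true positives, 1 false positive, 1 false negative.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 1, 1, 0]
score = f_measure(pred, truth)
print(f"F-measure: {score:.3f}")
```

Because the score penalizes both missed moving pixels and false alarms, a drop in F-measure can come from either source; the reported figure alone does not say which.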

The authors expect that these findings will lead to advancements in motion segmentation and in applications such as autonomous driving.

Limitations

Similar to previous methods based on instance activation, the model is weak at detecting smaller objects. Distant moving objects, whose apparent motion is subtle, are also not reliably detected. One potential remedy is to use high-resolution feature maps when constructing the cost volume, although this significantly increases computational cost. Processing the 4D cost volume with 3D convolutions also requires a large amount of GPU memory, so practical ways to reduce it are needed. The authors intend to address these challenges in future research.
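
The memory concern can be made tangible with a back-of-the-envelope estimate. The sketch below counts the bytes needed to hold a single 4D cost volume in 32-bit floats. All sizes are hypothetical and are not taken from the paper, and the estimate ignores the intermediate activations of the 3D convolutions, which would add to the total.

```python
def cost_volume_bytes(channels, depth_bins, height, width,
                      batch=1, bytes_per_value=4):
    """Bytes needed to store one dense cost volume (float32 by default)."""
    return batch * channels * depth_bins * height * width * bytes_per_value


def to_mib(num_bytes):
    """Convert bytes to mebibytes."""
    return num_bytes / (1024 ** 2)


# Hypothetical sizes chosen only for illustration.
low_res = cost_volume_bytes(channels=32, depth_bins=64, height=96, width=320)
high_res = cost_volume_bytes(channels=32, depth_bins=64, height=192, width=640)

print(f"low-res volume:  {to_mib(low_res):.0f} MiB")
print(f"high-res volume: {to_mib(high_res):.0f} MiB")
```

Doubling the feature-map height and width quadruples the storage for the volume, which is why higher-resolution feature maps help with small or distant objects only at a steep memory cost.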

👉 More information

🗞 Channel-wise Motion Features for Efficient Motion Segmentation

🧠 DOI: https://doi.org/10.48550/arXiv.2507.13082