Large language models that translate natural language into executable SQL open up data access to non-technical users, but they have no built-in way to express uncertainty. Research evaluating confidence estimation strategies for supply chain data retrieval finds that language models frequently overestimate their own accuracy, while embedding-based semantic similarity proves most effective at identifying flawed SQL queries.

The increasing application of large language models (LLMs) to data analysis presents both opportunities and challenges for organisations seeking to unlock insights from complex, structured datasets. LLMs open up information to users without specialist technical skills by converting natural language queries into executable SQL code, but they cannot inherently quantify how reliable those generated queries are. Jiekal, Yikai Zhao, and colleagues from Amazon Web Services Supply Chain address this issue in their research, detailed in the paper “Confidence Scoring for LLM-Generated SQL in Supply Chain Data Extraction”. Their work evaluates several methods for estimating the confidence of LLM-generated SQL queries in the context of supply chain data retrieval, testing how well translation-based consistency checks, embedding-based semantic similarity, and self-reported confidence scores identify inaccurate outputs.

Supply chain analytics increasingly utilises Large Language Models (LLMs) to convert natural language questions into executable Structured Query Language (SQL), thereby broadening access to structured data and empowering a wider range of users to extract valuable insights. However, LLMs do not inherently quantify uncertainty, presenting significant challenges in assessing the reliability of generated queries and necessitating robust confidence scoring mechanisms.

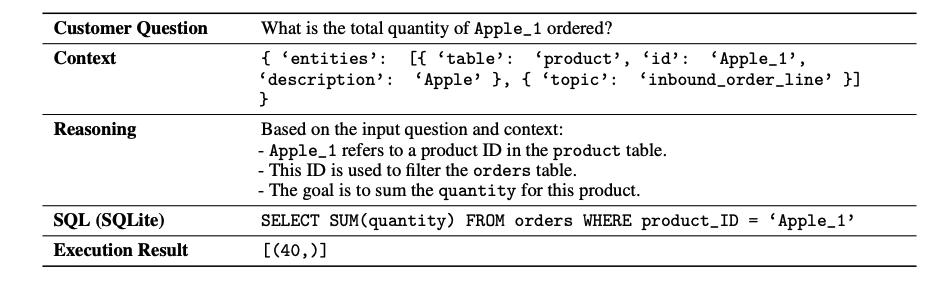

The study compares three approaches to determine which best identifies inaccurate queries on complex supply chain data. Translation-based consistency checks assess reliability by translating the generated SQL back into natural language and comparing the result with the original question for logical consistency and semantic equivalence. Embedding-based similarity, by contrast, measures the semantic closeness between the user’s question and the generated SQL, on the assumption that higher similarity indicates a more faithful representation of the user’s intent and therefore greater accuracy. The third approach simply asks the LLM to state its own confidence in the query. The study uses Claude Sonnet 3 as its LLM and focuses on its performance with the data structures typical of supply chain analytics.
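To make the two automated scores concrete, here is a minimal Python sketch. It is not the authors’ implementation: `toy_embed` is a hypothetical bag-of-words stand-in for a real sentence-embedding model, `sql_to_text` is a hypothetical placeholder for an LLM call that back-translates SQL into English, and the comparison step of the translation check is approximated with the same cosine similarity, whereas the study may compare the two texts differently.

```python
import math
import re
from collections import Counter
from typing import Callable


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for a sentence-embedding model: a bag-of-tokens
    count vector. A real system would call an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def embedding_score(question: str, sql: str,
                    embed: Callable[[str], Counter] = toy_embed) -> float:
    """Embedding-based confidence: similarity of the question and the SQL."""
    return cosine(embed(question), embed(sql))


def translation_score(question: str, sql: str,
                      sql_to_text: Callable[[str], str],
                      embed: Callable[[str], Counter] = toy_embed) -> float:
    """Translation-based consistency: back-translate the SQL with the
    hypothetical `sql_to_text` function, then compare the result with
    the original question (here via cosine similarity, an assumption)."""
    return cosine(embed(question), embed(sql_to_text(sql)))


if __name__ == "__main__":
    question = "total shipped units by warehouse"
    sql = "SELECT warehouse, SUM(shipped_units) FROM shipments GROUP BY warehouse"

    def fake_back_translate(query: str) -> str:
        # hypothetical output of an LLM asked to describe the SQL in English
        return "total shipped units for each warehouse"

    print(round(embedding_score(question, sql), 3))
    print(round(translation_score(question, sql, fake_back_translate), 3))
```

Swapping in a real embedding model would change only the `embed` function and the vector type; the idea of scoring a query by its similarity to the request stays the same.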

The findings reveal poor calibration: the model frequently assigns high confidence scores to incorrect SQL queries, which undermines trust in its results. Self-reported confidence therefore cannot serve on its own as a reliable indicator of accuracy, and supplementary methods are needed to capture the uncertainty in SQL generation. Embedding-based similarity, by contrast, discriminates more effectively between accurate and inaccurate SQL, flagging queries that do not reflect the intent of the request. As the research highlights, obtaining an answer is not enough; in data-sensitive applications such as supply chain management, users also need grounds to trust that the answer is valid.
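The two properties at stake here, calibration and discrimination, can be measured in a few lines of Python. The sketch below is illustrative rather than the study’s evaluation code: it computes AUROC (how well a score separates correct from incorrect queries) and a simple overconfidence gap (mean stated confidence minus observed accuracy). The four labelled queries in the usage example are invented toy values, not results from the paper.

```python
from typing import Sequence


def auroc(scores: Sequence[float], correct: Sequence[bool]) -> float:
    """Probability that a randomly chosen correct query scores higher than a
    randomly chosen incorrect one (ties count half). 0.5 = no discrimination."""
    pos = [s for s, c in zip(scores, correct) if c]
    neg = [s for s, c in zip(scores, correct) if not c]
    if not pos or not neg:
        raise ValueError("need both correct and incorrect examples")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def overconfidence_gap(confidences: Sequence[float],
                       correct: Sequence[bool]) -> float:
    """Mean stated confidence minus observed accuracy; positive = overconfident."""
    mean_conf = sum(confidences) / len(confidences)
    accuracy = sum(correct) / len(correct)
    return mean_conf - accuracy


if __name__ == "__main__":
    # invented toy data: two correct and two incorrect SQL queries
    correct = [True, True, False, False]
    self_reported = [0.95, 0.9, 0.9, 0.95]  # high confidence regardless
    embedding = [0.8, 0.7, 0.3, 0.4]        # lower for the wrong queries
    print(auroc(self_reported, correct))    # 0.5
    print(auroc(embedding, correct))        # 1.0
    print(round(overconfidence_gap(self_reported, correct), 3))  # 0.425
```

AUROC only ranks queries, so a score can discriminate well while still being poorly calibrated in absolute terms; that is why the two measures are reported separately.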

Embedding-based approaches therefore offer a promising route to more trustworthy LLM-powered data analysis, outperforming simpler metrics and giving a more nuanced assessment of query correctness. The research underscores the need for robust confidence scoring to reduce the risk of flawed insights and costly decisions caused by inaccurate SQL queries in supply chain operations.
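One way to put a confidence score to work is to gate execution: queries above a threshold run automatically, and the rest go to an analyst. The following Python sketch shows such a policy under stated assumptions. The `ScoredQuery` type, the `route` policy and the 95% precision target are all hypothetical choices for illustration, not something the paper prescribes, and the threshold is picked from labelled historical queries rather than fixed by hand.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class ScoredQuery:
    sql: str
    confidence: float  # e.g. an embedding-similarity score in [0, 1]


def pick_threshold(scores: Sequence[float], correct: Sequence[bool],
                   target_precision: float = 0.95) -> Optional[float]:
    """Return the lowest threshold at which the queries it would accept
    (score >= threshold) meet the target precision on labelled data,
    or None if no threshold does."""
    for t in sorted(set(scores)):
        accepted = [c for s, c in zip(scores, correct) if s >= t]
        # `accepted` is never empty because t is one of the observed scores
        if sum(accepted) / len(accepted) >= target_precision:
            return t
    return None


def route(query: ScoredQuery, threshold: float) -> str:
    """Hypothetical policy: execute confident SQL, flag the rest for review."""
    return "execute" if query.confidence >= threshold else "review"


if __name__ == "__main__":
    # invented labelled history: scores and whether each SQL was correct
    scores = [0.8, 0.7, 0.3, 0.4]
    correct = [True, True, False, False]
    t = pick_threshold(scores, correct)
    assert t is not None
    print(t)  # 0.7
    sql = "SELECT sku, SUM(qty) FROM orders GROUP BY sku"
    print(route(ScoredQuery(sql, 0.82), t))  # execute
    print(route(ScoredQuery(sql, 0.55), t))  # review
```

Choosing the lowest qualifying threshold keeps as many queries automated as possible while holding precision on the historical sample at the target; in practice the labelled set would need to be far larger for that estimate to be meaningful.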

Retrieval-Augmented Generation (RAG), a technique that retrieves relevant information at query time and adds it to the LLM’s prompt, may improve SQL generation, but it does not resolve the underlying calibration problem.

Future research should examine fine-tuning LLMs on domain-specific data, which could tailor the model to the nuances of supply chain analytics and improve both SQL generation accuracy and the reliability of its confidence scores. Ultimately, robust confidence scoring is essential for deploying LLM-powered text-to-SQL systems safely and effectively in critical applications such as supply chain management, where trust underpins data-driven decision-making.

Evaluating the methods on a wider range of supply chain datasets and query types is also needed to confirm that the findings are robust and generalise. Research should also explore combining factual grounding with transparent reasoning to further improve performance.

👉 More information

🗞 Confidence Scoring for LLM-Generated SQL in Supply Chain Data Extraction

🧠 DOI: https://doi.org/10.48550/arXiv.2506.17203