A new framework generates real-time, audio-driven portrait videos with diffusion models, reaching up to 78 frames per second (FPS) at 384×384 resolution and 45 FPS at 512×512. Variable-length generation, consistency training, and quantization keep the initial latency low (140-215 ms), a new inference strategy keeps long videos stable, and conditional inputs give control over facial expressions and avatar states.

The demand for realistic, responsive digital avatars continues to grow across entertainment, communication, and virtual reality applications. Convincing interaction requires not only visually accurate representations but also the ability to generate video in real time, synchronised with audio input. Researchers at Meituan Inc. (Haojie Yu, Chao Wang, Zhaonian Wang, Tao Xie, Xiaoming Xu, Yihan Pan, Meng Cheng, Hao Yang, and Xunliang Cai) describe a new framework, ‘LLIA – Enabling Low-Latency Interactive Avatars: Real-Time Audio-Driven Portrait Video Generation with Diffusion Models’, which tackles the heavy computational demands that have so far limited the use of diffusion models in real-time interactive avatars. Their work focuses on optimising video generation speed and stability, enabling fluid, low-latency communication through digitally rendered portraits.

Real-time Audio-Driven Portrait Video Generation Achieved Through Optimised Diffusion Models

Researchers have developed a framework that generates portrait videos in real time from audio input, addressing the high computational cost that has limited the deployment of diffusion models in interactive applications. The system supports fluid, natural two-way communication, an advance for realistic virtual human experiences.

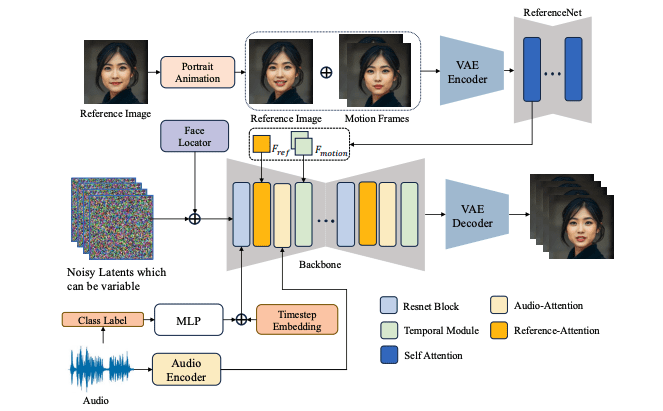

The core of the innovation lies in removing computational bottlenecks. The team implemented variable-length video generation, which shortens the delay before the first frames appear, a critical factor for natural interaction when the avatar changes state. They then adapted a consistency-model training strategy to Audio-Image-to-Video generation. Consistency models cut the number of denoising steps needed at inference (the generation of output from input data) without noticeably compromising visual fidelity. Further speed gains came from model quantization, which reduces the numerical precision of the model's weights and computations, and from pipeline parallelism, which distributes the computational load across multiple processing units.
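The speed-up from consistency models comes from replacing a long chain of denoising steps with one or a few calls to a consistency function that maps a noisy input directly to a clean sample. The article does not give LLIA's Audio-Image-to-Video schedule, so the sketch below shows only the generic multistep sampling procedure from the original consistency-models work (Song et al., 2023), on a toy 1-D Gaussian dataset where the consistency function has a closed form. The names `consistency_fn` and `multistep_sample` and the constants `MU`, `S`, `EPS` and `T` are illustrative assumptions; in a real system the function would be a trained network conditioned on audio and a reference image.

```python
import math
import random
import statistics

# Toy setup (assumption): 1-D data drawn from N(MU, S**2) and variance-exploding
# noise with sigma(t) = t, as in the original consistency-models formulation.
MU, S = 2.0, 1.0
EPS, T = 0.002, 80.0


def consistency_fn(x: float, t: float) -> float:
    """Exact consistency function for the toy Gaussian: maps a point on the
    probability-flow ODE trajectory at time t back to time EPS.
    It satisfies the boundary condition consistency_fn(x, EPS) == x.
    A real system would use a trained network here instead."""
    return MU + (x - MU) * math.sqrt(S**2 + EPS**2) / math.sqrt(S**2 + t**2)


def multistep_sample(taus: list[float], rng: random.Random) -> float:
    """Multistep consistency sampling: one jump from pure noise, then
    optional re-noise/denoise refinements at decreasing times `taus`."""
    # Start from the prior N(0, T**2) and map to data in a single call.
    x = consistency_fn(T * rng.gauss(0.0, 1.0), T)
    for tau in taus:
        # Re-inject noise to reach time tau, then map back with one call.
        x_tau = x + math.sqrt(tau**2 - EPS**2) * rng.gauss(0.0, 1.0)
        x = consistency_fn(x_tau, tau)
    return x


rng = random.Random(0)
samples = [multistep_sample([20.0, 5.0], rng) for _ in range(50_000)]
print(f"mean={statistics.fmean(samples):.3f} std={statistics.pstdev(samples):.3f}")
```

With two refinement times the printed mean and standard deviation land close to `MU` and `S`. Each extra entry in `taus` costs one more network call, which is the quality-versus-latency knob a real-time system tunes.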

To mitigate potential instability arising from these optimisations, the researchers devised a novel inference strategy for long-duration video generation. This ensures consistent output quality and realistic visuals over extended sequences. The framework also incorporates class labels as conditional inputs, allowing seamless transitions between speaking, listening, and idle states, thereby expanding the range of possible interactions.
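Class-label conditioning is commonly implemented by looking up a learned embedding for the label and adding it to the diffusion timestep embedding, as class-conditional diffusion transformers (DiT) do. The article does not say how LLIA injects its labels or decides when to switch states, so the sketch below is a hypothetical illustration: `AvatarState`, `pick_state`, the embedding width `DIM` and the additive combination are all assumptions.

```python
import math
import random
from enum import IntEnum


class AvatarState(IntEnum):
    """Hypothetical label ids for the three states named in the article."""
    SPEAKING = 0
    LISTENING = 1
    IDLE = 2


def pick_state(avatar_talking: bool, user_talking: bool) -> AvatarState:
    """Hypothetical rule for labelling the next video chunk."""
    if avatar_talking:
        return AvatarState.SPEAKING
    if user_talking:
        return AvatarState.LISTENING
    return AvatarState.IDLE


DIM = 8  # assumption: toy embedding width


def timestep_embedding(t: float, dim: int = DIM) -> list[float]:
    """Sinusoidal embedding of the diffusion timestep t."""
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.sin(t * f) for f in freqs] + [math.cos(t * f) for f in freqs]


# One learnable vector per class; randomly initialised here for illustration.
_rng = random.Random(0)
CLASS_TABLE = [[_rng.gauss(0.0, 0.02) for _ in range(DIM)] for _ in AvatarState]


def conditioning(t: float, state: AvatarState) -> list[float]:
    """Add the class embedding to the timestep embedding (DiT-style)."""
    return [a + b for a, b in zip(timestep_embedding(t), CLASS_TABLE[state])]


state = pick_state(avatar_talking=False, user_talking=True)
print(state.name, [round(v, 3) for v in conditioning(0.5, state)])
```

Because the label enters as just another conditioning vector, switching from listening to speaking only changes which row of the table is added; the denoiser itself is unchanged.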

The system builds on established tools. Audio input is encoded with wav2vec 2.0, a self-supervised speech-representation model, producing features that capture the content and timing of speech. MediaPipe is used for facial landmark detection (locating key points on the face) to support realistic animation. Training draws on datasets such as MEAD, a multi-view emotional audio-visual dataset of talking faces, which provides a rich source of realistic facial expressions and movements.
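wav2vec 2.0 emits one feature vector roughly every 20 ms (about 50 per second for 16 kHz audio), while video runs at its own frame rate, so audio-driven portrait systems typically resample the audio features to one vector per video frame. The article does not describe LLIA's alignment step; the sketch below assumes simple linear interpolation, and `resample_features` is a hypothetical helper operating on plain lists rather than real wav2vec 2.0 outputs.

```python
def resample_features(
    feats: list[list[float]], src_hz: float, dst_hz: float
) -> list[list[float]]:
    """Linearly interpolate a (time x dim) feature sequence from src_hz to dst_hz."""
    n_out = round(len(feats) * dst_hz / src_hz)
    last = len(feats) - 1
    out = []
    for k in range(n_out):
        pos = min(k * src_hz / dst_hz, last)  # fractional source index, clamped
        i = int(pos)
        j = min(i + 1, last)
        w = pos - i  # interpolation weight toward the next source frame
        out.append([(1.0 - w) * a + w * b for a, b in zip(feats[i], feats[j])])
    return out


# Hypothetical stand-in for 1 s of wav2vec 2.0 output: 50 vectors of width 2.
audio_feats = [[float(k), 2.0 * k] for k in range(50)]
video_feats = resample_features(audio_feats, src_hz=50.0, dst_hz=25.0)
print(len(video_feats), video_feats[3])
```

For a 25 FPS target this keeps every second audio vector (the call above yields 25 frames, with frame 3 equal to `[6.0, 12.0]`); for rates that do not divide 50 evenly, neighbouring vectors are blended.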

Performance benchmarks support these design choices. On an NVIDIA RTX 4090D GPU, the system reaches a maximum of 78 FPS at 384×384 resolution and 45 FPS at 512×512 resolution, with an initial video generation latency of 140 ms and 215 ms, respectively, low enough for responsive interaction.
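The throughput figures translate directly into a per-frame time budget of 1000 / FPS milliseconds. The sketch below works through that arithmetic for the reported numbers and compares generation speed with a playback rate of 25 FPS; the playback rate and the `headroom` ratio are illustrative assumptions, not figures from the paper.

```python
# Figures reported in the article (NVIDIA RTX 4090D).
REPORTED = {
    "384x384": {"fps": 78, "first_latency_ms": 140},
    "512x512": {"fps": 45, "first_latency_ms": 215},
}
PLAYBACK_FPS = 25  # assumption: a typical talking-head playback rate


def per_frame_ms(fps: float) -> float:
    """Time available to generate one frame at a given throughput."""
    return 1000.0 / fps


def headroom(gen_fps: float, playback_fps: float = PLAYBACK_FPS) -> float:
    """Values above 1.0 mean frames are produced faster than they are played."""
    return gen_fps / playback_fps


for res, r in REPORTED.items():
    print(
        f"{res}: {per_frame_ms(r['fps']):.1f} ms/frame, "
        f"{headroom(r['fps']):.2f}x real time at {PLAYBACK_FPS} FPS, "
        f"first output after {r['first_latency_ms']} ms"
    )
```

At 384×384 each frame takes about 12.8 ms and at 512×512 about 22.2 ms, both under the 40 ms per frame that a 25 FPS stream allows.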

Future work will focus on enhancing interactivity and responsiveness, with the ultimate goal of creating virtual humans capable of seamless real-time interaction. Potential applications span entertainment, education, healthcare, and customer service, promising more immersive and engaging digital communication experiences.

Definitions:

- Diffusion Models: A class of generative models that learn to create data by reversing a process of gradually adding noise.

- Inference: The process of using a trained model to generate output from input data.

- Quantization: Reducing the precision of numerical values within a model to reduce its size and computational requirements.

- Pipeline Parallelism: Splitting a model or processing pipeline into sequential stages that run on different processing units, so that successive inputs can be processed concurrently at different stages.

- Self-Supervised Learning: A machine learning paradigm where the model learns from unlabeled data by creating its own supervisory signals.
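The quantization definition can be made concrete with the simplest scheme, symmetric per-tensor INT8 quantization: each weight is divided by a scale, rounded to an integer in [-127, 127], and multiplied back by the scale at compute time. The article does not specify which precision or scheme LLIA uses, so this is a generic sketch; `quantize_int8` and `dequantize` are hypothetical helper names.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_err)
```

Storing `q` as 8-bit integers takes a quarter of the memory of 32-bit floats, and because the largest weight maps exactly to 127 nothing is clipped, so the rounding error per weight is at most half of `scale`.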

👉 More information

🗞 LLIA — Enabling Low-Latency Interactive Avatars: Real-Time Audio-Driven Portrait Video Generation with Diffusion Models

🧠 DOI: https://doi.org/10.48550/arXiv.2506.05806