SmartAvatar generates fully rigged 3D human avatars from single images or text using large vision-language models. An autonomous verification loop iteratively refines avatar features, ensuring high fidelity and customisation. Benchmarks demonstrate superior performance in mesh quality, identity accuracy and animation readiness compared to existing methods.

The creation of realistic, customisable digital representations of people remains a complex challenge in computer graphics. Researchers are now demonstrating a new approach utilising large vision-language models (VLMs) to generate fully articulated 3D human avatars from either a single photograph or textual description. This method moves beyond the limitations of conventional diffusion-based techniques by incorporating an autonomous verification process, allowing for iterative refinement guided by artificial intelligence. Alexander Huang-Menders, Xinhang Liu, Andy Xu, Yuyao Zhang, Chi-Keung Tang, and Yu-Wing Tai detail their work in the article “SmartAvatar: Text- and Image-Guided Human Avatar Generation with VLM AI Agents”, published this week, outlining a system capable of producing animation-ready avatars with improved fidelity and control on standard consumer hardware.

SmartAvatar: A Novel Framework for Controllable 3D Human Avatar Generation

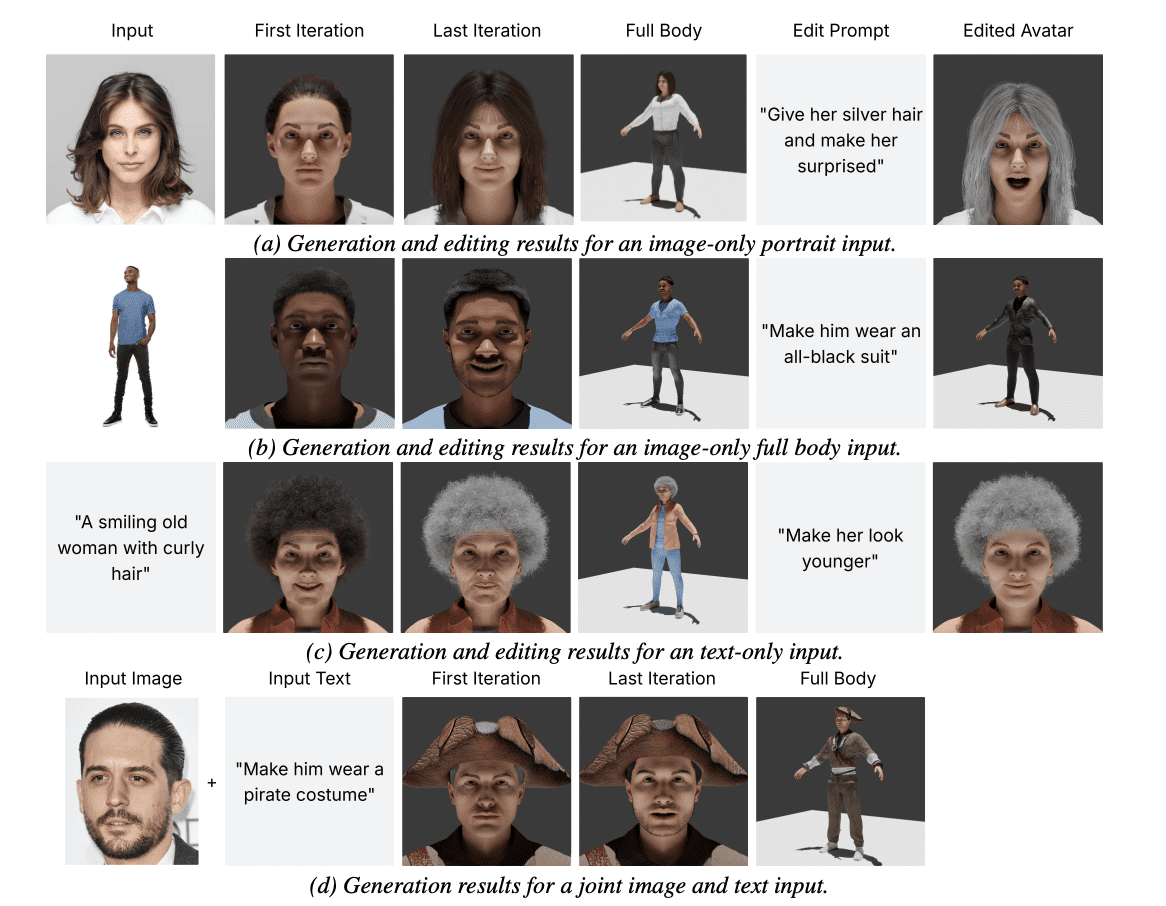

Generating realistic, controllable three-dimensional human avatars from a single image or a textual description has long been difficult in computer graphics. SmartAvatar presents a new framework for the task, distinguishing itself from existing diffusion-based methods by prioritising control over identity, body shape, and animation readiness. The system combines large vision-language models (VLMs), artificial intelligence systems trained to understand both images and text, with established parametric human generators.

SmartAvatar renders initial avatar drafts within an autonomous verification loop. The loop evaluates each draft against the input, whether image or text, assessing facial similarity, anatomical correctness, and alignment with the provided prompt. The system then iteratively adjusts the generation parameters, refining the avatar towards a satisfactory result and enabling precise control over facial and bodily features. Users can shape avatars through conversational prompting rather than being limited to what a static training dataset covers, a common constraint of diffusion models.
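The render-evaluate-adjust cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the parameter names, the `Verdict` type, the `refine` function, the acceptance threshold, and the toy verifier that stands in for the VLM are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Hypothetical: generator parameters as a flat name -> value mapping.
Params = Dict[str, float]


@dataclass
class Verdict:
    score: float         # overall agreement with the input, in [0, 1]
    adjustments: Params  # suggested change for each parameter


def refine(
    initial: Params,
    verify: Callable[[Params], Verdict],
    threshold: float = 0.9,
    max_rounds: int = 10,
) -> Tuple[Params, float, int]:
    """Adjust parameters until the verifier accepts or the budget runs out.

    Returns the final parameters, their score, and the number of
    adjustment rounds that were applied.
    """
    params = dict(initial)
    for round_idx in range(max_rounds):
        verdict = verify(params)           # "render and evaluate" the draft
        if verdict.score >= threshold:     # good enough: stop refining
            return params, verdict.score, round_idx
        for name, delta in verdict.adjustments.items():
            params[name] = params.get(name, 0.0) + delta
    return params, verify(params).score, max_rounds


def make_toy_verifier(target: Params) -> Callable[[Params], Verdict]:
    """Stand-in for the VLM check: compares parameters with a known target."""
    def verify(params: Params) -> Verdict:
        diffs = {k: target[k] - params.get(k, 0.0) for k in target}
        mean_err = sum(abs(d) for d in diffs.values()) / len(diffs)
        score = max(0.0, 1.0 - mean_err)
        # Suggest moving halfway towards the target on each round.
        return Verdict(score, {k: 0.5 * d for k, d in diffs.items()})
    return verify


if __name__ == "__main__":
    verifier = make_toy_verifier({"jaw_width": 0.8, "height": 0.4})
    params, score, rounds = refine({"jaw_width": 0.0, "height": 0.0}, verifier)
    print(params, score, rounds)
```

In a real system the verifier would render the draft and ask a VLM to compare it with the input image or prompt. The loop structure (evaluate, stop if acceptable, otherwise apply the suggested adjustments) is the only part this sketch is meant to convey.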

Quantitative benchmarks show SmartAvatar outperforming recent text- and image-driven avatar generation systems. Improvements are evident in reconstructed mesh quality (the detail and accuracy of the 3D model), identity fidelity, attribute accuracy and, crucially, animation readiness. The evaluation uses ArcFace ID Similarity, which compares face-recognition embeddings to measure how well identity is preserved, alongside CLIP Image Similarity and CLIP Text Similarity. Each is computed as a cosine similarity between embeddings and reported on a standardised scale from zero to one. User studies corroborate these findings, demonstrating that SmartAvatar outperforms existing methods in key areas.
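The similarity metrics above rest on cosine similarity between embedding vectors. A minimal Python sketch follows. The short toy vectors stand in for real ArcFace or CLIP embeddings, and the rescaling `(cos + 1) / 2` is an assumption made for illustration, since the article does not say how scores are brought onto a zero-to-one scale.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; lies in [-1, 1]."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def to_unit_interval(cos: float) -> float:
    """Assumed rescaling from [-1, 1] to [0, 1]; not taken from the paper."""
    return (cos + 1.0) / 2.0


if __name__ == "__main__":
    reference = [0.2, 0.9, 0.1]   # toy "embedding" of the input photo
    rendered = [0.25, 0.85, 0.05]  # toy "embedding" of the avatar render
    cos = cosine_similarity(reference, rendered)
    print(cos, to_unit_interval(cos))
```

Because cosine similarity depends only on the angle between the vectors, scaling an embedding by a positive constant leaves the score unchanged.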

The autonomous verification loop is the system’s central feature. Because the VLM guides each round of refinement, checking facial similarity, anatomical plausibility, and prompt alignment, users retain fine-grained control over both facial and bodily features and can keep adjusting an avatar through conversational prompts. The generated avatars exhibit consistent identity and appearance across various poses, making them immediately suitable for animation and interactive applications.

SmartAvatar runs on consumer-grade hardware, which makes it more accessible and practical. Across the evaluation metrics, the system consistently captures both the visual and the semantic information in its input.

Future work should focus on expanding the range of controllable attributes to cover more nuanced characteristics such as detailed clothing, accessories, and stylistic variations. Improving the system’s robustness to ambiguous or incomplete input prompts is another valuable avenue for research, as it would make the system more adaptable and easier to use. Integration with real-time motion capture systems could also open up dynamic, personalised avatars in interactive environments.

👉 More information

🗞 SmartAvatar: Text- and Image-Guided Human Avatar Generation with VLM AI Agents

🧠 DOI: https://doi.org/10.48550/arXiv.2506.04606