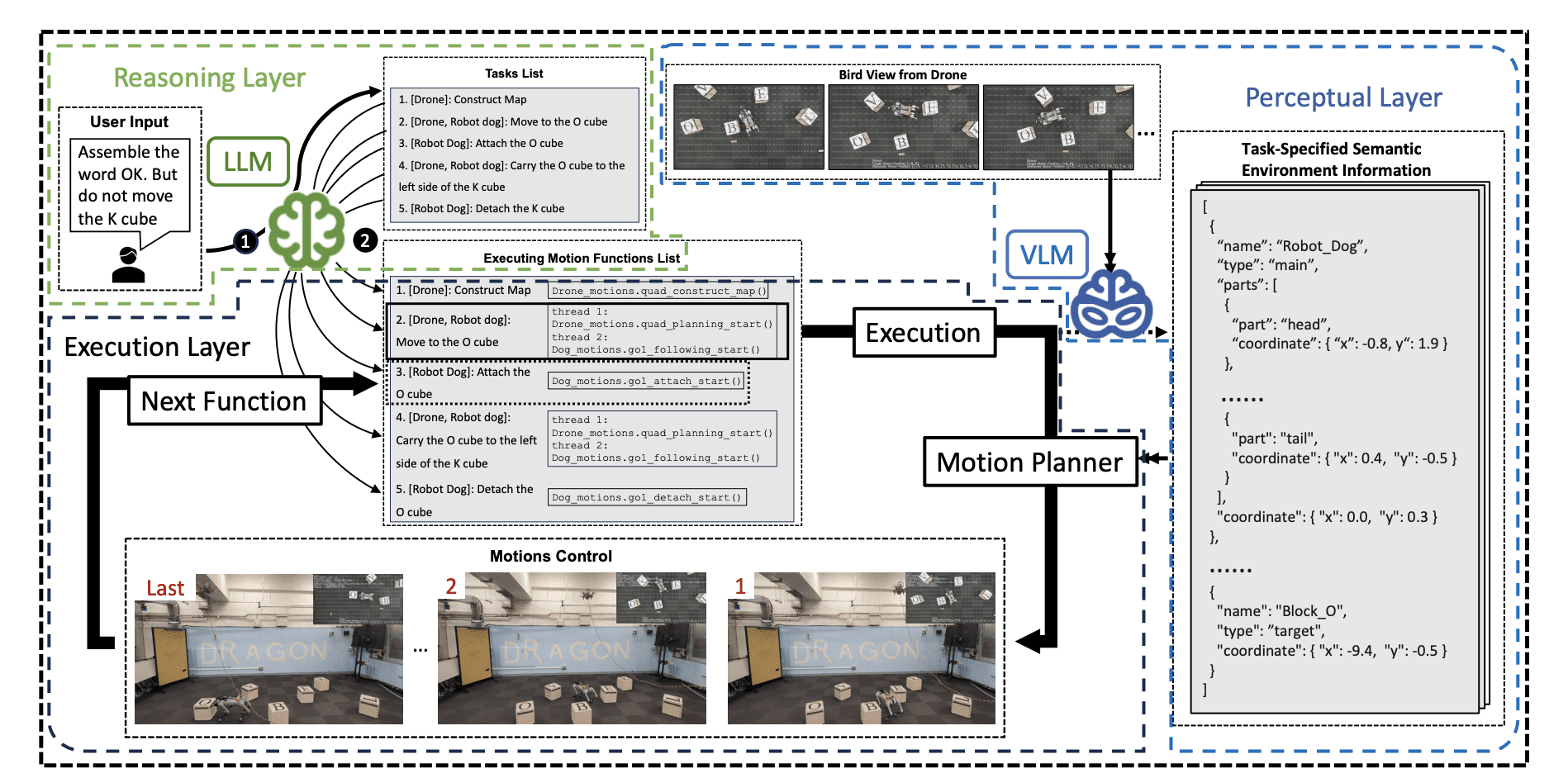

Researchers developed a hierarchical system integrating large language models and vision-language models to enable coordinated action between aerial and ground robots. The system facilitates task decomposition, semantic mapping from images, and robust navigation, even when targets are absent, demonstrated through a letter-cubes arrangement task.

Coordinating robots that operate in different domains, such as air and ground, remains a significant challenge in complex tasks. Researchers have now demonstrated a system in which an aerial drone and a ground-based robot collaborate by combining two kinds of AI model. Rather than relying on static programming, the approach uses large language models (LLMs) – models trained on vast amounts of text data – to decompose tasks and construct a semantic understanding of the environment. Coupled with vision-language models (VLMs) that interpret images, the system lets the aerial robot guide the ground robot’s navigation and object manipulation, even when targets are obscured or absent. Haokun Liu, Zhaoqi Ma, Yunong Li, Junichiro Sugihara, Yicheng Chen, Jinjie Li, and Moju Zhao detail this hierarchical framework in their paper, “Hierarchical Language Models for Semantic Navigation and Manipulation in an Aerial-Ground Robotic System”.

Hierarchical Control Enables Coordinated Action in Multi-Robot Systems

Cooperative robots are demonstrating increased autonomy in interpreting instructions and coordinating actions. Recent research details a hierarchical framework integrating large language models (LLMs) and vision-language models (VLMs) to enhance the capabilities of heterogeneous multi-robot systems, specifically combining aerial and ground-based platforms. This approach represents a move beyond pre-programmed robotic behaviours, allowing robots to translate natural language instructions into coordinated action.

The system uses an LLM to decompose complex tasks and construct a global semantic map – a representation of the environment incorporating object recognition and spatial relationships. In parallel, a fine-tuned VLM extracts task-relevant semantic labels – identifying objects and their properties – and 2D spatial information from aerial imagery. Together, these outputs inform both global and local planning: the unmanned aerial vehicle (UAV) follows a globally optimised path through the semantic map, while the VLM’s output from the aerial images gives the automated guided vehicle (AGV) the information it needs for local navigation and manipulation.
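The two planning layers described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration, not the authors’ implementation: `decompose` is a rule-based stand-in for the LLM call, the `semantic_map` coordinates are invented, and the greedy nearest-neighbour ordering in `plan_uav_path` is a simple placeholder for whatever path optimisation the paper actually uses.

```python
from dataclasses import dataclass
from math import hypot

Point = tuple[float, float]


@dataclass(frozen=True)
class Subtask:
    action: str  # hypothetical action name, e.g. "fetch"
    target: str  # semantic label the VLM is expected to report


def decompose(instruction: str) -> list[Subtask]:
    # Stand-in for the LLM: treat the last word of the instruction as the
    # word to spell and emit one "fetch" sub-task per letter cube.
    word = instruction.split()[-1].upper()
    return [Subtask("fetch", letter) for letter in word]


def plan_uav_path(start: Point,
                  semantic_map: dict[str, Point],
                  subtasks: list[Subtask]) -> list[str]:
    # Greedy nearest-neighbour ordering over the labels the task needs.
    # This is an illustrative placeholder, not the paper's optimiser.
    remaining = {t.target for t in subtasks if t.target in semantic_map}
    path: list[str] = []
    here = start
    while remaining:
        nearest = min(
            sorted(remaining),  # sorted for deterministic tie-breaking
            key=lambda label: hypot(semantic_map[label][0] - here[0],
                                    semantic_map[label][1] - here[1]),
        )
        path.append(nearest)
        here = semantic_map[nearest]
        remaining.remove(nearest)
    return path


# Invented global semantic map: label -> coarse (x, y) position in metres.
semantic_map = {"C": (4.0, 1.0), "A": (1.0, 1.0), "T": (2.0, 3.0), "X": (9.0, 9.0)}
subtasks = decompose("arrange the cubes to spell cat")
path = plan_uav_path((0.0, 0.0), semantic_map, subtasks)
print(path)  # ['A', 'T', 'C']
```

The sketch keeps the split the article describes: a language-level step yields sub-tasks and a map of labelled locations, and a separate planner turns those into a visiting order for the UAV. Repeated letters collapse into a single waypoint here, which a real system would need to handle.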

The researchers combined aerial and ground perception so that the two robots work as a team. The UAV supplies an overhead view of the environment, which the AGV uses to locate and manipulate objects. This division of labour leaves wide-area perception to the UAV and physical interaction to the AGV.

Experiments showed that the system can complete a complex task, arranging letter cubes, in a dynamic real-world environment. Notably, it keeps working even when a target object is absent, relying on continuous image processing and semantic understanding to maintain alignment. This robustness stems from the integration of LLM-driven reasoning with VLM-based perception, which lets the system cope with obstacles and changing conditions.
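How a ground robot might keep moving when the target is missing from the current aerial frame can be sketched as follows. This is a hedged illustration only: the source does not spell out the fallback, so the "steer towards the image centre to stay under the UAV" rule, the `local_goal` and `step_towards` helpers, the `"robot"` label, and the 640×480 image size are all assumptions.

```python
from math import hypot
from typing import Optional

Point = tuple[float, float]


def local_goal(detections: dict[str, Point],
               target: str,
               image_size: tuple[int, int] = (640, 480),
               robot_label: str = "robot") -> tuple[Optional[Point], str]:
    # `detections` maps semantic labels to pixel centres in one aerial frame,
    # standing in for the VLM's per-frame output.
    if robot_label not in detections:
        return None, "wait"  # robot not visible: issue no motion command
    if target in detections:
        return detections[target], "approach"
    # Target absent (assumed fallback): head for the image centre so the
    # ground robot stays beneath the UAV as it flies on.
    return (image_size[0] / 2, image_size[1] / 2), "follow"


def step_towards(robot: Point, goal: Point, max_step: float = 25.0) -> Point:
    # Displacement in pixels for this frame, clamped to `max_step`.
    dx, dy = goal[0] - robot[0], goal[1] - robot[1]
    dist = hypot(dx, dy)
    if dist <= max_step:
        return (dx, dy)
    scale = max_step / dist
    return (dx * scale, dy * scale)


frame_with_target = {"robot": (100.0, 100.0), "A": (130.0, 140.0)}
frame_without_target = {"robot": (100.0, 100.0)}

goal, mode = local_goal(frame_with_target, "A")
print(mode, step_towards(frame_with_target["robot"], goal))  # approach (15.0, 20.0)

goal, mode = local_goal(frame_without_target, "A")
print(mode, goal)  # follow (320.0, 240.0)
```

Because the goal is recomputed on every frame, the robot switches back to approaching the target as soon as the label reappears in the detections.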

Future work will focus on expanding the system’s capabilities to handle more complex tasks and environments. Researchers aim to develop more adaptable algorithms, enabling autonomous robotic operation across a wider range of scenarios and potentially increasing automation and efficiency in various industries.

👉 More information

🗞 Hierarchical Language Models for Semantic Navigation and Manipulation in an Aerial-Ground Robotic System

🧠 DOI: https://doi.org/10.48550/arXiv.2506.05020