Research shows that ‘scrambling’ quantum systems, used for processing temporal information, exhibit predictable scaling behaviour. Measurement readouts concentrate exponentially as the reservoir grows, although they do not worsen with repeated reservoir iterations, so scaling up the problem size demands an exponentially growing measurement overhead. Memory of early inputs and initial states diminishes exponentially with both reservoir size and the number of processing steps, and memory decay is also observed under noisy conditions. These findings, established through new techniques for bounding concentration, clarify the limitations of using these systems for complex temporal tasks and highlight trade-offs between accuracy, memory and computational cost.

The capacity of quantum systems to process time-dependent information represents a significant area of investigation within quantum computation. Researchers are actively exploring how the inherent dynamics of these systems can be harnessed for tasks requiring memory and sequential processing, moving beyond the static gate operations of conventional quantum algorithms. A new study by Xiong et al. from the École Polytechnique Fédérale de Lausanne (EPFL) and Chulalongkorn University details a theoretical analysis of ‘scrambling’ systems – those exhibiting rapid information dispersal – as potential reservoirs for temporal information processing. Their work, titled ‘Role of scrambling and noise in temporal information processing with quantum systems’, examines the trade-offs between reservoir size, iterative use, and the retention of past inputs, both in idealised and noisy conditions, and introduces novel mathematical techniques to quantify these effects.

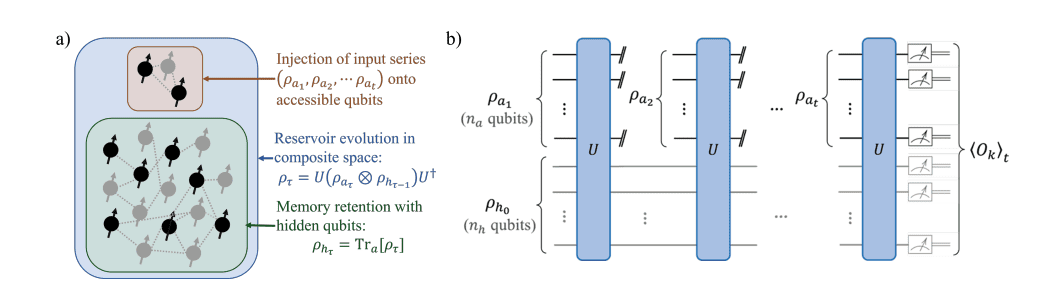

Recent research investigates the capacity of scrambling quantum systems to process temporal information, focusing on the theoretical underpinnings of performance within a general reservoir computing framework. The work examines the scalability and memory retention of quantum reservoirs, modelled using high-order unitary designs, under both ideal and noisy conditions. Rather than simply demonstrating effective feature maps, it quantifies how these systems retain and process information over time. The researchers show that measurement readouts from these reservoirs become exponentially concentrated as reservoir size increases, meaning that readouts for different inputs become exponentially hard to tell apart without a matching increase in the number of measurements. Importantly, this concentration does not worsen with the number of reservoir iterations, which suggests that repeatedly reusing a smaller reservoir on sequential data may remain viable.
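The concentration effect can be illustrated with a small numerical sketch. It is a toy stand-in rather than the paper's model: it assumes the scrambled reservoir state is a Haar-random pure state and that the readout is Pauli-Z on the first qubit, and the function names are hypothetical. For such a state in dimension d = 2^n, the variance of this readout is the standard Haar-average value 1/(d + 1), so it shrinks exponentially with the number of qubits n.

```python
import numpy as np


def haar_state(dim, rng):
    """Sample a Haar-random pure state by normalising a complex Gaussian vector."""
    v = rng.normal(size=dim) + 1j * rng.normal(size=dim)
    return v / np.linalg.norm(v)


def z_first_qubit_expectation(state):
    """Expectation of Pauli-Z on the first qubit (assumed readout observable).

    With the first qubit as the most significant bit, basis states in the
    first half of the vector have eigenvalue +1 and the rest have -1.
    """
    half = state.size // 2
    probs = np.abs(state) ** 2
    return probs[:half].sum() - probs[half:].sum()


def readout_variance(n_qubits, n_samples=2000, seed=0):
    """Empirical variance of the readout over Haar-random states."""
    rng = np.random.default_rng(seed)
    dim = 2 ** n_qubits
    values = [
        z_first_qubit_expectation(haar_state(dim, rng))
        for _ in range(n_samples)
    ]
    return float(np.var(values))


if __name__ == "__main__":
    for n in (2, 4, 6, 8):
        exact = 1.0 / (2 ** n + 1)  # Haar-average variance for this observable
        print(f"n={n}: empirical={readout_variance(n):.5f}, 1/(d+1)={exact:.5f}")
```

Because the spread of readout values falls roughly as 2^-n in this toy setting, resolving differences between them to fixed relative precision needs a number of measurement shots that grows exponentially with n, which is the overhead the article refers to.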

The study also establishes that the memory of early inputs and initial states decays exponentially with both reservoir size and the number of processing iterations. This highlights a fundamental limitation: because enlarging the reservoir accelerates the decay rather than slowing it, maintaining long-term memory requires a method to counteract it. Obtaining these results required new proof techniques for bounding concentration in temporal learning models, and together they provide a quantitative understanding of the trade-offs between reservoir size, processing iterations, and the required measurement overhead. This analysis helps in assessing the feasibility and performance of quantum reservoir computing in practical applications, and provides a foundation for developing more efficient and robust quantum information processing systems.
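The loss of memory can likewise be sketched numerically. The following is a minimal toy model under stated assumptions, not the construction analysed in the paper: at each iteration a fresh input qubit (always |0⟩ here) is attached to the reservoir, a Haar-random unitary scrambles the joint system, and the input qubit is discarded. Two runs that differ only in the initial reservoir state share the same unitaries, and the "memory" is taken to be the squared gap between their Pauli-Z readouts on the first reservoir qubit. All function names are hypothetical.

```python
import numpy as np


def haar_unitary(dim, rng):
    """Haar-random unitary via QR of a complex Gaussian matrix with phase fix."""
    z = (rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    phases = np.diag(r) / np.abs(np.diag(r))
    return q * phases  # rescales column j of q by phases[j]


def reservoir_step(rho_res, input_state, unitary):
    """Attach the input qubit, scramble, then trace the input qubit out."""
    d_res = rho_res.shape[0]
    joint = np.kron(input_state, rho_res)  # input is the first tensor factor
    joint = unitary @ joint @ unitary.conj().T
    joint = joint.reshape(2, d_res, 2, d_res)
    return np.einsum("ajak->jk", joint)  # partial trace over the input index


def z_readout(rho_res):
    """Expectation of Pauli-Z on the first reservoir qubit."""
    d_res = rho_res.shape[0]
    signs = np.where(np.arange(d_res) < d_res // 2, 1.0, -1.0)
    return float(np.sum(np.real(np.diag(rho_res)) * signs))


def mean_squared_gap(n_res, n_steps, n_trials=50, seed=0):
    """Average squared readout gap between two initial states, per iteration."""
    rng = np.random.default_rng(seed)
    d_res = 2 ** n_res
    input_state = np.zeros((2, 2), dtype=complex)
    input_state[0, 0] = 1.0  # same input |0><0| at every step in both runs
    total = np.zeros(n_steps)
    for _ in range(n_trials):
        rho_a = np.zeros((d_res, d_res), dtype=complex)
        rho_b = np.zeros((d_res, d_res), dtype=complex)
        rho_a[0, 0] = 1.0    # reservoir starts in |0...0>
        rho_b[-1, -1] = 1.0  # reservoir starts in |1...1>
        for t in range(n_steps):
            u = haar_unitary(2 * d_res, rng)  # identical unitary for both runs
            rho_a = reservoir_step(rho_a, input_state, u)
            rho_b = reservoir_step(rho_b, input_state, u)
            total[t] += (z_readout(rho_a) - z_readout(rho_b)) ** 2
    return total / n_trials


if __name__ == "__main__":
    for n_res in (2, 4):
        gaps = mean_squared_gap(n_res, n_steps=6)
        print(f"reservoir qubits={n_res}:", np.array2string(gaps, precision=5))
```

In this toy setting the averaged gap shrinks both as iterations accumulate and as the reservoir gets larger, which mirrors the qualitative behaviour the study reports. The decay rates of the sketch should not be read as those proven in the paper.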

👉 More information

🗞 Role of scrambling and noise in temporal information processing with quantum systems

🧠 DOI: https://doi.org/10.48550/arXiv.2505.10080