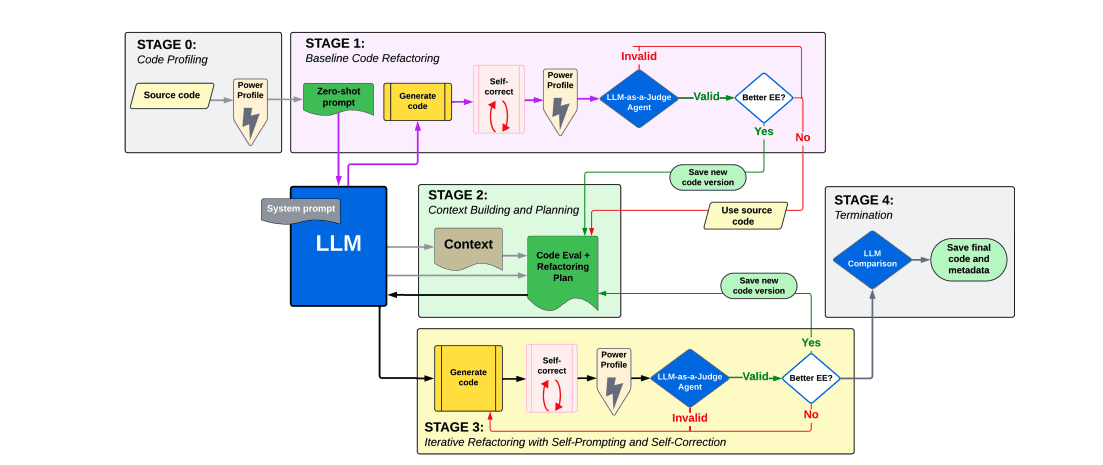

On May 4, 2025, researchers introduced LASSI-EE, a framework that uses large language models to automate energy-efficient refactoring of parallel scientific codes. In their study, it achieved an average energy reduction of 47% across 85% of the tested benchmarks on NVIDIA A100 GPUs.

LASSI-EE takes an existing parallel code as input and uses LLMs to refactor it for energy efficiency on a target system. Tested on 20 HeCBench benchmarks on A100 GPUs, it achieved an average energy reduction of 47% across 85% of them (17 of the 20 benchmarks). The results suggest that LLMs can do more than produce functionally correct code: they can also support energy-aware programming. The authors also discuss the framework's key insights and limitations to guide future improvements.
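The headline figures combine two separate quantities: how many benchmarks improved, and how large the average saving was among them (17 improved benchmarks out of 20 gives the 85% share). The minimal Python sketch below shows one way to compute both. It assumes energy is estimated as average power multiplied by runtime and that the average is taken over the improved benchmarks only; the benchmark names and measurements are made up and are not results from the study.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One benchmark execution: average power draw (W) and wall-clock time (s)."""
    avg_power_w: float
    runtime_s: float

    @property
    def energy_j(self) -> float:
        # Assumption: energy (J) = average power (W) x elapsed time (s)
        return self.avg_power_w * self.runtime_s


def reduction_pct(original: Run, refactored: Run) -> float:
    """Percent of energy saved by the refactored code (negative if it uses more)."""
    return 100.0 * (original.energy_j - refactored.energy_j) / original.energy_j


def summarize(results: dict[str, tuple[Run, Run]]) -> tuple[float, float]:
    """Return (% of benchmarks that saved energy, mean saving over those benchmarks)."""
    savings = [reduction_pct(orig, new) for orig, new in results.values()]
    improved = [s for s in savings if s > 0]
    share = 100.0 * len(improved) / len(savings)
    mean_saving = sum(improved) / len(improved) if improved else 0.0
    return share, mean_saving


# Made-up measurements for four hypothetical benchmarks: (original, refactored).
results = {
    "bench_a": (Run(250.0, 2.0), Run(200.0, 1.25)),  # 500 J -> 250 J: 50% saved
    "bench_b": (Run(300.0, 1.0), Run(240.0, 1.0)),   # 300 J -> 240 J: 20% saved
    "bench_c": (Run(200.0, 1.0), Run(220.0, 1.0)),   # 200 J -> 220 J: uses more energy
    "bench_d": (Run(100.0, 4.0), Run(100.0, 2.0)),   # 400 J -> 200 J: 50% saved
}
share, mean_saving = summarize(results)
print(f"{share:.0f}% of benchmarks improved, mean saving {mean_saving:.1f}%")
# 75% of benchmarks improved, mean saving 40.0%
```

Reporting the two numbers separately matters: a benchmark whose refactored version uses more energy (like `bench_c`) lowers the share but, under this reading, does not drag down the average saving.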

Work on integrating large language models (LLMs) into scientific computing has moved beyond generating functional code toward improving its performance and energy efficiency. A team led by Yiheng Tao of the University of Illinois Chicago, with colleagues including Xingfu Wu and Valerie Taylor of Argonne National Laboratory, presents this direction in Leveraging LLMs to Automate Energy-Aware Refactoring of Parallel Scientific Codes. Their framework, LASSI-EE, automatically refactors parallel codes so that they keep their functionality while running more energy-efficiently, addressing performance and energy considerations that correctness-focused code generation tends to overlook.

Large language models create parallel code for high-performance computing yet face challenges in accuracy and performance.

Large language models (LLMs) can generate code, including parallel code for high-performance computing. They show potential for automating complex programming work, but the correctness and efficiency of what they generate remain hard to guarantee. Projects such as HPC-Coder, developed by Daniel Nichols and colleagues, explore how LLMs can model parallel programs. However, studies indicate that although LLMs can produce syntactically correct code, that code often falls short in correctness and efficiency when it runs in parallel environments.
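"Efficiency" in this setting is commonly quantified as parallel speedup and parallel efficiency. The short Python sketch below computes both from wall-clock timings; the function names and timing values are hypothetical and chosen only for illustration.

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """Speedup S_p = T_1 / T_p: how many times faster the parallel run is."""
    return t_serial / t_parallel


def parallel_efficiency(t_serial: float, t_parallel: float, workers: int) -> float:
    """Efficiency E_p = S_p / p: 1.0 means perfect linear scaling on p workers."""
    return speedup(t_serial, t_parallel) / workers


# Hypothetical timings (seconds): the same kernel on 1 worker vs. 8 workers.
t1, t8 = 16.0, 4.0
print(f"speedup={speedup(t1, t8):.1f}x, efficiency={parallel_efficiency(t1, t8, 8):.0%}")
# speedup=4.0x, efficiency=50%
```

Code that runs correctly but reaches only 50% efficiency on 8 workers is the kind of result that "falls short in efficiency" describes.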

Code-specialized models such as StarCoder 2 and Code Llama, which are trained or fine-tuned specifically for code generation, may better capture programming patterns, including those related to parallelism. With further refinement, such models could become more adept at generating efficient and correct parallel code. Research on optimizing CUDA kernels also suggests methods for improving performance, although integrating those techniques into LLM workflows remains difficult.

Tools and frameworks that combine LLMs with established optimization techniques are therefore important for advancing the field, since they could apply parallel-programming best practices automatically and yield more efficient code. Reasoning models, such as OpenAI's models available through the Azure OpenAI Service, could also help with the multi-step problems that parallel programming involves.

Despite these advancements, significant challenges remain, particularly in ensuring the correctness and efficiency of generated parallel code. The computational demands of training large models also pose practical limitations, emphasizing the need for efficient resource utilization and accessibility. Addressing these issues will be essential for unlocking the full potential of LLMs in parallel programming and enabling their widespread adoption in high-performance computing environments.

Train and fine-tune LLMs on parallel code datasets.

Integrating large language models (LLMs) into parallel programming is promising but complex. Researchers have shown that LLMs trained on datasets of high-quality parallel code can generate code that follows best practices for frameworks such as CUDA and OpenMP. By learning patterns from large amounts of training data, these models can approximate the choices an experienced developer would make. This capability, however, depends on careful fine-tuning: further training on high-performance computing (HPC)-specific datasets so that the model picks up domain-specific nuances.

Despite this potential, ensuring that LLM-generated code is reliable and correct remains a challenge. Parallel programming requires a deep understanding of concurrency and resource management, and a model without that understanding can introduce subtle bugs such as race conditions: for example, two threads updating the same variable without synchronization, so that one update is silently lost. To address this, researchers have developed automated testing frameworks that evaluate the scalability and performance of generated code under various conditions, identifying potential issues before they affect real-world applications.
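The lost-update race mentioned above can be reproduced deterministically. The Python sketch below uses a `threading.Barrier` to force every thread to read the shared counter before any thread writes it, so the bug appears on every run (in real code it shows up only intermittently, which is what makes it hard to catch in testing). It then shows the lock-protected fix. The function names are hypothetical, and the code is an illustration rather than anything from the cited studies.

```python
import threading


def racy_increment(n_threads: int = 2) -> int:
    """Force the classic lost-update race: every thread reads, then all write."""
    state = {"counter": 0}
    barrier = threading.Barrier(n_threads)

    def worker() -> None:
        seen = state["counter"]        # read the shared value
        barrier.wait()                 # every thread has read before anyone writes
        state["counter"] = seen + 1    # write back: the other threads' updates are lost

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]


def locked_increment(n_threads: int = 2) -> int:
    """Same update, but the whole read-modify-write happens under a lock."""
    state = {"counter": 0}
    lock = threading.Lock()

    def worker() -> None:
        with lock:                     # only one thread at a time reads and writes
            state["counter"] += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["counter"]


print(racy_increment(4), locked_increment(4))  # 1 4
```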

The choice of programming paradigm also plays a critical role in determining the effectiveness of LLMs in parallel programming. Shared-memory, message-passing, and GPU-based paradigms each present unique challenges that may require separate fine-tuning or specialized models to handle effectively. For instance, generating code for distributed systems demands an understanding of communication protocols and load balancing strategies, which differ significantly from those required for shared-memory architectures.
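To make the contrast between paradigms concrete, here is a minimal Python sketch of the same reduction written in two styles, with threads standing in for processes. In the shared-memory version, workers update one accumulator under a lock; in the message-passing version, they share no state and send partial results through a queue. Real message-passing codes would typically use MPI across processes or nodes, so the thread-and-queue setup is only an analogy, and the helper names are hypothetical.

```python
import queue
import threading


def chunks(data: list[int], n: int) -> list[list[int]]:
    """Split data into n interleaved slices, one per worker."""
    return [data[i::n] for i in range(n)]


def shared_memory_sum(data: list[int], n_workers: int = 4) -> int:
    """Workers add partial sums into one shared accumulator guarded by a lock."""
    total = [0]
    lock = threading.Lock()

    def worker(part: list[int]) -> None:
        partial = sum(part)            # compute locally first to keep the lock short
        with lock:
            total[0] += partial

    threads = [threading.Thread(target=worker, args=(p,)) for p in chunks(data, n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]


def message_passing_sum(data: list[int], n_workers: int = 4) -> int:
    """Workers share no state; each sends its partial sum as a message."""
    mailbox = queue.Queue()

    def worker(part: list[int]) -> None:
        mailbox.put(sum(part))         # communicate by sending, not by sharing

    threads = [threading.Thread(target=worker, args=(p,)) for p in chunks(data, n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(mailbox.get() for _ in range(n_workers))


data = list(range(1, 101))
print(shared_memory_sum(data), message_passing_sum(data))  # 5050 5050
```

Both versions give the same answer; what differs is where correctness can break: missing locks in the first style, mismatched sends and receives in the second.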

Ultimately, how scalable and practical LLM-generated code is depends on how well the models implement efficient task distribution and resource management. Tools like HPC-Coder show that this is an active research area, but real-world adoption remains limited while researchers refine both the methods and the frameworks used to evaluate them. As LLMs evolve, integrating them into development workflows could substantially change parallel programming by automating complex tasks without sacrificing performance or reliability.
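Static block partitioning is one of the simplest task-distribution strategies: each worker receives a contiguous, nearly equal slice of the iteration space, similar in spirit to OpenMP's `schedule(static)`. The helper below is a hypothetical Python sketch of that idea.

```python
def block_partition(n_items: int, n_workers: int) -> list[range]:
    """Split indices 0..n_items-1 into n_workers contiguous blocks.

    Block sizes differ by at most one: the first (n_items % n_workers)
    workers each take one extra item, so the load stays balanced.
    """
    base, extra = divmod(n_items, n_workers)
    blocks = []
    start = 0
    for w in range(n_workers):
        size = base + (1 if w < extra else 0)
        blocks.append(range(start, start + size))
        start += size
    return blocks


for block in block_partition(10, 4):
    print(block)
# range(0, 3)
# range(3, 6)
# range(6, 8)
# range(8, 10)
```

Static blocks work well when every item costs about the same; when costs vary, dynamic scheduling (workers pulling items from a shared queue) usually balances load better at the price of more coordination.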

LLMs can create and assess parallel code but have limitations.

The referenced studies explore the application of large language models (LLMs) in generating and evaluating parallel code, highlighting both progress and challenges.

- Evaluation Cost Modeling: Stefan K. Muller and Jan Hoffmann’s work ([6]) focuses on modeling and analyzing the evaluation cost of CUDA kernels. Their research provides insights into optimizing parallel computations by quantifying the computational overhead associated with kernel execution. This approach enables developers to make informed decisions about resource allocation, potentially improving performance in high-performance computing (HPC) applications.

- LLM Capabilities for Parallel Code: Daniel Nichols and colleagues ([7]) investigate whether LLMs can effectively generate parallel code. Their experiments demonstrate that while LLMs can produce syntactically correct parallel code, the generated code often lacks efficiency and scalability compared to manually optimized solutions. This finding underscores the need for further refinement of LLM training methodologies to better capture the nuances of parallel programming.

- HPC-Coder Model: In [8], the same team introduces HPC-Coder, an LLM fine-tuned on HPC code to model and generate parallel programs. The study reports that HPC-Coder can auto-complete HPC functions, annotate for loops with OpenMP pragmas, and model performance changes in scientific code repositories. However, the authors note that further research is required to enhance the model's ability to generalize across diverse HPC workloads.

- Parallel Pattern Generation: Adrian Schmitz et al. ([11]) explore the generation of code for parallel patterns using LLMs. Their results indicate that LLMs can effectively replicate known parallel patterns when provided with sufficient training data and clear examples. This suggests that structured approaches to training may improve the reliability and quality of code generated by LLMs.
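The two most common parallel patterns, map and reduce, can be sketched in a few lines of Python. This is a generic illustration of the patterns themselves, not the pattern set or generation approach used by Schmitz et al. [11], and the function names are hypothetical. In CPython, threads only speed up I/O-bound or GIL-releasing work, and the pairwise reduction below runs sequentially, so the sketch shows the structure of each pattern rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def parallel_map(fn: Callable[[T], U], items: Iterable[T], workers: int = 4) -> list[U]:
    """Map pattern: apply fn to every item independently; output order is preserved."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fn, items))


def tree_reduce(op: Callable[[U, U], U], values: list[U]) -> U:
    """Reduce pattern: combine pairwise in about log2(n) rounds (op must be associative)."""
    if not values:
        raise ValueError("cannot reduce an empty list")
    while len(values) > 1:
        # Pairs within a round are independent, so a parallel version could combine them concurrently.
        paired = [op(values[i], values[i + 1]) for i in range(0, len(values) - 1, 2)]
        if len(values) % 2:
            paired.append(values[-1])  # carry the odd element into the next round
        values = paired
    return values[0]


squares = parallel_map(lambda x: x * x, range(1, 11))
print(tree_reduce(lambda a, b: a + b, squares))  # 385
```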

LLMs show promise but face hurdles in HPC code generation.

Integrating large language models (LLMs) into high-performance computing (HPC) for parallel code generation and optimization offers both potential and challenges. Studies evaluating LLMs show that they can generate parallel code, but the correctness and efficiency of that code remain significant concerns. Static analysis techniques, such as those used to optimize CUDA kernels, offer one way to improve the quality of LLM-generated code.
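As an illustration of the kind of quantity a static cost analysis can reason about without running a kernel, the sketch below counts how many aligned memory segments one warp touches for an affine access `base + lane * stride`. This is a deliberately simplified coalescing model (one transaction per distinct segment, no caches). It is not the analysis of Muller and Hoffmann [6], and the function and its parameters are hypothetical. The 128-byte default follows classic descriptions of CUDA coalescing; newer GPUs also track 32-byte sectors, so treat the segment size as an assumption.

```python
def memory_transactions(base: int, stride_elems: int, elem_bytes: int = 4,
                        warp_size: int = 32, segment_bytes: int = 128) -> int:
    """Count distinct aligned segments one warp touches for
    addr(lane) = base + lane * stride_elems * elem_bytes.

    Simplified model: lanes whose addresses fall in the same aligned
    segment are served by a single transaction.
    """
    addresses = (base + lane * stride_elems * elem_bytes for lane in range(warp_size))
    return len({addr // segment_bytes for addr in addresses})


# Affine index patterns can be costed from the index expression alone.
print(memory_transactions(0, 1))   # unit stride, aligned: 1
print(memory_transactions(0, 2))   # stride 2: 2
print(memory_transactions(0, 32))  # stride 32: 32 (fully uncoalesced)
print(memory_transactions(64, 1))  # misaligned unit stride: 2
```

A check like this could, for example, flag an LLM-generated kernel whose access pattern touches 32 segments per warp where a unit-stride layout would touch one.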

Future research should focus on refining model architectures to improve code generation accuracy and exploring hybrid approaches that combine LLMs with traditional optimization methods. Additionally, leveraging pattern-based code generation could guide LLMs in producing more effective parallel code. Addressing these areas will be crucial for advancing the practical application of LLMs in HPC environments.
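One way to picture such a hybrid approach is a generate-and-verify loop. LLM-proposed variants are accepted only if they reproduce the original program's output, and among those the one with the lowest measured energy is kept. The Python sketch below shows only this selection step. The `Candidate` type, the tolerance, and the energy values are hypothetical, and it is not a description of LASSI-EE's actual pipeline.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    name: str
    run: Callable[[list[float]], list[float]]  # the code variant under test
    energy_j: float                            # measured energy for this variant (hypothetical input)


def select_candidate(reference: Callable[[list[float]], list[float]],
                     candidates: list[Candidate],
                     test_input: list[float],
                     tol: float = 1e-9) -> Optional[Candidate]:
    """Keep only variants whose output matches the reference, then pick the lowest-energy one."""
    expected = reference(test_input)

    def matches(c: Candidate) -> bool:
        out = c.run(test_input)
        return len(out) == len(expected) and all(abs(a - b) <= tol for a, b in zip(out, expected))

    valid = [c for c in candidates if matches(c)]
    return min(valid, key=lambda c: c.energy_j, default=None)


def reference(xs: list[float]) -> list[float]:
    """Original (trusted) implementation: double every element."""
    return [2.0 * x for x in xs]


cands = [
    Candidate("llm_v1", lambda xs: [x + x for x in xs], energy_j=80.0),
    Candidate("llm_v2", lambda xs: [2.0 * x for x in xs[:-1]], energy_j=50.0),  # drops an element: rejected
    Candidate("baseline", reference, energy_j=100.0),
]
best = select_candidate(reference, cands, [1.0, 2.5, -3.0])
print(best.name)  # llm_v1
```

Verifying before comparing energy is the key design choice: the cheapest variant here (`llm_v2`) is wrong, so it must never win on energy alone.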

👉 More information

🗞 Leveraging LLMs to Automate Energy-Aware Refactoring of Parallel Scientific Codes

🧠 DOI: https://doi.org/10.48550/arXiv.2505.02184