On April 20, 2025, researchers Kuanting Wu, Kei Ota, and Asako Kanezaki introduced FlowLoss, a method for improving temporal coherence in video diffusion models by directly comparing optical flow fields under a noise-aware weighting scheme. The authors report that it improves motion stability and speeds up training convergence.

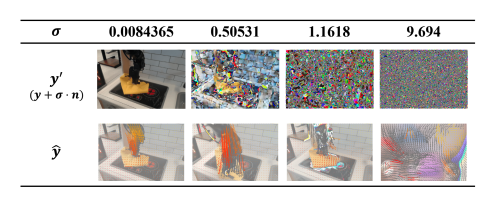

Video Diffusion Models (VDMs) often struggle to generate temporally coherent motion. FlowLoss addresses this by directly comparing optical flow fields extracted from generated and ground-truth videos. Because flow estimated from heavily noised frames is unreliable, the authors also propose a noise-aware weighting scheme that modulates the flow loss across denoising steps. In their experiments, FlowLoss improved motion stability and accelerated training convergence in VDMs, offering practical insights for adding motion-based supervision to generative models.
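The core idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes flow fields have already been extracted by some optical flow estimator as arrays of shape `(H, W, 2)`, compares them with the mean endpoint error, and uses a hypothetical weight equal to the noise schedule's signal fraction `alpha_bar_t`. The function names are illustrative, and the article does not specify the paper's actual distance measure or weighting function.

```python
import numpy as np


def endpoint_error(flow_gen, flow_gt):
    """Mean per-pixel Euclidean distance between two (H, W, 2) flow fields."""
    return float(np.mean(np.linalg.norm(flow_gen - flow_gt, axis=-1)))


def noise_aware_weight(alpha_bar_t):
    """Hypothetical weighting: the signal fraction alpha_bar_t, clipped to [0, 1].

    Near-pure-noise steps (alpha_bar_t close to 0) get almost no flow
    supervision, since flow estimated from such frames is unreliable.
    """
    return float(np.clip(alpha_bar_t, 0.0, 1.0))


def flow_loss(flows_gen, flows_gt, alpha_bar_t):
    """Hypothetical noise-weighted flow loss for one clip.

    flows_gen, flows_gt: arrays of shape (T - 1, H, W, 2), one flow field per
    pair of consecutive frames, from the generated and ground-truth videos.
    """
    per_pair = [endpoint_error(g, r) for g, r in zip(flows_gen, flows_gt)]
    return noise_aware_weight(alpha_bar_t) * float(np.mean(per_pair))


# Toy check: every generated flow vector is off by (3, 4), i.e. 5 pixels.
flows_gt = np.zeros((4, 8, 8, 2))
flows_gen = flows_gt + np.array([3.0, 4.0])
print(flow_loss(flows_gen, flows_gt, alpha_bar_t=1.0))  # 5.0
print(flow_loss(flows_gen, flows_gt, alpha_bar_t=0.5))  # 2.5
```

In training, a term like this would typically be scaled and added to the standard denoising objective; how the paper combines the two is not covered in this summary.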

Robotics is changing rapidly, driven by advances in artificial intelligence (AI) and computer vision. These advances are improving how robots perceive their environments and make decisions, leading to more autonomous and efficient robotic systems across a range of applications.

A key building block is optical flow estimation, which lets robots understand motion in video. By tracking how pixels move between consecutive frames, a robot can estimate the direction and speed of motion in the scene and, combined with knowledge of its own movement, recover cues about depth, all of which matter when navigating dynamic environments. Learned models such as FlowNet use Convolutional Neural Networks (CNNs) to estimate optical flow directly from pairs of images, helping robots adapt more effectively to changing scenes.
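FlowNet itself is a trained CNN, but the underlying idea of tracking pixel movement can be illustrated with classical block matching, a different and much simpler technique: for a small patch around a pixel in one frame, search a window in the next frame for the displacement that minimizes the sum of squared differences. The function name and parameters below are illustrative, and this sketch returns only integer motion at a single pixel rather than a dense flow field.

```python
import numpy as np


def block_match_flow(prev, nxt, y, x, patch=3, search=4):
    """Estimate the integer motion (dx, dy) of the patch centered at (y, x).

    prev, nxt: consecutive grayscale frames as 2-D arrays.
    patch: half-width of the square patch; search: max displacement tried.
    """
    h, w = prev.shape
    m = patch + search
    if not (m <= y < h - m and m <= x < w - m):
        raise ValueError("patch plus search window must lie inside the frame")
    r = patch
    ref = prev[y - r:y + r + 1, x - r:x + r + 1]
    best, best_cost = (0, 0), np.inf
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            cand = nxt[y + dy - r:y + dy + r + 1, x + dx - r:x + dx + r + 1]
            cost = np.sum((ref - cand) ** 2)  # sum of squared differences
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best


rng = np.random.default_rng(0)
prev = rng.random((32, 32))
# Simulate motion: content moves down 2 rows and left 1 column.
nxt = np.roll(prev, shift=(2, -1), axis=(0, 1))
print(block_match_flow(prev, nxt, y=16, x=16))  # (-1, 2)
```

Repeating this at every pixel gives a coarse dense flow field; learned estimators replace the exhaustive search with a network that also handles sub-pixel motion, occlusions, and textureless regions.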

Beyond perception, diffusion models are changing how robots predict future states. These generative models are trained by gradually adding noise to data and learning to reverse that process; at inference time, they generate new samples, such as future video frames, by iteratively denoising random noise. Conditioned on current observations, this lets robots forecast how a scene may evolve and plan accordingly. Research has shown diffusion models to be versatile and robust, making them a valuable tool for decision-making.
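The noising half of this process has a closed form: x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise. The sketch below assumes the linear beta schedule from the original DDPM paper (1,000 steps, beta from 1e-4 to 0.02); `q_sample` is an illustrative name, and a random array stands in for a video frame. A denoising network would be trained to predict `noise` from `x_t` and `t`.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)     # per-step noise variances
alpha_bars = np.cumprod(1.0 - betas)   # fraction of signal variance kept at each step


def q_sample(x0, t, rng):
    """Draw x_t ~ q(x_t | x_0) in closed form for 0-based step index t."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise
    return x_t, noise  # a denoiser learns to recover `noise` from (x_t, t)


rng = np.random.default_rng(0)
frame = rng.random((16, 16))  # stand-in for a clean video frame
for t in (0, 250, 500, 999):
    print(f"t={t}: signal fraction {alpha_bars[t]:.4f}")

x_t, noise = q_sample(frame, 999, rng)  # almost pure noise at the last step
```

The signal fraction falls toward zero at late steps, where frames are nearly pure noise; this is the regime in which, as the FlowLoss authors note, flow estimated from the frames becomes unreliable.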

Reliable robotics also depends on accurate evaluation of vision systems. Traditional metrics such as Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index (SSIM) measure pixel-level and structural fidelity, while learned perceptual metrics such as LPIPS, introduced by Zhang et al., compare deep-network features and align more closely with human judgments of image similarity. Together, these metrics give a more nuanced picture of how well generated or reconstructed images match reality.
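PSNR has a simple closed form, 10 * log10(MAX^2 / MSE), where MAX is the largest possible pixel value and MSE is the mean squared error between the two images. A minimal sketch, assuming images are arrays scaled to [0, 1] by default (so MAX = 1); SSIM and LPIPS are more involved (LPIPS needs a pretrained network) and are not reproduced here.

```python
import numpy as np


def psnr(reference, test, max_value=1.0):
    """Peak Signal-to-Noise Ratio in decibels between two same-shape images."""
    mse = np.mean((np.asarray(reference, float) - np.asarray(test, float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


clean = np.zeros((8, 8))
noisy = clean + 0.1        # every pixel off by 0.1, so MSE = 0.01
print(psnr(clean, noisy))  # ≈ 20 dB
```

Higher PSNR means a closer pixel-level match, but two images with the same PSNR can look very different to a person, which is the gap perceptual metrics aim to close.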

In video generation, temporal consistency is critical for robotics applications. Metrics such as the Fréchet Video Distance (FVD), proposed by Unterthiner et al., evaluate not just individual frames but whole sequences, by comparing the distribution of features extracted from real videos with that of generated ones. This helps verify that generated video captures how scenes actually evolve over time.
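FVD fits a Gaussian to features extracted from each set of videos (the original work uses a pretrained I3D video network) and computes the Fréchet distance between the two Gaussians: the squared distance between the means plus Tr(S_a + S_b - 2 (S_a S_b)^(1/2)), where S_a and S_b are the covariances. The sketch below implements only that distance with NumPy; the feature extractor is omitted, `frechet_distance` is an illustrative name, and random arrays stand in for video features.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Fréchet distance between Gaussians fitted to two (N, D) feature sets."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.atleast_2d(np.cov(feats_a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(feats_b, rowvar=False))
    # Symmetric square root of cov_a via its eigendecomposition.
    vals, vecs = np.linalg.eigh(cov_a)
    sqrt_a = (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T
    # sqrt_a @ cov_b @ sqrt_a has the same eigenvalues as cov_a @ cov_b but is
    # symmetric PSD, so Tr((cov_a cov_b)^(1/2)) is the sum of their square roots.
    inner_vals = np.linalg.eigvalsh(sqrt_a @ cov_b @ sqrt_a)
    tr_sqrt = np.sum(np.sqrt(np.clip(inner_vals, 0.0, None)))
    mean_term = np.sum((mu_a - mu_b) ** 2)
    return float(mean_term + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_sqrt)


rng = np.random.default_rng(0)
real = rng.standard_normal((500, 4))       # stand-in for features of real videos
print(frechet_distance(real, real))        # ≈ 0 (identical statistics)
print(frechet_distance(real, real + 3.0))  # ≈ 36 = 4 dims * 3**2 (mean shift only)
```

Lower is better: a generator whose videos produce feature statistics matching the real set scores near zero.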

The BridgeData V2 dataset is a key resource for robot learning: a large collection of robot manipulation demonstrations spanning many environments and tasks. Training on such diverse data helps models adapt to real-world scenarios and generalize across environments, which is central to scaling up robot learning.

Together, these advances in optical flow estimation, diffusion models, evaluation metrics, and large-scale datasets are driving the evolution of robotics. They give robots better perception, prediction, and decision-making, paving the way for more autonomous and efficient systems. As research continues, these technologies are likely to find their way into more everyday applications.

👉 More information

🗞 FlowLoss: Dynamic Flow-Conditioned Loss Strategy for Video Diffusion Models

🧠 DOI: https://doi.org/10.48550/arXiv.2504.14535