On April 20, 2025, the paper "K2MUSE: A human lower limb multimodal dataset under diverse conditions for facilitating rehabilitation robotics" introduced a dataset of kinematic, kinetic, ultrasound, and electromyography recordings from participants walking under varied conditions. The resource is intended to fill gaps in existing datasets, improving the understanding of lower limb biomechanics and supporting the development of rehabilitation robotics.

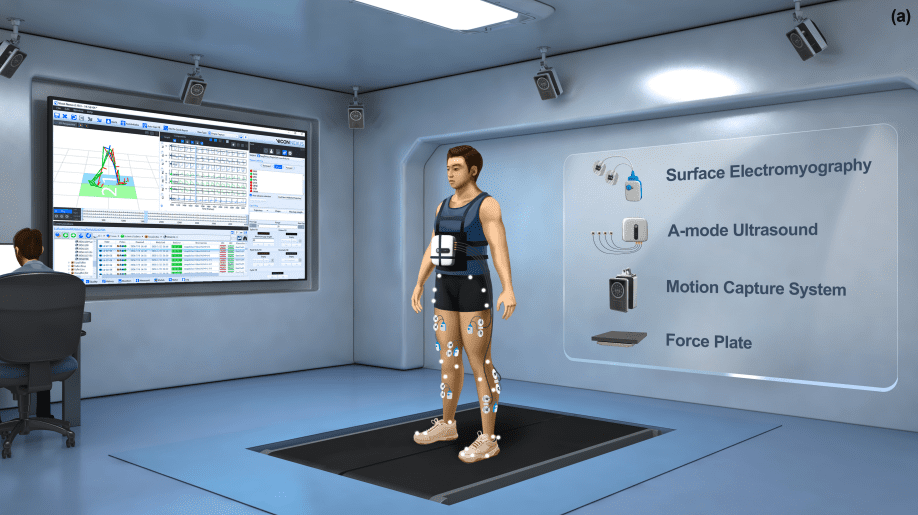

K2MUSE combines kinematic, kinetic, amplitude-mode ultrasound (AUS), and surface electromyography (sEMG) data. It was collected from 30 participants walking at different inclines (0°, 5°, 10°) and speeds (0.5 m/s, 1.0 m/s, 1.5 m/s), as well as under nonideal conditions such as muscle fatigue and electrode shifts. Motion and ground-reaction data from a Vicon motion capture system and force plates are synchronized with sEMG and AUS recordings of 13 lower limb muscles. The resource is meant to support data-driven rehabilitation control frameworks and biomechanical analysis of locomotion.
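To make the scale of the walking protocol concrete, the listed inclines and speeds can be enumerated as a grid. This is only an illustrative sketch: it assumes every incline is paired with every speed, which the summary does not state, and the names `INCLINES_DEG`, `SPEEDS_M_S`, and `walking_conditions` are hypothetical rather than part of the dataset. The nonideal conditions (fatigue, electrode shifts) are left out because the summary does not say how they combine with the grid.

```python
from itertools import product

# Values quoted in the article; the full pairing of the two is an assumption.
INCLINES_DEG = (0, 5, 10)
SPEEDS_M_S = (0.5, 1.0, 1.5)


def walking_conditions():
    """Return every incline/speed pair as a small dictionary."""
    return [
        {"incline_deg": incline, "speed_m_s": speed}
        for incline, speed in product(INCLINES_DEG, SPEEDS_M_S)
    ]


conditions = walking_conditions()
for condition in conditions:
    print(condition)
```

Under that assumption the grid has nine incline/speed combinations per participant.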

In recent years, robotics has witnessed significant advancements, particularly in the development of exoskeletons designed to assist with walking. These devices aim to provide tailored support for diverse terrains and speeds, enhancing both comfort and efficiency.

Traditionally, exoskeletons have offered a one-size-fits-all approach, which often fails to meet individual needs or adapt to changing environments. This limitation has hindered their effectiveness in real-world applications, where conditions are rarely static. The inefficiency arises because these devices do not dynamically adjust to the user’s movements or environmental changes, leading to suboptimal performance.

To address these challenges, researchers have turned to sensing technologies such as electromyography (EMG) and A-mode ultrasound. EMG measures the electrical activity of muscles, providing insight into how they control movement. A-mode ultrasound sends sound pulses along a single line into the tissue and records the echoes, offering a non-invasive way to monitor changes in the underlying muscle, such as its thickness, in real time. The data from these sensors are then analyzed with machine learning algorithms.
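Before raw EMG reaches a learning algorithm, it is commonly reduced to an amplitude envelope. The sketch below shows one generic way to do that, a moving root-mean-square over a fixed window. It is not taken from the K2MUSE paper: the function name `moving_rms`, the window length, and the synthetic signal are all assumptions chosen for illustration.

```python
import math


def moving_rms(signal, window):
    """Return the moving root-mean-square of `signal` over `window` samples.

    No padding is applied, so the result has len(signal) - window + 1 values.
    """
    if window <= 0 or window > len(signal):
        raise ValueError("window must be between 1 and len(signal)")
    squares = [x * x for x in signal]
    running = sum(squares[:window])
    out = [math.sqrt(running / window)]
    for i in range(window, len(squares)):
        # Slide the window: add the newest square, drop the oldest one.
        running += squares[i] - squares[i - window]
        # Clamp at zero to guard against tiny negative rounding errors.
        out.append(math.sqrt(max(running, 0.0) / window))
    return out


# Synthetic stand-in for sEMG: a quiet stretch followed by a burst of activity.
raw = [0.0, 0.0, 0.0, 0.0, 1.0, -1.0, 1.0, -1.0]
envelope = moving_rms(raw, window=4)
print(envelope)
```

The envelope rises from 0 toward 1 as the window slides into the burst, which is the kind of smooth activation feature a model can use more easily than the oscillating raw signal.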

This approach allows exoskeletons to adapt their assistance dynamically, responding to changes in terrain and walking speed. For instance, participants in recent studies walked on varied surfaces and at different paces while wearing these advanced exoskeletons, demonstrating the technology’s ability to adjust support seamlessly.

The results of these experiments are promising. Personalized assistance provided by these systems reduced energy expenditure by 15-20%, a substantial improvement that could greatly enhance user comfort and performance. This efficiency is crucial for applications ranging from aiding individuals with mobility issues to enhancing athletic performance.

Looking ahead, the integration of more environmental sensors and refined machine learning algorithms holds the promise of even greater adaptability. These advancements could lead to exoskeletons that not only respond to user needs but also anticipate changes in their surroundings, offering a more holistic support system.

In conclusion, combining advanced sensing technologies with machine learning is reshaping robotics, particularly exoskeleton design. By enabling personalized and adaptive assistance, these methods are paving the way for more effective and versatile applications in various fields. As research continues, more sophisticated systems for supporting human movement can be expected.

👉 More information

🗞 K2MUSE: A human lower limb multimodal dataset under diverse conditions for facilitating rehabilitation robotics

🧠 DOI: https://doi.org/10.48550/arXiv.2504.14602