Alan Turing and John von Neumann laid the foundations for modern computing in the mid-20th century. Turing’s 1936 concept of a universal machine provided the theoretical basis for programmable computers. About a decade later, in 1945, von Neumann described the stored-program architecture, the blueprint for nearly every computer built since then.

Electronic computers followed quickly. The Electronic Numerical Integrator and Computer (ENIAC), unveiled in 1946, was programmed by setting switches and rewiring cables. The Electronic Delay Storage Automatic Calculator (EDSAC), which began operating in 1949, was one of the first practical computers to hold its program in memory alongside its data, which made it far more versatile. The advent of transistors in the late 1950s led to smaller, faster, and more reliable computers, giving rise to mini-computers in the 1960s and personal computers in the 1970s.

The Cold War significantly shaped the evolution of computing technology, and the same period saw artificial intelligence (AI) emerge as a field. AI is widely dated to the 1956 Dartmouth workshop, whose organizers coined the term “artificial intelligence”; John McCarthy, one of those organizers, later defined it as “the science and engineering of making intelligent machines.” The workshop marked the beginning of serious research into AI, which advanced significantly over the following decades. The future holds even more exciting developments, with cloud computing making computing more accessible than ever before and the promise of further advances in AI.

Alan Turing’s Enigma Decryption

Few moments in history have been as pivotal in shaping the course of human civilization as the breaking of the Enigma cipher during World War II. The Enigma machine, a complex cipher device used by the German military for secure communication, was a formidable obstacle for the Allies. Building on earlier breakthroughs by Polish cryptanalysts, the British mathematician and computer scientist Alan Turing played a central role in overcoming it.

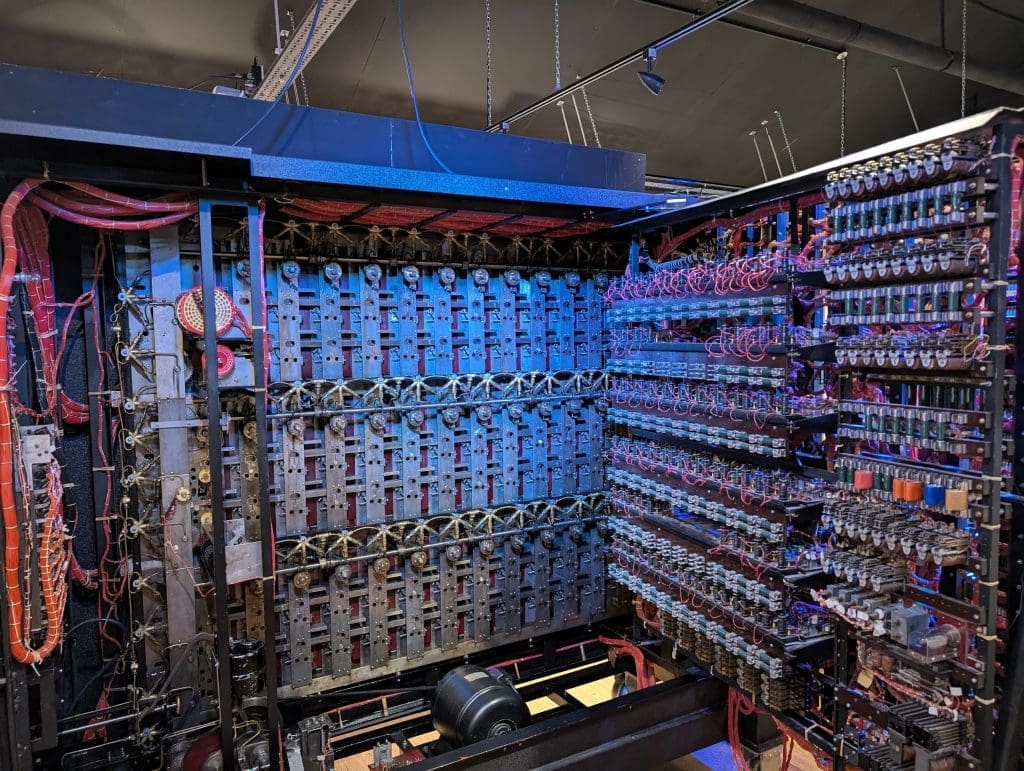

Turing’s genius lay in finding a systematic way to attack the seemingly unbreakable Enigma cipher. Building on the Polish “bomba,” he designed an electromechanical machine, the Bombe, which searched for the daily settings of the Enigma machines used by the German forces. The Bombe was instrumental in breaking Enigma traffic, providing the Allies with crucial intelligence that contributed significantly to their victory.

Turing’s work on Enigma was a significant milestone in cryptanalysis, but his most lasting contribution to computing came earlier. His 1936 concept of a universal machine, one that could be programmed to carry out any computation that can be described by a finite set of rules, became a theoretical foundation for stored-program computers. The first large-scale electronic computer, the Electronic Numerical Integrator and Computer (ENIAC), was nonetheless designed independently of Turing’s work.

The Enigma decryption was a triumph of code-breaking and a testament to human ingenuity. It demonstrated that complex problems could be solved using machines, paving the way for the digital revolution that followed. Turing’s work on the Enigma machine and his earlier concept of a universal computer have had an indelible impact on modern computing, shaping it into the powerful tool we know today.

The story of Alan Turing and the decryption of Enigma is a fascinating tale of human ingenuity, perseverance, and the power of ideas. It reminds us that even the most complex problems can be solved when approached with the right mindset and tools.

Turing Machine: Theoretical Foundation

In the annals of computer science, Alan Turing’s theoretical work on the Turing Machine stands as a cornerstone, laying the groundwork for modern computing. Introduced in 1936, this abstract model revolutionized the understanding of computational processes and automata theory.

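The Turing Machine is an abstract device that manipulates symbols on an unbounded tape according to a finite set of rules, and a single universal Turing machine can simulate any other Turing machine. This simple yet powerful concept allowed Turing to prove that some well-defined problems cannot be solved by any algorithm. His argument underlies what is now called the halting problem: no algorithm can decide, for every program and input, whether that program eventually halts. This is a result about undecidability (problems no algorithm can solve at all), not computational intractability (problems that are solvable but only with impractical amounts of time or memory).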

Turing’s machine was not intended to be a practical computing device but rather a mathematical model to explore the limits and capabilities of computation. It served as a foundation for the development of modern computers by providing a framework for understanding the fundamental principles of algorithms, automata, and computability.

The Turing Machine’s influence extended beyond theoretical computer science, impacting fields such as linguistics, mathematics, and philosophy. For instance, Turing-machine arguments show that there is no general algorithm for deciding whether two context-free grammars generate the same language, even though deciding whether a single string belongs to a given context-free language is decidable. Results like this are central to the study of formal languages and automata theory.

The Turing Machine’s legacy continues to be felt today: apart from the finite size of their memory, modern programmable computers are equivalent in computational power to a universal Turing machine. Its theoretical foundations have paved the way for advancements in areas such as artificial intelligence, cryptography, and quantum computing.

John Von Neumann’s Cellular Automata

In computing, John von Neumann’s work stands as a cornerstone, shaping the foundations of modern digital computers. Alongside his computer designs, he developed, with Stanisław Ulam in the late 1940s, the concept of cellular automata (CA), a theoretical model he used to study how machines might reproduce themselves.

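A cellular automaton is a grid of cells, each in one of a finite number of states, that evolves in discrete time steps: at every step, each cell updates its state according to a fixed rule applied to its own state and the states of its neighbors. Von Neumann worked on a two-dimensional grid in which each cell had 29 possible states, and he showed that a suitable arrangement of cells could construct a copy of itself. Later automata, such as Conway’s Game of Life, use as few as two states per cell. Rather than serving as a blueprint for the hardware of early digital computers, this simple yet powerful idea showed that local rules alone can support complex behavior.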
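To make the update rule concrete, here is a minimal Python sketch of a two-state, two-dimensional cellular automaton. It uses Conway’s Game of Life rule (a live cell survives with two or three live neighbors, and a dead cell becomes live with exactly three), chosen only because it is far simpler than von Neumann’s own 29-state construction; the function name `step` and the set-of-live-cells representation are illustrative assumptions.

```python
from collections import Counter

# A cell is an (x, y) pair; the board is the set of live cells on an unbounded grid.
Cell = tuple[int, int]


def step(live: set[Cell]) -> set[Cell]:
    """Apply one synchronous update of Conway's Game of Life rule (illustrative, not von Neumann's rule)."""
    # For every cell adjacent to at least one live cell, count its live neighbors.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A cell is live next step if it has exactly 3 live neighbors,
    # or if it is live now and has exactly 2. All other cells are dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


blinker = {(0, 1), (1, 1), (2, 1)}      # three live cells in a row
print(sorted(step(blinker)))            # [(1, 0), (1, 1), (1, 2)]
print(step(step(blinker)) == blinker)   # True: the pattern repeats every 2 steps
```

Storing only the live cells lets the pattern grow in any direction, which mirrors the idealized infinite grid of the theory; every cell is updated from the same snapshot, so the update is synchronous as the model requires.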

The CA model had several notable properties. It was capable of self-replication, demonstrating the potential for machines to create copies of themselves, a concept that would later be explored in fields such as nanotechnology and artificial life. Cellular automata can also store and process information: von Neumann’s self-reproducing automaton was also capable of universal computation, showing that a grid of simple cells can, in principle, carry out any computation a Turing machine can.

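The CA’s uniform, modular structure also makes it naturally scalable: a larger system is simply a larger grid of identical cells, a property that has made cellular automata attractive as models of massively parallel computation. This property did not, however, shape the design of the ENIAC (Electronic Numerical Integrator And Computer), one of the first general-purpose electronic computers. ENIAC was designed between 1943 and 1945, before von Neumann’s work on cellular automata, although it was itself built from modular units such as its accumulators.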

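Finally, von Neumann’s name is attached to the “von Neumann bottleneck,” although the term was coined by John Backus in his 1977 Turing Award lecture and refers to the stored-program architecture, not to cellular automata. Because instructions and data travel over a single path between the processor and memory, the rate at which that path can move words limits how fast the processor can work. This bottleneck continues to be a topic of ongoing research, as scientists strive to develop architectures that can overcome this limitation and achieve greater computational efficiency.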

Von Neumann Architecture: Stored-program Concept

The Von Neumann Architecture, named after the mathematician John von Neumann, is a fundamental concept in computing that underpins the design of modern digital computers. This architecture, first described in von Neumann’s 1945 report “First Draft of a Report on the EDVAC,” which drew on discussions with the ENIAC team led by J. Presper Eckert and John Mauchly, set out the stored-program concept that revolutionized the field of computer science.

The stored-program concept refers to a computer’s ability to store both data and instructions in the same memory. The computer fetches each instruction from memory, executes it, and stores the results back into memory for further processing. Because a program is itself just data, it can be loaded or changed without rewiring the machine, which is essential for the flexibility and versatility of modern computers.

The Von Neumann Architecture also drew a clear separation between the arithmetic logic unit (ALU) and the control unit. The ALU performs arithmetic and logical operations, while the control unit fetches and decodes instructions and directs the flow of data within the computer. This division of labor lets the ALU concentrate on calculation while the control unit handles the sequencing of operations.
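The fetch, decode, and execute cycle can be sketched with a toy accumulator machine in Python. Everything here is hypothetical and far simpler than EDVAC’s actual order code: the opcode names, the encoding of an instruction as `opcode * 100 + address`, and the functions `alu` and `run`. What the sketch does show is the stored-program idea, since instructions and data sit in the same list of numbers, and the split between a control loop that sequences operations and an arithmetic unit that only computes.

```python
# Hypothetical opcodes for a toy accumulator machine (not EDVAC's real order code).
HALT, LOAD, ADD, SUB, STORE, JUMP, JUMPZ = range(7)


def alu(op: int, acc: int, operand: int) -> int:
    """Arithmetic unit: performs only the arithmetic the control unit asks for."""
    if op == ADD:
        return acc + operand
    if op == SUB:
        return acc - operand
    raise ValueError(f"ALU cannot perform opcode {op}")


def run(memory: list[int], max_steps: int = 10_000) -> list[int]:
    """Control unit: fetch, decode and execute instructions held in the same memory as the data."""
    pc, acc = 0, 0                      # program counter and accumulator
    for _ in range(max_steps):
        word = memory[pc]               # fetch: an instruction is just a number in memory
        op, addr = divmod(word, 100)    # decode: opcode * 100 + address (hypothetical encoding)
        pc += 1
        if op == HALT:
            return memory
        elif op == LOAD:
            acc = memory[addr]
        elif op == STORE:
            memory[addr] = acc
        elif op in (ADD, SUB):
            acc = alu(op, acc, memory[addr])
        elif op == JUMP:
            pc = addr
        elif op == JUMPZ:
            if acc == 0:
                pc = addr
        else:
            raise ValueError(f"unknown opcode {op} at address {pc - 1}")
    raise RuntimeError("step limit reached")


# Multiply 7 by 3 by repeated addition. Cells 0-9 hold code, cells 20-23 hold data.
memory = [0] * 24
memory[0:10] = [
    LOAD * 100 + 20,    # 0: acc = counter
    JUMPZ * 100 + 9,    # 1: if counter == 0, jump to HALT
    SUB * 100 + 22,     # 2: acc = counter - 1
    STORE * 100 + 20,   # 3: counter = acc
    LOAD * 100 + 21,    # 4: acc = result
    ADD * 100 + 23,     # 5: acc = result + 7
    STORE * 100 + 21,   # 6: result = acc
    JUMP * 100 + 0,     # 7: loop back
    HALT,               # 8: unused
    HALT,               # 9: stop
]
memory[20:24] = [3, 0, 1, 7]   # counter, result, constant 1, multiplicand
print(run(memory)[21])         # prints 21
```

Because the program is just numbers in `memory`, another program could read or overwrite it exactly as it would any data, which is what distinguishes a stored-program machine from one programmed by rewiring.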

Another key feature of the Von Neumann Architecture is the use of a single shared memory, reached over a single path, for both data and instructions. This design has been criticized because it creates the performance limit known as the “von Neumann bottleneck”: instructions and data must take turns crossing the same connection between processor and memory. It nonetheless remains the foundation of most modern computers due to its simplicity and flexibility.

The Von Neumann Architecture has evolved significantly since its inception, with advancements in hardware and software technologies leading to faster, more powerful computers. However, the basic principles outlined by von Neumann in his 1945 paper continue to form the backbone of modern computing, demonstrating the enduring impact of this groundbreaking work.

Early Electronic Computers: EDSAC And ENIAC

ENIAC, conceived by John Mauchly and J. Presper Eckert at the University of Pennsylvania, was unveiled in 1946. It was a general-purpose computer, commissioned during World War II by the U.S. Army’s Ballistic Research Laboratory to compute artillery firing tables, although it was not completed until after the war had ended. ENIAC contained roughly 18,000 vacuum tubes, 20 accumulators, and 1,500 relays, and occupied approximately 1,800 square feet.

In contrast, EDSAC, developed by Maurice Wilkes at the University of Cambridge, was one of the first stored-program computers and the first to provide a regular computing service. It began operation in 1949, using a memory built from mercury delay lines. This design allowed EDSAC to store both instructions and data within its memory, making it a true stored-program machine. Its applications ranged from solving mathematical problems to simulating the behavior of cosmic rays.

Both ENIAC and EDSAC were significant milestones in the evolution of computing. However, they differed fundamentally in how they were programmed. ENIAC was general-purpose in principle, but it was programmed by setting switches and plugging cables, a process that could take days, while EDSAC read its program into memory, so switching to a new task meant loading a new program.

The development of these early electronic computers marked the beginning of a new era in human history. They paved the way for the creation of more sophisticated machines, leading to the miniaturization and democratization of computing power that we witness today.

Turing’s Universal Computer Hypothesis

The essence of Turing’s universal computer idea, usually called the universal Turing machine, is a single machine that can simulate any other Turing machine when given a description of that machine and its input. In simpler terms, a universal computer can perform the same tasks as any other computer, given enough time and memory. The related Church–Turing thesis holds that every function that can be computed by an effective, step-by-step procedure can be computed by a Turing machine.

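The Turing Machine consists of an infinite tape divided into squares, each containing a symbol from a finite alphabet, and a read/write head that moves along this tape. At any moment the machine is in one of a finite number of states, and its rules specify, for each combination of state and symbol under the head, which symbol to write, which way to move, and which state to enter next. This simple yet powerful model demonstrates the universality of computation.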
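A tape, a head, a current state, and a rule table translate almost directly into a short simulator. In this Python sketch, which is an illustration rather than a standard implementation, a dictionary stands in for the infinite tape and the rule table maps (state, symbol) pairs to (symbol to write, move, next state). The example machine `INCREMENT`, which adds one to a binary number, and all function and state names are hypothetical.

```python
from collections import defaultdict

# rules maps (state, symbol) -> (symbol_to_write, move, next_state); move is -1 (left) or +1 (right).
Rules = dict[tuple[str, str], tuple[str, int, str]]


def run_tm(rules: Rules, tape_input: str, start: str, halt: str,
           blank: str = "_", max_steps: int = 10_000) -> str:
    """Simulate a single-tape Turing machine; return the non-blank part of the final tape."""
    # Unvisited positions read as blank, so the tape is effectively unbounded in both directions.
    tape = defaultdict(lambda: blank, enumerate(tape_input))
    head, state = 0, start
    for _ in range(max_steps):
        if state == halt:
            lo, hi = min(tape, default=0), max(tape, default=0)
            return "".join(tape[i] for i in range(lo, hi + 1)).strip(blank)
        # A missing rule raises KeyError, standing in for the machine getting stuck.
        write, move, state = rules[(state, tape[head])]
        tape[head] = write
        head += move
    raise RuntimeError("no halt within max_steps")


# Hypothetical example machine: add 1 to a binary number written on the tape.
INCREMENT: Rules = {
    ("right", "0"): ("0", +1, "right"),   # scan right over the digits
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),   # past the last digit: turn back
    ("carry", "1"): ("0", -1, "carry"),   # 1 + carry = 0, keep carrying
    ("carry", "0"): ("1", -1, "done"),    # 0 + carry = 1, finished
    ("carry", "_"): ("1", -1, "done"),    # ran off the left end: new leading 1
}

print(run_tm(INCREMENT, "1011", "right", "done"))  # 1100
```

A universal machine is the same loop with one fixed rule table that reads another machine’s rule table from the tape, which is why this small interpreter is a fair picture of what “universal” means.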

Alan Turing’s ideas found a practical counterpart in 1945, when John von Neumann described the stored-program design now known as the von Neumann Architecture. Because a stored-program computer treats programs as data in memory, it can load and run any program that fits, the practical analogue of Turing’s universal machine. This architecture, which is still the basis for most modern computers, enables computers to perform a wide range of computational tasks.

The synergy between Turing’s theoretical foundations and von Neumann’s practical implementation has led to the birth and evolution of the digital computer. Today, these pioneering ideas continue to inspire and guide the development of increasingly powerful and versatile computing systems.

Von Neumann’s First Computer Design: EDVAC

EDVAC was born out of the need to automate the tabulation of ballistic trajectories for the Ballistic Research Laboratory at Aberdeen Proving Ground, Maryland. The design was first outlined in von Neumann’s “First Draft of a Report on the EDVAC,” an unpublished report that circulated in 1945. In this seminal work, von Neumann presented a blueprint for the machine, detailing its logical architecture and operation.

The design of EDVAC was based on the principle of storing both data and instructions in the same memory, a concept now known as the von Neumann architecture. This approach allowed the computer to execute a sequence of instructions stored in memory, enabling it to perform complex tasks autonomously. Memory was organized as a series of binary words, each of which could hold either a number or an instruction.

One of the key innovations of EDVAC was its central control unit (called the “central control” in the report), which managed the flow of data and instructions within the machine. It was responsible for fetching instructions from memory, decoding them, fetching operands, and directing the appropriate operations. This centralized control mechanism allowed for greater efficiency in processing and paved the way for the development of more complex computer systems.

EDVAC’s design also included an arithmetic and logic unit (ALU) to perform mathematical calculations and logical operations on data stored in memory. The ALU was capable of performing basic arithmetic operations such as addition, subtraction, multiplication, and division. This versatile component enabled the machine to solve a wide range of problems, making it a truly general-purpose computer.

In conclusion, EDVAC represented a significant leap forward in the development of modern computing. John von Neumann’s design laid the foundation for the von Neumann architecture, which remains the standard architecture for most computers today. The machine’s innovative features, such as its central control unit and arithmetic and logic unit, paved the way for the development of more complex computer systems in the years to come.

Post-war Computing Development: MANIAC And IAS

In the post-World War II era, computing development took a significant leap with the creation of the MANIAC (Mathematical Analyzer Numerical Integrator and Computer) and the Institute for Advanced Study (IAS) computer. These machines marked a pivotal moment in the evolution of modern computing, building upon the foundational work of Alan Turing and John von Neumann.

The MANIAC was developed at the Los Alamos National Laboratory under the direction of Nicholas Metropolis, following the design of von Neumann’s IAS computer. Completed in 1952, it was one of the first electronic computers capable of solving complex mathematical problems. Notably, it was used to perform calculations for the hydrogen bomb project, demonstrating its potential in scientific research and military applications.

Meanwhile, John von Neumann led a team to design and build an electronic computer at the Institute for Advanced Study in Princeton. The IAS computer, also known as the von Neumann machine, was completed in 1952. It was based on the stored-program concept, where both data and instructions were stored in memory, making it more versatile than earlier machines. This design is still the foundation of modern computers today.

The MANIAC and IAS computers were significant milestones in the development of computing. They demonstrated the potential of electronic computers for scientific research, engineering, and military applications. These machines laid the groundwork for the development of more powerful and versatile computers that would revolutionize various industries and aspects of daily life.

In the years following the completion of these machines, numerous other computers were developed, each building upon the innovations introduced by the MANIAC and IAS. These advancements led to mainframes, minicomputers, personal computers, and, eventually, the Internet. The development of these early computing systems played a crucial role in shaping the modern digital world we live in today.

Turing’s Legacy: Mathematical Logic And AI

Turing’s contributions to mathematical logic are profound. His seminal 1936 paper, “On Computable Numbers, with an Application to the Entscheidungsproblem,” introduced the Turing Machine, an abstract model of computation that manipulates symbols on a strip of tape according to a table of rules, together with the concept of a universal machine that can simulate any other such machine. This work laid the groundwork for modern computing.

Turing’s work on mathematical logic extended beyond the Turing Machine. In his Princeton doctoral thesis, published in 1939 as “Systems of Logic Based on Ordinals,” he studied ordinal logics, sequences of formal systems indexed by transfinite ordinals, as a way of partially overcoming the incompleteness of any single formal system, and introduced the idea of an oracle machine.

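Turing’s influence on Artificial Intelligence (AI) is equally significant. His “Turing Test,” which he called the imitation game, remains an influential, if much debated, reference point for AI. Rather than asking directly whether machines can think, Turing proposed asking whether a machine could imitate human conversation well enough that a judge could not reliably tell it apart from a person.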

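Turing’s work on AI extended beyond the Turing Test. His 1950 paper “Computing Machinery and Intelligence,” published in the journal Mind, introduced the test and also answered a series of objections to the idea of thinking machines. It suggested building a “child machine” that could be educated rather than programmed with adult knowledge, an early statement of the idea of machine learning.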

Turing’s legacy in mathematical logic and AI continues to shape modern computing. His ideas have inspired generations of researchers and continue to influence the development of intelligent machines.

Von Neumann’s Impact: Game Theory And Nuclear Physics

In nuclear physics, von Neumann made critical contributions to the Manhattan Project, where he played a pivotal role in the development of the first atomic bombs. His mathematical work on shock waves and explosive lenses was central to the implosion design used in the plutonium bomb, and after the war he contributed to the calculations behind the hydrogen bomb.

The intersection of game theory and nuclear physics in von Neumann’s career came through strategy rather than physics. He proved the minimax theorem in 1928 and developed it further in “Theory of Games and Economic Behavior” (1944), written with Oskar Morgenstern. In a two-player zero-sum game, a minimax strategy guarantees a player the best possible worst-case outcome, whatever the opponent does; the theorem states that once players may randomize their choices (mixed strategies), the best outcome each side can guarantee is the same value. Game-theoretic reasoning was later applied to nuclear strategy rather than to the design of the weapons themselves, informing Cold War analyses of deterrence such as mutually assured destruction (MAD).
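The role of mixed strategies can be seen in a small Python sketch of two-player zero-sum matrix games. Here `payoff[i][j]` is what the row player wins (and the column player loses) when row strategy `i` meets column strategy `j`; the example games and function names are illustrative assumptions. With pure strategies, the row player’s guaranteed payoff (maximin) can fall below the column player’s guaranteed cap on losses (minimax). The closed-form mixed solution below applies only to 2×2 games without a saddle point; larger games are usually solved with linear programming.

```python
from fractions import Fraction

# payoff[i][j]: the row player's winnings when row strategy i meets column strategy j.
Matrix = list[list[int]]


def pure_maximin(payoff: Matrix) -> int:
    """Best payoff the row player can guarantee by committing to a single row."""
    return max(min(row) for row in payoff)


def pure_minimax(payoff: Matrix) -> int:
    """Smallest loss the column player can guarantee by committing to a single column."""
    return min(max(col) for col in zip(*payoff))


def solve_2x2_mixed(payoff: Matrix) -> tuple[Fraction, Fraction]:
    """Return (probability the row player picks row 0, value of the game) for a 2x2 game.

    The row player mixes so that both columns yield the same expected payoff,
    which is optimal when the game has no saddle point.
    """
    if pure_maximin(payoff) == pure_minimax(payoff):
        raise ValueError("game has a saddle point; a pure strategy is optimal")
    (a, b), (c, d) = payoff
    denom = a - b - c + d            # nonzero whenever there is no saddle point
    p = Fraction(d - c, denom)
    value = Fraction(a * d - b * c, denom)
    return p, value


saddle = [[3, 1], [4, 2]]
print(pure_maximin(saddle), pure_minimax(saddle))    # 2 2

pennies = [[1, -1], [-1, 1]]                          # matching pennies
print(pure_maximin(pennies), pure_minimax(pennies))  # -1 1
p, value = solve_2x2_mixed(pennies)
print(p, value)                                       # 1/2 0
```

In matching pennies, pure strategies guarantee the row player only -1 while the column player can hold losses to 1; mixing each option half the time gives both sides the same guaranteed value of 0, which is what the minimax theorem predicts.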

Von Neumann’s work also had profound implications for computer science, driven largely by the heavy calculations his wartime research required rather than by game theory. In 1945, he described the design of a stored-program digital computer, which became the blueprint for modern computers. His architecture, known as the von Neumann architecture, consists of a central processing unit (CPU), memory, and input/output devices. This design revolutionized computing by enabling machines to execute instructions stored in their own memory, paving the way for the development of complex software applications and artificial intelligence systems.

In conclusion, John von Neumann’s impact on game theory, nuclear physics, and computer science cannot be overstated. His work laid the foundation for understanding strategic interactions, designing nuclear weapons, and building modern computers. As we grapple with global challenges in these areas, von Neumann’s insights remain relevant and influential.

The Cold War Era: Computing Advancements

The Cold War Era, spanning from 1947 to 1991, was a period of intense technological advancement, particularly in the realm of computing. This era witnessed the birth and evolution of computers that would shape modern technology.

In the United States, the Advanced Research Projects Agency (ARPA, later renamed DARPA), founded in 1958, played a pivotal role in funding research on advanced computing, including time-sharing and computer networking. Private companies contributed as well: Digital Equipment Corporation (DEC), founded in 1957, introduced the PDP-1 in 1959, a machine later used in some of the earliest time-sharing experiments.

Simultaneously, the Soviet Union was also making significant strides in computer technology. The MESM (Small Electronic Calculating Machine) was developed by Sergei Aleksandrovich Lebedev and his team at the Academy of Sciences of the Ukrainian SSR in Kyiv and first operated in 1950. This machine, while not as influential as its Western counterparts, demonstrated the Soviet Union’s commitment to computer technology during the Cold War.

The work of Alan Turing and John von Neumann shaped the design of many of these early computers. Turing’s concept of a universal machine laid the theoretical foundation for modern computers, while von Neumann’s architecture provided the blueprint for how programs and data are stored and processed.

The Cold War years also saw the emergence of artificial intelligence (AI). The 1956 Dartmouth workshop, whose organizers coined the term, is widely regarded as the founding event of the field; John McCarthy, one of those organizers, later defined AI as “the science and engineering of making intelligent machines.” This conference marked the beginning of serious research into AI, leading to significant advancements in the field over the following decades.

Modern Computing Evolution: From Mainframes To Cloud

In the annals of technological advancement, the evolution of computing stands as a testament to human ingenuity. This journey, spanning over seven decades, has seen the transformation of bulky mainframes into the ubiquitous cloud infrastructure we rely on today.

The genesis of this revolution can be traced back to the mid-20th century with the pioneering work of Alan Turing and John von Neumann. Turing’s theoretical concept of a universal machine, as detailed in his 1936 paper “On Computable Numbers,” laid the theoretical foundation for modern computing. Nearly a decade later, in 1945, von Neumann described the now-ubiquitous stored-program architecture, the blueprint for virtually every computer built since then.

The first major step toward putting these ideas into practice came with the Electronic Numerical Integrator and Computer (ENIAC) in 1946, a large-scale, general-purpose digital computer capable of solving various mathematical problems. However, it could not store programs in memory: changing its task meant resetting switches and rewiring cables, which made reprogramming slow and laborious.

The introduction of the stored-program concept by von Neumann in 1945 paved the way for the development of the first practical stored-program computer, the Electronic Delay Storage Automatic Calculator (EDSAC), completed in 1949. EDSAC was a significant improvement over ENIAC, as it could store programs and data in its memory, making it more versatile and efficient.

The advent of transistors in the late 1950s marked another milestone in computing evolution. Transistors replaced vacuum tubes, making computers smaller, faster, and more reliable. This paved the way for the development of mini-computers in the 1960s and personal computers in the 1970s. The proliferation of these devices led to a dramatic increase in computing power and accessibility.

Fast-forward to the present day, and we find ourselves at the cusp of another revolution: the cloud. Cloud computing, with its on-demand availability of resources, has made computing more accessible than ever before. The future promises even more exciting developments as we continue to push the boundaries of what is computationally possible.