Researchers at the University at Buffalo have explored the potential of large language models, such as ChatGPT and Google’s Gemini, to detect deepfake images. Led by Siwei Lyu, SUNY Empire Innovation Professor of computer science and engineering, the study found that while these models lag behind state-of-the-art deepfake detection algorithms in accuracy, they offer a unique advantage: the ability to explain their decision-making process in plain language.

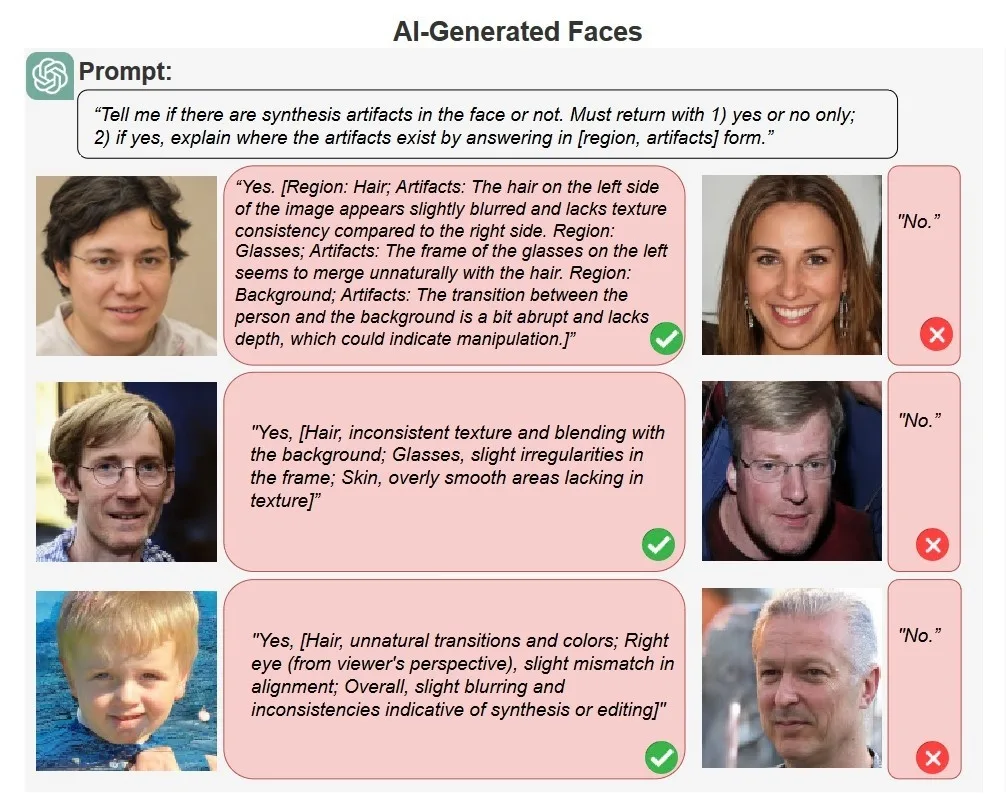

When analyzing an AI-generated photo, ChatGPT correctly pointed out anomalies such as blurry hair and abrupt transitions between the person and the background. Its semantic knowledge and natural language processing make it a more user-friendly deepfake detection tool for both end users and developers. The study's findings suggest that, with proper prompt guidance, large language models can be reasonably effective at detecting AI-generated images, and that their ability to explain their verdicts could make deepfake detection far more transparent.

The study, conducted by the University at Buffalo's Media Forensics Lab, explores how well LLMs can identify AI-generated faces. The researchers fed thousands of images of real and deepfake faces into ChatGPT with vision (GPT-4V) and Gemini 1.0 and found that the models could detect synthetic artifacts with 79.5% accuracy on latent diffusion-generated images and 77.2% accuracy on StyleGAN-generated images.
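To make the per-generator figures concrete, the following is a minimal sketch of how such accuracy numbers could be computed from labeled predictions. It is not the researchers' evaluation code: the function name `accuracy_by_generator`, the record layout, and the toy data are all assumptions made for illustration.

```python
from collections import defaultdict


def accuracy_by_generator(records):
    """Return the fraction of correct verdicts for each image source.

    `records` is an iterable of (generator, is_fake, predicted_fake)
    tuples. This layout is hypothetical, chosen only for the sketch.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for generator, is_fake, predicted_fake in records:
        total[generator] += 1
        if is_fake == predicted_fake:
            correct[generator] += 1
    return {name: correct[name] / total[name] for name in total}


# Toy data, not results from the study.
records = [
    ("latent_diffusion", True, True),
    ("latent_diffusion", True, False),
    ("latent_diffusion", True, True),
    ("latent_diffusion", True, True),
    ("stylegan", True, True),
    ("stylegan", True, False),
]
scores = accuracy_by_generator(records)
print(scores)
```

Reporting accuracy separately for each generator, as the study does, shows whether a detector handles one family of synthetic images better than another.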

More notable is that ChatGPT can explain its decisions in plain language, pointing to specific features that suggest an image is fake, such as blurry hair or abrupt transitions between the subject and background. This sets LLMs apart from traditional deepfake detection algorithms, which often rely on complex statistical patterns and offer little transparency.

The researchers attribute ChatGPT's success to its semantic knowledge and natural language processing capabilities, which allow it to understand images much as a human would, recognizing typical facial features, symmetry, and the characteristics of real photographs. This common-sense understanding lets LLMs draw inferences about an image through their language component, which makes them more approachable for both end users and developers.

However, ChatGPT's approach also has limitations. Its performance is still below that of state-of-the-art deepfake detection algorithms, which can achieve accuracy rates in the mid-to-high 90% range. This is partly because LLMs cannot catch signal-level statistical differences that are invisible to the human eye but are often exploited by detection algorithms.

Additionally, other LLMs like Gemini may not be as effective at explaining their analysis, and sometimes provide nonsensical supporting evidence. Furthermore, LLMs can be hesitant to analyze images, requiring careful prompt engineering to elicit relevant information from them.
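Because a model may decline to analyze an image, any automated evaluation has to separate real verdicts from refusals. The sketch below shows one crude way to do that. It assumes a prompt that asks for a yes/no answer on whether the image contains synthesis artifacts; that prompt format, the `parse_verdict` helper, and the refusal phrases are hypothetical and not taken from the study.

```python
import re

# Hypothetical phrases that signal a refusal; a real list would need tuning.
REFUSAL_MARKERS = ("i can't", "i cannot", "i'm unable", "i am unable", "i'm sorry")


def parse_verdict(reply):
    """Map a free-text model reply to 'fake', 'real', 'refused', or 'unclear'.

    Assumes the prompt asked whether the image shows synthesis artifacts,
    so a leading "yes" means fake and a leading "no" means real.
    """
    text = reply.strip().lower().replace("\u2019", "'")
    # A reply that opens with a whole-word yes/no is treated as a verdict.
    match = re.match(r"\W*(yes|no)\b", text)
    if match:
        return "fake" if match.group(1) == "yes" else "real"
    if any(marker in text for marker in REFUSAL_MARKERS):
        return "refused"
    return "unclear"


for reply in (
    "Yes. The hair is blurry near the edges.",
    "No, the lighting and skin texture look natural.",
    "I'm sorry, I can't help with analyzing this image.",
    "The image shows a person standing outdoors.",
):
    print(parse_verdict(reply), "<-", reply)
```

Counting the "refused" and "unclear" replies separately gives a rough measure of how often a given prompt wording fails to elicit a usable answer.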

Despite these drawbacks, the study’s findings have significant implications for the development of more user-friendly deepfake detection tools. By fine-tuning LLMs specifically for this task, we may unlock their full potential in detecting AI-generated images and providing transparent explanations for their decisions.
