Google Research, in collaboration with the Weizmann Institute, Tel-Aviv University, and Technion, has introduced Lumiere, a text-to-video diffusion model designed to synthesize videos that portray realistic, diverse, and coherent motion. Unlike existing video models, which synthesize distant keyframes and then fill in the frames between them, Lumiere generates the entire temporal duration of the video at once, making it easier to achieve global temporal consistency. The model combines a Space-Time U-Net architecture with a pre-trained text-to-image diffusion model to generate a full-frame-rate, low-resolution video, which is then spatially upsampled. Lumiere can be used for a wide range of content creation tasks and video editing applications, including image-to-video, video inpainting, and stylized generation.

Introduction to Lumiere: A Space-Time Diffusion Model for Video Generation

Lumiere is a text-to-video diffusion model developed by a team of researchers from Google Research, Weizmann Institute, Tel-Aviv University, and Technion. The team includes Omer Bar-Tal, Hila Chefer, Omer Tov, Charles Herrmann, Roni Paiss, Shiran Zada, Ariel Ephrat, Junhwa Hur, Yuanzhen Li, Tomer Michaeli, Oliver Wang, Deqing Sun, Tali Dekel, and Inbar Mosseri. Lumiere is designed to synthesize videos that portray realistic, diverse, and coherent motion, which remains a significant challenge in video synthesis.

Lumiere’s Unique Approach to Video Generation

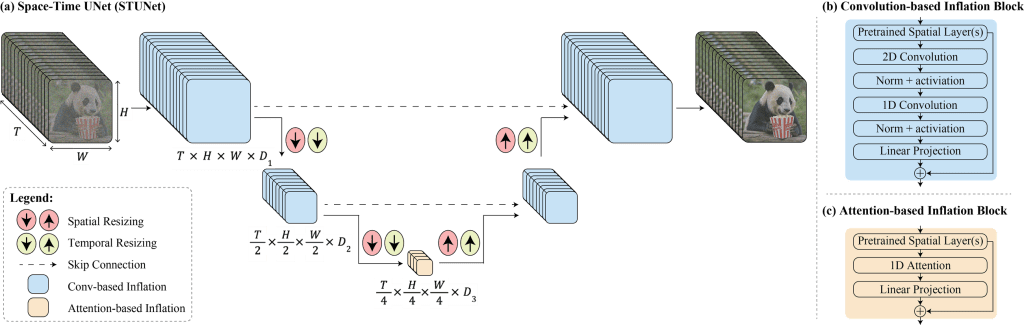

Unlike existing video models that synthesize distant keyframes followed by temporal super-resolution, Lumiere introduces a Space-Time U-Net architecture that generates the entire temporal duration of the video at once, through a single pass in the model. Because every frame is produced jointly, global temporal consistency is easier to achieve than in keyframe-based pipelines. The model learns to directly generate a full-frame-rate, low-resolution video by processing it at multiple space-time scales, downsampling and upsampling the signal in both space and time.
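To make "multiple space-time scales" concrete, here is a minimal NumPy sketch of a video tensor being pooled along both the temporal and spatial axes, the way an encoder path of a space-time U-Net might shrink it. This is an illustration only, not Lumiere's implementation: the function names, the factor of 2, the average pooling, and the tensor sizes are all assumptions chosen for readability, and the learned convolution and attention layers are omitted entirely.

```python
import numpy as np


def downsample_space_time(video, t_factor=2, s_factor=2):
    """Average-pool a (T, H, W, C) video over time and space.

    Hypothetical stand-in for a learned space-time downsampling layer.
    """
    t, h, w, c = video.shape
    if t % t_factor or h % s_factor or w % s_factor:
        raise ValueError("video dimensions must be divisible by the factors")
    blocks = video.reshape(
        t // t_factor, t_factor,
        h // s_factor, s_factor,
        w // s_factor, s_factor,
        c,
    )
    # Average over the three "within-block" axes.
    return blocks.mean(axis=(1, 3, 5))


def upsample_space_time(video, t_factor=2, s_factor=2):
    """Nearest-neighbour upsampling in time and space (decoder-side stand-in)."""
    video = np.repeat(video, t_factor, axis=0)
    video = np.repeat(video, s_factor, axis=1)
    return np.repeat(video, s_factor, axis=2)


def space_time_pyramid(video, levels=2):
    """Return the video at successively coarser space-time scales."""
    pyramid = [video]
    for _ in range(levels):
        pyramid.append(downsample_space_time(pyramid[-1]))
    return pyramid


# Illustrative sizes only: 16 frames of 32x32 RGB.
video = np.random.default_rng(0).random((16, 32, 32, 3))
for level in space_time_pyramid(video, levels=2):
    print(level.shape)
```

Each level halves the frame count as well as the height and width, so the coarsest level holds a compact representation of the whole clip. That is the intuition behind processing the full duration in one pass rather than only a sparse set of keyframes.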

Lumiere’s Superiority Over Existing Models

Existing text-to-video models are often limited in video duration, overall visual quality, and the degree of realistic motion they can generate. They typically adopt a cascaded design in which a base model generates distant keyframes, and subsequent temporal super-resolution models generate the missing data between the keyframes in non-overlapping segments. This approach, while memory efficient, often fails to generate globally coherent motion. Lumiere, on the other hand, generates the full temporal duration of the video at once, leading to more globally coherent motion than prior work.

Lumiere is built on top of a pre-trained text-to-image model, which works in pixel space and consists of a base model followed by a spatial super-resolution cascade. Building on this model lets Lumiere benefit from the powerful generative prior of text-to-image models.

Lumiere’s Solution to Memory Requirements and Global Continuity

Applying spatial super-resolution to the entire video duration at once is infeasible in terms of memory requirements. The authors therefore extend MultiDiffusion, an approach proposed for achieving global continuity in panoramic image generation, to the temporal domain. Spatial super-resolution is computed on overlapping temporal windows, and the results are aggregated into a globally coherent solution over the whole video clip.
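The windowed aggregation can be sketched in a few lines of NumPy. The sketch below is a simplified illustration under stated assumptions, not the authors' code: `temporal_windows`, `aggregate_windows`, and `upsample_2x` are hypothetical names, the window length and stride are arbitrary, overlapping predictions are combined with a plain uniform average, and a nearest-neighbour upsampler stands in for the super-resolution network. In a diffusion model, an aggregation like this would be applied at each denoising step rather than once.

```python
import numpy as np


def temporal_windows(num_frames, window, stride):
    """Start indices of overlapping windows that together cover every frame."""
    if window > num_frames or stride <= 0:
        raise ValueError("need 0 < stride and window <= num_frames")
    starts = list(range(0, num_frames - window + 1, stride))
    if starts[-1] + window < num_frames:
        # Add a final window flush with the end so no frame is left out.
        starts.append(num_frames - window)
    return starts


def aggregate_windows(video, process_window, window=8, stride=4):
    """Run process_window on each temporal window and average the overlaps."""
    num_frames = video.shape[0]
    total = None
    weight = np.zeros(num_frames)
    for start in temporal_windows(num_frames, window, stride):
        out = process_window(video[start:start + window])
        if total is None:
            total = np.zeros((num_frames,) + out.shape[1:], dtype=np.float64)
        total[start:start + window] += out
        weight[start:start + window] += 1.0
    # Broadcast the per-frame weights over the remaining axes.
    return total / weight.reshape((-1,) + (1,) * (total.ndim - 1))


def upsample_2x(clip):
    """Hypothetical stand-in for a spatial super-resolution model."""
    return np.repeat(np.repeat(clip, 2, axis=1), 2, axis=2)


low_res = np.random.default_rng(0).random((18, 16, 16, 3))
high_res = aggregate_windows(low_res, upsample_2x, window=8, stride=4)
print(high_res.shape)
```

Only one window is held by the processing function at a time, which is where the memory saving comes from. Because neighbouring windows share frames, each shared frame is the average of several predictions, which smooths the transitions at window boundaries.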

Lumiere’s Versatility in Video Content Creation Tasks

Lumiere demonstrates state-of-the-art video generation results and can be easily adapted to a wide range of content creation tasks and video editing applications. These include video inpainting, image-to-video generation, generating stylized videos that comply with a given style image, and invoking off-the-shelf editing methods to perform consistent editing.

Google Lumiere in Summary

Lumiere, a space-time diffusion model for video generation, represents a significant advancement in the field of text-to-video (T2V) diffusion models, distinguishing itself from its contemporaries in several key aspects. When compared to prominent models like ImagenVideo, AnimateDiff, StableVideoDiffusion (SVD), ZeroScope, Pika, and Gen2, Lumiere exhibits unique characteristics in its operational mechanisms and output features.

ImagenVideo, developed by Ho et al. in 2022, operates in pixel space and is composed of a cascade of seven models. It produces a reasonable amount of motion, but its overall visual quality is lower than that of newer models. AnimateDiff and ZeroScope, introduced by Guo et al. in 2023 and Wang et al. in 2023 respectively, exhibit noticeable motion but are prone to visual artifacts and are limited in video duration, generating videos of only 2 seconds and 3.6 seconds, respectively. SVD, proposed by Blattmann et al. in 2023, inflates Stable Diffusion to video data; its authors released only an image-to-video model, which is not conditioned on text and outputs 25 frames.

Commercial T2V models like Pika and Gen2, developed by Pika Labs and RunwayML respectively in 2023, are recognized for their high per-frame visual quality. However, they are characterized by limited motion, often resulting in near-static videos. In contrast, Lumiere produces 5-second videos, longer than those of AnimateDiff and ZeroScope, and generates higher motion magnitudes than these contemporaries while showing fewer visual artifacts and better temporal consistency. In user studies, Lumiere was preferred over the baselines for both text-to-video and image-to-video generation.