Large Language Model (LLM) inference demands significant computational power, yet CPU-based solutions remain crucial for widespread access to artificial intelligence. Jingyao Zhang from the University of California, Riverside, Jaewoo Park and Jongeun Lee from the Ulsan National Institute of Science and Technology, and Elaheh Sadredini from the University of California, Riverside, present SAIL, a system that substantially improves the efficiency of LLM inference on standard CPUs. The work addresses two limitations of current CPU architectures: weak support for the low-precision arithmetic used by modern compressed (quantized) models, and the memory bottleneck inherent in generating text token by token. SAIL combines lookup-table-based processing with compute-capable on-chip SRAM, reducing data movement and accelerating computation. In simulation, it achieves up to 10.7 times faster inference and 19.9 times more tokens per dollar than an ARM Neoverse-N1 CPU baseline, and up to 7.04 times better cost efficiency than V100 GPUs, pointing toward practical and affordable LLM deployment on readily available hardware.

In the surrounding literature, a dominant theme is reducing the precision of model weights and activations through quantization, which lowers memory requirements and computational cost. Researchers also explore pruning to reduce the number of parameters in the models themselves. Significant effort targets the attention mechanism, a core component of LLMs: NoMAD-Attention, for example, improves CPU efficiency by replacing expensive multiply-add operations with lookups held in SIMD registers. In-SRAM computing has also been applied outside LLMs; BP-NTT, for instance, accelerates the Number Theoretic Transform, a kernel used in lattice-based cryptography rather than in attention.
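To make the memory savings of quantization concrete, here is a minimal sketch of symmetric group-wise weight quantization in plain Python. The function names, the 4-bit width, and the group size of 32 are illustrative assumptions, not details taken from SAIL or from any particular quantization library. Each group of weights shares one floating-point scale, and each weight is stored as a small signed integer code.

```python
import random

def quantize_groupwise(w, bits=4, group_size=32):
    """Symmetric per-group quantization (hypothetical helper).

    Returns integer codes in [-qmax, qmax] and one float scale per group.
    """
    if len(w) % group_size:
        raise ValueError("len(w) must be a multiple of group_size")
    qmax = 2 ** (bits - 1) - 1  # largest code magnitude, e.g. 7 for signed 4-bit
    codes, scales = [], []
    for start in range(0, len(w), group_size):
        group = w[start:start + group_size]
        # The largest magnitude in the group maps to qmax; all-zero groups get scale 1.0.
        scale = max(abs(v) for v in group) / qmax or 1.0
        scales.append(scale)
        codes.extend(max(-qmax, min(qmax, round(v / scale))) for v in group)
    return codes, scales

def dequantize_groupwise(codes, scales, group_size=32):
    """Approximate reconstruction: w_hat[i] = codes[i] * scale of i's group."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]

# Usage: 128 random weights become 128 4-bit codes plus 4 scales.
random.seed(0)
w = [random.gauss(0.0, 1.0) for _ in range(128)]
codes, scales = quantize_groupwise(w)
w_hat = dequantize_groupwise(codes, scales)
```

Each reconstructed weight differs from the original by at most half of its group's scale, and storing 4-bit codes instead of 16-bit values cuts weight memory roughly fourfold, minus the small overhead of the per-group scales.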

Other approaches, such as DéjàVu and Infinity Stream, improve data handling through efficient caching and in-memory processing. Many efforts aim to make LLMs run well on CPUs as a cost-effective alternative to specialized hardware, using techniques such as kernel fusion, operator optimization, and table lookup. Beyond model optimization, researchers are building complete serving systems and infrastructure for LLMs, such as Orca, a distributed serving system for Transformer-based generative models. Sustainability is also becoming a key concern, with studies of GPU power capping for more energy-efficient AI.

The entire LLM lifecycle is under scrutiny as well, prompting research into the LLM supply chain. Foundational work continues on openly released models such as Llama 2 and OPT, alongside comprehensive reviews of Generative Pre-trained Transformer (GPT) models. Novel computing paradigms are also being explored, including in-memory and near-memory computing, which move computation closer to where data is stored to reduce data movement. The RISC-V instruction set architecture is gaining traction as a platform for custom hardware acceleration, and SRAM-based in-memory computing offers a way to compute directly on data held in on-chip memory.
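Why moving computation closer to memory matters can be seen from a standard back-of-the-envelope bound for memory-bound decoding (a general estimate, not a result from the SAIL paper): at batch size 1, generating each token streams every weight from memory once, so throughput cannot exceed memory bandwidth divided by the weight footprint. The sketch below ignores KV-cache traffic, activations, caching effects, and compute time; the 7-billion-parameter model and the 100 GB/s bandwidth are hypothetical inputs.

```python
def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Bytes needed to store all weights at the given precision."""
    return n_params * bits_per_weight / 8

def max_decode_tokens_per_s(n_params: float, bits_per_weight: float,
                            bandwidth_bytes_per_s: float) -> float:
    """Upper bound on batch-1 decode throughput if every token reads all weights once.

    Simplified estimate: ignores KV cache, activations, caching effects, and compute time.
    """
    return bandwidth_bytes_per_s / weight_bytes(n_params, bits_per_weight)

# Hypothetical inputs: a 7B-parameter model on a system with 100 GB/s of memory bandwidth.
fp16_bound = max_decode_tokens_per_s(7e9, 16, 100e9)  # about 7.1 tokens/s
int4_bound = max_decode_tokens_per_s(7e9, 4, 100e9)   # about 28.6 tokens/s
```

Quantization raises this ceiling by shrinking the bytes read per token, while near-memory computing attacks the same bottleneck by avoiding much of the data movement across the memory hierarchy.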

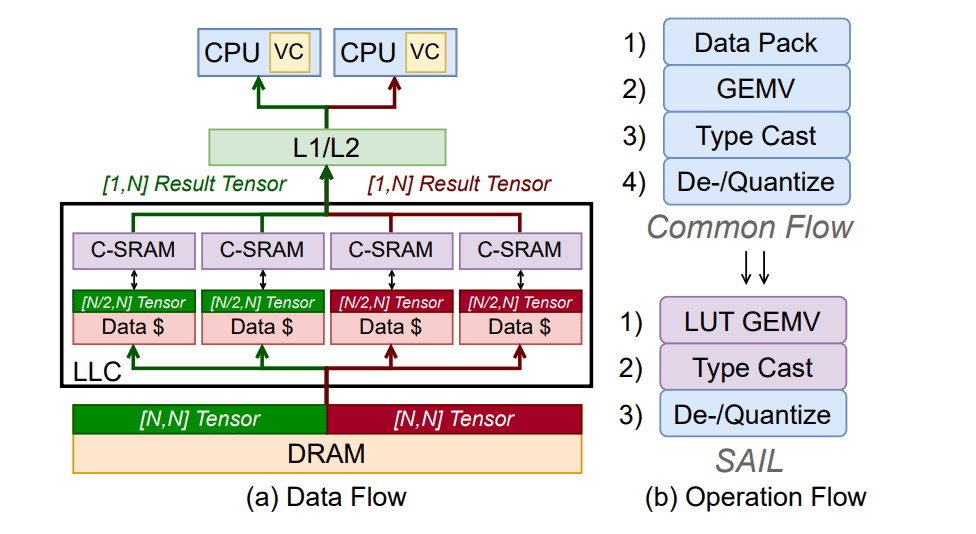

Key trends across this work include a strong focus on CPU performance, the widespread adoption of quantization, and a growing recognition that memory bandwidth is a critical bottleneck. SAIL builds on these trends with a near-cache Processing-in-Memory (PIM) architecture that places compute-capable SRAM arrays adjacent to the CPU caches. This enables direct data transfer and high-bandwidth in-place computation, supporting arbitrary-precision arithmetic at minimal cost. The arrays serve as both compute and storage units and perform Lookup Table-based General Matrix-Vector Multiplication (LUT-GEMV). The team's Pattern-Aware LUT optimization exploits redundancy in input activation patterns, reducing computation cycles by 13.8%.

This optimization, combined with flexible data placement strategies, supports large workloads and overlaps computation with data transfer through pipelining. An in-memory type conversion algorithm couples LUT-GEMV tightly with the existing compute and memory systems, using both the parallelism of the PIM arrays and the CPU vector engine for floating-point operations. A single new instruction natively supports quantized LLM inference, with fields for GEMV scale factors and quantization levels, at only 2% hardware overhead. Evaluations in a modified gem5 simulator show that SAIL achieves up to a 10.7x speedup and 19.9x higher tokens per dollar than ARM Neoverse-N1 CPU baselines, and up to 7.04x better cost efficiency than V100 GPUs.

These results suggest a practical path to efficient CPU-based LLM inference, contributing to the democratization of AI by reducing reliance on specialized hardware.
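The LUT-GEMV idea can be illustrated in plain Python. This is a generic bit-serial lookup-table GEMV, not SAIL's hardware dataflow or its Pattern-Aware LUT optimization; the function names, the group size g = 4, and the restriction to unsigned integer weights are assumptions made for illustration. Each weight is split into bit-planes, w = sum over b of 2^b * w_b with each w_b in {0, 1}. For each group of g activations, a table of all 2^g subset sums is built once; the g bits of one bit-plane then form an index into that table, so a multiply-accumulate over g elements becomes a single lookup and add. Because the tables depend only on the activations, a different weight precision only changes the number of bit-planes, which is one way to see how a LUT approach can accommodate arbitrary precision.

```python
import random

def build_lut(x_group):
    """Return lut with lut[p] = sum of x_group[i] over every bit i set in pattern p."""
    lut = [0] * (1 << len(x_group))
    for p in range(1, len(lut)):
        low = p & -p                  # lowest set bit of p
        i = low.bit_length() - 1      # index of that bit
        # Reuse the entry without this bit, so each entry costs one addition.
        lut[p] = lut[p ^ low] + x_group[i]
    return lut

def lut_gemv(W, x, bits, g=4):
    """Compute y = W @ x for unsigned `bits`-bit integer weights using table lookups."""
    K = len(x)
    if K % g:
        raise ValueError("len(x) must be a multiple of g")
    # One table per activation group, shared by every row and every bit-plane.
    luts = [build_lut(x[j:j + g]) for j in range(0, K, g)]
    y = []
    for row in W:
        acc = 0
        for b in range(bits):                    # one pass per weight bit-plane
            plane_sum = 0
            for gi, j in enumerate(range(0, K, g)):
                # Pack bit b of the g weights in this group into a g-bit table index.
                pattern = 0
                for t in range(g):
                    pattern |= ((row[j + t] >> b) & 1) << t
                plane_sum += luts[gi][pattern]
            acc += (1 << b) * plane_sum          # weight the bit-plane by 2**b
        y.append(acc)
    return y

# Usage: compare against a direct dot product on random 3-bit weights.
random.seed(1)
K, M, BITS = 16, 3, 3
x = [random.randint(-8, 7) for _ in range(K)]
W = [[random.randint(0, 2**BITS - 1) for _ in range(K)] for _ in range(M)]
y_ref = [sum(w * v for w, v in zip(row, x)) for row in W]
y_lut = lut_gemv(W, x, BITS)
```

Signed weights would need an offset (zero point) or separate sign handling, and real quantized models also apply the per-group scale factors; both are omitted to keep the lookup structure visible.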

SAIL Accelerates LLM Inference on CPUs

Researchers have developed SAIL, a system that accelerates large language model inference directly on standard CPUs. It targets the significant computational demands of these models and broadens access to artificial intelligence by reducing reliance on specialized hardware. SAIL overcomes limitations of existing approaches by integrating SRAM-based processing-in-memory with a batched lookup-table technique, enabling efficient computation across varying precision levels and model sizes. In simulation, it achieves up to a 10.7-fold speedup and a 19.9-fold improvement in tokens per dollar over conventional CPU baselines, and up to 7.04-fold better cost efficiency than V100 GPUs.
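The source describes the Pattern-Aware LUT optimization only at a high level (it exploits redundancy in input activation patterns), so the following is a hypothetical illustration of the general idea rather than the paper's algorithm: when quantized activations repeat, identical groups of g values yield identical tables, so a table can be built once and shared across positions and across the vectors of a batch. The dictionary cache and the function names are assumptions, and a hardware implementation would not necessarily work this way.

```python
def build_lut(x_group):
    """lut[p] = sum of x_group[i] over every bit i set in pattern p."""
    lut = [0] * (1 << len(x_group))
    for p in range(1, len(lut)):
        low = p & -p                                  # lowest set bit of p
        lut[p] = lut[p ^ low] + x_group[low.bit_length() - 1]
    return lut

def build_luts_with_reuse(batch, g=4):
    """Build per-group tables for every activation vector in `batch`,
    constructing a table only the first time a given activation group appears."""
    cache = {}          # maps an activation group (as a tuple) to its table
    tables_built = 0
    batch_luts = []
    for x in batch:
        luts = []
        for j in range(0, len(x), g):
            key = tuple(x[j:j + g])
            if key not in cache:                      # new pattern: build its table
                cache[key] = build_lut(key)
                tables_built += 1
            luts.append(cache[key])                   # repeated pattern: reuse
        batch_luts.append(luts)
    return batch_luts, tables_built

# Usage: two 8-element vectors whose first groups are identical,
# so only 3 tables are built for 4 groups.
batch = [[1, 2, 3, 4, 0, 0, 0, 0],
         [1, 2, 3, 4, 5, 6, 7, 8]]
batch_luts, tables_built = build_luts_with_reuse(batch)
```

How much reuse like this saves depends on how often activation groups repeat, which depends on activation bit width and group size; the 13.8% cycle reduction reported for SAIL comes from its own technique, not from this sketch.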

The core innovation is SAIL's ability to compute with lookup tables in compute-capable SRAM placed next to the CPU cache, minimizing data movement and maximizing data reuse. The approach requires minimal hardware overhead (about 2%), adding a small amount of logic near the cache and a single new instruction, while integrating with the existing memory hierarchy. Future work may extend SAIL to a wider range of models and explore its potential for other computational workloads.
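Data reuse is what makes a table-based approach pay off: each table is built once per activation group and then read by every weight row. A rough operation count makes this concrete. The cost model below is a simplified assumption, not SAIL's performance model: it assumes weights split into `bits` binary bit-planes and activations grouped g at a time with one 2^g-entry table per group, and it counts only additions and lookups, ignoring memory traffic, parallelism, and scale-factor handling.

```python
def direct_gemv_ops(M, K):
    """Multiply-accumulate operations for a dense M x K matrix-vector product."""
    return M * K

def lut_gemv_ops(M, K, bits, g):
    """Approximate operation count for a bit-plane LUT-GEMV (hypothetical cost model).

    Table construction: one addition per non-zero entry of a 2**g-entry table,
    for each of the K/g activation groups (shared by all M rows).
    Lookups: one lookup-and-add per (row, group, bit-plane).
    """
    groups = K // g
    build = groups * ((1 << g) - 1)
    lookups = M * groups * bits
    return build + lookups

# Example: a 4096 x 4096 layer with 2-bit weights and groups of 8 activations.
direct = direct_gemv_ops(4096, 4096)         # 16,777,216 multiply-accumulates
lut = lut_gemv_ops(4096, 4096, bits=2, g=8)  # 130,560 table adds + 4,194,304 lookups
```

Per row, the lookup count scales with bits/g rather than with one operation per weight, and the table cost is amortized over all M rows; for large g the balance shifts back because tables grow as 2^g. These are operation counts only, not measured performance.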

👉 More information

🗞 SAIL: SRAM-Accelerated LLM Inference System with Lookup-Table-based GEMV

🧠 ArXiv: https://arxiv.org/abs/2509.25853