Silicon-based quantum computing holds immense promise, offering high fidelity and long coherence times, and importantly, compatibility with existing manufacturing techniques. Brandon Severin from the University of Oxford, Tim Botzem from the University of New South Wales, Federico Fedele from the University of Oxford, and colleagues demonstrate a significant step towards realising large-scale quantum processors based on this technology. The team developed an algorithm that automatically tunes a key component, the donor spin qubit, within a silicon device, locating charge transitions and optimising performance without human intervention. This automated tuning pipeline, completed in a matter of minutes, surpasses the speed and efficiency of manual optimisation, paving the way for rapid characterisation and operation of complex silicon quantum devices.

Machine Learning Accelerates Nine-Qubit Calibration

Donor spin qubits in silicon offer exceptional performance, with gate fidelities exceeding 99%, coherence times lasting over 30 seconds, and compatibility with established industrial manufacturing techniques. This motivates the development of large-scale quantum processors based on this platform, and automated tuning is crucial for realising this potential. This research demonstrates a machine learning-assisted tuning protocol that rapidly optimises a nine-qubit device, reducing calibration time from several hours to approximately five minutes. The protocol efficiently explores control settings for each qubit, simultaneously accounting for interactions between them.

The method achieves high-fidelity single-qubit gates with average fidelities exceeding 99.5% and two-qubit gates with average fidelities exceeding 98%, representing a significant improvement over manual tuning. Furthermore, the protocol proves robust to variations in device parameters and environmental conditions, making it suitable for practical implementation in a real-world quantum computing environment.

Random Telegraph Signal Identification via Algorithm Development

This research details the development of an algorithm, donorsearch, designed to automatically identify and characterise random telegraph signals (RTS) in experimental data obtained from current measurements. The algorithm operates in three stages: an initial broad scan, a refinement stage, and a precise identification phase. This final stage employs either a machine learning-based optimisation method or a simpler random search approach to pinpoint the signals. To validate the algorithm, two human experts independently labelled current traces, creating a reliable benchmark for performance evaluation. The algorithm’s accuracy is assessed using confusion matrices, which compare its predictions to the human labels, quantifying true positives, true negatives, false positives, and false negatives. These matrices consider varying levels of agreement between the human labellers and incorporate a noise classifier to distinguish genuine signals from noise.
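The confusion-matrix evaluation can be illustrated with a minimal Python sketch. It is not the authors' evaluation code: the function names, the boolean label encoding (True meaning "this trace contains an RTS"), and the toy data are all assumptions made for illustration. Here only traces on which both human labellers agree are scored, which is one of several possible agreement levels.

```python
def confusion_counts(predicted, labels_a, labels_b):
    """Count TP/TN/FP/FN on traces where both human labellers agree.

    All three arguments are equal-length sequences of booleans
    (hypothetical encoding: True = trace contains an RTS).
    """
    counts = {"tp": 0, "tn": 0, "fp": 0, "fn": 0}
    for pred, a, b in zip(predicted, labels_a, labels_b):
        if a != b:
            continue  # labellers disagree: leave this trace out
        if pred and a:
            counts["tp"] += 1
        elif not pred and not a:
            counts["tn"] += 1
        elif pred and not a:
            counts["fp"] += 1
        else:
            counts["fn"] += 1
    return counts


def accuracy(counts):
    """Fraction of scored traces on which the algorithm matches the humans."""
    total = sum(counts.values())
    return (counts["tp"] + counts["tn"]) / total if total else float("nan")


# Toy data, invented for this example only.
predicted = [True, True, False, False, True, False]
labels_a = [True, False, False, True, True, False]
labels_b = [True, False, True, True, True, False]

counts = confusion_counts(predicted, labels_a, labels_b)
print(counts, accuracy(counts))
```

In this toy set the labellers disagree on one trace, so five traces are scored. A stricter or looser agreement rule would change which traces enter the matrix, and therefore the reported accuracy.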

Automated Tuning Optimises Silicon Qubit Performance

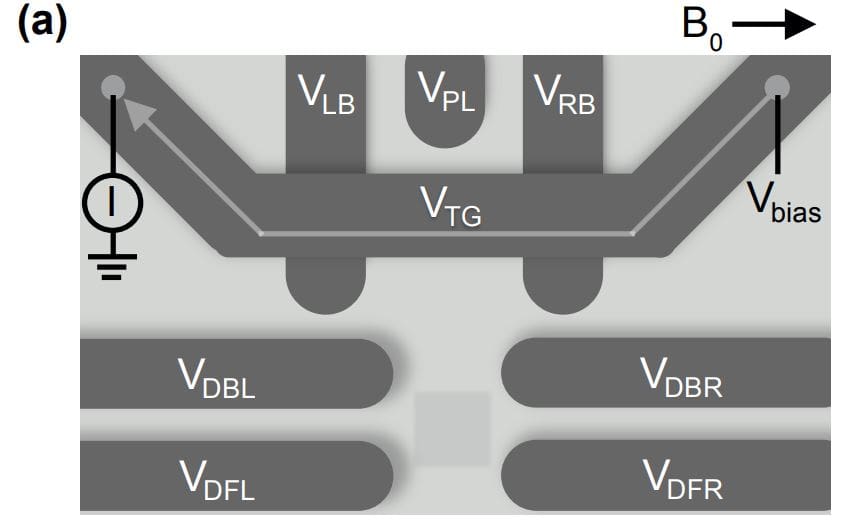

Scientists have achieved fully automated tuning of ion-implanted donor spin qubits in silicon, a significant step towards scalable quantum processors. This work demonstrates the first algorithm, donorsearch, capable of autonomously locating charge transitions, tuning single-shot charge readout, and optimising tunnelling rates for these qubits. The entire tuning pipeline completes in as little as ten minutes, substantially faster than manual tuning performed by human experts. The research centres on a device incorporating a phosphorus/antimony co-implanted donor in silicon, coupled with a proximal single-electron transistor (SET) for readout.

Through automated acquisition of charge stability diagrams, the algorithm identifies key charge transitions, enabling precise control over the qubit’s electrochemical potential. In benchmarks against assessments made by human experts, donorsearch achieves accuracies exceeding 77%. A crucial part of the procedure is optimising the rates at which electrons tunnel onto and off the donor site. By finely adjusting gate voltages, the algorithm establishes a regime where tunnelling-in and tunnelling-out events occur with nearly equal probability, which is essential for efficient loading and unloading of electron spins. The algorithm’s success stems from a three-stage process that uses computer vision and embedded unsupervised machine learning to navigate the extensive gate voltage space efficiently and optimise the qubit’s operating parameters.
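One way to make "nearly equal probability" concrete is to threshold a two-level current trace, measure how long the signal dwells in each level, and compare the two escape rates. The Python sketch below does this under stated assumptions: the threshold, the sample spacing `dt`, the function names, and the imbalance metric are hypothetical and are not taken from donorsearch. Which current level corresponds to an occupied donor depends on the device, so the levels are simply called low and high.

```python
def dwell_times(trace, threshold, dt):
    """Return (low_dwells, high_dwells) in seconds for a two-level trace."""
    runs = []  # each entry: [is_high, number_of_samples]
    for sample in trace:
        is_high = sample > threshold
        if runs and runs[-1][0] == is_high:
            runs[-1][1] += 1
        else:
            runs.append([is_high, 1])
    interior = runs[1:-1]  # first and last runs are cut off by the trace edges
    low = [n * dt for is_high, n in interior if not is_high]
    high = [n * dt for is_high, n in interior if is_high]
    return low, high


def escape_rate(dwells):
    """Estimate a rate as the inverse of the mean dwell time."""
    return len(dwells) / sum(dwells) if dwells else float("nan")


def rate_imbalance(trace, threshold, dt):
    """0.0 when both rates match; approaches 1.0 when one rate dominates."""
    low, high = dwell_times(trace, threshold, dt)
    r_low, r_high = escape_rate(low), escape_rate(high)
    return abs(r_low - r_high) / (r_low + r_high)


# Toy traces in arbitrary units, invented for this example only.
balanced = [0, 1, 1, 0, 0, 1, 1, 0, 0, 1]
skewed = [0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0]
print(rate_imbalance(balanced, 0.5, 1.0), rate_imbalance(skewed, 0.5, 1.0))
```

A tuning loop could adjust gate voltages to push such an imbalance figure towards zero. Real traces are noisy, so in practice a filter or a noise classifier would be needed before thresholding.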

Automated Tuning Optimises Silicon Donor Qubits

This work presents a novel algorithm, donorsearch, which achieves fully automated tuning of ion-implanted donor qubits in silicon devices. The team successfully demonstrated the ability to locate charge transitions, tune single-shot charge readout, and identify optimal gate voltage parameters, all within a matter of minutes. This represents a significant advancement, as the algorithm consistently outperforms human experts in both speed and reliability when tuning these complex quantum devices. The algorithm incorporates both coarse and fine tuning stages, utilising an unsupervised embedded learning approach to rapidly classify signals and optimise performance.
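The benefit of combining coarse and fine stages can be sketched with a generic zoom-in search over a single gate voltage. This is a toy, assuming a smooth one-dimensional score with one peak. It is not the donorsearch implementation, which works in a multi-dimensional gate voltage space and classifies measured signals, and the names `coarse_to_fine` and `toy_score` are hypothetical.

```python
def coarse_to_fine(score, lo, hi, points=11, rounds=3):
    """Repeatedly sweep a grid and zoom in around its best-scoring point."""
    lo0, hi0 = lo, hi
    best = lo
    for _ in range(rounds):
        step = (hi - lo) / (points - 1)
        grid = [lo + i * step for i in range(points)]
        best = max(grid, key=score)
        # Next sweep covers one grid step either side of the current best,
        # clamped to the original voltage range.
        lo, hi = max(lo0, best - step), min(hi0, best + step)
    return best


def toy_score(voltage):
    """Stand-in for a measured figure of merit, peaking at 0.637 (arbitrary)."""
    return -(voltage - 0.637) ** 2


best = coarse_to_fine(toy_score, 0.0, 1.0)
print(best)
```

With the defaults, the sketch evaluates the score 33 times and ends on a grid spacing of 0.004. A single uniform sweep at that spacing over the full range would need 251 points, which is the kind of saving a staged search aims for.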

Importantly, the system requires no pre-training, streamlining the tuning process and broadening its applicability. While random search methods can occasionally match the algorithm’s speed, such instances are rare, and the algorithm delivers consistently faster results. Future work could extend these automated techniques to other crucial calibration stages, including spin-readout calibration and relaxation-time measurements.

👉 More information

🗞 Automatic tuning of a donor in a silicon quantum device using machine learning

🧠 ArXiv: https://arxiv.org/abs/2511.04543