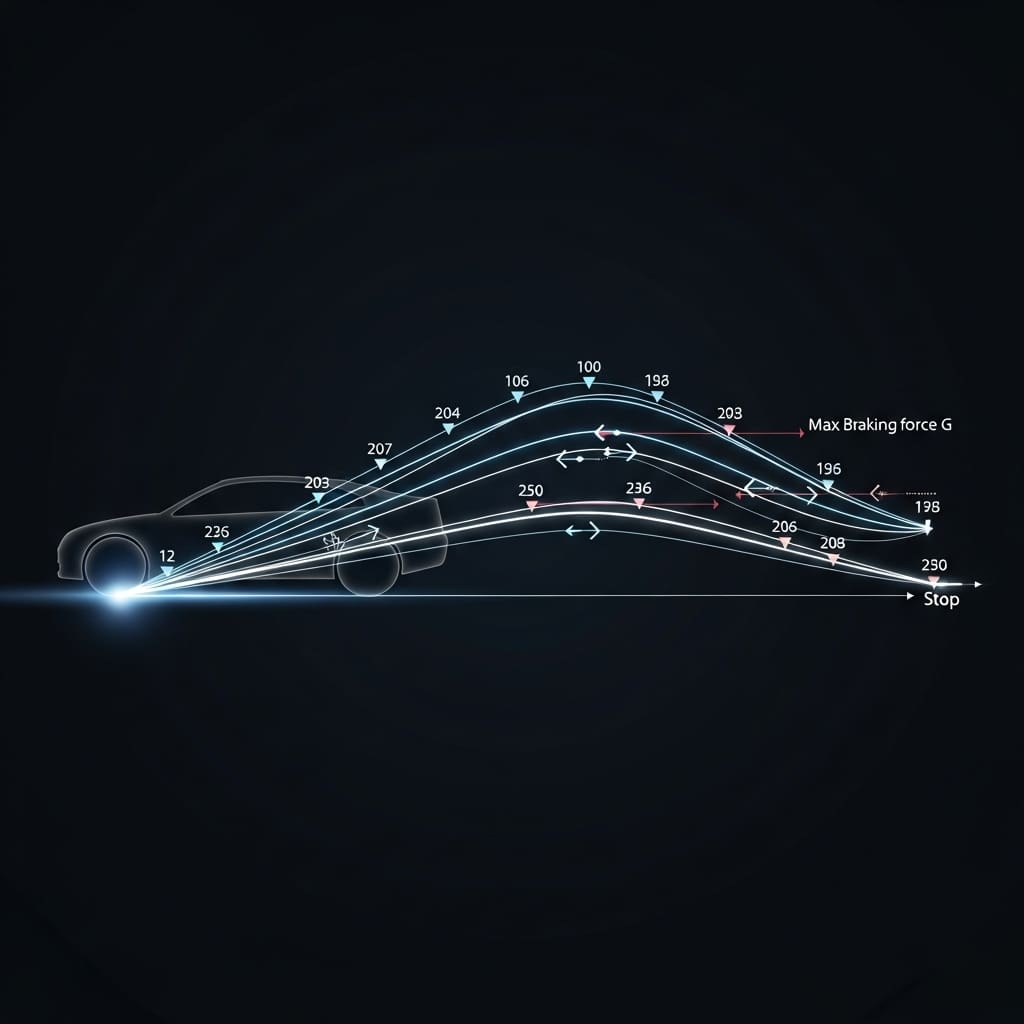

Preventing false emergency braking in commercial vehicles is the focus of work led by Zhe Li, Kun Cheng from China Jiliang University, Hanyue Mo, Jintao Lu, Ziwen Kuang from China Jiliang University, and Jianwen Ye. Their research tackles the problem of ‘zero-speed braking’, which is often caused by unreliable data from vehicle communication networks, particularly during low-speed manoeuvres. The team develops a vision-based system that classifies vehicle motion as static, vibrating, or moving by analysing video footage from blind spot cameras. Using enhanced feature tracking and robust statistical analysis, the approach suppresses environmental interference and reduces false activations by 89% in on-site testing, while achieving a 100% success rate in emergency braking scenarios.

Vision-Radar Fusion For Robust Emergency Braking

This research concentrates on developing a robust and reliable automatic emergency braking (AEB) system that functions effectively in challenging operational conditions. The system integrates computer vision and radar technology with advanced data processing techniques to enhance safety and reduce the risk of collisions. A primary objective is to minimise false positives and improve detection accuracy, particularly in adverse weather and complex driving scenarios where current systems often struggle. The emphasis on comprehensive dataset creation and rigorous evaluation underscores the importance of thorough testing and validation for increasingly autonomous driving systems, moving beyond simulation to real-world performance assessment.

The system uses computer vision techniques, notably Scale-Invariant Feature Transform (SIFT) for robust feature extraction, and integrates them with radar data to improve overall robustness, especially in poor visibility such as heavy rain, dense fog, or night-time operation. Comprehensive datasets are crucial for both training and evaluating the system’s performance, including the Ithaca365 dataset, which provides diverse urban driving scenes, and the ApolloScape dataset, known for its high-definition mapping and sensor data. The research specifically addresses AEB performance in inclement weather, where sensor degradation significantly impacts reliability, and the minimisation of false positives, which is needed to maintain driver trust and prevent unnecessary interventions. False positives can lead drivers to disengage from or reject the technology, hindering its widespread adoption.

Vehicle Motion Analysis From Camera Data

The researchers engineered a vision-based system to address inaccurate speed readings commonly found in commercial vehicle automatic emergency braking (AEB) systems, particularly during low-speed operation where precise motion detection is paramount. The system processes video footage from a blind spot camera, employing enhanced feature extraction and matching techniques to classify vehicle motion as static, vibrating, or moving. This classification gives a more nuanced picture of the vehicle’s state, improving the accuracy of AEB activation and preventing unnecessary braking events. The system attains a 99.96% F1-score for static detection and 97.78% for moving state recognition, showing that it distinguishes reliably between motion states. The F1-score, the harmonic mean of precision and recall, provides a balanced measure of the system’s performance.
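
As a brief illustration of the metric quoted above, the following minimal sketch computes an F1-score from raw detection counts. The function name and the counts are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Return the harmonic mean of precision and recall from raw counts."""
    if tp == 0:
        # No true positives: precision and recall are zero (or undefined).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for illustration only (not from the paper).
print(f"{f1_score(tp=90, fp=10, fn=5):.4f}")
```

Because the harmonic mean is pulled towards the smaller of its two inputs, a classifier cannot reach a high F1-score by trading away either precision or recall.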

The system's feature extraction combines Contrast Limited Adaptive Histogram Equalization (CLAHE) and Scale-Invariant Feature Transform (SIFT). CLAHE enhances image quality in low-contrast conditions by adjusting the histogram locally, which makes features more visible, while SIFT identifies and describes local features that are invariant to scale, rotation, and illumination changes. Multiframe trajectory analysis over a five-frame sliding window assesses temporal consistency, tracking features over time and filtering out spurious detections. The system also uses On-Board Diagnostics (OBD-II) data to configure a Region of Interest (ROI) dynamically, so that processing is concentrated on the areas of the video feed where motion is most likely to occur, which reduces unnecessary computation and improves real-time performance.
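
The five-frame trajectory analysis described above can be pictured with a small sketch. This is not the authors' implementation: it assumes that feature matching has already produced pairs of pixel coordinates, takes the per-frame median shift as the robust statistic, and separates vibration from real motion by checking whether shifts across the window point in a consistent direction. The thresholds `STATIC_PX` and `CONSISTENCY` and all function names are hypothetical.

```python
from collections import deque
from math import hypot
from statistics import median

WINDOW = 5          # five-frame sliding window, as described in the text
STATIC_PX = 0.5     # assumed: mean per-frame shift below this is "static"
CONSISTENCY = 0.7   # assumed: net/total shift ratio at or above this is "moving"


def median_shift(matches):
    """Median (dx, dy) over matched feature pairs ((x0, y0), (x1, y1))."""
    if not matches:
        return (0.0, 0.0)
    dx = median(x1 - x0 for (x0, _), (x1, _) in matches)
    dy = median(y1 - y0 for (_, y0), (_, y1) in matches)
    return (dx, dy)


def classify(window):
    """Label one full window of per-frame shifts."""
    total = sum(hypot(dx, dy) for dx, dy in window)
    if total / len(window) < STATIC_PX:
        return "static"
    # Shifts that cancel out over the window indicate vibration, not travel.
    net = hypot(sum(dx for dx, _ in window), sum(dy for _, dy in window))
    return "moving" if net / total >= CONSISTENCY else "vibrating"


def classify_stream(shifts):
    """Yield one label per frame once the sliding window is full."""
    window = deque(maxlen=WINDOW)
    for shift in shifts:
        window.append(shift)
        if len(window) == WINDOW:
            yield classify(window)


# Synthetic shifts: a steady drift to the right, then alternating jitter.
drift = [(3.0, 0.0)] * 5
jitter = [(2.0, 0.0), (-2.0, 0.0), (2.0, 0.0), (-2.0, 0.0), (2.0, 0.0)]
print(list(classify_stream(drift)))
print(list(classify_stream(jitter)))
```

Taking the median rather than the mean keeps a single mismatched feature from dominating a frame's estimate, and requiring a full window before emitting a label means one noisy frame cannot flip the classification on its own.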

👉 More information

🗞 Commercial Vehicle Braking Optimization: A Robust SIFT-Trajectory Approach

🧠 ArXiv: https://arxiv.org/abs/2512.18597