Image classification currently relies on powerful, yet classically constrained, computational methods, but researchers are now exploring quantum machine learning as a potential pathway to significant advances. Mingzhu Wang and Yun Shang, from the Academy of Mathematics and Systems Science, Chinese Academy of Sciences, and their colleagues demonstrate a hybrid approach that combines the strengths of Vision Transformers and Quantum Convolutional Neural Networks. Their framework, ViT-QCNN-FT, compresses complex images into a format suitable for current quantum computers and achieves 99.77% accuracy on the CIFAR-10 dataset. The work shows that carefully designed quantum entanglement enhances performance and, surprisingly, that noise can improve accuracy in certain instances. It also reports a clear quantum advantage over comparable classical systems, paving the way for practical quantum machine learning applications.

Quantum machine learning promises computational advantages, but progress on real-world tasks is hampered by the need for classical preprocessing and the limitations of noisy quantum devices. Researchers introduce ViT-QCNN-FT, a hybrid framework that integrates a fine-tuned Vision Transformer (ViT) with a quantum convolutional neural network (QCNN) to compress high-dimensional images into a format suitable for current quantum hardware. The method systematically explores entanglement strategies, revealing that quantum circuits with uniformly distributed entanglement consistently deliver superior performance in fusing complex, non-local features. Results demonstrate state-of-the-art accuracy, achieving 99.77% on CIFAR-10.

The core idea is to combine the strengths of classical deep learning, particularly ViTs known for their performance in image recognition, with the potential advantages of quantum computing, such as efficient feature extraction. The team addresses the limitations of fully quantum models by proposing a hybrid approach to achieve practical benefits, aiming for models that are not only accurate but also robust to adversarial attacks. The ViT and QCNN are combined in three stages: ViT features are mapped into quantum states, the quantum circuit processes them using convolution and pooling operations, and a final measurement yields the classification. The research explores different methods for encoding classical image data into quantum states and employs techniques to train the models to be more resilient to adversarial attacks. The hybrid models demonstrate improved performance compared to purely classical or purely quantum models in certain scenarios, and exhibit increased robustness against adversarial attacks, suggesting that quantum processing can enhance security.

The QCNNs effectively learn complex features from the ViT-extracted features, and the research investigates the expressibility and entanglement capabilities of the QCNNs, which are crucial for their performance. This research contributes to the growing field of quantum machine learning by demonstrating the potential of hybrid quantum-classical models for image classification, highlighting the benefits of combining classical and quantum computing.
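
As a rough illustration of the convolution, pooling, and measurement stages described above, the following toy sketch simulates a 4-qubit circuit in plain Python. It is not the authors' circuit: the gate choice (RY rotations followed by a CNOT), the pairing of qubits, pooling by simply discarding qubits, and the function names `apply_ry`, `apply_cnot`, `expect_z`, and `qcnn_forward` are all assumptions made for illustration.

```python
import math

def apply_ry(state, qubit, theta):
    """Apply RY(theta) to one qubit of a real-valued state vector."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    out = list(state)
    bit = 1 << qubit
    for i in range(len(state)):
        if not i & bit:  # index i has the qubit in |0>, index i|bit has it in |1>
            a0, a1 = state[i], state[i | bit]
            out[i] = c * a0 - s * a1
            out[i | bit] = s * a0 + c * a1
    return out

def apply_cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is |1>."""
    out = list(state)
    cbit, tbit = 1 << control, 1 << target
    for i in range(len(state)):
        if i & cbit and not i & tbit:
            out[i], out[i | tbit] = state[i | tbit], state[i]
    return out

def expect_z(state, qubit):
    """Expectation value of Pauli-Z on one qubit (between -1 and 1)."""
    bit = 1 << qubit
    return sum((-1 if i & bit else 1) * a * a for i, a in enumerate(state))

def qcnn_forward(state, thetas):
    """Toy 4-qubit QCNN (hypothetical layout): two conv blocks, pool, one conv block."""
    if len(state) != 16 or len(thetas) != 6:
        raise ValueError("expected a 16-amplitude state and 6 rotation angles")
    angles = iter(thetas)
    # Convolution layer 1 on pairs (0, 1) and (2, 3).
    # Pooling: after this layer qubits 0 and 2 are ignored, leaving qubits 1 and 3.
    # Convolution layer 2 on the surviving pair (1, 3).
    for a, b in ((0, 1), (2, 3), (1, 3)):
        state = apply_ry(state, a, next(angles))
        state = apply_ry(state, b, next(angles))
        state = apply_cnot(state, a, b)
    # Measurement: read out the last remaining qubit.
    return expect_z(state, 3)

zero_state = [1.0] + [0.0] * 15           # |0000>
print(qcnn_forward(zero_state, [0.0] * 6))  # → 1.0
```

In a hybrid setup the input state would come from the encoded ViT features and the angles would be trained. A ten-class task such as CIFAR-10 would also need more than the single readout value used here.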

Hybrid Quantum-Classical Network Achieves Record Accuracy

The research team developed ViT-QCNN-FT, a hybrid framework integrating a fine-tuned Vision Transformer with a quantum convolutional neural network (QCNN). They compressed high-dimensional image data into a format suitable for current quantum devices, achieving state-of-the-art accuracy of 99.77% on the CIFAR-10 image classification task. A surprising finding was the dual role of quantum noise: under certain conditions, specifically amplitude damping, noise enhanced accuracy by 2.71%.
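
For readers unfamiliar with amplitude damping, the channel models energy loss: with probability `gamma` a qubit in the excited state decays to the ground state. The sketch below applies the standard single-qubit amplitude damping channel to a 2x2 density matrix in plain Python. The function name and the example value of `gamma` are illustrative assumptions; the article does not state the noise strengths or the simulator used in the study.

```python
import math

def amplitude_damping(rho, gamma):
    """Apply the single-qubit amplitude damping channel to a 2x2 density matrix.

    Kraus operators:
        K0 = [[1, 0], [0, sqrt(1 - gamma)]]
        K1 = [[0, sqrt(gamma)], [0, 0]]
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    (a, b), (c, d) = rho
    keep = math.sqrt(1.0 - gamma)
    # K0 rho K0^dagger + K1 rho K1^dagger, written out entry by entry.
    return [[a + gamma * d, keep * b],
            [keep * c, (1.0 - gamma) * d]]

excited = [[0.0, 0.0], [0.0, 1.0]]        # the state |1><1|
print(amplitude_damping(excited, 0.25))   # → [[0.25, 0.0], [0.0, 0.75]]
```

The channel moves population from the excited state to the ground state and shrinks the off-diagonal coherences, while the trace of the density matrix stays equal to 1.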

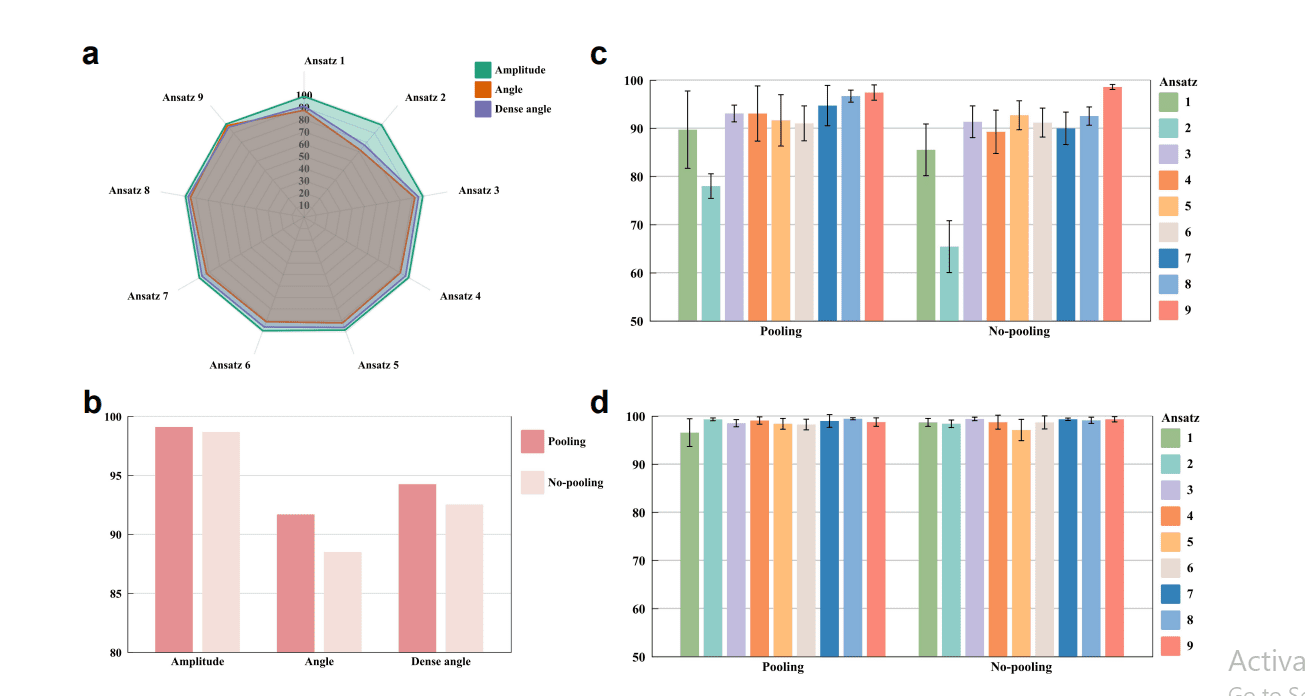

Detailed analysis revealed the efficiency of the QCNN component, as replacing it with classical convolutional neural networks of equivalent size resulted in a substantial 29.36% drop in accuracy, providing unambiguous evidence of quantum advantage. Researchers systematically compared three quantum state encoding methods, finding that amplitude encoding consistently outperformed angle and dense angle encodings, particularly when compressing data to 10% of its original size. This compression, which reduces the 3072-dimensional input to approximately 10% of its original size, did not significantly impact performance, with the team achieving 99.53% accuracy using 8-qubit amplitude encoding.

Further experiments explored the impact of different QCNN designs, revealing that performance varied significantly, with the best design achieving an accuracy 33.15% higher than the worst. The team also demonstrated that the framework achieves comparable performance with a reduced qubit count, maintaining high accuracy even after reducing the qubit count from 10 to 8 and compressing the classical feature space. These results establish a principled pathway for co-designing classical and quantum architectures, paving the way for practical quantum machine learning capable of tackling complex, high-dimensional tasks.
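
To make the amplitude encoding step concrete: an n-qubit register holds 2^n amplitudes, so 8 qubits can store at most 256 real values and 10 qubits at most 1024. The sketch below shows the generic technique in plain Python, zero-padding a feature vector and normalizing it to unit length. The function name `amplitude_encode` and the zero-padding choice are assumptions for illustration; in the framework the input would be the compressed ViT feature vector, whose exact size the article does not give.

```python
import math

def amplitude_encode(features, n_qubits):
    """Map a real feature vector to the 2**n_qubits amplitudes of a quantum state."""
    dim = 2 ** n_qubits
    if len(features) > dim:
        raise ValueError(f"{len(features)} features do not fit in {dim} amplitudes")
    # Zero-pad so the vector length matches the state dimension.
    padded = list(features) + [0.0] * (dim - len(features))
    norm = math.sqrt(sum(x * x for x in padded))
    if norm == 0.0:
        raise ValueError("cannot encode the all-zero vector")
    # Amplitudes need unit L2 norm so that measurement probabilities sum to 1.
    return [x / norm for x in padded]

for n_qubits in (8, 10):
    print(n_qubits, "qubits hold", 2 ** n_qubits, "amplitudes")

print(amplitude_encode([3.0, 4.0], n_qubits=2))  # → [0.6, 0.8, 0.0, 0.0]
```

Because the number of amplitudes grows exponentially with the qubit count, amplitude encoding packs far more features into a small register than encodings that use one rotation angle per qubit, which is one plausible reason it suits heavily compressed inputs.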

ViT-QCNN Achieves Record Image Classification Accuracy

This research demonstrates a new hybrid framework, ViT-QCNN-FT, which combines a Vision Transformer with a quantum convolutional neural network. The team compressed high-dimensional image data into a format suitable for current, noisy quantum devices, achieving state-of-the-art accuracy of 99.77% on the CIFAR-10 dataset. A key finding is that carefully designed quantum circuits, utilizing uniformly distributed entanglement, significantly improve the fusion of complex, non-local features. Notably, the study reveals a surprising effect of quantum noise: in certain instances, moderate levels of noise can improve accuracy, potentially by encouraging the model to learn more robust features. Crucially, replacing the quantum convolutional neural network with a classical counterpart of equivalent complexity resulted in a substantial performance decrease, providing strong evidence for a quantum advantage in this task.

👉 More information

🗞 Hybrid Vision Transformer and Quantum Convolutional Neural Network for Image Classification

🧠 ArXiv: https://arxiv.org/abs/2510.12291