Hyperdimensional computing offers a promising pathway to energy-efficient machine learning, but its memory demands often limit practical deployment, particularly on resource-constrained devices. Sanggeon Yun, Hyunwoo Oh, and Ryozo Masukawa, alongside Mohsen Imani, all from the University of California, Irvine, address this challenge with a novel approach called DecoHD. The method dramatically reduces the memory footprint of hyperdimensional models with little loss of accuracy, achieving its savings through a learned decomposition of the model's parameters. DecoHD keeps the core operations of hyperdimensional computing (binding, bundling, and scoring) while enabling extreme compression, and when implemented on dedicated hardware it delivers significant gains in energy efficiency and speed, surpassing CPUs, GPUs, and even existing hyperdimensional computing ASICs.

Class-Selective Decomposition Boosts Hyperdimensional Computing Efficiency

This research introduces Class-Selective Decomposition (CSD) to improve the efficiency of Hyperdimensional Computing (HDC), a biologically inspired computing paradigm. HDC, while promising for edge AI and low-power applications, can be computationally expensive and require significant memory, especially with complex datasets. CSD addresses these limitations by decomposing high-dimensional vectors into lower-dimensional, class-selective components, enabling more compact representations and faster computations. The method learns a set of basis vectors for each class, storing only the coefficients instead of the full high-dimensional vector, drastically reducing memory requirements.
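To make the memory argument concrete, the short Python sketch below counts the values stored by a dense class memory versus a coefficient-based one. It assumes, for illustration only, that a single basis of `num_basis` hypervectors is shared by all classes (the text does not say exactly how the basis vectors are stored); the helper names and the example sizes are hypothetical and not taken from the paper.

```python
def dense_params(num_classes: int, dim: int) -> int:
    """Values stored by conventional HDC: one dim-length prototype per class."""
    return num_classes * dim


def decomposed_params(num_classes: int, dim: int, num_basis: int) -> int:
    """Values stored when classes keep only coefficients over a shared basis.

    Assumption (hypothetical): one basis of `num_basis` dim-length vectors is
    shared by all classes, and each class stores `num_basis` coefficients.
    """
    basis = num_basis * dim
    coefficients = num_classes * num_basis
    return basis + coefficients


if __name__ == "__main__":
    C, D, K = 100, 10_000, 16  # hypothetical sizes, not the paper's configuration
    dense = dense_params(C, D)
    deco = decomposed_params(C, D, K)
    print(dense, deco, f"{1 - deco / dense:.1%} fewer stored values")
    # → 1000000 161600 83.8% fewer stored values
```

Under this assumption the saving grows with the number of classes: each additional class costs `num_basis` coefficients instead of a full `dim`-length hypervector.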

Computations such as similarity comparisons are then performed on these coefficients, which speeds up inference and lets HDC scale to larger datasets. The research also demonstrates the method's robustness to errors and evaluates CSD on benchmark datasets, reporting substantial reductions in memory usage and computation time while maintaining or improving classification accuracy. The work targets resource-constrained edge devices, and the researchers show that CSD can be combined with optimization techniques such as quantization and sparse training to further improve efficiency. This places the work within the growing field of TinyML and edge AI, building on prior work in HDC, sparse coding, tensor decomposition, and model compression. The authors highlight how their approach complements these techniques by tackling efficiency, a critical challenge for HDC, and opening up new possibilities for applications in wearable computing, IoT, and sensor networks.
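Why similarity can be computed on coefficients follows from linearity: if a class prototype is a weighted sum of basis vectors, its dot product with a query equals the same weighted sum of the basis vectors' dot products with that query. The sketch below checks this numerically, assuming bipolar basis vectors shared across classes and integer coefficients (so both routes agree exactly); the function names and sizes are hypothetical, not the authors' code.

```python
import random


def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def scores_dense(coeffs, basis, query):
    """Reconstruct every class prototype (C x D work), then score it."""
    dim = len(query)
    scores = []
    for a in coeffs:
        proto = [sum(a_k * b[i] for a_k, b in zip(a, basis)) for i in range(dim)]
        scores.append(dot(proto, query))
    return scores


def scores_from_coefficients(coeffs, basis, query):
    """Project the query onto the K basis vectors once, then score in K dims."""
    projected = [dot(b, query) for b in basis]  # K dot products of length D
    return [dot(a, projected) for a in coeffs]  # C dot products of length K


if __name__ == "__main__":
    rng = random.Random(0)
    D, K, C = 512, 8, 5  # hypothetical sizes
    basis = [[rng.choice((-1, 1)) for _ in range(D)] for _ in range(K)]
    coeffs = [[rng.randint(-3, 3) for _ in range(K)] for _ in range(C)]
    query = [rng.choice((-1, 1)) for _ in range(D)]
    # Integer inputs make both routes exactly equal, not just approximately.
    assert scores_dense(coeffs, basis, query) == scores_from_coefficients(coeffs, basis, query)
    print(scores_from_coefficients(coeffs, basis, query))
```

The coefficient route costs roughly K·D + C·K multiply-adds instead of C·D, which is where the speedup would come from when the number of classes is large relative to K.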

Decomposition Reduces Hypervector Memory Footprint

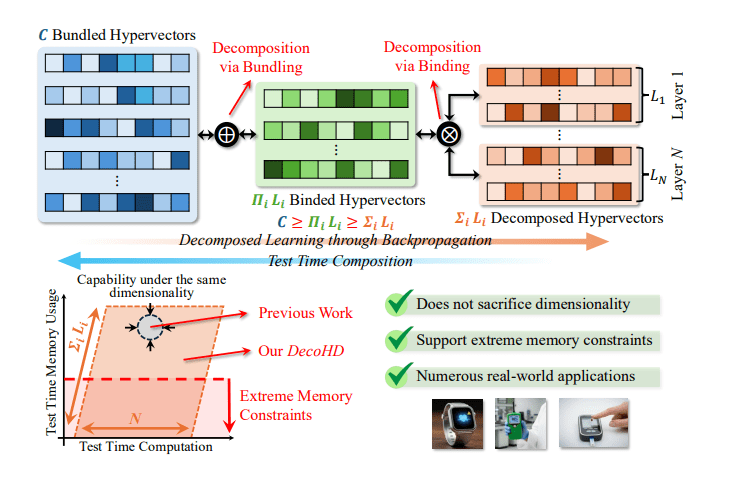

Scientists developed DecoHD, a novel approach that reduces the memory footprint of hyperdimensional computing (HDC) without sacrificing representational power. Conventional HDC runs into memory bottlenecks because it stores one dense hypervector per class, which is especially costly in resource-constrained edge applications. Instead of reducing the hypervector dimensionality, the team decomposed class prototypes into compositions of a small, shared set of learnable channels. The method uses a bind-bundle pipeline: each layer exposes a set of learnable channels, and selecting one channel per layer and binding the selections together yields a path.
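A minimal sketch of the path construction follows, assuming bipolar (±1) channels and elementwise multiplication as the binding operator, as in conventional HDC. The channels here are random rather than learned, and `bound_paths` is a hypothetical helper; the point is only that L layers of M stored channels yield M^L distinct, nearly orthogonal bound paths.

```python
import itertools
import random


def bind(u, v):
    """Bipolar binding: elementwise multiplication."""
    return [a * b for a, b in zip(u, v)]


def bound_paths(layers):
    """Bind one channel from every layer, for every possible choice.

    `layers` is a list of L layers, each a list of M channel hypervectors.
    Returns {channel-index tuple: bound path hypervector}.
    """
    paths = {}
    for choice in itertools.product(*(range(len(layer)) for layer in layers)):
        vec = layers[0][choice[0]]
        for layer, idx in zip(layers[1:], choice[1:]):
            vec = bind(vec, layer[idx])
        paths[choice] = vec
    return paths


def bipolar_cosine(u, v):
    """Cosine similarity for bipolar vectors, whose squared norm is always D."""
    return sum(a * b for a, b in zip(u, v)) / len(u)


if __name__ == "__main__":
    rng = random.Random(0)
    D, L, M = 1024, 3, 4  # hypothetical: 3 layers x 4 channels = 12 stored vectors
    layers = [[[rng.choice((-1, 1)) for _ in range(D)] for _ in range(M)] for _ in range(L)]
    paths = bound_paths(layers)
    vecs = list(paths.values())
    worst = max(abs(bipolar_cosine(u, v)) for u, v in itertools.combinations(vecs, 2))
    print(len(paths), "paths from", L * M, "channels; max |cos| =", round(worst, 3))
```

Storage grows with L·M while the number of distinct paths grows as M^L, which is one way to read the idea of building a large representational space from compact factors.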

A lightweight, class-specific head then aggregates these paths to form logits, enabling a tunable trade-off among memory usage, inference cost, accuracy, and robustness. Crucially, the team trained the system end-to-end, jointly optimizing the low-dimensional latents and the class bundling weights; this preserves the holographic properties of HDC and enables stable learning in the decomposed space. The approach reduces the number of trainable parameters by up to 97 percent. Experiments show that DecoHD achieves accuracy comparable to conventional HDC while delivering substantial performance gains across hardware platforms: an ASIC implementation achieves up to 277× higher energy efficiency and 35× faster inference than a CPU baseline, with further gains over a GPU. Because inference relies solely on binding, bundling, and dot products, the method remains compatible with existing HDC accelerators and memory-centric hardware.
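The sketch below illustrates a class head scoring a query, assuming bipolar channels and elementwise binding as in conventional HDC. The head weights are random integers standing in for learned bundling weights, and `logits` and `bundled_prototype` are hypothetical names, not the paper's implementation. It checks that scoring path by path gives the same logits as first bundling each class's paths into one dense prototype and taking a single dot product, which is why inference needs only binding, bundling, and dot products.

```python
import itertools
import random


def bind_all(vectors):
    """Bind a list of bipolar hypervectors by elementwise multiplication."""
    out = list(vectors[0])
    for v in vectors[1:]:
        out = [a * b for a, b in zip(out, v)]
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def enumerate_paths(layers):
    """All bound paths, one channel per layer, in itertools.product order."""
    choices = itertools.product(*(range(len(layer)) for layer in layers))
    return [bind_all([layer[i] for layer, i in zip(layers, c)]) for c in choices]


def logits(layers, head, query):
    """Per-class logit = head-weighted sum of path-query similarities.

    `head[c][p]` is a (hypothetical) bundling weight of class c on path p.
    """
    path_scores = [dot(p, query) for p in enumerate_paths(layers)]
    return [dot(w, path_scores) for w in head]


def bundled_prototype(layers, weights):
    """Bundle (weighted-sum) the bound paths into one dense class prototype."""
    paths = enumerate_paths(layers)
    return [sum(w * p[i] for w, p in zip(weights, paths)) for i in range(len(paths[0]))]


if __name__ == "__main__":
    rng = random.Random(0)
    D, L, M, C = 256, 2, 3, 4  # hypothetical sizes: 9 paths, 4 classes
    layers = [[[rng.choice((-1, 1)) for _ in range(D)] for _ in range(M)] for _ in range(L)]
    head = [[rng.randint(-2, 2) for _ in range(M ** L)] for _ in range(C)]
    query = [rng.choice((-1, 1)) for _ in range(D)]
    via_paths = logits(layers, head, query)
    via_prototypes = [dot(bundled_prototype(layers, w), query) for w in head]
    assert via_paths == via_prototypes  # same logits either way
    print(via_paths)
```

In this toy layout the stored state is L·M·D channel values plus C·M^L head weights, compared with C·D for dense per-class prototypes; the paper's trainable parameterization (with low-dimensional latents) may differ.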

DecoHD Compresses Models With Minimal Accuracy Loss

The research team developed DecoHD, a novel approach to compressing hyperdimensional computing (HDC) models while maintaining high performance and significantly reducing memory requirements. This work addresses a key limitation in HDC, where reducing model size often erodes accuracy and robustness. DecoHD achieves compression by learning directly in a decomposed HDC parameterization, utilizing a shared set of channels across layers and employing multiplicative binding and bundling techniques. This innovative method creates a large representational space from compact factors, effectively compressing along the class axis.

Experiments demonstrate that DecoHD achieves remarkable memory savings with minimal accuracy degradation, consistently staying within approximately 0.1 to 0.15% of a strong, non-reduced HDC baseline. The team evaluated performance across different numeric precisions and hypervector dimensionalities, finding that DecoHD delivers consistent results and does not introduce precision-specific failure modes. Notably, DecoHD reaches its accuracy plateau with up to 97% fewer trainable parameters, showcasing its efficiency.

Further analysis reveals that DecoHD outperforms state-of-the-art feature-axis reduction methods, particularly under strict memory budgets. This advantage stems from DecoHD's ability to preserve the full ambient dimensionality while compressing along the class axis, maintaining the geometric advantages of a large dimensionality. The research also demonstrates that DecoHD is more robust to random bit-flip noise, maintaining higher accuracy than conventional HDC. Hardware evaluations confirm the substantial benefits of DecoHD, delivering approximately 277× and 35× gains in energy and speed, respectively, compared to a CPU, and improvements over a GPU and a baseline HDC ASIC. These results establish DecoHD as a breakthrough in HDC compression, offering a pathway to deploy efficient and robust HDC models on resource-constrained devices.
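The bit-flip tolerance that HDC relies on can be illustrated with a small experiment: corrupt the stored bipolar prototypes at increasing bit-flip rates and check whether a noisy query is still matched to the right class. This is a sketch of the generic conventional-HDC mechanism, not a reproduction of the paper's fault-injection experiment; the sizes, the 20% query noise, and the helper names are arbitrary assumptions. Because unrelated random hypervectors have cosine similarity on the order of 1/sqrt(D), keeping D large leaves a wide margin for the correct class, which is also why preserving the full dimensionality matters.

```python
import random


def flip_bits(vec, rate, rng):
    """Flip each bipolar component independently with probability `rate`."""
    return [-x if rng.random() < rate else x for x in vec]


def classify(prototypes, query):
    """Index of the prototype with the largest dot product with the query."""
    scores = [sum(a * b for a, b in zip(p, query)) for p in prototypes]
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    rng = random.Random(1)
    D, C = 4096, 10  # hypothetical sizes
    prototypes = [[rng.choice((-1, 1)) for _ in range(D)] for _ in range(C)]
    query = flip_bits(prototypes[3], 0.2, rng)  # a noisy sample whose true class is 3
    for rate in (0.0, 0.1, 0.2, 0.3):
        corrupted = [flip_bits(p, rate, rng) for p in prototypes]  # faulty memory
        print(f"bit-flip rate {rate:.1f}: predicted class {classify(corrupted, query)}")
```

At these rates the correct class keeps a large lead, since the expected match score shrinks smoothly (roughly by a factor of 1 - 2·rate) rather than collapsing; accuracy only breaks down as the flip rate approaches 50%.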

DecoHD Achieves Compressed, Robust, Efficient Computing

This work presents DecoHD, a novel approach to hyperdimensional computing that achieves substantial memory savings without significantly compromising accuracy. The researchers demonstrated that decomposing class representations into a shared set of learnable components yields highly compressed models, reducing the number of trainable parameters by up to 97% while keeping performance close to a strong, non-reduced baseline. DecoHD also improves robustness to noise, specifically random bit-flip errors, enhancing reliability in challenging environments. An ASIC implementation of DecoHD delivers up to 277× greater energy efficiency than a CPU, along with consistent speedups over CPUs, GPUs, and conventional HDC ASICs. The team also found that shallower decomposition depths maximize throughput while maintaining accuracy, suggesting a favorable balance between model size and computational efficiency.

👉 More information

🗞 DecoHD: Decomposed Hyperdimensional Classification under Extreme Memory Budgets

🧠 ArXiv: https://arxiv.org/abs/2511.03911